BAGEL

Overview of BAGEL

What is BAGEL?

BAGEL is an open-source unified multimodal model designed to handle both generation and understanding tasks across text, image, and video modalities. It aims to offer functionality comparable to proprietary systems such as GPT-4o and Gemini 2.0 while remaining fully accessible for fine-tuning, distillation, and deployment. BAGEL was released on May 20, 2025.

How Does BAGEL Work?

BAGEL employs a Mixture-of-Transformer-Experts (MoT) architecture to maximize learning capacity from diverse multimodal information. It uses two separate image encoders, one capturing pixel-level features and one capturing semantic-level features. The model follows a Next Group of Token Prediction paradigm: it is trained to predict the next group of language or visual tokens, which serves as its compression target.
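One way to picture a Mixture-of-Transformer-Experts layer is as a step in which all tokens share context, while each token's update is computed by an expert selected by token type. The toy sketch below assumes hard routing by modality, with text and ViT tokens sent to one expert and VAE tokens to another. The routing table, the expert names, and the scalar stand-ins for hidden states are illustrative assumptions and are not taken from BAGEL's code.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Token:
    kind: str     # "text", "vit" (semantic image feature) or "vae" (pixel-level latent)
    value: float  # scalar stand-in for a hidden-state vector


# Hypothetical routing table: which expert processes which token type.
ROUTES: Dict[str, str] = {
    "text": "understanding",
    "vit": "understanding",
    "vae": "generation",
}

# Hypothetical experts: trivial functions standing in for separate weight sets.
EXPERTS: Dict[str, Callable[[float], float]] = {
    "understanding": lambda x: 2.0 * x,
    "generation": lambda x: x + 1.0,
}


def shared_context(tokens: List[Token]) -> float:
    """Stand-in for shared self-attention: every token sees the sequence mean."""
    return sum(t.value for t in tokens) / len(tokens)


def mot_layer(tokens: List[Token]) -> List[Token]:
    """Mix in the shared context, then apply the expert chosen by token type."""
    ctx = shared_context(tokens)
    return [Token(t.kind, EXPERTS[ROUTES[t.kind]](t.value + ctx)) for t in tokens]


sequence = [Token("text", 1.0), Token("vit", 2.0), Token("vae", 3.0)]
outputs = mot_layer(sequence)
print([t.value for t in outputs])  # [6.0, 8.0, 6.0]
```

The point of the sketch is the separation of concerns: context is computed once over the full interleaved sequence, while the per-token transformation depends on the modality.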

Key Technical Features

- Multimodal Pre-training: Initialized from large language models, providing foundational reasoning and conversation capabilities

- Interleaved Data Training: Pre-trained on large-scale interleaved video and web data for high-fidelity generation

- Scalable Architecture: Uses pre-training, continued training, and supervised fine-tuning on trillions of multimodal tokens

- Dual Encoder System: Combines VAE and ViT features for improved intelligent editing capabilities

Core Capabilities

Multimodal Chat and Understanding

BAGEL accepts image and text inputs and can produce image and text outputs, including mixed (interleaved) formats. It can hold detailed conversations about visual content, providing descriptions, artistic context, and historical information about images.

Photorealistic Image Generation

The model generates high-fidelity, photorealistic images, video frames, and interleaved image-text content. Its training on interleaved data fosters a natural multimodal Chain-of-Thought that allows the model to reason before generating visual outputs.

Advanced Image Editing

BAGEL learns to preserve visual identities and fine details while capturing complex visual motion from videos. With strong reasoning abilities inherited from vision-language models, it goes beyond basic editing tasks to support intelligent editing.

Style Transfer

The model can easily transform images from one style to another or shift them across different worlds using minimal alignment data, thanks to its deep understanding of visual content and styles.

Navigation and Environment Interaction

By learning from video data, BAGEL distills navigation knowledge from real-world simulations, allowing it to navigate various environments including sci-fi worlds and artistic paintings with diverse rotations and perspectives.

Composition and Reasoning

BAGEL learns a wide range of knowledge from video, web, and language data, enabling it to perform reasoning, model physical dynamics, predict future frames, and engage in multi-turn conversations seamlessly.

Thinking Mode

The model incorporates a thinking mode that leverages multimodal understanding to enhance generation and editing. By reasoning through prompts, BAGEL transforms brief descriptions into detailed and coherent outputs with nuanced context and logical consistency.

Performance Benchmarks

BAGEL reports strong results on standard understanding and generation benchmarks:

Understanding Performance

| Model | MME-P | MMBench | MMMU | MMVet |
|---|---|---|---|---|
| BAGEL | 1687 | 85 | 55.3 | 67.2 |

Generation Performance

BAGEL achieves an overall score of 0.88 on generation tasks (higher is better) and is reported to outperform comparable open models. Per-category scores include:

- Single object generation (0.98)

- Two object generation (0.95)

- Color accuracy (0.95)

- Position understanding (0.78)
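The four category scores listed above do not by themselves reproduce the 0.88 overall figure, which suggests the overall score also covers categories not shown here. The short check below makes that arithmetic explicit. It assumes the overall score is an unweighted mean over categories, and the total of six categories passed to `implied_unlisted_mean` is a hypothetical value chosen for illustration, not something stated in this document.

```python
# Per-category generation scores as listed in this document.
LISTED_SCORES = {
    "single object": 0.98,
    "two objects": 0.95,
    "color": 0.95,
    "position": 0.78,
}


def mean(values) -> float:
    values = list(values)
    return sum(values) / len(values)


def implied_unlisted_mean(overall: float, listed: dict, n_total: int) -> float:
    """Mean the unlisted categories would need, ASSUMING the overall score
    is an unweighted mean over n_total categories (n_total is hypothetical)."""
    n_unlisted = n_total - len(listed)
    if n_unlisted <= 0:
        raise ValueError("n_total must exceed the number of listed categories")
    return (overall * n_total - sum(listed.values())) / n_unlisted


listed_mean = mean(LISTED_SCORES.values())
print(f"mean of listed categories: {listed_mean:.3f}")  # 0.915

unlisted = implied_unlisted_mean(0.88, LISTED_SCORES, n_total=6)
print(f"implied mean of unlisted categories: {unlisted:.2f}")  # 0.81
```

Under these assumptions, the listed categories average 0.915, so any unlisted categories would have to score lower on average to bring the overall figure down to 0.88.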

Emerging Properties

As BAGEL scales with more multimodal tokens, consistent performance gains are observed across understanding, generation, and editing tasks. Different capabilities emerge at distinct training stages:

- Early stage: Multimodal understanding and generation

- Middle stage: Basic editing capabilities

- Advanced stage: Complex, intelligent editing

This progression suggests an emergent pattern where advanced multimodal reasoning builds on well-formed foundational skills.

Practical Applications

For Developers and Researchers

- Fine-tune and customize for specific multimodal tasks

- Distill knowledge for deployment on various platforms

- Research advanced multimodal reasoning capabilities

For Content Creators

- Generate photorealistic images and video content

- Perform intelligent image editing and style transfer

- Create cohesive multimodal narratives

For AI System Integrators

- Deploy as a unified multimodal solution

- Enhance existing systems with advanced AI capabilities

- Develop applications requiring complex visual reasoning

Why Choose BAGEL?

BAGEL offers several distinct advantages:

Open Accessibility

As an open-source model, BAGEL provides full access to weights, architecture, and training methodologies, unlike proprietary systems.

Comparable Performance

BAGEL demonstrates performance comparable to leading proprietary multimodal systems while maintaining open accessibility.

Scalable Architecture

The MoT architecture allows for continuous scaling and improvement as more multimodal data becomes available.

Comprehensive Capabilities

From basic generation to advanced reasoning and editing, BAGEL offers a complete suite of multimodal abilities in a single model.

Getting Started with BAGEL

BAGEL is available through multiple platforms:

- GitHub: Access source code and documentation

- HuggingFace: Download model weights and try demos

- Paper: Read detailed technical specifications

- Demo: Experiment with live capabilities

The model supports various deployment options including fine-tuning for specific tasks, distillation for resource-constrained environments, and full-scale deployment for production systems.
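As a starting point for the HuggingFace route, the weights can in principle be fetched with the `huggingface_hub` library's `snapshot_download` function. The sketch below is a minimal example under stated assumptions: this document does not give a repository id, so `ASSUMED_REPO_ID` is an assumption that should be checked against the model page, and the local directory name is arbitrary.

```python
from pathlib import Path

# ASSUMPTION: the repository id is not stated in this document.
# Verify it on the HuggingFace model page before use.
ASSUMED_REPO_ID = "ByteDance-Seed/BAGEL-7B-MoT"


def download_weights(repo_id: str = ASSUMED_REPO_ID,
                     local_dir: str = "models/bagel") -> Path:
    """Download a model snapshot and return the local path.

    Requires `pip install huggingface_hub` and network access.
    """
    # Imported lazily so this file can be loaded without the dependency.
    from huggingface_hub import snapshot_download

    path = snapshot_download(repo_id=repo_id, local_dir=local_dir)
    return Path(path)


if __name__ == "__main__":
    print(download_weights())
```

Running the script downloads the full snapshot, which for a multimodal model of this kind can be tens of gigabytes, so check available disk space first.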

Future Developments

The BAGEL team continues to work on scaling the model with more multimodal tokens and exploring new emergent capabilities. The open-source nature encourages community contributions and improvements across various multimodal applications.

Best Alternative Tools to "BAGEL"

Nano Banana AI is an online AI image editor excelling in character consistency across multiple images. It offers fast processing, natural language editing, and multi-modal intelligence for professional image creation.

Experience FLUX.1 Kontext by Fluxx.AI: AI image editing & generation with character consistency, local editing, and style transfer. Try it free now!

Grok Imagine is an AI platform that turns text prompts into high-quality images and 6-second videos. Perfect for creating viral content with professional quality.

Seedream 4 AI offers fast 1.8-second 2K image generation and editing using text prompts. Try Seedream 4 AI for free, no sign-up required, and create stunning visuals.

Seedream 4.0 is a next-generation AI image generator and editor. Create high-quality 2K images in seconds, transform ideas with precise text-to-image tools, and enjoy advanced editing for professional-grade creativity. Start for free.

Generate video, images, music & sound with AI. Fast, realistic, fully controllable. Designed for creators, marketers, filmmakers, designers and teams.

Gemini-powered AI image editor excelling in character consistency, text-based editing & multi-image fusion with world knowledge understanding.

Create professional images with Nano Banana, Google's breakthrough AI featuring character consistency, multi-image fusion, and real-time speed.

Nano Banana is the best AI image editor. Transform any image with simple text prompts using Google's Gemini Flash model. New users get free credits for advanced editing like photo restoration and virtual makeup.

Seedream 4.0 is a cutting-edge AI image generator powered by ByteDance, offering ultra-fast 1.8-second generation, 4K resolution, batch processing, and advanced editing for creators and businesses seeking photorealistic visuals.

Discover Nano Banana AI, powered by Gemini 2.5 Flash Image, for free online image generation and editing. Create consistent characters, edit photos effortlessly, and explore styles like anime or 3D conversions at NanoBananaArt.ai.

Discover Nano Banana, Google's revolutionary text-to-image AI model for creating, editing, and enhancing images with context-aware intelligence, character consistency, and professional results. Ideal for artists, designers, and marketers.

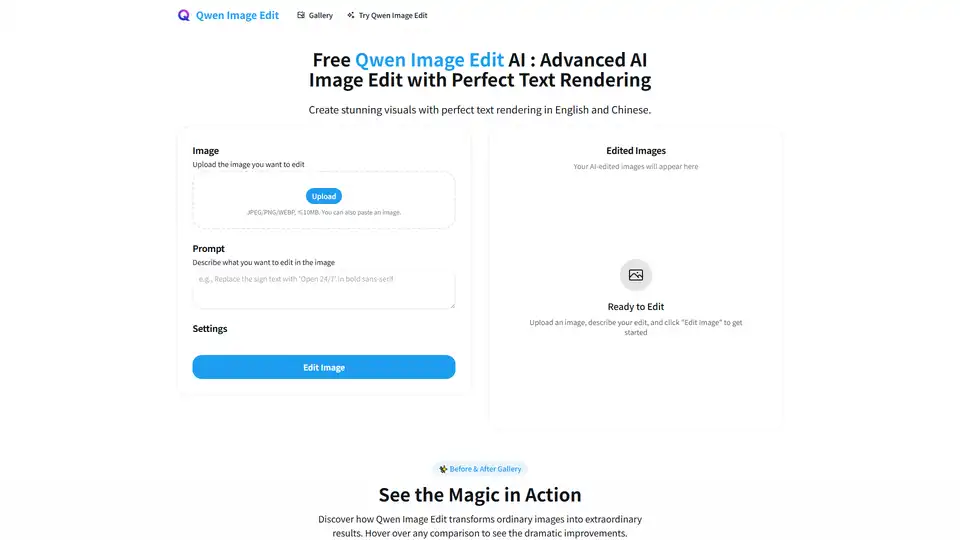

Qwen Image AI is a cutting-edge AI model for high-fidelity image generation with exceptional text rendering in English and Chinese. Edit your images with AI precision.

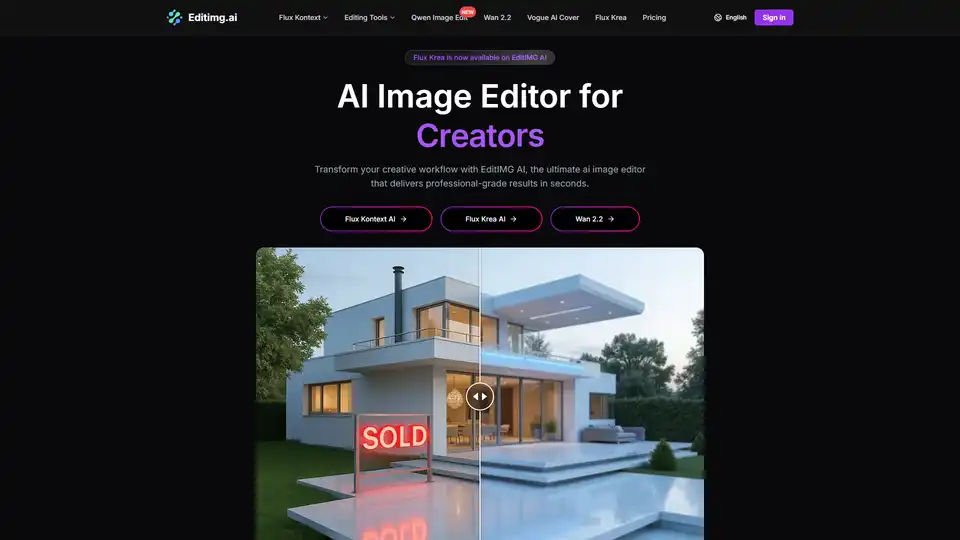

Transform your images with EditIMG AI, the most advanced AI image editor. Edit photos online with AI-powered tools for style transfer, background removal, object replacement, and more.