GPUX

Overview of GPUX

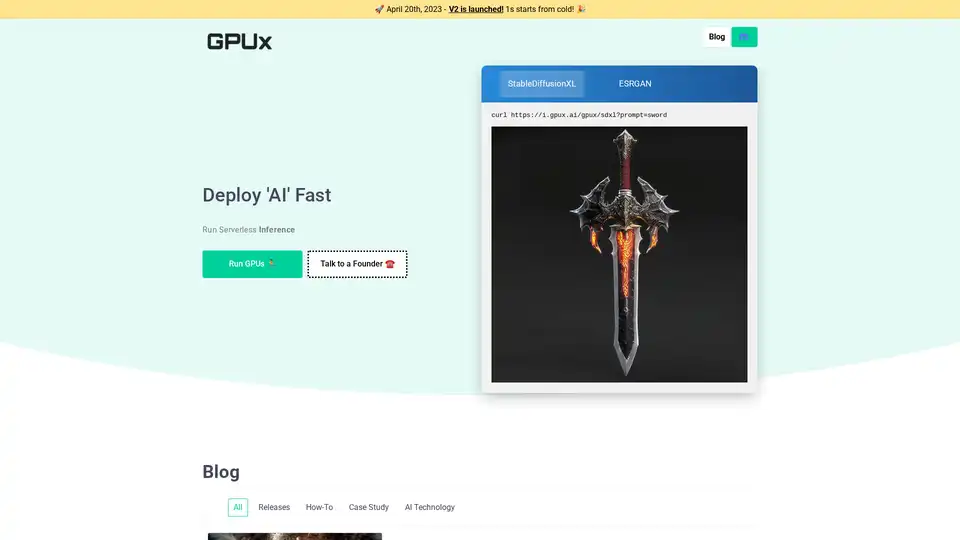

What is GPUX?

GPUX is a serverless GPU inference platform built for AI and machine learning workloads. It lets developers and organizations deploy and run AI models without managing GPU servers. It also offers 1-second cold start times, which makes it well suited to production environments where speed and responsiveness are critical.

How Does GPUX Work?

Serverless GPU Infrastructure

GPUX uses a serverless architecture, so users do not manage the underlying infrastructure. The platform provisions GPU resources on demand and scales them automatically as workloads change, without manual intervention.
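On-demand provisioning with scale-to-zero can be illustrated with a generic queue-depth autoscaling rule. This is a minimal sketch under that assumption. The function `desired_replicas` and its parameters are hypothetical and do not describe GPUX's actual scheduler.

```python
import math


def desired_replicas(pending_requests: int, per_replica_capacity: int, max_replicas: int) -> int:
    """Return how many GPU workers to run for the current queue (hypothetical policy)."""
    if per_replica_capacity <= 0:
        raise ValueError("per_replica_capacity must be positive")
    if pending_requests <= 0:
        # Scale to zero when idle: no GPU is held between bursts of traffic.
        return 0
    # Enough workers to cover the queue, rounded up...
    needed = math.ceil(pending_requests / per_replica_capacity)
    # ...but never more than the (hypothetical) pool limit.
    return min(needed, max_replicas)


print(desired_replicas(0, 4, 8))   # 0: idle, scaled to zero
print(desired_replicas(7, 4, 8))   # 2
print(desired_replicas(40, 4, 8))  # 8: capped at max_replicas
```

Scaling to zero is what makes cold start time matter: the first request after an idle period has to wait for a worker to be started.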

Cold Start Optimization Technology

The platform's headline capability is a 1-second cold start from a completely idle state. This matters for AI inference workloads, where starting a GPU worker and loading model weights has traditionally made the first request after an idle period slow.

P2P Capabilities

GPUX incorporates peer-to-peer technology that enables organizations to securely share and monetize their private AI models. This feature allows model owners to sell inference requests to other organizations while maintaining full control over their intellectual property.
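Selling inference requests implies metering usage for each consuming organization. The sketch below assumes a flat price per request. `InferenceLedger`, the organization names, and the price are all hypothetical and do not describe how GPUX actually bills P2P requests.

```python
from collections import Counter
from decimal import Decimal


class InferenceLedger:
    """Hypothetical per-request meter for a privately owned, shared model."""

    def __init__(self, price_per_request: Decimal) -> None:
        self.price_per_request = price_per_request
        self.requests = Counter()  # consumer organization -> number of requests served

    def record(self, consumer_org: str) -> None:
        """Count one inference request served to consumer_org."""
        self.requests[consumer_org] += 1

    def invoice(self, consumer_org: str) -> Decimal:
        """Amount owed by consumer_org (zero if it never called the model)."""
        return self.price_per_request * self.requests[consumer_org]


ledger = InferenceLedger(price_per_request=Decimal("0.002"))
for org in ["acme", "acme", "globex"]:
    ledger.record(org)

print(ledger.invoice("acme"))    # 0.004
print(ledger.invoice("globex"))  # 0.002
```

`Decimal` is used instead of `float` so that small per-request prices add up without binary rounding error.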

Core Features and Capabilities

⚡ Lightning-Fast Inference

- 1-second cold starts from completely idle state

- Optimized performance for popular AI models

- Low-latency response times for production workloads

🎯 Supported AI Models

GPUX currently supports several leading AI models including:

- StableDiffusion and StableDiffusionXL for image generation

- ESRGAN for image super-resolution and enhancement

- AlpacaLLM for natural language processing

- Whisper for speech recognition and transcription

🔧 Technical Features

- Read/Write Volumes for persistent data storage

- P2P Model Sharing for secure model distribution

- curl-based API access for easy integration

- Cross-platform compatibility (Windows 10, Linux)

Performance Benchmarks

GPUX reports that its optimizations make StableDiffusionXL 50% faster on RTX 4090 hardware. This shows how the platform aims to get more performance out of the same GPU.
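"50% faster" can be read in two ways, so it helps to spell out the arithmetic. Let $t_0$ be the baseline time to generate one image and $t_1$ the optimized time. The source does not say which reading is meant. The 6-second baseline in the example is an illustrative placeholder, not a measured figure.

$$
\begin{aligned}
\text{throughput reading:}\quad & \frac{t_0}{t_1} = 1.5 \;\Rightarrow\; t_1 = \frac{t_0}{1.5} \approx 0.67\,t_0 \quad (\text{about } 33\% \text{ less time per image}) \\
\text{latency reading:}\quad & t_1 = (1 - 0.5)\,t_0 = 0.5\,t_0 \;\Rightarrow\; \frac{t_0}{t_1} = 2
\end{aligned}
$$

With a hypothetical baseline of $t_0 = 6$ s, the throughput reading gives $t_1 = 4$ s per image, and the latency reading gives $t_1 = 3$ s.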

How to Use GPUX?

Simple API Integration

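Users can call GPUX with a single curl request. For example, the following sends the prompt "sword" to the StableDiffusionXL (sdxl) endpoint. The URL is quoted so that the shell does not treat the `?` as a wildcard:

curl "https://i.gpux.ai/gpux/sdxl?prompt=sword"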

This straightforward approach eliminates complex setup procedures and enables rapid integration into existing workflows.
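The same request can be made from a program. This is a minimal Python sketch that uses only the standard library. It assumes the endpoint accepts a plain GET exactly like the curl call. `sdxl_url` and `fetch` are hypothetical helper names, and the response format (image bytes or something else) is not specified by the source.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "https://i.gpux.ai/gpux/sdxl"  # endpoint taken from the curl example


def sdxl_url(prompt: str) -> str:
    """Build the request URL, percent-encoding the prompt (spaces, '&', etc.)."""
    query = urlencode({"prompt": prompt})
    return f"{BASE_URL}?{query}"


def fetch(prompt: str, timeout: float = 60.0) -> bytes:
    """Send a GET request and return the raw response body.

    Assumption: the endpoint answers a plain GET like the curl call; the
    response format is not documented here, so the raw bytes are returned.
    """
    with urlopen(sdxl_url(prompt), timeout=timeout) as resp:
        return resp.read()


print(sdxl_url("a sword & shield"))
# Performs a network request:
# body = fetch("a sword & shield")
```

Building the query string with `urlencode` matters once prompts contain spaces or `&`. Pasting them into the URL by hand would break the request or split the prompt into separate parameters.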

Deployment Options

- Web Application access through the GPUX platform

- GitHub availability for developers seeking open-source components

- Cross-platform support for various operating environments

Target Audience and Use Cases

Primary Users

- AI Researchers needing rapid model deployment

- Startups requiring cost-effective GPU resources

- Enterprises looking to monetize proprietary AI models

- Developers seeking simplified AI inference infrastructure

Ideal Applications

- Real-time image generation and manipulation

- Speech-to-text transcription services

- Natural language processing applications

- Research and development prototyping

- Production AI services requiring reliable inference

Why Choose GPUX?

Competitive Advantages

- Fast cold starts - about 1 second from a fully idle state

- Serverless architecture - no infrastructure management required

- Monetization opportunities - P2P model sharing capabilities

- Hardware optimization - maximized GPU utilization

- Developer-friendly - simple API integration

Business Value

GPUX addresses a basic challenge of AI deployment: allocating GPU resources to AI workloads efficiently. The platform aims to give each machine learning workload the right fit, balancing performance against cost.

Company Background

GPUX Inc. is headquartered in Toronto, Canada, with a distributed team including:

- Annie - Marketing based in Krakow

- Ivan - Technology based in Toronto

- Henry - Operations based in Hefei

The company maintains an active blog covering technical topics including AI technology, case studies, how-to guides, and release notes.

Getting Started

Users can access GPUX through multiple channels:

- Web application (V2 currently available)

- GitHub repository for open-source components

- Direct contact with the founding team

The platform continues to evolve, with regular updates and performance enhancements documented through their release notes and technical blog posts.
