Mind-Video

Overview of Mind-Video

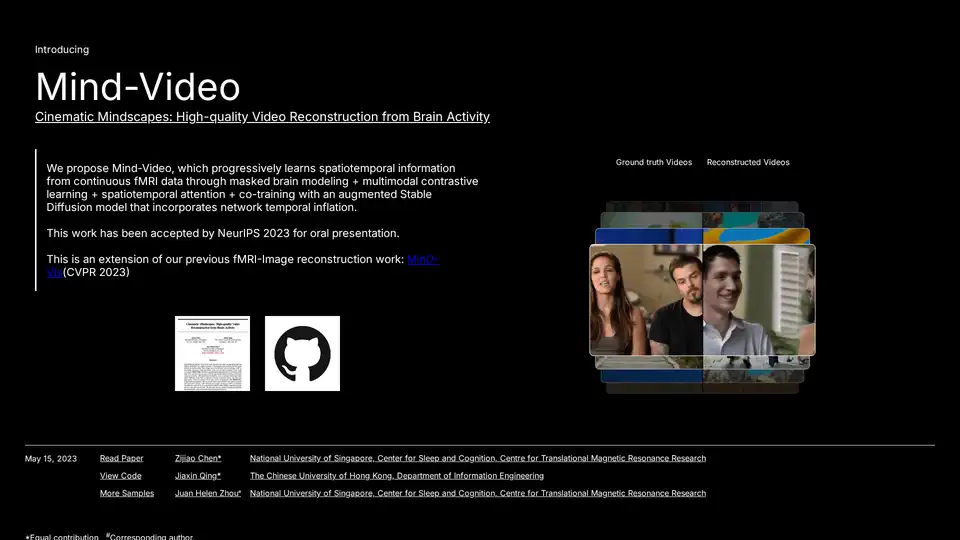

Mind-Video: Reconstructing Cinematic Mindscapes from Brain Activity

What is Mind-Video?

Mind-Video is an innovative AI tool designed to reconstruct high-quality videos from human brain activity. By leveraging functional magnetic resonance imaging (fMRI) data, Mind-Video offers a unique approach to understanding and visualizing cognitive processes. This tool, presented at NeurIPS 2023, builds upon previous work in fMRI-image reconstruction and extends it to the more complex domain of video.

How does Mind-Video work?

Mind-Video employs a sophisticated pipeline that combines several key techniques to achieve its impressive results:

- Masked Brain Modeling: This technique allows the model to learn general visual fMRI features through unsupervised learning on large datasets.

- Multimodal Contrastive Learning: By training the fMRI encoder in the CLIP space with contrastive learning, the model distills semantic-related features from the annotated dataset.

- Spatiotemporal Attention: A specialized attention mechanism processes multiple fMRI scans in a sliding window to capture the temporal dynamics of brain activity.

- Co-training with Augmented Stable Diffusion: The learned features are fine-tuned using an augmented stable diffusion model, specifically tailored for video generation under fMRI guidance.

The pipeline is decoupled into two modules – an fMRI encoder and an augmented stable diffusion model – which are trained separately and then fine-tuned together. This modular design provides flexibility and adaptability in brain decoding.

Key Features and Contributions

- High-Quality Video Reconstruction: Mind-Video generates videos with accurate semantics, including motions and scene dynamics.

- Progressive Learning Scheme: The encoder learns brain features through multiple stages, enhancing its ability to capture nuanced information.

- Biologically Plausible and Interpretable: Attention analysis reveals mapping to the visual cortex and higher cognitive networks, suggesting the model aligns with biological processes.

Why choose Mind-Video?

- Innovative Approach: Mind-Video addresses the limitations of previous methods by incorporating spatiotemporal information from continuous fMRI data.

- Significant Performance: The tool achieves an impressive 85% accuracy in semantic metrics and 0.19 in SSIM, outperforming state-of-the-art approaches by 45%.

- Potential Applications: Mind-Video opens new possibilities in brain-computer interfaces, neuroimaging, and neuroscience.

Who is Mind-Video for?

Mind-Video is valuable for researchers and professionals in various fields, including:

- Neuroscientists: Gaining insights into how the brain processes visual information and cognitive functions.

- AI Researchers: Exploring advanced techniques in brain decoding and video generation.

- Medical Professionals: Developing new diagnostic and therapeutic tools for neurological disorders.

Using Mind-Video

- Data Input: Input fMRI data representing brain activity.

- Processing: The model processes the data through its progressive learning scheme, capturing spatiotemporal information.

- Video Generation: The augmented stable diffusion model generates a video based on the decoded brain activity.

- Analysis: Analyze the reconstructed video to gain insights into the subject's cognitive processes.

Attention Analysis and Biological Plausibility

The attention analysis of Mind-Video's transformers decoding fMRI data provides valuable insights:

- Visual Cortex Dominance: The visual cortex plays a crucial role in processing visual spatiotemporal information.

- Layer-Dependent Hierarchy: Initial layers focus on structural information, while deeper layers learn more abstract visual features.

- Progressive Semantic Learning: The encoder improves its ability to assimilate more nuanced, semantic information throughout its training stages.

Limitations and Future Directions

- Pixel-Level Controllability: The generation process can lack strong control from the fMRI latent to generate strictly matching low-level features.

- Uncontrollable Factors: Mind wandering and imagination during the scan can lead to mismatches between the ground truth and the generation results.

Future research should focus on enhancing pixel-level controllability and mitigating the impact of uncontrollable factors during scans.

Mind-X: Exploring Multimodal Brain Decoding

Mind-Video is a product of Mind-X, a research interest group dedicated to exploring multimodal brain decoding with large models. The group aims to develop general-purpose brain decoding models that empower various applications in brain-computer interfaces, neuroimaging, and neuroscience.

Conclusion

Mind-Video represents a significant advancement in the field of brain decoding and video reconstruction. Its innovative approach, impressive performance, and biological plausibility make it a valuable tool for understanding and visualizing cognitive processes. As research continues, Mind-Video has the potential to unlock new insights into the human brain and pave the way for groundbreaking applications in neuroscience and beyond. By combining masked brain modeling, multimodal contrastive learning, and spatiotemporal attention, Mind-Video sets a new standard for AI-driven brain decoding, offering a glimpse into the cinematic mindscapes hidden within us.