Sagify

Overview of Sagify

What is Sagify?

Sagify is an innovative open-source Python library designed to simplify the complexities of machine learning (ML) and large language model (LLM) workflows on AWS SageMaker. By abstracting away the intricate details of cloud infrastructure, Sagify allows data scientists and ML engineers to focus on what truly matters: developing and deploying high-impact models. Whether you're training custom classifiers, tuning hyperparameters, or integrating powerful LLMs like OpenAI's GPT series or open-source alternatives such as Llama 2, Sagify provides a modular, intuitive interface that accelerates your path from prototype to production.

At its core, Sagify leverages AWS SageMaker's robust capabilities while eliminating the need for manual DevOps tasks. This makes it an essential tool for teams looking to harness the power of cloud-based ML without getting bogged down in setup and management. With support for both proprietary LLMs (e.g., from OpenAI, Anthropic) and open-source models deployed on SageMaker endpoints, Sagify bridges the gap between experimentation and scalable deployment, ensuring your ML projects are efficient, cost-effective, and innovative.

How Does Sagify Work?

Sagify operates through a command-line interface (CLI) and Python API that automates key stages of the ML lifecycle. Its architecture is built around modularity, with distinct components for general ML workflows and a specialized LLM Gateway for handling language models.

Core Architecture for ML Workflows

For traditional ML tasks, Sagify starts by initializing a project structure with sagify init. This creates a standardized directory layout, including training and prediction modules, Docker configurations, and local testing environments. Users implement simple functions like train() and predict() in provided templates, which Sagify packages into Docker images via sagify build.
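As a rough illustration of the kind of logic that goes into those templates, the sketch below implements a tiny nearest-centroid classifier using only the standard library. The function signatures, the train.csv file name, the model.pkl artifact name, and the JSON shapes are assumptions made for this example; the templates that sagify init generates may differ, so check them before copying anything.

```python
import pickle
from pathlib import Path

MODEL_FILE = "model.pkl"  # hypothetical artifact name, not prescribed by Sagify


def train(input_data_path, model_save_path, hyperparams_path=None):
    """Fit a nearest-centroid classifier from rows shaped 'x1,x2,...,label'."""
    sums, counts = {}, {}
    for line in Path(input_data_path, "train.csv").read_text().splitlines():
        if not line.strip():
            continue  # ignore blank lines
        *features, label = line.split(",")
        values = [float(v) for v in features]
        acc = sums.setdefault(label, [0.0] * len(values))
        for i, v in enumerate(values):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1

    # One centroid (mean feature vector) per class label.
    centroids = {lbl: [s / counts[lbl] for s in acc] for lbl, acc in sums.items()}
    with open(Path(model_save_path, MODEL_FILE), "wb") as fh:
        pickle.dump(centroids, fh)
    return centroids


def predict(json_input, model_path):
    """Return the closest class for each row in json_input['features']."""
    with open(Path(model_path, MODEL_FILE), "rb") as fh:
        centroids = pickle.load(fh)

    def nearest(point):
        # Squared Euclidean distance to each centroid; smallest wins.
        return min(
            centroids,
            key=lambda lbl: sum((p - c) ** 2 for p, c in zip(point, centroids[lbl])),
        )

    return {"predictions": [nearest(row) for row in json_input["features"]]}
```

The point of the split is that train() only reads data and writes an artifact, while predict() only reads the artifact and answers a request, which is what lets the same code run locally and inside a container.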

Once built, these images can be pushed to AWS ECR with sagify push, and training commences on SageMaker using sagify cloud train. The tool handles data upload to S3, resource provisioning (e.g., SageMaker instance types like ml.m4.xlarge), and output management. For deployment, sagify cloud deploy spins up endpoints that serve real-time predictions via REST APIs.

Sagify also excels in advanced features like hyperparameter optimization. By defining parameter ranges in a JSON config (e.g., for SVM kernels or gamma values), users can run Bayesian tuning jobs with sagify cloud hyperparameter-optimization. This automates trial-and-error processes, logging metrics like precision or accuracy directly from your training code using Sagify's log_metric function. Spot instances are supported for cost savings on longer jobs, making it ideal for resource-intensive tasks.
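A parameter-range config of this kind could be generated from Python as sketched below. The key names follow the naming used by the SageMaker tuning API (categorical, continuous, and integer ranges plus an objective metric); whether Sagify expects exactly this layout is an assumption, and the kernel and gamma values are illustrative only.

```python
import json


def build_tuning_config(objective_name, objective_type="Maximize"):
    """Build a hyperparameter-range config as a plain dict (layout is assumed)."""
    if objective_type not in ("Maximize", "Minimize"):
        raise ValueError("objective_type must be 'Maximize' or 'Minimize'")

    config = {
        "ParameterRanges": {
            # Discrete choices, e.g. which SVM kernel to try.
            "CategoricalParameterRanges": [
                {"Name": "kernel", "Values": ["linear", "rbf"]}
            ],
            # Real-valued search interval, e.g. the SVM gamma value.
            "ContinuousParameterRanges": [
                {"Name": "gamma", "MinValue": 0.001, "MaxValue": 0.1}
            ],
            "IntegerParameterRanges": [],
        },
        # Must match the metric name that the training code logs.
        "ObjectiveMetric": {"Name": objective_name, "Type": objective_type},
    }

    for rng in config["ParameterRanges"]["ContinuousParameterRanges"]:
        if rng["MinValue"] >= rng["MaxValue"]:
            raise ValueError(f"empty range for {rng['Name']}")
    return config


if __name__ == "__main__":
    print(json.dumps(build_tuning_config("Precision"), indent=2))
```

Generating the file from code rather than writing it by hand makes it easy to validate ranges before a tuning job is submitted.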

Batch transform and streaming inference round out the ML capabilities. Batch jobs process large datasets offline (e.g., sagify cloud batch-transform), while experimental streaming via Lambda and SQS enables real-time pipelines for applications like recommenders.

LLM Gateway: Unified Access to Large Language Models

One of Sagify's standout features is the LLM Gateway, a FastAPI-based RESTful API that provides a single entry point for interacting with diverse LLMs. This gateway supports multiple backends:

- Proprietary LLMs: Direct integration with OpenAI (e.g., GPT-4, DALL-E for image generation), Anthropic (Claude models), and upcoming platforms like Amazon Bedrock or Cohere.

- Open-Source LLMs: Deployment of models like Llama 2, Stable Diffusion, or embedding models (e.g., BGE, GTE) as SageMaker endpoints.

The workflow is straightforward: Deploy models with no-code commands like sagify cloud foundation-model-deploy for foundation models, or sagify llm start for custom configs. Environment variables configure API keys and endpoints, and the gateway handles requests for chat completions, embeddings, and image generations.

For instance, to generate embeddings in batch mode, prepare JSONL inputs with unique IDs (e.g., recipes for semantic search), upload to S3, and trigger sagify llm batch-inference. Outputs link back via IDs, perfect for populating vector databases in search or recommendation systems. Supported instance types like ml.p3.2xlarge ensure scalability for high-dimensional embeddings.
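The input preparation and the ID-based join described above might look like the following sketch. The field names id and text_inputs on the input side and embedding on the output side are assumptions for illustration; confirm the exact schema against the Sagify documentation before uploading anything to S3.

```python
import json


def write_jsonl(records, path):
    """Write one JSON object per line, refusing duplicate IDs."""
    seen = set()
    with open(path, "w", encoding="utf-8") as fh:
        for rec in records:
            if rec["id"] in seen:
                raise ValueError(f"duplicate id: {rec['id']}")
            seen.add(rec["id"])
            fh.write(json.dumps(rec, ensure_ascii=False) + "\n")


def join_by_id(inputs, outputs):
    """Merge output rows back onto their inputs; returns {id: merged_record}."""
    by_id = {rec["id"]: dict(rec) for rec in inputs}
    for out in outputs:
        by_id[out["id"]].update(out)
    return by_id


if __name__ == "__main__":
    recipes = [
        {"id": 1, "text_inputs": "how to make mayonnaise"},
        {"id": 2, "text_inputs": "how to make pesto"},
    ]
    write_jsonl(recipes, "recipes.jsonl")
```

Unique IDs matter because batch outputs are not guaranteed to be consumed in input order; the join keys each vector to its source text before it is loaded into a vector database.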

API endpoints mirror OpenAI's format for easy migration:

- Chat Completions: POST to /v1/chat/completions with messages, temperature, and max tokens.

- Embeddings: POST to /v1/embeddings for vector representations.

- Image Generations: POST to /v1/images/generations with prompts and dimensions.
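A chat completions call against the gateway could be assembled as in the sketch below, which uses only the standard library and builds the request without sending it. The base URL and port are assumptions, as is the max_tokens key spelling (borrowed from the OpenAI format the endpoints are said to mirror); any additional fields the gateway requires, such as a provider or model selector, would be passed through extra and are likewise unverified here.

```python
import json
import urllib.request


def build_chat_request(base_url, messages, temperature=0.7, max_tokens=256, **extra):
    """Return a POST request for the /v1/chat/completions endpoint."""
    payload = {
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
        **extra,  # e.g. provider/model selectors, if the gateway needs them
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(
        "http://localhost:8080",  # assumed local gateway address
        [{"role": "user", "content": "Suggest a name for a bakery."}],
    )
    print(req.get_method(), req.full_url)
    # To actually send it once a gateway is running:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
```

The embeddings and image endpoints follow the same pattern with a different path and payload.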

Deployment options include local Docker runs or AWS Fargate for production, with CloudFormation templates for orchestration.

Key Features and Benefits

Sagify's features are tailored to streamline ML and LLM development:

- Automation of Infrastructure: No more manual provisioning. Sagify manages Docker builds, ECR pushes, S3 data handling, and SageMaker jobs.

- Local Testing: Commands like sagify local train and sagify local deploy simulate cloud environments on your machine.

- Lightning Deployment: For pre-trained models (e.g., scikit-learn, Hugging Face, XGBoost), use sagify cloud lightning-deploy without custom code.

- Model Monitoring and Management: List platforms and models with sagify llm platforms or sagify llm models; start/stop infrastructure on demand.

- Cost Efficiency: Leverage spot instances, batch processing, and auto-scaling to optimize AWS spend.

The practical value lies in shorter deployment cycles: Sagify's stated promise is "from idea to deployed model in just a day." This is particularly useful for iterative experimentation with LLMs, where switching between providers (e.g., GPT-4 for chat, Stable Diffusion for visuals) would otherwise require fragmented setups.

Worked examples, such as training an Iris classifier or deploying Llama 2 for chat, illustrate these workflows end to end. For embeddings, batch inference on models like GTE-large enables efficient RAG (Retrieval-Augmented Generation) systems, while image endpoints power creative AI apps.

Using Sagify: Step-by-Step Guide

Installation and Setup

Prerequisites include Python 3.7+, Docker, and AWS CLI. Install via pip:

pip install sagify

Configure your AWS account by creating IAM roles with policies like AmazonSageMakerFullAccess and setting up profiles in ~/.aws/config.

Quick Start for ML

- Clone a demo repo (e.g., Iris classification).

- Run sagify init to set up the project.

- Implement train() and predict() functions.

- Build and test locally: sagify build, sagify local train, sagify local deploy.

- Push and train on cloud: sagify push, sagify cloud upload-data, sagify cloud train.

- Deploy: sagify cloud deploy and invoke via curl or Postman.
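The command sequence above can be kept in a small driver script so the order stays explicit. The sketch below lists only the subcommands named in this guide and defaults to a dry run; the real commands take arguments (S3 locations, instance types, IAM roles, and so on) that are not shown here, so extra_args is a hypothetical hook for supplying them from the Sagify documentation.

```python
import shlex
import subprocess

# Subcommands in the order given in the quick start; arguments omitted.
STEPS = [
    "sagify init",
    "sagify build",
    "sagify local train",
    "sagify local deploy",  # serves locally; stop it before continuing
    "sagify push",
    "sagify cloud upload-data",
    "sagify cloud train",
    "sagify cloud deploy",
]


def run_pipeline(steps, extra_args=None, dry_run=True):
    """Return the planned argv lists; execute them only if dry_run is False."""
    extra_args = extra_args or {}
    planned = []
    for step in steps:
        argv = shlex.split(step) + list(extra_args.get(step, []))
        planned.append(argv)
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(argv, check=True)
    return planned


if __name__ == "__main__":
    for argv in run_pipeline(STEPS):
        print(" ".join(argv))
```

Running it as-is only prints the plan, which is a cheap way to review the sequence before any AWS resources are touched.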

Quick Start for LLMs

- Deploy a model: sagify cloud foundation-model-deploy --model-id model-txt2img-stabilityai-stable-diffusion-v2-1-base.

- Set env vars (e.g., API keys for OpenAI).

- Start gateway: sagify llm gateway --start-local.

- Query APIs: Use curl, Python requests, or JS fetch for completions, embeddings, or images.

For batch inference, prepare JSONL files and run sagify llm batch-inference.

Why Choose Sagify for Your ML and LLM Projects?

In a landscape crowded with ML frameworks, Sagify stands out for its SageMaker-specific optimizations and LLM unification. It addresses common pain points like infrastructure overhead and model fragmentation, enabling faster innovation. Ideal for startups scaling AI prototypes or enterprises building production-grade LLM apps, Sagify's open-source nature fosters community contributions, with ongoing support for new models (e.g., Mistral, Gemma).

Who is it for? Data scientists tired of boilerplate code, ML engineers seeking automation, and AI developers experimenting with LLMs. By focusing on model logic over ops, Sagify empowers users to deliver impactful solutions (whether semantic search, generative art, or predictive analytics) while adhering to best practices for secure, scalable AWS deployments.

For the best results in ML workflows or LLM integrations, start with Sagify today. Its blend of simplicity and power makes it the go-to tool for unlocking AWS SageMaker's full potential.

Best Alternative Tools to "Sagify"

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. Features H100 GPUs, per-second billing, and Python execution.

Labellerr is a data labeling and image annotation software that provides high-quality, scalable data labeling for AI and ML. It offers automated annotation, advanced analytics, and smart QA to help AI teams prepare data faster and more accurately.

Langbase is a serverless AI developer platform that allows you to build, deploy, and scale AI agents with memory and tools. It offers a unified API for 250+ LLMs and features like RAG, cost prediction and open-source AI agents.

Weco AI automates machine learning experiments using AIDE ML technology, optimizing ML pipelines through AI-driven code evaluation and systematic experimentation for improved accuracy and performance metrics.

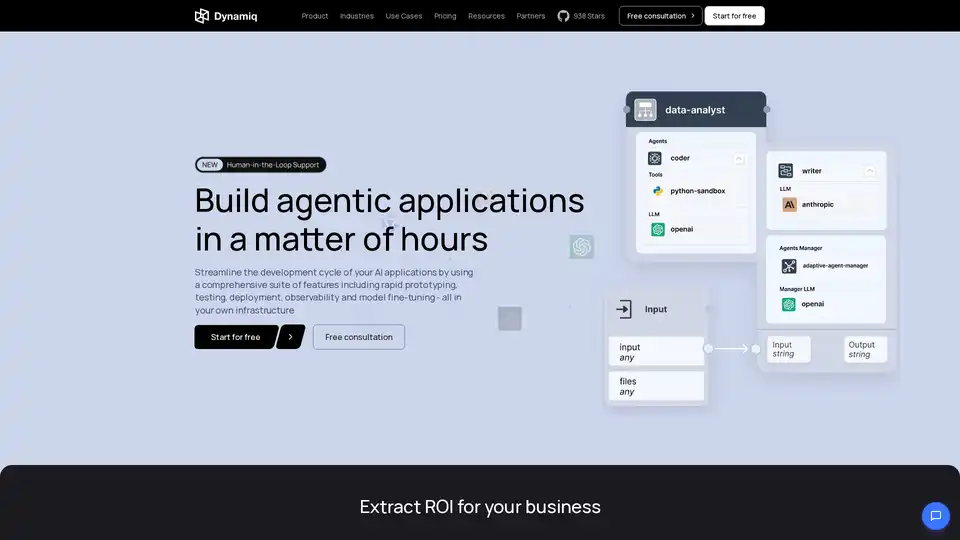

Dynamiq is an on-premise platform for building, deploying, and monitoring GenAI applications. Streamline AI development with features like LLM fine-tuning, RAG integration, and observability to cut costs and boost business ROI.

Xander is an open-source desktop platform that enables no-code AI model training. Describe tasks in natural language for automated pipelines in text classification, image analysis, and LLM fine-tuning, ensuring privacy and performance on your local machine.

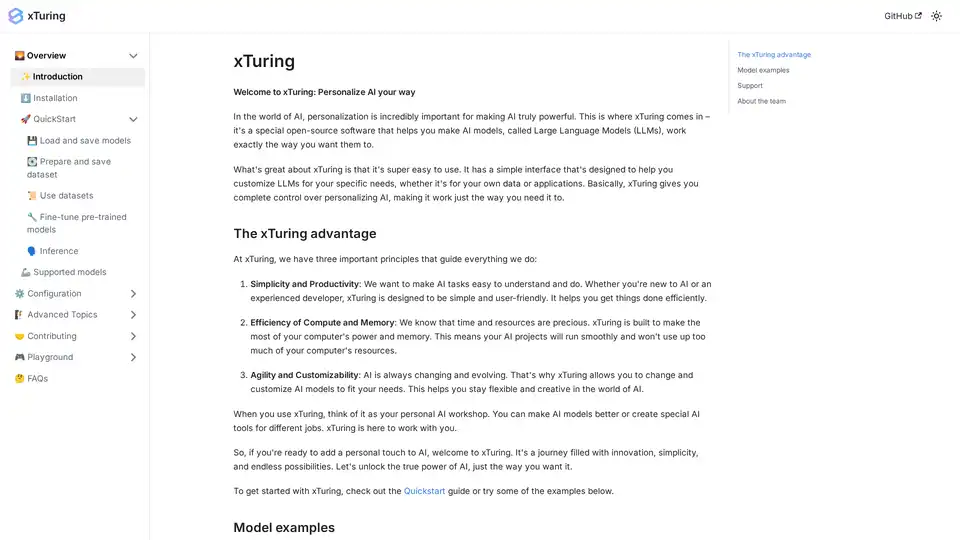

xTuring is an open-source library that empowers users to customize and fine-tune Large Language Models (LLMs) efficiently, focusing on simplicity, resource optimization, and flexibility for AI personalization.

Qubinets is an open-source platform simplifying the deployment and management of AI and big data infrastructure. Build, connect, and deploy with ease. Focus on code, not configs.

Openlayer is an enterprise AI platform providing unified AI evaluation, observability, and governance for AI systems, from ML to LLMs. Test, monitor, and govern AI systems throughout the AI lifecycle.

Trelent is an Enterprise AI platform that builds custom AI solutions tailored for your business, providing AI blueprints for rapid deployment.

Build AI-powered Data Apps in minutes with Morph. Python framework + hosting with built-in authentication, data connectors, CI/CD.

IOTA ERP and CRM offers ERP and CRM development, focusing on business automation with AI and LLM integration for enhanced efficiency.

Lumino is an easy-to-use SDK for AI training on a global cloud platform. Reduce ML training costs by up to 80% and access GPUs not available elsewhere. Start training your AI models today!

Anyscale, powered by Ray, is a platform for running and scaling all ML and AI workloads on any cloud or on-premises. Build, debug, and deploy AI applications with ease and efficiency.