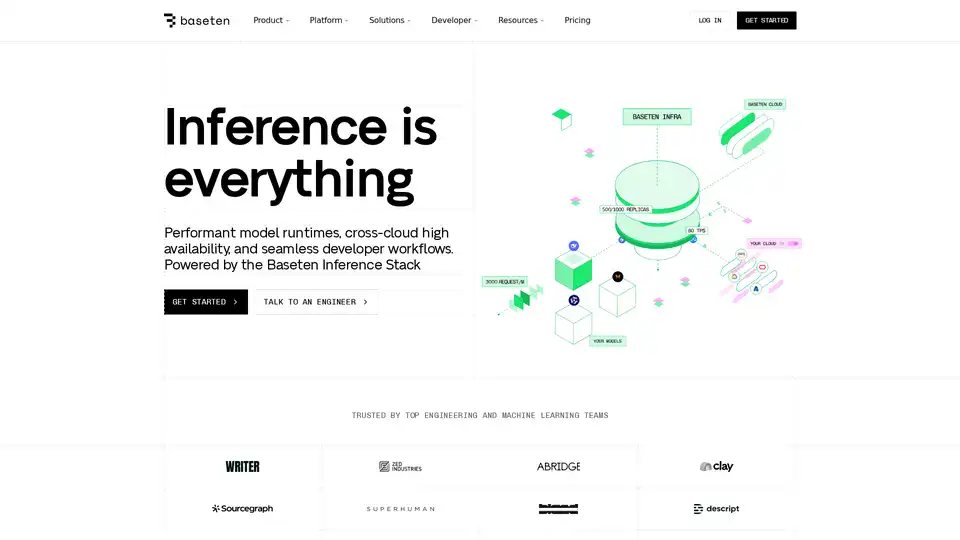

Baseten

Overview of Baseten

What is Baseten?

Baseten is a platform designed to simplify the deployment and scaling of AI models in production. It provides the infrastructure, tooling, and expertise needed to bring AI products to market quickly.

How does Baseten work?

Baseten’s platform is built around the Baseten Inference Stack, which includes cutting-edge performance research, cloud-native infrastructure, and a developer experience designed for inference.

Here's a breakdown of key components:

- Model APIs: Quickly test new workloads, prototype products, and evaluate the latest models with production-grade performance.

- Training on Baseten: Train models using inference-optimized infrastructure without restrictions or overhead.

- Applied Performance Research: Utilize custom kernels, decoding techniques, and advanced caching to optimize model performance.

- Cloud-Native Infrastructure: Scale workloads across any region and cloud (Baseten Cloud or your own), with fast cold starts and high uptime.

- Developer Experience (DevEx): Deploy, optimize, and manage models and compound AI solutions with a production-ready developer experience.

Key Features and Benefits

- Dedicated Deployments: Designed for high-scale workloads, allowing you to serve open-source, custom, and fine-tuned AI models on infrastructure built for production.

- Multi-Cloud Capacity Management: Run workloads on Baseten Cloud, self-host, or flex on demand. The platform is compatible with any cloud provider.

- Custom Model Deployment: Deploy any custom or proprietary model with out-of-the-box performance optimizations.

- Support for Gen AI: Custom performance optimizations tailored to generative AI applications.

- Model Library: Explore and deploy pre-built models with ease.

Specific Applications

Baseten caters to a range of AI applications, including:

- Image Generation: Serve custom models or ComfyUI workflows, fine-tune for your use case, or deploy any open-source model in minutes.

- Transcription: Utilizes a customized Whisper model for fast, accurate, and cost-efficient transcription.

- Text-to-Speech: Supports real-time audio streaming for low-latency AI phone calls, voice agents, translation, and more.

- Large Language Models (LLMs): Achieve higher throughput and lower latency for models like DeepSeek, Llama, and Qwen with Dedicated Deployments.

- Embeddings: Offers Baseten Embeddings Inference (BEI), which Baseten says delivers higher throughput and lower latency than other embedding inference solutions.

- Compound AI: Provides granular control over hardware and autoscaling for compound AI systems, improving GPU utilization and reducing latency.

Why Choose Baseten?

Here are several reasons why Baseten stands out:

- Performance: Optimized infrastructure for fast inference times.

- Scalability: Seamless scaling in Baseten's cloud or your own.

- Developer Experience: Tools and workflows designed for production environments.

- Flexibility: Supports various models, including open-source, custom, and fine-tuned models.

- Cost-Effectiveness: Optimizes resource utilization to reduce costs.

Who is Baseten for?

Baseten is ideal for:

- Machine Learning Engineers: Streamline model deployment and management.

- AI Product Teams: Accelerate time to market for AI products.

- Companies: Organizations seeking scalable and reliable AI infrastructure.

Customer Testimonials

- Nathan Sobo, Co-founder: Baseten has provided the best possible experience for users and the company.

- Sahaj Garg, Co-founder and CTO: Gained a lot of control over the inference pipeline and optimized each step with Baseten's team.

- Lily Clifford, Co-founder and CEO: Rime's state-of-the-art latency and uptime are driven by a shared focus on fundamentals with Baseten.

- Isaiah Granet, CEO and Co-founder: Enabled insane revenue numbers without worrying about GPUs and scaling.

- Waseem Alshikh, CTO and Co-founder of Writer: Achieved cost-effective, high-performance model serving for custom-built LLMs without burdening internal engineering teams.

Baseten provides a comprehensive solution for deploying and scaling AI models in production, offering high performance, flexibility, and a user-friendly developer experience. Whether you're working with image generation, transcription, LLMs, or custom models, Baseten aims to streamline the entire process.

Best Alternative Tools to Baseten

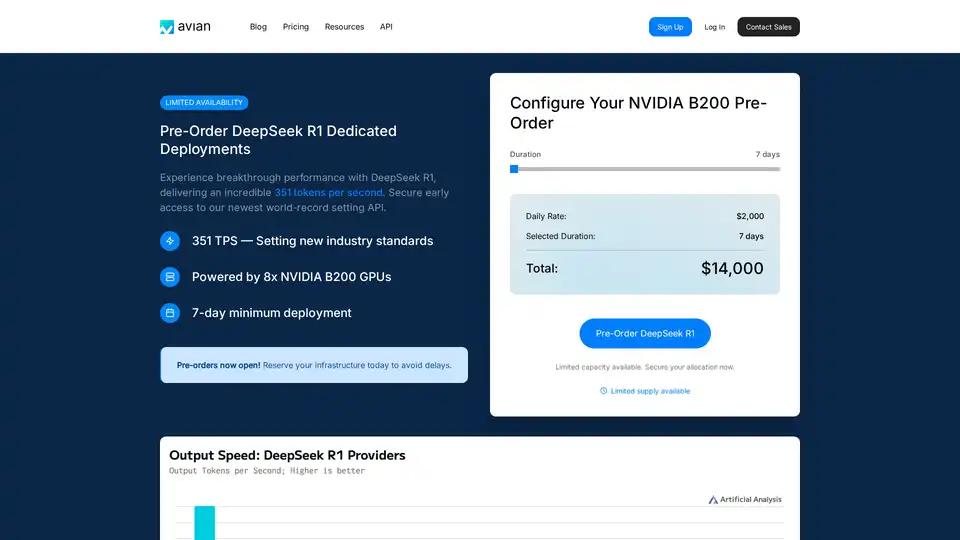

Avian API claims the fastest AI inference for open-source LLMs, reaching 351 tokens per second (TPS) on DeepSeek R1. Deploy any Hugging Face LLM at 3-10x speed through an OpenAI-compatible API, with enterprise-grade performance and privacy.

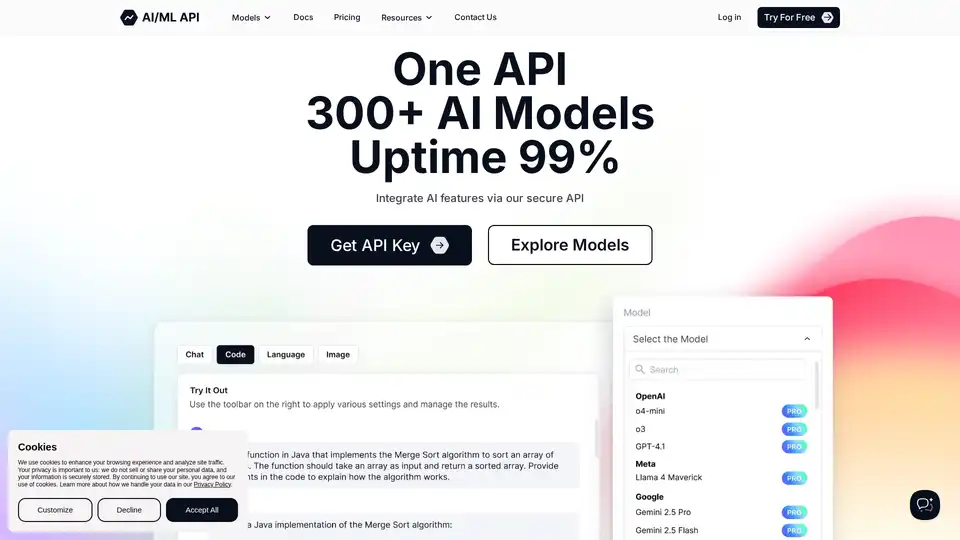

AIMLAPI provides access to 300+ AI models through a single, low-latency API. Save up to 80% compared to OpenAI with fast, cost-efficient AI solutions for machine learning.

Float16.cloud offers serverless GPUs for AI development. Deploy models instantly on H100 GPUs with pay-per-use pricing. Ideal for LLMs, fine-tuning, and training.

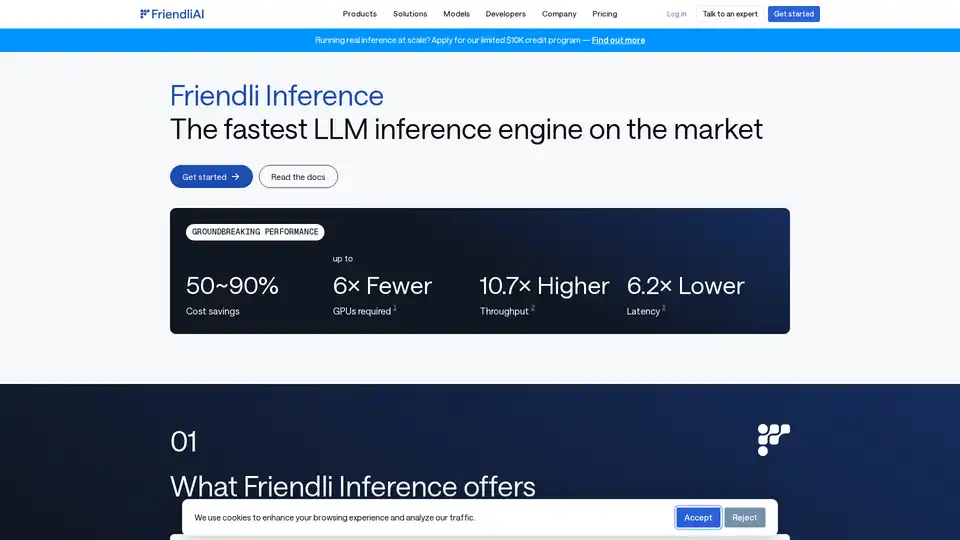

Friendli Inference bills itself as the fastest LLM inference engine. It is optimized for speed and cost-effectiveness and claims to cut GPU costs by 50-90% while delivering high throughput and low latency.

Explore NVIDIA NIM APIs for optimized inference and deployment of leading AI models. Build enterprise generative AI applications with serverless APIs or self-host on your GPU infrastructure.

Runpod is an AI cloud platform simplifying AI model building and deployment. Offering on-demand GPU resources, serverless scaling, and enterprise-grade uptime for AI developers.

GPUX is a serverless GPU inference platform that enables 1-second cold starts for AI models like StableDiffusionXL, ESRGAN, and AlpacaLLM with optimized performance and P2P capabilities.

SiliconFlow is a lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

Inferless offers blazing-fast serverless GPU inference for deploying ML models. It provides scalable, low-effort deployment of custom machine learning models, with features such as automatic scaling, dynamic batching, and enterprise security.

Runpod is an all-in-one AI cloud platform that simplifies building and deploying AI models. Train, fine-tune, and deploy AI effortlessly with powerful compute and autoscaling.

Synexa is a fast, stable, and developer-friendly serverless AI API platform that simplifies AI deployment, letting you run powerful AI models instantly with just one line of code.

Modal: Serverless platform for AI and data teams. Run CPU, GPU, and data-intensive compute at scale with your own code.

UltiHash: Lightning-fast, S3-compatible object storage built for AI, reducing storage costs without compromising speed for inference, training, and RAG.

Batteries Included is a self-hosted AI platform that simplifies deploying LLMs, vector databases, and Jupyter notebooks. Build world-class AI applications on your infrastructure.