Nebius

Overview of Nebius

Nebius: The Ultimate Cloud for AI Innovators

Nebius is a cloud platform for AI builders that aims to democratize AI infrastructure. It offers resources and services that support every stage of the AI development lifecycle.

What is Nebius?

Nebius is a cloud platform engineered for demanding AI workloads. It combines NVIDIA GPU accelerators with pre-configured drivers, high-performance InfiniBand networking, and orchestration tools such as Kubernetes or Slurm. This combination is intended to keep both model training and inference efficient at any scale.

Key Features and Benefits:

- Flexible Architecture: Seamlessly scale AI workloads from a single GPU to pre-optimized clusters with thousands of NVIDIA GPUs.

- Tested Performance: Engineered for demanding AI workloads, ensuring peak efficiency.

- Long-Term Value: Optimizes every layer of the stack for unparalleled efficiency and substantial customer value.

- Latest NVIDIA GPUs: Access to NVIDIA GB200 NVL72, HGX B200, H200, H100, and L40S GPUs, connected by an InfiniBand network with up to 3.2 Tbit/s per host.

- Managed Services: Reliable deployment of MLflow, PostgreSQL, and Apache Spark with zero maintenance effort.

- Cloud-Native Experience: Manage infrastructure as code using Terraform, API, and CLI, or use the intuitive console.

- Expert Support: 24/7 expert support and dedicated assistance from solution architects.
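To put the "up to 3.2 Tbit/s per host" InfiniBand figure in perspective, the sketch below converts it to gigabytes per second and estimates an idealized transfer time. The 140 GB payload is a hypothetical example (roughly a 70-billion-parameter model stored in 16-bit precision), not a figure from Nebius, and the calculation ignores protocol overhead, so real transfers would likely be slower.

```python
def tbit_per_s_to_gbyte_per_s(tbit_per_s: float) -> float:
    """Convert terabits per second to gigabytes per second (decimal units)."""
    # 1 Tbit = 1000 Gbit, and 8 bits = 1 byte.
    return tbit_per_s * 1000 / 8


def ideal_transfer_seconds(payload_gbytes: float, link_tbit_per_s: float) -> float:
    """Lower bound on transfer time, assuming the link runs at its full rated speed."""
    return payload_gbytes / tbit_per_s_to_gbyte_per_s(link_tbit_per_s)


if __name__ == "__main__":
    link = 3.2  # Tbit/s per host, the peak figure quoted in the feature list
    payload = 140.0  # GB, hypothetical checkpoint size chosen for illustration
    print(f"{link} Tbit/s is about {tbit_per_s_to_gbyte_per_s(link):.0f} GB/s")
    print(f"Ideal time to move {payload:.0f} GB: {ideal_transfer_seconds(payload, link):.2f} s")
```

Under these assumptions the quoted peak corresponds to about 400 GB/s per host, which is why multi-node training jobs that exchange large gradients or checkpoints benefit from this class of interconnect.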

How does Nebius work?

Nebius optimizes every layer of the AI stack to provide a high-performance environment. This includes:

- NVIDIA GPU Integration: Utilizes NVIDIA GPU accelerators with pre-configured drivers.

- High-Performance Networking: Employs InfiniBand technology to ensure fast data transfer.

- Orchestration Tools: Leverages Kubernetes and Slurm for efficient resource management.
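As a rough illustration of how the three layers above meet in practice, the sketch below builds a generic Kubernetes Pod manifest that requests GPUs. The source does not show any Nebius-specific API or manifest, so this is an assumption-laden example: `nvidia.com/gpu` is the resource name exposed by the standard NVIDIA device plugin for Kubernetes, and the pod name and container image are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a minimal Kubernetes Pod manifest that requests NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,  # hypothetical image, replace with your own
                    # GPUs are requested as an extended resource under limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest(
        name="train-job",  # hypothetical pod name
        image="example.com/my-training-image:latest",  # hypothetical image
        gpus=8,
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be piped to `kubectl apply -f -` on a cluster whose nodes already have GPU drivers and the device plugin installed.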

Why choose Nebius?

Nebius offers several advantages over competitors:

- Cost Savings: Discounted pricing on NVIDIA GPUs for customers who commit to hundreds of units for at least 3 months.

- Scalability: Ability to scale from a single GPU to thousands in a cluster.

- Reliability: Fully managed services ensure reliable deployment of critical tools and frameworks.

Who is Nebius for?

Nebius is ideal for:

- AI researchers and developers

- Machine learning engineers

- Data scientists

- Organizations needing scalable AI infrastructure

Practical Applications and Use Cases:

Nebius has been instrumental in accelerating AI innovation across various domains:

- CRISPR-GPT (Gene Editing): Enabled rapid model screening and fine-tuning, transforming gene editing into automated workflows.

- Shopify (E-commerce): Provides large-scale GPU clusters for AI model development, enhancing product search and checkout processes.

- vLLM (Open-Source LLM Inference): Optimized inference performance for transformer-based models with high throughput and seamless scalability.

- Brave Software (Web Search): Delivers real-time AI summaries for search queries while maintaining privacy standards.

- CentML Platform (AI Deployment): Optimizes inference platforms, delivering flexible scaling and enhanced hardware utilization.

- TheStage AI (Stable Diffusion): Reduces GPU costs through DNN optimization tools.

- Recraft (AI Design Tool): Trained the first generative AI model for designers from scratch.

- Wubble (Music Creation): Streamlined music creation with high-quality, royalty-free music generation.

- Simulacra AI (Quantum Chemistry): Generates high-precision datasets for molecular dynamics models.

- Quantori (Drug Discovery): Develops an AI framework for generating molecules with precise 3D shapes.

Nebius AI Cloud vs. Nebius AI Studio

Nebius offers two primary products:

- AI Cloud: Provides self-service access to AI infrastructure.

- AI Studio: Offers a managed environment for AI development.

Pricing

Nebius provides competitive pricing for NVIDIA GPUs, including:

- NVIDIA B200 GPU

- NVIDIA H200 GPU

- NVIDIA H100 GPU

In conclusion

Nebius stands out as a robust, flexible, and cost-efficient cloud platform designed to accelerate AI innovation. By providing access to cutting-edge NVIDIA GPUs, optimized infrastructure, and expert support, Nebius empowers AI builders to achieve remarkable results across various industries.

Best Alternative Tools to "Nebius"

Runpod is an AI cloud platform that simplifies AI model building and deployment. It offers on-demand GPU resources, serverless scaling, and enterprise-grade uptime for AI developers.

Lumino is an easy-to-use SDK for AI training on a global cloud platform. Reduce ML training costs by up to 80% and access GPUs not available elsewhere. Start training your AI models today!

Massed Compute offers on-demand GPU and CPU cloud computing infrastructure for AI, machine learning, and data analysis. Access high-performance NVIDIA GPUs with flexible, affordable plans.

Anyscale, powered by Ray, is a platform for running and scaling all ML and AI workloads on any cloud or on-premises. Build, debug, and deploy AI applications with ease and efficiency.

AIStocks.io is an AI-powered stock research platform providing real-time forecasts, automated trading signals, and comprehensive risk management tools for confident investment decisions.

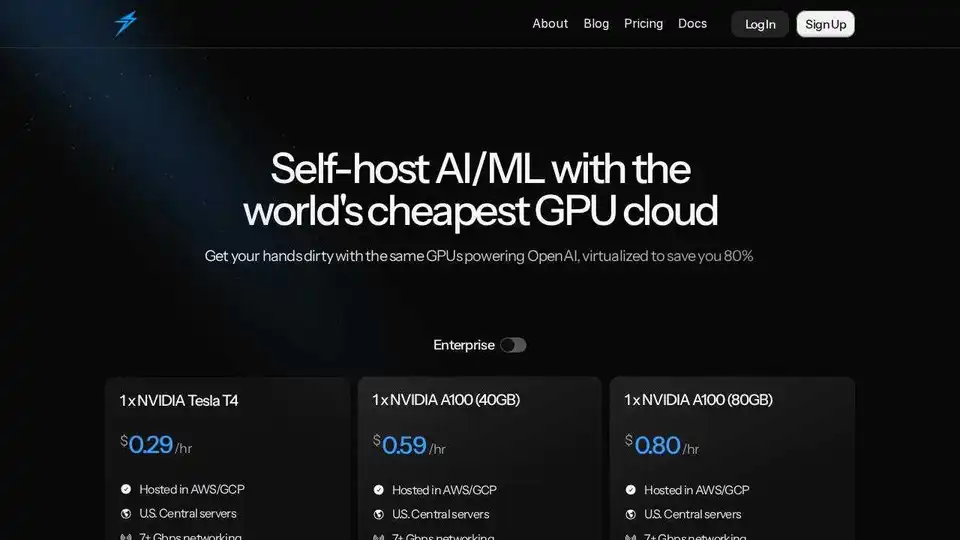

Thunder Compute is a GPU cloud platform for AI/ML, offering one-click GPU instances in VSCode at prices 80% lower than competitors. Perfect for researchers, startups, and data scientists.

Rent high-performance GPUs at low cost with Vast.ai. Instantly deploy GPU rentals for AI, machine learning, deep learning, and rendering. Flexible pricing & fast setup.

Cirrascale AI Innovation Cloud accelerates AI development, training, and inference workloads. Test and deploy on leading AI accelerators with high throughput and low latency.

Juice enables GPU-over-IP, allowing you to network-attach and pool your GPUs with software for AI and graphics workloads.

Phala Cloud offers a trustless, open-source cloud infrastructure for deploying AI agents and Web3 applications, powered by TEE. It ensures privacy, scalability, and is governed by code.

SaladCloud offers affordable, secure, and community-driven distributed GPU cloud for AI/ML inference. Save up to 90% on compute costs. Ideal for AI inference, batch processing, and more.

Vocareum provides an AI education platform with a virtual computer lab. It enhances computer science courses through hands-on learning and advanced tech solutions, with secure, scalable AI and cloud resources.

Denvr Dataworks provides high-performance AI compute services, including on-demand GPU cloud, AI inference, and a private AI platform. Accelerate your AI development with NVIDIA H100, A100 & Intel Gaudi HPUs.

Enable efficient LLM inference with llama.cpp, a C/C++ library optimized for diverse hardware, supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.