Runpod: The All-in-One AI Cloud Platform

What is Runpod?

Runpod is a cloud platform designed to streamline building, training, and deploying AI models. It targets common pain points for AI engineers, such as infrastructure management, scaling, and cold starts. By providing a fully loaded, GPU-enabled environment that can be spun up in under a minute, Runpod aims to make AI development more accessible and efficient.

Key Features:

- Effortless Deployment: Simplifies the process of getting AI models into production.

- Global Reach: Allows users to run workloads across multiple regions worldwide.

- Autoscaling: Dynamically adapts to workload demands, scaling from 0 to 100+ compute workers in real time.

- Zero Cold Starts: Always-on GPU workers keep execution uninterrupted.

- FlashBoot: Cuts cold starts to under 200 ms for workers that scale up on demand.

- Persistent Data Storage: Offers S3-compatible storage for full AI pipelines without egress fees.

How does Runpod work?

Runpod provides a platform where you can quickly deploy and scale your AI workloads. It offers several key features that make this possible:

- GPU-Enabled Environments: Runpod provides access to GPU-powered environments, essential for training and running AI models.

- Autoscaling: The platform can automatically scale resources based on demand, ensuring you have the compute power you need when you need it.

- Low Latency: With features like FlashBoot and global deployment options, Runpod helps reduce latency and improve performance.

- Persistent Storage: The platform offers persistent data storage, allowing you to manage your data without incurring egress fees.
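To make the autoscaling behavior concrete, here is a minimal Python sketch of a queue-based scaling rule. It is not Runpod's actual algorithm. The policy fields (`min_workers`, `max_workers`, `jobs_per_worker`) are hypothetical names chosen for illustration. A floor above zero models always-on workers, which never incur a cold start. A floor of zero lets the pool scale down to nothing when the queue is empty.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical knobs for illustration; not Runpod's actual setting names.
    min_workers: int = 0        # > 0 keeps "always-on" workers warm
    max_workers: int = 100      # upper bound on concurrent workers
    jobs_per_worker: int = 4    # queued jobs one worker is expected to absorb


def desired_workers(queue_depth: int, policy: ScalingPolicy) -> int:
    """Return how many workers to run for the current queue depth."""
    if queue_depth < 0:
        raise ValueError("queue_depth must be non-negative")
    if policy.jobs_per_worker <= 0:
        raise ValueError("jobs_per_worker must be positive")
    # Integer ceiling division: 5 queued jobs at 4 per worker needs 2 workers.
    needed = (queue_depth + policy.jobs_per_worker - 1) // policy.jobs_per_worker
    # Clamp between the always-on floor and the configured ceiling.
    return max(policy.min_workers, min(needed, policy.max_workers))


if __name__ == "__main__":
    scale_to_zero = ScalingPolicy(min_workers=0, max_workers=100)
    always_on = ScalingPolicy(min_workers=2, max_workers=100)
    for depth in (0, 5, 1000):
        print(depth, desired_workers(depth, scale_to_zero), desired_workers(depth, always_on))
```

Under this rule, an empty queue yields 0 workers with a floor of 0 but 2 workers with a floor of 2. A burst of 1,000 jobs is capped at 100 workers either way.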

Use Cases:

Runpod is suitable for a wide range of AI applications, including:

- Inference: Serving inference for image, text, and audio generation.

- Fine-tuning: Training custom models on specific datasets.

- Agents: Building intelligent agent-based systems and workflows.

- Compute-Heavy Tasks: Rendering and simulation workloads.

Benefits:

- Cost-Effective: Pay only for what you use, billed by the millisecond.

- Scalable: Instantly respond to demand with GPU workers that scale from 0 to thousands in seconds.

- Reliable: Guarantees 99.9% uptime for critical workloads.

- Secure: Committed to security, with SOC 2, HIPAA, and GDPR compliance efforts in progress.
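The per-millisecond billing and the 99.9% uptime figure both reduce to simple arithmetic. The sketch below compares millisecond billing with rounding up to whole seconds, and it computes the downtime a 99.9% target allows over a 30-day month. It uses a hypothetical price of $0.00050 per GPU-second, which is not an actual Runpod rate. `Decimal` is used to avoid floating-point rounding in currency values.

```python
from decimal import Decimal


def billed_cost(duration_ms: int, price_per_second: Decimal, granularity_ms: int = 1) -> Decimal:
    """Cost of one request when usage is rounded up to the billing increment."""
    if granularity_ms <= 0:
        raise ValueError("granularity_ms must be positive")
    # Ceiling division: round the duration up to the next whole increment.
    increments = -(-duration_ms // granularity_ms)
    billed_ms = increments * granularity_ms
    return Decimal(billed_ms) / Decimal(1000) * price_per_second


def downtime_budget_minutes(uptime_pct: Decimal, period_days: int = 30) -> Decimal:
    """Maximum downtime (in minutes) allowed by an uptime target over a period."""
    total_minutes = Decimal(period_days * 24 * 60)
    return total_minutes * (Decimal(100) - uptime_pct) / Decimal(100)


if __name__ == "__main__":
    price = Decimal("0.00050")  # hypothetical $ per GPU-second
    print(billed_cost(1250, price))                       # per-millisecond billing
    print(billed_cost(1250, price, granularity_ms=1000))  # rounded up to whole seconds
    print(downtime_budget_minutes(Decimal("99.9")))
```

For a 1,250 ms request, millisecond billing charges for 1.25 s ($0.000625). Rounding up to whole seconds would charge for 2 s ($0.001). A 99.9% target over 30 days leaves a budget of about 43.2 minutes of downtime.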

How to get started with Runpod?

- Sign Up: Create an account on the Runpod platform.

- Explore Templates: Use pre-built templates to kickstart your AI workflows.

- Customize: Configure GPU models, scaling behaviors, and other settings to fit your specific needs.

- Deploy: Launch your model and let Runpod handle deployment and scaling, so you can focus on innovation rather than infrastructure management.
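For Runpod's serverless endpoints, deploying usually comes down to a handler function packaged into a worker image. The sketch below assumes the job shape used by the `runpod` Python SDK, which is a dict with an `input` key. With the SDK installed, the function would be registered via `runpod.serverless.start({"handler": handler})`. That call is left out here so the example runs without the SDK. The `prompt` and `max_tokens` fields and the `generate` stub are hypothetical stand-ins for a real model.

```python
from typing import Any, Dict


def generate(prompt: str, max_tokens: int) -> str:
    # Hypothetical placeholder for real model inference: it simply keeps
    # the first max_tokens whitespace-separated words of the prompt.
    return " ".join(prompt.split()[:max_tokens])


def handler(job: Dict[str, Any]) -> Dict[str, Any]:
    """Serverless-style handler: read job["input"], return a JSON-serializable dict."""
    job_input = job.get("input", {})
    prompt = job_input.get("prompt")
    if not isinstance(prompt, str) or not prompt:
        # Return a readable error payload instead of raising.
        return {"error": "input.prompt must be a non-empty string"}
    max_tokens = int(job_input.get("max_tokens", 16))
    return {"output": generate(prompt, max_tokens)}


if __name__ == "__main__":
    # Local smoke test with a job shaped like {"id": ..., "input": {...}}.
    print(handler({"id": "local-test", "input": {"prompt": "hello from a GPU worker", "max_tokens": 3}}))
```

Keeping the handler a plain function makes it easy to test locally before building the worker image.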

Why is Runpod important?

Runpod addresses the challenges of deploying AI models by providing a scalable, cost-effective, and easy-to-use platform. It allows AI engineers to focus on building and innovating without being bogged down by infrastructure management. With features like autoscaling, zero cold starts, and persistent data storage, Runpod is a valuable tool for anyone working with AI.

Customer Testimonials:

Several companies have praised Runpod for its ability to scale GPU infrastructure and reduce infrastructure costs. For example, Bharat, Co-founder of InstaHeadshots, stated that Runpod has allowed them to focus entirely on growth and product development without worrying about GPU infrastructure.

Conclusion:

Runpod is a powerful and versatile cloud platform for AI development and deployment. Its focus on simplicity, scalability, and cost-effectiveness makes it an attractive option for businesses and individuals looking to leverage the power of AI. By abstracting away infrastructure management, Runpod lets users concentrate on building their AI products.

Best Alternative Tools to "Runpod"

Modal: Serverless platform for AI and data teams. Run CPU, GPU, and data-intensive compute at scale with your own code.

Lumino is an easy-to-use SDK for AI training on a global cloud platform. Reduce ML training costs by up to 80% and access GPUs not available elsewhere. Start training your AI models today!

GreenNode offers comprehensive AI-ready infrastructure and cloud solutions with H100 GPUs, starting from $2.34/hour. Access pre-configured instances and a full-stack AI platform for your AI journey.

Anyscale, powered by Ray, is a platform for running and scaling all ML and AI workloads on any cloud or on-premises. Build, debug, and deploy AI applications with ease and efficiency.

Novita AI provides 200+ Model APIs, custom deployment, GPU Instances, and Serverless GPUs. Scale AI, optimize performance, and innovate with ease and efficiency.

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. Features H100 GPUs, per-second billing, and Python execution.

Denvr Dataworks provides high-performance AI compute services, including on-demand GPU cloud, AI inference, and a private AI platform. Accelerate your AI development with NVIDIA H100, A100 & Intel Gaudi HPUs.

Nebius is an AI cloud platform designed to democratize AI infrastructure, offering flexible architecture, tested performance, and long-term value with NVIDIA GPUs and optimized clusters for training and inference.

llama.cpp is a C/C++ library for efficient LLM inference, optimized for diverse hardware and supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.

SaladCloud offers affordable, secure, and community-driven distributed GPU cloud for AI/ML inference. Save up to 90% on compute costs. Ideal for AI inference, batch processing, and more.

LM Studio is a user-friendly desktop application for downloading and running open-source large language models (LLMs) like Llama and Gemma locally on your computer. It features an in-app chat UI and an OpenAI-compatible server for offline AI model interaction, making advanced AI accessible without programming skills.

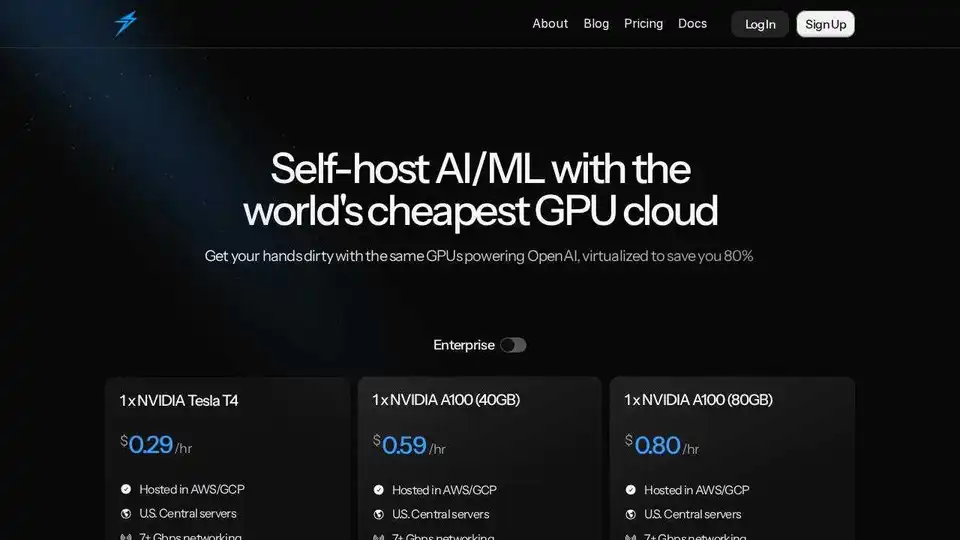

Thunder Compute is a GPU cloud platform for AI/ML, offering one-click GPU instances in VS Code at prices 80% lower than competitors. Perfect for researchers, startups, and data scientists.