Deep Comparison and Selection Guide for Mainstream AI Agent Frameworks (LangChain vs. LangGraph vs. CrewAI vs. AutoGen vs. Semantic Kernel, 2026)

With continuous breakthroughs in Large Language Model (LLM) capabilities, AI Agents are transitioning from proof-of-concept to industrial-grade implementation. Between 2023 and 2024 alone, the number of open-source AI Agent frameworks grew several-fold, with over 20,000 "AI Agent" related repositories now appearing on GitHub. For developers, technical leads, and entrepreneurs, choosing between solutions like LangChain, AutoGen, and CrewAI has become one of the first and most consequential decisions in building AI applications. Should you prioritize the most mature ecosystem or a clearer, more controllable architecture? Are you looking for a quick prototype, or for long-term engineering stability and enterprise scalability?

This article aims to provide a "clear and actionable selection roadmap" for this decision. Based on public data and community trends as of December 2025, we will conduct a systematic horizontal comparison of five mainstream AI Agent frameworks across multiple dimensions: core design paradigms, engineering complexity, ecosystem maturity, real-world project feedback, and the trade-off between performance and cost. This guide goes beyond a mere list of features, combining specific business scenarios, team sizes, and technical backgrounds to distill a repeatable methodology for framework selection.

The content of this article is based on our team's practical experience in projects such as content automation systems, the NavGood AI tool navigation site, and internal corporate process agents. We aim to bridge the gap between concepts, engineering, and business needs to help you find the right AI Agent product or framework during this critical 2026 acceleration phase of AI Agent adoption.

Recommended Audience:

- Tech enthusiasts and entry-level learners

- Enterprise decision-makers and business leads

- General users interested in the future trends of AI

Table of Contents:

- Part 1: Overview of the AI Agent Framework Landscape

- Part 2: Deep Comparison of the Five Mainstream Frameworks

- Part 3: Detailed Framework Analysis

- Part 4: Practical Selection Guide

- Part 5: Performance and Cost Considerations

- Part 6: Future Trends (2025–2026)

- Conclusion: No "Best" Framework, Only the "Right" Choice

- Frequently Asked Questions (FAQ)

- Resources

Part 1: Overview of the AI Agent Framework Landscape

What is an AI Agent Framework?

An AI Agent framework is a collection of tools and libraries designed to simplify the process of building intelligent agents based on LLMs that possess autonomy, planning, tool usage, and memory capabilities. Its core value lies in abstracting the universal capabilities required by an Agent—such as Chain-of-Thought planning, short/long-term memory management, tool-calling orchestration, and multi-agent collaboration—into reusable modules. This allows developers to focus on business logic rather than building complex state and control flows from scratch.
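To make those abstractions concrete, here is a framework-agnostic sketch of the loop an agent framework packages: a planning step, tool calls, and short-term memory. It illustrates the idea under stated assumptions and is not any framework's API. `MiniAgent`, `scripted_policy`, and `calculator` are hypothetical names, and the scripted policy stands in for the LLM that would normally decide the next action.

```python
from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[str], str]
# A policy reads memory and returns (action, argument); in a real framework this is an LLM call.
Policy = Callable[[list[str]], tuple[str, str]]


@dataclass
class MiniAgent:
    """Hypothetical minimal agent: plan -> call tool -> remember, until 'finish'."""
    policy: Policy
    tools: dict[str, Tool]
    memory: list[str] = field(default_factory=list)  # short-term memory of the run
    max_steps: int = 5  # hard stop so a confused policy cannot loop forever

    def run(self, task: str) -> str:
        self.memory.append(f"task: {task}")
        for _ in range(self.max_steps):
            action, arg = self.policy(self.memory)  # planning step
            if action == "finish":
                return arg
            result = self.tools[action](arg)  # tool-calling step
            self.memory.append(f"{action}({arg}) -> {result}")  # store the observation
        return "stopped: step limit reached"


def scripted_policy(memory: list[str]) -> tuple[str, str]:
    # Stand-in for an LLM: call the calculator once, then answer with its result.
    last = memory[-1]
    if last.startswith("task:"):
        return "calculator", "6*7"
    return "finish", last.split("-> ")[-1]


def calculator(expr: str) -> str:
    # Deliberately tiny tool: multiplies two integers written as "a*b".
    a, b = expr.split("*")
    return str(int(a) * int(b))


agent = MiniAgent(policy=scripted_policy, tools={"calculator": calculator})
print(agent.run("What is 6 times 7?"))  # → 42
```

Everything a framework adds (retries, tracing, persistent memory, multi-agent routing) layers on top of a loop shaped roughly like this one.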

The Three Stages of AI Agent Framework Evolution

- Early Experimental Phase (2022 - Early 2023): Represented by LangChain and BabyAGI, this phase centered on proofs of concept and introduced "Chain" logic to LLM applications. Frameworks solved basic tool calling and memory but had relatively simple architectures.

- Feature Refinement Phase (2023 - 2024): As applications grew more ambitious, demand for complex workflows surged. Frameworks represented by LangGraph, CrewAI, and AutoGen began emphasizing state management, visual orchestration, role-based collaboration, and conversation scheduling, evolving from simple linear chains to more complex paradigms like graphs and teams.

- Ecosystem Maturity & Specialization (2025 - Present): Frameworks began to differentiate and integrate deeply with cloud services and enterprise IT stacks. The focus shifted to production readiness, performance optimization, security, compliance, and low-code experiences. The expansion of Semantic Kernel and Haystack in the enterprise market is a hallmark of this stage.

Current AI Agent Market Landscape

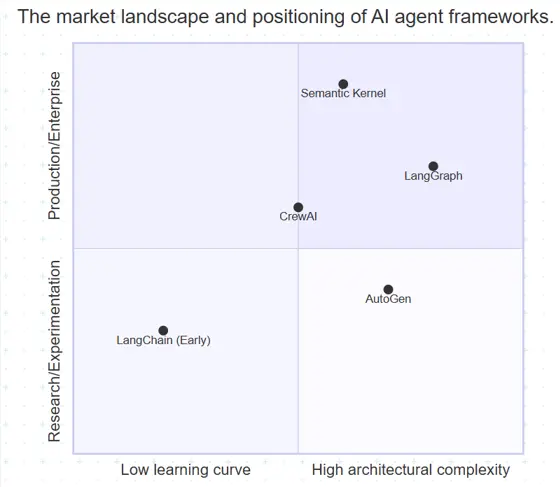

The current market presents a "one leader, many challengers" structure. The diagram above illustrates the niches and relationships of mainstream frameworks, summarized as follows:

- Leader: LangChain holds the first-mover advantage and the largest community, offering the most complete ecosystem of tools, integrations, and knowledge bases. It remains the top choice for many developers.

- Strong Challengers: CrewAI stands out in team collaboration scenarios with its intuitive "Role-Task-Process" model; AutoGen has built a deep moat in multi-agent conversation orchestration and research.

- Ecosystem Evolvers: LangGraph, as an "upgrade" within the LangChain ecosystem, focuses on solving complex, stateful business processes using a state machine graph. It is becoming the new standard for high-complexity applications.

- Enterprise Players: Semantic Kernel, backed by Microsoft, integrates deeply with Azure OpenAI and the .NET ecosystem, offering natural advantages in security, observability, and integration with existing corporate systems.

Part 2: Deep Comparison of the Five Mainstream Frameworks

Comparison Dimensions

We evaluate these frameworks across six core dimensions: Design Philosophy (determines the mindset), Architectural Features (impacts scalability and maintenance), Learning Curve (team onboarding cost), Community Vitality (ability to get help and ensure long-term evolution), Enterprise Support (crucial for production), and Performance (the foundation for large-scale apps).

Detailed Comparison Table

| Feature Dimension | LangChain | LangGraph | CrewAI | AutoGen | Semantic Kernel |
|---|---|---|---|---|---|
| Core Positioning | General AI App Framework | State-driven Complex Workflow Framework | Role-driven Team Collaboration Framework | Multi-Agent Conversation Orchestration | Enterprise AI Integration Framework |
| Design Philosophy | "LEGO-style" modularity | "Visual flowchart" state machine | "Company structure" role synergy | "Group chat" style conversation orchestration | "Plugin-based skill" planning and execution |
| Core Architecture | Chains, Agents, Tools | State Graph, Nodes, Edges | Agent, Task, Process, Crew | ConversableAgent, GroupChat | Plugins, Planners, Memories |
| Learning Curve | Moderate: Many concepts, but rich ecosystem | Moderate-High: Requires understanding state/loops | Low: Intuitive and straightforward concepts | High: Flexible but complex configuration | Moderate: Clear concepts, needs ecosystem familiarity |
| GitHub Stars (Dec 2025) | ~122k+ | ~22.3k+ | ~41.5k+ | ~52.7k+ | ~26.9k+ |
| Docs/Community | Excellent, massive tutorials | Good, rapidly improving | Excellent, rich use cases | Moderate, API-heavy | Excellent, enterprise-grade docs |
| Multi-Agent Support | Basic, requires manual orchestration | Native & Excellent, natural graph structure | Core Feature, built for collaboration | Core Feature, specialized in conversations | Achieved via Planner combinations |
| Tool/Integration | Extremely Rich, covers all major services | Relies on LangChain, focuses on flow | Good, focuses on common tools | Good, supports customization | Deep Microsoft Ecosystem (Azure, Copilot) |
| Visual Debugging | Limited (LangSmith) | Strong Advantage, visual execution | Basic process visualization | Basic (AutoGen Studio); otherwise 3rd-party tools | Provided via Azure services |
| Enterprise Features | Via LangSmith/LangServe | High, supports complex logic tracking | Moderate | Lower, research-oriented | Very High, security, monitoring, compliance |
| Best Scenario | Quick prototypes, education, custom components | Customer support, approval flows, complex ETL workflows | Content creation, market analysis, team-based tasks | Academic research, complex debates, simulations | High-compliance industries (Finance/Healthcare) |

Data Sources:

https://github.com/langchain-ai/langchain

https://github.com/langchain-ai/langgraph

https://github.com/crewaiinc/crewai

https://github.com/microsoft/autogen

https://github.com/microsoft/semantic-kernel

Core Design Paradigms Behind the Frameworks

The differences between frameworks stem from their underlying design paradigms:

| Architectural Paradigm | Core Idea | Representative Framework | Pros | Cons |
|---|---|---|---|---|
| Chain / Pipeline | Breaks tasks into linear execution "chains" | LangChain (Early) | Simple and intuitive | Hard to handle loops/branches |
| Graph / State Machine | Uses nodes and edges to form stateful workflows | LangGraph | Powerful and flexible, well suited to complex logic | High learning curve and design complexity |
| Role-based Collaboration | Assigns Agents clear roles/tasks to simulate a team | CrewAI | Natural modeling, easy division of labor | Less flexible than graphs for branching, non-linear flows |
| Conversation-Oriented | Uses "Dialogue" as the core mechanism for Agent interaction | AutoGen | Ideal for negotiation or iterative communication | Unpredictable execution flow, hard to debug |
| Plugin / Skill-based | Encapsulates capabilities as plugins, dynamically called by a Planner | Semantic Kernel | Decoupled, easy to extend and integrate | Requires a capable Planner; planning adds overhead |

Part 3: Detailed Framework Analysis

LangChain: The Most Complete General Framework

Core Advantage: The ecosystem is its biggest moat. It boasts the most comprehensive documentation, the most community tutorials, and the richest third-party integrations (hundreds of official and community integrations covering major databases, vector stores, and SaaS services). Its modular design allows developers to assemble prototypes like LEGO bricks.

Typical Applications:

- Quick Proof-of-Concept: For example, building a Q&A bot based on private documents in a single afternoon.

- Learning AI Agent Principles: Due to its clear modularity, it is the best textbook for understanding concepts like Tools, Memory, and Chains.

- Highly Customized Needs: When you need to deeply customize a specific link (like a unique memory indexing method), LangChain's low-level interfaces make it possible.

Note: "Comprehensive" brings complexity. It might feel bloated for simple, fixed workflows. Its Agent executor may struggle with extremely complex, multi-step loops; in such cases, consider LangGraph.

In our NavGood testing for automation scripts, we initially tried handling a 10-step scraping workflow with a standard LangChain Agent. Because the Chain behaved like a "black box," recovering state after an intermediate step failed was difficult. This prompted us to migrate our core logic to LangGraph.
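The recovery problem described above is what checkpointing addresses. The sketch below is framework-agnostic and is not LangGraph's checkpointer API: an in-memory dict (a hypothetical stand-in for a persistent store) records the state after each completed step, so a rerun skips finished steps and resumes at the one that failed. The step functions are hypothetical placeholders for scraping and LLM calls.

```python
from typing import Callable

Step = Callable[[dict], dict]


def run_with_checkpoint(steps: list[tuple[str, Step]], state: dict, checkpoint: dict) -> dict:
    """Run named steps in order, reusing any result already saved in `checkpoint`.

    `checkpoint` maps step name -> state after that step; a real system would
    persist it (database, file) instead of keeping it in memory.
    """
    for name, step in steps:
        if name in checkpoint:
            state = checkpoint[name]  # resume: reuse the saved result, no re-execution
            continue
        state = step(dict(state))  # pass a copy so a failing step cannot alter saved state
        checkpoint[name] = state
    return state


calls = {"n": 0}  # counts "expensive" step executions (think: LLM or network calls)
clean_attempts = {"n": 0}


def fetch(s: dict) -> dict:
    calls["n"] += 1
    return {**s, "raw": "<html>42</html>"}


def clean(s: dict) -> dict:
    calls["n"] += 1
    clean_attempts["n"] += 1
    if clean_attempts["n"] == 1:
        raise RuntimeError("transient failure")  # simulate a flaky intermediate step
    return {**s, "text": s["raw"].replace("<html>", "").replace("</html>", "")}


def report(s: dict) -> dict:
    calls["n"] += 1
    return {**s, "report": f"value={s['text']}"}


steps = [("fetch", fetch), ("clean", clean), ("report", report)]
ckpt: dict = {}
try:
    run_with_checkpoint(steps, {}, ckpt)  # fails at "clean"; "fetch" is already saved
except RuntimeError:
    pass
final = run_with_checkpoint(steps, {}, ckpt)  # resumes at "clean"
print(final["report"], calls["n"])  # → value=42 4
```

Without the checkpoint, the retry would have re-run `fetch`, for five step executions instead of four; in a 10-step pipeline, the savings grow with how late the failing step sits.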

LangGraph: The Top Choice for Complex Business Processes

Core Advantage: State management and visualization. It abstracts the entire Agent system into a directed graph where each node is a function or sub-agent, and edges define the conditions for state transition. This enables developers to clearly model business processes involving loops, conditional branching, parallelism, and wait states.

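Relationship with LangChain: Built by the LangChain team, it serves as the ecosystem's lower-level orchestration layer for agents (and can also be used without LangChain), specifically designed to address LangChain's shortcomings in complex, stateful workflows. The two interoperate closely: LangChain models, tools, and integrations can be used directly inside LangGraph nodes.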

Use Cases:

- Customer Service Ticket Handling: Routing based on user intent (returns, inquiries, complaints), with potential back-and-forth between nodes.

- Multi-step Approval Processes: Simulating "Submit → Manager Approval → Finance Approval → Archive," where rejection at any stage requires returning for modification.

- Data Processing Pipelines: Executing a "Scrape → Clean → Validate → Analyze → Report" pipeline with retries or exception branches.
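The approval flow above maps naturally onto nodes and conditional edges. The following plain-Python sketch shows that state-machine idea; it is not LangGraph code. `MiniGraph`, its methods, and the approval thresholds are hypothetical. LangGraph's own `StateGraph` expresses the same pattern through its own API, so consult its documentation for real usage.

```python
from typing import Callable, TypedDict


class ApprovalState(TypedDict):
    amount: int
    revisions: int
    status: str
    history: list[str]


Node = Callable[[ApprovalState], ApprovalState]
Router = Callable[[ApprovalState], str]


class MiniGraph:
    """Toy state machine: nodes transform state, routers choose the next node."""

    END = "__end__"

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.routers: dict[str, Router] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_router(self, src: str, router: Router) -> None:
        self.routers[src] = router

    def run(self, start: str, state: ApprovalState, max_steps: int = 20) -> ApprovalState:
        current = start
        for _ in range(max_steps):  # guard against cycles that never converge
            state = self.nodes[current](state)
            state["history"].append(current)
            current = self.routers[current](state)
            if current == self.END:
                return state
        raise RuntimeError("step limit reached")


# Hypothetical business rules: managers approve up to 5000, finance up to 3000.
def submit(s: ApprovalState) -> ApprovalState:
    return {**s, "status": "submitted"}


def manager_review(s: ApprovalState) -> ApprovalState:
    return {**s, "status": "approved" if s["amount"] <= 5000 else "rejected"}


def finance_review(s: ApprovalState) -> ApprovalState:
    return {**s, "status": "approved" if s["amount"] <= 3000 else "rejected"}


def revise(s: ApprovalState) -> ApprovalState:
    return {**s, "amount": s["amount"] // 2, "revisions": s["revisions"] + 1}


def archive(s: ApprovalState) -> ApprovalState:
    return {**s, "status": "archived"}


g = MiniGraph()
for name, fn in [("submit", submit), ("manager", manager_review),
                 ("finance", finance_review), ("revise", revise), ("archive", archive)]:
    g.add_node(name, fn)
g.add_router("submit", lambda s: "manager")
g.add_router("manager", lambda s: "finance" if s["status"] == "approved" else "revise")
g.add_router("finance", lambda s: "archive" if s["status"] == "approved" else "revise")
g.add_router("revise", lambda s: "manager")  # any rejection loops back for re-review
g.add_router("archive", lambda s: MiniGraph.END)

result = g.run("submit", {"amount": 8000, "revisions": 0, "status": "", "history": []})
print(result["status"], result["amount"], result["revisions"])  # → archived 2000 2
```

The `history` list records the path taken (two rejections, each looping back through `revise`), which is the kind of execution trace that makes graph-based workflows easier to debug than opaque chains.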

Note: Requires a shift from "chain" thinking to "graph" thinking. Designing a good State Schema is key; a poorly designed schema makes it hard to trace what each node reads and writes.

Beginners often cram all intermediate results, contexts, and logs into a single State object, making it bloated. Our suggestion: split State into "Core Business State" and "Temporary Calculation Data," keeping only essential cross-node info in the State.
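One way to apply that split, sketched with plain dataclasses rather than any framework's state API: `CoreState` holds only the facts later nodes need, while a separate `Scratch` object absorbs raw model output and logs. All names here, and the hard-coded "model output", are hypothetical.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class CoreState:
    """Cross-node business facts: small, stable, and passed between nodes."""
    ticket_id: str
    intent: str = ""
    resolution: str = ""


@dataclass
class Scratch:
    """Temporary, node-local data: raw LLM output, logs, intermediate numbers."""
    raw_outputs: list[str] = field(default_factory=list)
    logs: list[str] = field(default_factory=list)


def classify_node(state: CoreState, scratch: Scratch) -> CoreState:
    raw = "intent=refund; confidence=0.91"  # hypothetical model output
    scratch.raw_outputs.append(raw)  # verbose data stays out of the shared state
    scratch.logs.append(f"classified {state.ticket_id}")
    intent = raw.split(";")[0].split("=")[1]
    return replace(state, intent=intent)  # only the distilled fact is promoted


scratch = Scratch()
new_state = classify_node(CoreState(ticket_id="T-1"), scratch)
print(new_state)  # → CoreState(ticket_id='T-1', intent='refund', resolution='')
```

Making `CoreState` frozen forces each node to return an explicit new state, which keeps state transitions easy to inspect.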

CrewAI: The Choice for Team Collaboration

Core Advantage: Role-driven abstraction. It uses Agent (defining roles, goals, backstories), Task (defining objectives and expected output), and Crew (organizing Agents and Tasks under a defined Process, either sequential or hierarchical). This closely mirrors how humans collaborate in teams.

Unique Concept: The hierarchical mode in Process allows for a "Manager" Agent to coordinate "Worker" Agents, perfect for simulating corporate hierarchies.
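The Agent/Task/Process split can be illustrated without CrewAI itself. The sketch below is plain Python, not CrewAI's API: `Agent`, `Task`, `run_sequential`, and `run_hierarchical` are hypothetical names, each agent's `work` function stands in for an LLM call, and a keyword rule stands in for the manager LLM in hierarchical mode.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Agent:
    role: str
    goal: str
    work: Callable[[str, str], str]  # (task description, context) -> output; an LLM call in practice


@dataclass
class Task:
    description: str
    expected_output: str
    agent: Optional[Agent] = None  # left empty when a manager assigns the work


def run_sequential(tasks: list[Task]) -> list[str]:
    """Relay: each task's assigned agent receives the previous task's output as context."""
    outputs: list[str] = []
    context = ""
    for task in tasks:
        if task.agent is None:
            raise ValueError("sequential mode needs an agent on every task")
        context = task.agent.work(task.description, context)
        outputs.append(context)
    return outputs


def run_hierarchical(pick: Callable[[Task, list[Agent]], Agent],
                     tasks: list[Task], workers: list[Agent]) -> list[str]:
    """A manager (`pick`) decides which worker handles each task."""
    outputs: list[str] = []
    context = ""
    for task in tasks:
        worker = pick(task, workers)
        context = worker.work(task.description, context)
        outputs.append(context)
    return outputs


planner = Agent("Planner", "Produce an outline", lambda d, c: "outline: intro, body, summary")
writer = Agent("Writer", "Draft from the outline", lambda d, c: f"draft based on [{c}]")

crew = [Task("[Planner] Outline a post on agents", "3-part outline", planner),
        Task("[Writer] Write the post", "Markdown draft", writer)]
print(run_sequential(crew)[-1])  # → draft based on [outline: intro, body, summary]


def keyword_manager(task: Task, workers: list[Agent]) -> Agent:
    # Stand-in for a manager LLM: route by the role tag in the task description.
    return next(w for w in workers if f"[{w.role}]" in task.description)


unassigned = [Task(t.description, t.expected_output) for t in crew]
print(run_hierarchical(keyword_manager, unassigned, [writer, planner])[-1])
```

Both runs produce the same draft here; the difference is who decides the assignment. In hierarchical mode, that decision becomes an extra model call in a real system.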

Use Cases:

- Content Creation Team: Planner (generates outline), Writer (drafts), Editor (polishes), and Publishing Manager (formatting) working in sync.

- Market Analysis Reports: Researchers, Data Analysts, Insight Summarizers, and PPT Makers completing tasks in a relay.

- Agile Project Management: Product Manager, Dev, and QA Agents simulating a sprint process.

Note: Its process is relatively structured. For scenarios requiring extremely free, dynamic dialogue (like an open debate), it may not be as flexible as AutoGen.

A common mistake is setting grand role descriptions (e.g., "Senior Market Expert") without specific responsibilities or output constraints, leading to result drift. Suggestion: Define "Input → Output Format → Criteria" clearly within the Agent, and keep expected_output for Tasks structured (e.g., Markdown templates or JSON fields).
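A structured `expected_output` is easiest to enforce when the result is checked mechanically before the next agent consumes it. This is a minimal stdlib sketch, assuming the task was asked to return a JSON object. The field names and the `check_output` helper are hypothetical, and CrewAI's own structured-output options (see its documentation) may make part of this unnecessary.

```python
import json

# Hypothetical output contract for a "market summary" task: field -> allowed JSON types.
EXPECTED_FIELDS = {"market": (str,), "growth_pct": (int, float), "key_risks": (list,)}


def check_output(raw: str) -> tuple[bool, list[str]]:
    """Return (ok, problems) for an agent's raw text against EXPECTED_FIELDS."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False, ["output is not valid JSON"]
    if not isinstance(data, dict):
        return False, ["top-level value must be a JSON object"]
    problems = []
    for name, types in EXPECTED_FIELDS.items():
        if name not in data:
            problems.append(f"missing field: {name}")
        elif not isinstance(data[name], types):
            problems.append(f"{name} has wrong type {type(data[name]).__name__}")
    return not problems, problems


good = '{"market": "EU EV chargers", "growth_pct": 12.5, "key_risks": ["grid capacity"]}'
bad = '{"market": "EU EV chargers", "growth_pct": "high"}'
print(check_output(good))  # → (True, [])
print(check_output(bad))   # → (False, ['growth_pct has wrong type str', 'missing field: key_risks'])
```

The problem list can be fed back to the agent as a correction prompt, which can help curb the result drift described above.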

AutoGen: The Choice for Multi-Agent Conversation Research

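Core Advantage: Flexibility in conversation orchestration and research depth. The heart of classic AutoGen (the 0.2 API) is the ConversableAgent, which collaborates by sending messages. It offers rich conversation patterns (like GroupChat with GroupChatManager) and customizable "Human-in-the-loop" hooks, making it ideal for exploratory multi-agent interaction research. Notably, Microsoft's AutoGen 0.4 redesign, released in early 2025, rebuilt the framework on an asynchronous, event-driven architecture with support for multimodal messages, and AutoGen Studio provides a low-code visual interface on top of it. Magentic-One, often mentioned alongside it, is a generalist multi-agent system built on AutoGen rather than a version of the framework.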

Use Cases:

- Academic Research: Simulating multiple AI experts with different backgrounds discussing a problem.

- Complex Dialogue Systems: Building immersive RPG NPCs or interactive stories that require multiple AI roles.

- Code Generation & Review: Creating a "Programmer" Agent and a "Reviewer" Agent that optimize code through multi-turn dialogue.

Note: Configuration and debugging are complex. It leans toward exploration and interaction rather than fixed pipelines, requiring extra engineering constraints for deterministic production tasks.

Avoid "endless loops" where Agents keep questioning each other without a clear termination. Suggestion: Set a clear max_round or termination function, and use a "Summarizer Agent" to converge on a conclusion.
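Both safeguards fit in a few lines. In AutoGen's classic 0.2 API, `GroupChat` takes a `max_round` argument and agents accept an `is_termination_msg` callable. The loop below is not AutoGen code but a plain-Python sketch of the same idea: hypothetical speaker functions stand in for LLM-backed agents, and a summarizer closes the discussion.

```python
from typing import Callable

Speaker = Callable[[list[str]], str]  # reads the transcript, returns a reply (an LLM in practice)


def group_chat(speakers: dict[str, Speaker], opening: str, max_round: int,
               is_termination: Callable[[str], bool],
               summarize: Callable[[list[str]], str]) -> tuple[str, int]:
    """Round-robin chat that stops on a termination message or after max_round turns,
    then asks a summarizer to converge on one conclusion."""
    transcript = [opening]
    names = list(speakers)
    rounds = 0
    while rounds < max_round:
        name = names[rounds % len(names)]
        reply = speakers[name](transcript)
        transcript.append(f"{name}: {reply}")
        rounds += 1
        if is_termination(reply):
            break
    return summarize(transcript), rounds


def programmer(t: list[str]) -> str:
    # Produces a new patch version each turn.
    return f"patch v{sum('programmer:' in m for m in t) + 1}"


def reviewer(t: list[str]) -> str:
    # Approves only once the second patch arrives.
    return "APPROVED" if "patch v2" in t[-1] else "please fix the edge case"


summary, used = group_chat(
    {"programmer": programmer, "reviewer": reviewer},
    opening="task: fix the date-parsing bug",
    max_round=10,
    is_termination=lambda m: "APPROVED" in m,
    summarize=lambda t: f"agreed after {len(t) - 1} messages on {t[-2]}",
)
print(summary)  # → agreed after 4 messages on programmer: patch v2
```

If the reviewer never approved, the loop would still stop after `max_round` turns and hand whatever transcript exists to the summarizer.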

Semantic Kernel: The Steady Choice for Enterprise Integration

Core Advantage: Enterprise-grade features and seamless Microsoft integration. Developed by Microsoft, it natively supports Azure OpenAI, Azure AI Search, and other cloud services, excelling in security, authentication, and observability (via Azure Monitor). Its multi-language SDK (Python, C#, Java) is a powerful tool for large enterprises to unify AI development across heterogeneous tech stacks. In 2025, Semantic Kernel emphasized the "Agentic SDK" concept, focusing on distributed transaction safety.

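Core Concepts: Plugin (a named group of Functions; earlier releases called these skills), Planner (automatically plans which functions to call; newer releases increasingly rely on the model's native function calling for this step), and Memory (vector storage).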
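The Plugin/Planner division can be sketched in plain Python. This is not the Semantic Kernel SDK: `Kernel`, `add_plugin`, `keyword_planner`, and `execute` here are hypothetical. The CRM and email functions are placeholders for wrapped business APIs, and the keyword-matching planner is a deliberately naive stand-in for an LLM that chooses functions from their descriptions.

```python
from typing import Callable

Fn = Callable[[str], str]


class Kernel:
    """Toy registry: a plugin is a named group of described functions."""

    def __init__(self) -> None:
        self.functions: dict[str, Fn] = {}
        self.descriptions: dict[str, str] = {}

    def add_plugin(self, plugin: str, funcs: dict[str, tuple[str, Fn]]) -> None:
        for fname, (desc, fn) in funcs.items():
            key = f"{plugin}.{fname}"
            self.functions[key] = fn
            self.descriptions[key] = desc  # the planner only sees descriptions


def keyword_planner(goal: str, descriptions: dict[str, str]) -> list[str]:
    """Naive planner: select functions whose description shares a word with the goal."""
    goal_words = set(goal.lower().split())
    return [key for key, desc in descriptions.items() if goal_words & set(desc.lower().split())]


def execute(kernel: Kernel, plan: list[str], data: str) -> str:
    for key in plan:  # run planned functions in order, piping each output into the next
        data = kernel.functions[key](data)
    return data


kernel = Kernel()
kernel.add_plugin("crm", {"lookup": ("find customer record",
                                     lambda name: f"{name} <{name.lower()}@example.com>")})
kernel.add_plugin("email", {"draft": ("draft email reply",
                                      lambda rec: f"Draft to {rec}: thanks for your message.")})
plan = keyword_planner("find the customer and draft a reply", kernel.descriptions)
print(plan)  # → ['crm.lookup', 'email.draft']
print(execute(kernel, plan, "Ada"))  # → Draft to Ada <ada@example.com>: thanks for your message.
```

Because the planner sees only function descriptions, precise descriptions matter as much as the functions themselves.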

Use Cases:

- Enterprise Digital Transformation: Wrapping existing CRM/ERP APIs into Plugins, allowing AI Agents to safely operate on business data.

- Building Internal Copilots: Deep integration with Microsoft 365 to provide smart assistants for employees.

- High-Compliance Industries: Finance and Healthcare, where strict access control, audit logs, and data governance are mandatory.

Note: If your team isn't tied to the Microsoft ecosystem (.NET, Azure), some of its advantages may be lost. Its community is also smaller than those of the Python-led frameworks mentioned above.

Part 4: Practical Selection Guide

To quickly choose the right framework among these five, you can use a decision tree or consider team skills, project phase, and future scalability.

Decision Tree: Based on Your Core Needs

First, ask yourself: What is the core problem I am solving with an AI Agent?

- Is the need to "quickly validate an AI idea" or "entry-level learning"?
  - Yes → Choose LangChain. Rich ecosystem, easy to find examples.
  - No → Go to the next question.

- Is the core scenario "simulating a team (e.g., Marketing, R&D) to complete tasks"?
  - Yes → Choose CrewAI. Its role-task model fits best.
  - No → Go to the next question.

- Is the core scenario "building a business process with complex logic, loops, and state" (e.g., approvals, tickets)?
  - Yes → Choose LangGraph. Graph state machines are the ultimate weapon here.
  - No → Go to the next question.

- Is the core scenario "researching multi-agent dialogue/debates or requiring highly flexible interaction"?
  - Yes → Choose AutoGen.
  - No → Go to the next question.

- Is your project within the Microsoft stack, or do you have extreme requirements for security and compliance?
  - Yes → Choose Semantic Kernel.
  - No → Revisit the first question, or start with LangChain or CrewAI.
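The tree above is an ordered series of yes/no checks where the first "yes" wins. Encoding it as a function makes that precedence explicit. `pick_framework` is a hypothetical helper and not part of any framework.

```python
def pick_framework(quick_prototype: bool = False, team_simulation: bool = False,
                   stateful_workflow: bool = False, dialogue_research: bool = False,
                   microsoft_or_compliance: bool = False) -> str:
    """Walk the decision tree in order; the first question answered 'yes' decides."""
    questions = [
        (quick_prototype, "LangChain"),
        (team_simulation, "CrewAI"),
        (stateful_workflow, "LangGraph"),
        (dialogue_research, "AutoGen"),
        (microsoft_or_compliance, "Semantic Kernel"),
    ]
    for answer, framework in questions:
        if answer:
            return framework
    return "LangChain or CrewAI"  # no 'yes': revisit the first question or start simple


print(pick_framework(stateful_workflow=True))  # → LangGraph
print(pick_framework(team_simulation=True, stateful_workflow=True))  # → CrewAI
```

The second call exposes a limit of any strictly ordered tree: a project that is both team-shaped and heavily stateful lands on CrewAI only because that question comes first. Treat the output as a starting point rather than a verdict.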

Selection by Team and Project Phase

| Framework | Recommended For | Not Recommended For |
|---|---|---|
| LangChain | Personal learning, startup prototypes, POCs requiring many integrations. | Extremely complex, strict state-logic production workflows. |
| LangGraph | Mid-to-large teams developing complex business flows (e.g., e-commerce orders, risk audits). | Simple one-off scripts or scenarios where visual flow is irrelevant. |
| CrewAI | Projects focused on "role division" and "team output" (e.g., content creation). | Fine-grained control of internal reasoning or highly non-linear, dynamic flows. |
| AutoGen | Academic labs exploring multi-agent mechanisms or experimental dialogue systems. | Stable, predictable output for production tasks. |
| Semantic Kernel | Large enterprises using .NET/C# and Azure with strong security/audit needs. | Individual developers or startups seeking maximum speed and community support. |

Quick Selection Recommendations

For rapid prototyping: LangChain

For complex workflows: LangGraph

For team collaboration: CrewAI

For researching multi-agent conversations: AutoGen

For enterprise-level systems: Semantic Kernel

Part 5: Performance and Cost Considerations

In AI Agent systems, performance depends not just on the framework, but on LLM model calls, workflow strategy, task complexity, and deployment architecture.

Performance Benchmarks and Real-world Behavior

LLM Calls are the Main Bottleneck: The number of LLM calls and context length are the primary factors affecting performance and cost. For simple tasks, framework performance differences are negligible; the bottleneck is LLM latency and token consumption.

In other words, in simple processes like "generate content in one step → one tool call → return result," it's difficult to clearly see the performance differences of the framework itself.
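A back-of-the-envelope calculation shows why call count and context length dominate. The per-million-token prices below are placeholders, not any provider's actual rates, and the sketch assumes an agent loop that re-sends its growing history on every call.

```python
# Placeholder prices in USD per million tokens; substitute your provider's current rates.
PRICE_IN_PER_M = 2.0
PRICE_OUT_PER_M = 8.0


def call_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of one LLM call."""
    return (input_tokens * PRICE_IN_PER_M + output_tokens * PRICE_OUT_PER_M) / 1_000_000


# One-shot task: a single call with 2,000 input and 500 output tokens.
single = call_cost(2_000, 500)

# Six-step agent loop: the prompt grows by ~500 tokens per step as history accumulates.
loop = sum(call_cost(2_000 + 500 * step, 500) for step in range(6))

print(f"{single:.3f} {loop:.3f}")  # → 0.008 0.063
```

Under these assumptions, the loop costs nearly eight times the one-shot task: six calls account for most of it, and the growing context adds the rest.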

Framework Behavior Impacts Cost in Complex Scenarios:

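LangGraph reduces redundant calls through explicit state management, graph-based workflows (which, unlike DAGs, may contain cycles), and checkpointing: after a failure, a run can resume from the last saved state instead of starting over, lowering token and time costs during error recovery.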

CrewAI's hierarchical flow may generate extra calls when Agents exchange feedback or coordinate steps.

Semantic Kernel's Planner typically makes a planning call before executing a task, which adds extra model calls ("hotspots") that may be unnecessary for fixed, simple flows.

Development and Maintenance Costs

Learning Curve Ranking (Community Feedback): AutoGen > LangGraph ≈ Semantic Kernel > LangChain > CrewAI

This reflects a spectrum ranging from the most complex to the easiest to use: AutoGen offers high flexibility but has a high barrier to entry in terms of setup and debugging; CrewAI, due to its role abstraction, is more intuitive and beginner-friendly.

Maintenance:

LangChain & CrewAI are intuitive for simple-to-medium tasks, making them easier for team collaboration or handovers.

LangGraph can become hard to maintain if the graph becomes massive with too many nodes/branches without proper documentation.

Semantic Kernel often requires existing infrastructure expertise (Azure DevOps, security compliance).

Cloud Deployment and Running Costs

Cold Start and Memory Consumption: A framework's runtime abstraction layer inevitably adds to memory usage. Lighter designs (such as calling a model provider's Python SDK directly) usually have faster cold starts, while frameworks with more complex stateful or Planner modules may require additional memory.

Containerization and Serverless Friendliness: Currently, all major frameworks are optimizing for containerized deployment (such as Docker/Kubernetes) and serverless modes. This means that costs can be further reduced through resource isolation, automatic scaling, etc., but this also depends on correct engineering practices.

Token and Model Costs: Even within the same framework, token prices vary significantly across models: more powerful models like GPT-4.1 are far more expensive than lighter variants, so check each provider's current pricing. Choosing the right model and controlling context length are key steps in cost optimization.
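Controlling context length often comes down to trimming conversation history to a token budget. This sketch keeps the system prompt plus the newest messages that fit. It approximates tokens by counting words, an assumption for illustration only (real budgets should use the model's own tokenizer), and `trim_history` is a hypothetical helper.

```python
def approx_tokens(text: str) -> int:
    # Rough stand-in: one token per whitespace-separated word.
    return len(text.split())


def trim_history(system: str, messages: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus the most recent messages that fit within `budget`."""
    used = approx_tokens(system)
    kept: list[str] = []
    for msg in reversed(messages):  # walk from newest to oldest
        cost = approx_tokens(msg)
        if used + cost > budget:
            break  # this message and everything older are dropped
        kept.append(msg)
        used += cost
    return [system] + kept[::-1]  # restore chronological order


history = ["an early long message with many words in it", "one two three", "latest question here"]
print(trim_history("You are a helpful agent.", history, budget=12))
# → ['You are a helpful agent.', 'one two three', 'latest question here']
```

Dropping the oldest turns outright is the simplest policy; a common refinement replaces them with a short summary, at the cost of one extra model call.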

Part 6: Future Trends (2025–2026)

As AI agent technology continues to mature, the ecosystem is gradually evolving from single tools and frameworks to a larger-scale, more autonomous, more specialized, and more engineered architectural system. The following are several key trends to watch in 2025–2026:

1. Framework Integration and the Emergence of a "Meta-Coordination Layer"

The AI Agent ecosystem is evolving from "individual frameworks operating in isolation" to "interconnectivity and unified governance." The future will likely see the emergence of a "meta-coordination layer" capable of scheduling, monitoring, and coordinating different Agent stacks across frameworks, enabling enterprises to share data, execution strategies, and security policies across different Agent engines. For example, consulting firm PwC has launched a middleware platform that can coordinate agents from different sources; this type of "AI Agent OS" is becoming a key component of enterprise multi-agent architectures.

2. Low-Code/Visual Orchestration Will Become Standard

Lowering the barrier to entry for AI Agent use is crucial for widespread adoption. Low-code and visual orchestration tools—not just simple drag-and-drop interfaces, but those integrating task workflows, state machine visualization, debugging tools, and automatic error prompts—are rapidly growing in the market. For example, drag-and-drop Agent Builder tools from GitHub and other platforms promise to allow developers to build complex agent workflows without extensive programming. This trend will transform AI Agents from a specialized tool for engineering teams into a productivity platform accessible to business and product teams, driving broader adoption across various business scenarios.

3. Rise of Vertical-Specific AI Agent Frameworks and Solutions

While general frameworks are important, different vertical industries have varying needs regarding compliance, security, and scenario-specific features. We expect to see more industry-specific AI Agent frameworks or platforms, such as dedicated solutions for financial compliance agents, medical intelligent assistants, legal document agents, and manufacturing/game process automation. These frameworks will be more mature in terms of built-in industry knowledge, rule engines, and auditing mechanisms.

4. Deep Integration with AI-Native Development Paradigms

In the future, AI Agents will no longer be features added to traditional applications, but will become the fundamental paradigm for AI-native application development. This means that Agent frameworks will be more tightly integrated with concepts such as workflow programming, event-driven systems, prompt engineering techniques (such as ReAct, Chain-of-Thought reasoning, etc.), and unified interfaces for different data modalities (text, images, structured data). This evolution will drive the transformation of Agents from "task executors" to "intelligent business components," becoming the core foundation of next-generation application development.

5. Diverging Trends in Open Source and Commercialization

The AI agent ecosystem will continue to maintain its open-source innovation vitality, but mainstream frameworks are also accelerating the release of commercial versions and managed services to meet enterprise-level needs. For example, mature framework ecosystems are seeing the emergence of value-added service modules such as monitoring, hosting, automatic scaling, security policies, and audit logs. This development model is similar to the commercialization path of basic software in the past. The open-source side focuses on innovation and community ecosystem expansion, while commercial versions serve enterprise users who demand stability, security, and observability.

6. Improved Agent Standardization, Security, and Authorization Mechanisms

As various industries begin to deploy AI Agents in production environments, security and authorization mechanisms will become a key technical focus. Current Agents require more secure and controllable authorization protocols when accessing external systems and user data, driving the gradual formation of new standards (such as unified API authentication, secure sandboxes, and trusted execution environments). For enterprise-level deployments, these standardization mechanisms will become important indicators for evaluating framework selection and implementation capabilities.

7. Rapid Market Growth and Project Integration Pressure

Industry analysts predict that although many AI Agent projects may be abandoned due to cost and business model issues, the overall market demand and investment enthusiasm for Agent technology will continue to grow from 2026 to 2028. More and more enterprises plan to incorporate AI Agents into their core business processes, driving a shift from the "pilot phase" to the "large-scale application phase."

Conclusion: No "Best" Framework, Only the "Right" Choice

The world of AI Agents is evolving rapidly. Choosing a framework is essentially choosing a mindset for building complex intelligent systems.

Our final advice:

- Define the Core Scenario: Map out your ideal Agent workflow before picking a tool.

- Validate Quickly: Use 1-2 weeks to implement a core module in a candidate framework to test the experience.

- Evaluate Team & Ecosystem: Consider your technical background and required integrations.

- Look Ahead: Ensure the framework's roadmap aligns with your product's direction.

Deeply understanding the design philosophy of any framework and starting to build is more valuable than waiting for a "perfect" one.

Frequently Asked Questions (FAQ)

Q1: Which should I learn first, LangChain or LangGraph? It's recommended to first master the core concepts of LangChain (Tools, Chains, Agents), as this is the foundation. Learn LangGraph when you need to build complex workflows. They are complementary.

Q2: Which framework is best for a startup company? CrewAI or LangChain. If your product idea naturally involves "multi-agent collaboration" (like an AI company), choose CrewAI; if you need to quickly iterate and integrate with various APIs, choose LangChain.

Q3: How much Python knowledge is required? At least an intermediate level of Python is needed, including familiarity with asynchronous programming, decorators, and Pydantic models. For Semantic Kernel, if using the C# version, you'll need corresponding .NET knowledge.

Q4: Can these frameworks be used in a production environment? Yes, but it requires additional investment. LangGraph + LangSmith, CrewAI, and Semantic Kernel all have good production-ready features. The key is to have robust error handling, logging, monitoring, and rollback mechanisms.
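As one small example of that error handling, a transient failure in an LLM or tool call can be retried with exponential backoff and logged. This is a generic stdlib sketch, not a feature of any framework discussed here. `call_with_retry` is hypothetical, and the bare `Exception` catch should be narrowed to your provider's transient error types.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("agent")


def call_with_retry(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `fn`, retrying failures with delays of base_delay, 2*base_delay, ..."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception as exc:  # narrow to transient errors in real code
            log.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise  # out of retries: surface the error to monitoring/rollback logic
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")


delays: list[float] = []
counter = {"n": 0}


def flaky() -> str:
    counter["n"] += 1
    if counter["n"] < 3:
        raise TimeoutError("model timeout")  # simulated transient failure
    return "ok"


print(call_with_retry(flaky, sleep=delays.append), delays)  # → ok [0.5, 1.0]
```

Injecting `sleep` keeps the helper testable without real waiting; in production, the default `time.sleep` applies.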

Q5: Is Chinese-language documentation and community support available? LangChain and CrewAI have relatively active Chinese communities (e.g., Zhihu columns, technical blogs, WeChat groups). Chinese resources for LangGraph and AutoGen are growing rapidly. Chinese resources for Semantic Kernel are mainly provided by Microsoft.

Q6: Is the cost of migrating between frameworks high if the initial choice is wrong? Yes, it's quite high. The design paradigms of different frameworks vary significantly, and migration means rewriting the core business logic orchestration part. Therefore, initial framework selection is crucial. It's recommended to start with small prototypes to verify framework suitability.

About the Author

This content is curated by the NavGood Editorial Team. NavGood is a platform dedicated to the AI tool ecosystem, tracking the development of AI Agents and automated workflows.

Disclaimer: This article does not represent the official stance of any framework and does not constitute commercial or investment advice. The experimental data presented is based on a specific testing environment and is for comparative reference only; actual results in a production environment may differ.

Resources

- Official Links:
  - LangChain
  - LangGraph
  - CrewAI
  - AutoGen
  - Semantic Kernel