The Difference Between Prompts in English and Other Languages: How Language Shapes AI Interaction

When interacting with generative AI tools like ChatGPT, Midjourney, or DALL·E, users often rely on AI prompts—short, descriptive texts that guide the model’s output.

But how does the language of your prompt affect the result? This article explores how prompts written in English differ from those written in other languages such as Spanish, Chinese, and Japanese. It includes illustrative examples and best practices for readers who want to improve their prompt engineering.

Why Language Matters in AI Prompts

English has become the de facto language for interacting with large language models (LLMs), but it’s not the only option. While most advanced AI models are trained primarily on English datasets, many are multilingual to a degree. However, the performance, accuracy, and nuance of the AI’s output can vary significantly depending on the language used in the prompt.

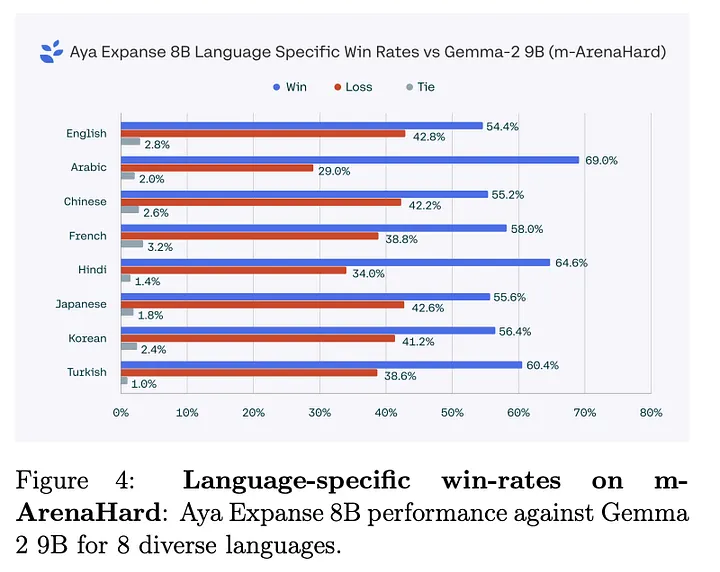

A comparative chart showing multilingual model performance on translation and reasoning tasks.

How AI Models Are Trained

Most foundation models like GPT (Generative Pre-trained Transformer), PaLM (Pathways Language Model), or Claude are trained using large corpora primarily in English. A 2023 study by Hugging Face showed that over 60% of the pretraining data for common LLMs is in English, while other languages like Chinese (7%), German (4%), and Spanish (3%) follow far behind.

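This imbalance in training data helps explain why English prompts often yield more accurate and more nuanced results. English text also tends to split into fewer tokens than text in many other languages, which can make responses faster and cheaper to generate.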

English Prompts: Rich, Informative, Default

Because so much of the training data is in English, English is usually the most reliable language for communicating with a model.

Advantages

- Rich training data coverage

- Better understanding of idioms and cultural references

- More responsive to detailed instructions

Example

Prompt (English): "Write a persuasive product description for a new AI-powered writing tool targeting marketing teams."

Result: Typically clear, fluent, and persuasive content with a business tone.

Non-English Prompts: What Changes?

Spanish, French, and German

Prompts in these European languages perform reasonably well, thanks to their linguistic similarity to English and their relatively strong presence in training data.

- Spanish: Good for informal prompts and storytelling

- German: Structured and logical results

- French: Sometimes overly verbose

Example Comparison

Prompt (Spanish): "Escribe una descripción persuasiva de un nuevo software de escritura con inteligencia artificial para equipos de marketing."

This prompt often produces slightly more general, less punchy content than its English counterpart.

Chinese and Japanese

These languages pose greater challenges because their grammar and writing systems differ sharply from English and because they are less well represented in training data.

- Chinese: Results may lack fluency or include translation artifacts

- Japanese: May oversimplify or misinterpret nuance

Cultural and Semantic Impacts

Languages carry the cultural conventions of the regions where they are spoken, and those conventions shape how requests are phrased. For instance, Japanese prompts are often more indirect and polite, while English favors clarity and directness. This can influence how effectively an AI understands the intent behind a prompt.

Strategies to Improve Non-English Prompts

- Use English When Possible: Translate to English, prompt the AI, then translate the output.

- Simplify Syntax: Use short, clear sentences to reduce ambiguity.

- Provide Examples: Including examples helps models understand context.

- Back-Translate: Translate your prompt into English, verify its meaning, and revise if needed.
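The first and last strategies above can be sketched as a small pipeline. This is a minimal illustration, not a definitive implementation: `translate` and `generate` are hypothetical callables standing in for whatever translation service and model client you actually use, and their signatures are assumptions made for this example.

```python
from typing import Callable, Tuple

# Assumed signature: translate(text, source_lang, target_lang) -> str
Translator = Callable[[str, str, str], str]
# Assumed signature: generate(prompt) -> str
Generator = Callable[[str], str]


def prompt_via_english(prompt: str, lang: str,
                       translate: Translator, generate: Generator) -> str:
    """Translate the prompt to English, run the model, translate the answer back."""
    if lang == "en":
        # Already English: no pivot needed.
        return generate(prompt)
    english_prompt = translate(prompt, lang, "en")
    english_output = generate(english_prompt)
    return translate(english_output, "en", lang)


def back_translation_check(prompt: str, lang: str,
                           translate: Translator) -> Tuple[str, str]:
    """Return (english_prompt, round_trip) for manual comparison.

    If round_trip no longer means what the original prompt meant,
    the prompt is probably ambiguous and worth revising.
    """
    english_prompt = translate(prompt, lang, "en")
    round_trip = translate(english_prompt, "en", lang)
    return english_prompt, round_trip
```

Passing the translator and generator in as arguments keeps the sketch independent of any particular vendor, and it makes the flow easy to try out with stub functions before wiring in real services.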

Mixed Language Prompts: Best of Both Worlds?

Some users experiment with mixing English and their native language within a single prompt. This can enhance clarity, but it can also confuse models that were not trained on code-switched text.

Example

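"请用中文写一段产品介绍,参考如下英文语气:'Elevate your writing game with our AI-powered assistant.'"

(In English: "Please write a product introduction in Chinese, following the tone of this English line: 'Elevate your writing game with our AI-powered assistant.'")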

This approach may work best with strongly multilingual models like GPT-4.

Implications for SEO and Global Communication

Understanding how language impacts prompt effectiveness can help SEO writers, content creators, and AI marketers.

- Use English to refine the initial output

- Localize content after getting high-quality English results

- Test prompts in multiple languages for A/B comparison
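The A/B comparison in the last bullet can be sketched as a small harness. This is only an illustration under stated assumptions: `generate` is a hypothetical stand-in for your model call, and `score` is a hypothetical quality metric (a human rating, a rubric, or an automated check) that you would need to supply yourself.

```python
from typing import Callable, Dict, List, Tuple


def compare_prompt_variants(
    variants: Dict[str, str],
    generate: Callable[[str], str],
    score: Callable[[str], float],
) -> List[Tuple[str, float, str]]:
    """Run one prompt per language and rank the outputs, best score first.

    variants maps a language code (e.g. "en", "es") to the prompt
    written in that language.
    """
    results = []
    for lang, prompt in variants.items():
        output = generate(prompt)
        results.append((lang, score(output), output))
    # Highest score first, so results[0] is the winning variant.
    results.sort(key=lambda r: r[1], reverse=True)
    return results
```

Because model output varies from run to run, a single comparison says little. Repeating each variant several times and averaging the scores gives a fairer picture.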

Model Selection Tips

Because a large language model is shaped by the languages in its training data, you can choose a model to match your needs when creating content or automating tasks. For example, to generate articles in Chinese, you might choose a Chinese-developed model such as DeepSeek; to create English content, you might choose a model such as ChatGPT or Gemini.
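In an automated workflow, this selection can be expressed as a simple lookup. The sketch below is hypothetical: the model names are plain labels taken from the examples above, not real API identifiers, and the default choice is an assumption made for illustration.

```python
# Labels only, not real API model identifiers (assumption for illustration).
MODEL_BY_LANGUAGE = {
    "zh": "deepseek",
    "en": "chatgpt",
}
DEFAULT_MODEL = "chatgpt"  # assumed fallback for languages not listed


def pick_model(content_lang: str) -> str:
    """Pick a model label from a language tag such as "zh", "zh-CN", or "en-US"."""
    # Keep only the primary language subtag: "zh-CN" -> "zh".
    primary = content_lang.lower().split("-")[0]
    return MODEL_BY_LANGUAGE.get(primary, DEFAULT_MODEL)
```

For example, `pick_model("zh-CN")` returns `"deepseek"`, while a language that is not in the table falls back to the default.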

Conclusion: One Prompt Doesn’t Fit All

Your choice of language affects not only the AI’s performance but also the tone, relevance, and quality of output. For best results, tailor your prompts to the model’s strengths, and consider starting in English before localizing.

Published by Global AI Insights