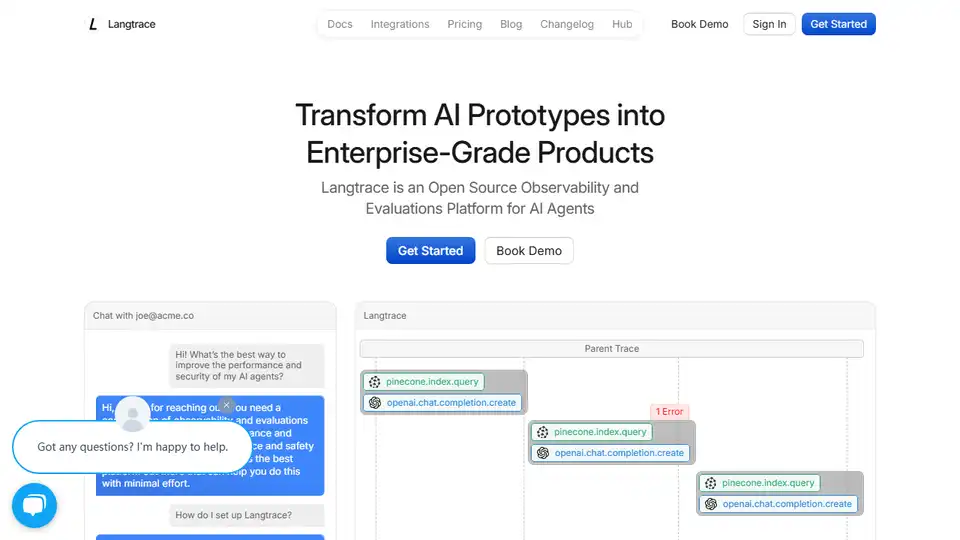

Langtrace: Open Source Observability and Evaluations Platform for AI Agents

What is Langtrace?

Langtrace is an open-source platform designed to provide observability and evaluation capabilities for AI agents, particularly those powered by Large Language Models (LLMs). It helps developers and organizations measure performance, improve security, and iterate towards better and safer AI agent deployments.

How does Langtrace work?

Langtrace works by integrating with your AI agent's code using simple SDKs in Python and TypeScript. It then traces and monitors various aspects of the agent's operation, including:

- Token usage and cost: Track the number of tokens used and the associated cost.

- Inference latency: Measure the time taken for the agent to generate responses.

- Accuracy: Evaluate the accuracy of the agent's outputs using automated evaluations and curated datasets.

- API requests: Automatically trace calls across your GenAI stack and surface relevant metadata.
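
As a rough illustration of the kind of data listed above, the following sketch records token usage, cost, and latency for a single model call. It does not use the Langtrace SDK: `TraceRecord`, `traced_call`, `fake_model`, and the per-token prices are all hypothetical names and values chosen for the example.

```python
import time
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical prices in USD per 1,000 tokens; real prices depend on the provider.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015


@dataclass
class TraceRecord:
    """One traced model call: token counts plus wall-clock latency."""
    name: str
    input_tokens: int
    output_tokens: int
    latency_s: float

    @property
    def cost_usd(self) -> float:
        # Cost is derived from token counts and the assumed prices above.
        return (
            self.input_tokens / 1000 * PRICE_PER_1K_INPUT
            + self.output_tokens / 1000 * PRICE_PER_1K_OUTPUT
        )


# A model function returns (text, input_tokens, output_tokens).
ModelFn = Callable[[str], Tuple[str, int, int]]


def traced_call(name: str, fn: ModelFn, prompt: str) -> Tuple[str, TraceRecord]:
    """Run fn(prompt) and return its text along with a TraceRecord."""
    start = time.perf_counter()
    text, input_tokens, output_tokens = fn(prompt)
    latency = time.perf_counter() - start
    return text, TraceRecord(name, input_tokens, output_tokens, latency)


def fake_model(prompt: str) -> Tuple[str, int, int]:
    """Stand-in for an LLM call; counts whitespace-separated words as tokens."""
    reply = "ok ok ok"
    return reply, len(prompt.split()), len(reply.split())
```

Calling `traced_call("demo", fake_model, "hello world from me")` returns the reply together with a record whose fields could be sent to a dashboard. A real tracer would read token counts from the provider's response instead of counting words.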

This data is then visualized in dashboards, allowing you to gain insights into your agent's performance and identify areas for improvement.

Key Features

- Simple Setup: Langtrace offers a non-intrusive setup that can be integrated with just a few lines of code.

- Real-Time Monitoring: Dashboards provide real-time insights into key metrics like token usage, cost, latency, and accuracy.

- Evaluations: Langtrace facilitates the measurement of baseline performance and the creation of datasets for automated evaluations and fine-tuning.

- Prompt Version Control: Store, version control, and compare the performance of prompts across different models using the playground.

- Enterprise-Grade Security: Langtrace follows enterprise-grade security protocols and is SOC 2 Type II certified.

- Open Source: The open-source nature of Langtrace allows for customization, auditing, and community contributions.
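
To make the evaluations feature more concrete, here is a minimal sketch of measuring baseline accuracy against a small curated dataset. It is not Langtrace's evaluation API: `exact_match_accuracy`, `toy_agent`, and `CURATED` are hypothetical names, and exact-match scoring is only one simple metric among many.

```python
from typing import Callable, List, Tuple

# A curated dataset is modeled here as (question, expected answer) pairs.
Dataset = List[Tuple[str, str]]


def exact_match_accuracy(agent: Callable[[str], str], dataset: Dataset) -> float:
    """Fraction of questions whose answer matches the expected text.

    Comparison ignores surrounding whitespace and letter case.
    """
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = sum(
        1
        for question, expected in dataset
        if agent(question).strip().lower() == expected.strip().lower()
    )
    return correct / len(dataset)


def toy_agent(question: str) -> str:
    """Stand-in for an AI agent; answers from a fixed lookup table."""
    answers = {"2+2": "4", "capital of France": "Paris"}
    return answers.get(question, "unknown")


CURATED: Dataset = [
    ("2+2", "4"),
    ("capital of France", "paris"),
    ("largest planet", "Jupiter"),
]
```

Running `exact_match_accuracy(toy_agent, CURATED)` gives the baseline score for this toy agent. Re-running the same dataset after a prompt or model change shows whether the change helped.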

Supported Frameworks and Integrations

Langtrace supports a variety of popular LLM frameworks and vector databases, including:

- CrewAI

- DSPy

- LlamaIndex

- LangChain

- A wide range of LLM providers

- Vector databases

Deploying Langtrace

Deploying Langtrace takes three steps: create a project, generate an API key, and install the appropriate SDK. You then initialize the SDK with the API key. Code examples are available for both Python and TypeScript.
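
The setup steps above can be sketched in Python as follows. The package name `langtrace-python-sdk`, the `langtrace.init(api_key=...)` call, and the `LANGTRACE_API_KEY` environment variable name are assumptions drawn from the SDK's public quickstart rather than from this page, so verify them against the current documentation. The helper `init_langtrace` is hypothetical.

```python
import os
from typing import Optional


def init_langtrace(api_key: Optional[str] = None) -> bool:
    """Try to initialize Langtrace; return True only if init was called.

    Assumes the SDK is installed with `pip install langtrace-python-sdk`
    and exposes `langtrace.init(api_key=...)`.
    """
    # Fall back to an environment variable (assumed name) if no key is passed.
    key = api_key or os.environ.get("LANGTRACE_API_KEY")
    if not key:
        return False  # no API key available, so tracing stays off
    try:
        from langtrace_python_sdk import langtrace  # assumed import path
    except ImportError:
        return False  # SDK not installed in this environment
    langtrace.init(api_key=key)
    return True
```

Returning `False` instead of raising lets an application run unchanged when the key or the SDK is missing, which suits local development. In practice the initialization is usually placed at program start, before the LLM client libraries are imported, so that their calls can be instrumented.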

Why is Langtrace important?

Langtrace is important because it helps address the challenges of deploying AI agents in real-world scenarios. By providing observability and evaluation capabilities, Langtrace enables organizations to:

- Improve performance: Identify and address performance bottlenecks.

- Reduce costs: Optimize token usage and minimize expenses.

- Enhance security: Protect data with enterprise-grade security protocols.

- Ensure compliance: Meet stringent compliance requirements for data protection.

Who is Langtrace for?

Langtrace is for any organization or individual developing and deploying AI agents, including:

- AI developers

- Machine learning engineers

- Data scientists

- Enterprises adopting AI

User Testimonials

Users have praised Langtrace for its ease of integration, intuitive setup, and valuable insights.

- Adrian Cole, Principal Engineer at Elastic, remarked on how Langtrace's open-source community coexists with others in a competitive space.

- Aman Purwar, Founding Engineer at Fulcrum AI, highlighted the easy and quick integration process.

- Steven Moon, Founder of Aech AI, emphasized that Langtrace has a concrete plan for helping businesses with privacy through on-premises installs.

- Denis Ergashbaev, CTO of Salomatic, found Langtrace easy to set up and intuitive for their DSPy-based application.

Getting Started with Langtrace

To get started with Langtrace:

- Visit the Langtrace website.

- Explore the documentation.

- Join the community on Discord.

Langtrace empowers you to transform AI prototypes into enterprise-grade products by providing the tools and insights you need to build reliable, secure, and performant AI agents. It is a valuable resource for anyone working with LLMs and AI agents.

Best Alternative Tools to "Langtrace"

Arize AI provides a unified LLM observability and agent evaluation platform for AI applications, from development to production. Optimize prompts, trace agents, and monitor AI performance in real time.

The AI Engineer Pack by ElevenLabs is the AI starter pack every developer needs. It offers exclusive access to premium AI tools and services like ElevenLabs, Mistral, and Perplexity.

Velvet, acquired by Arize, provided a developer gateway for analyzing, evaluating, and monitoring AI features. Arize is a unified platform for AI evaluation and observability, helping accelerate AI development.

Lunary is an open-source LLM engineering platform providing observability, prompt management, and analytics for building reliable AI applications. It offers tools for debugging, tracking performance, and ensuring data security.

Confident AI is an LLM evaluation platform built on DeepEval, enabling engineering teams to test, benchmark, safeguard, and enhance LLM application performance. It provides best-in-class metrics, guardrails, and observability for optimizing AI systems and catching regressions.

WhyLabs provides AI observability, LLM security, and model monitoring. It applies guardrails to generative AI applications in real time to mitigate risks.

Future AGI offers a unified LLM observability and AI agent evaluation platform for AI applications, from development to production. Its testing, evaluation, and optimization tools are aimed at helping enterprises achieve 99% accuracy and responsible AI.

Openlayer is an enterprise AI platform providing unified AI evaluation, observability, and governance for AI systems, from ML to LLMs. Test, monitor, and govern AI systems throughout the AI lifecycle.

Athina is a collaborative AI platform that helps teams build, test, and monitor LLM-based features 10x faster. With tools for prompt management, evaluations, and observability, it ensures data privacy and supports custom models.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

HoneyHive provides AI evaluation, testing, and observability tools for teams building LLM applications. It offers a unified LLMOps platform.

Infrabase.ai is the directory for discovering AI infrastructure tools and services. Find vector databases, prompt engineering tools, inference APIs, and more to build world-class AI products.

PromptLayer is an AI engineering platform for prompt management, evaluation, and LLM observability. Collaborate with experts, monitor AI agents, and improve prompt quality with powerful tools.