Arize AI

Overview of Arize AI

Arize AI: The LLM Observability & Evaluation Platform

What is Arize AI?

Arize AI is a platform for unified LLM observability and agent evaluation that lets AI teams build, evaluate, and monitor their AI applications in one place. It closes the loop between AI development and production: real production data feeds back into development, and production observability is aligned with the same evaluations teams trust during development.

How does Arize AI work?

Arize AI provides a suite of tools to help AI teams build and maintain high-quality AI applications:

Key Features:

- Agent Tracing: Trace agents and frameworks with speed, flexibility, and simplicity powered by OpenTelemetry (OTEL). This allows users to understand the execution flow of their AI agents and identify potential issues.

- LLM Evaluation: Evaluate prompts and agent actions at scale with LLM-as-a-Judge. This enables eval-driven development by automatically evaluating prompts and agent actions, ensuring consistent quality.

- Prompt Optimization: Optimize prompts automatically using evaluations and annotations. Make agents self-improving by continuously refining prompts based on performance data.

- Real-Time Monitoring: Monitor AI applications in real time with advanced analytical dashboards. Catch problems as they occur through online evaluations, in which AI evaluates AI outputs on live traffic.
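
To make the tracing and evaluation ideas above more concrete, here is a minimal, self-contained Python sketch. It is not the Arize or OpenTelemetry API: ToyTracer, Span, keyword_judge, and evaluate are hypothetical names invented for illustration, and the judge is a keyword check standing in for a real LLM call. It only shows the general shape of the workflow: record nested spans for an agent run, then apply a judge function to every span that captured an input and an output.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Span:
    """One timed step in an agent run (hypothetical, loosely OTEL-shaped)."""
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    attributes: Dict[str, str] = field(default_factory=dict)
    duration_s: float = 0.0


class ToyTracer:
    """Collects spans in memory; a stand-in for a real exporter."""

    def __init__(self) -> None:
        self.spans: List[Span] = []
        self._stack: List[Span] = []
        self._trace_id = uuid.uuid4().hex

    @contextmanager
    def span(self, name: str, **attributes: str):
        # The innermost open span, if any, becomes the parent.
        parent = self._stack[-1].span_id if self._stack else None
        current = Span(name, self._trace_id, uuid.uuid4().hex[:16],
                       parent, dict(attributes))
        self._stack.append(current)
        start = time.perf_counter()
        try:
            yield current
        finally:
            current.duration_s = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(current)  # spans are stored as they finish


def keyword_judge(question: str, answer: str) -> str:
    """Placeholder judge: a real one would prompt an LLM with a rubric."""
    return "correct" if "paris" in answer.lower() else "incorrect"


def evaluate(tracer: ToyTracer,
             judge: Callable[[str, str], str]) -> Dict[str, str]:
    """Label every span that recorded both an input and an output."""
    labels: Dict[str, str] = {}
    for s in tracer.spans:
        if "input" in s.attributes and "output" in s.attributes:
            labels[s.span_id] = judge(s.attributes["input"],
                                      s.attributes["output"])
    return labels


tracer = ToyTracer()
with tracer.span("agent_run"):
    with tracer.span("llm_call",
                     input="What is the capital of France?") as llm_span:
        # In a real agent this would be the model's response.
        llm_span.attributes["output"] = "The capital of France is Paris."

labels = evaluate(tracer, keyword_judge)
```

In a production setup the in-memory list would typically be replaced by an OpenTelemetry exporter and the keyword check by an LLM prompted with an evaluation rubric; the parent/child span structure and the "judge over recorded inputs and outputs" loop are the parts this sketch is meant to convey.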

Arize AX vs. Phoenix OSS

Arize offers two main products: Arize AX and Phoenix OSS.

- Arize AX: Observability built for enterprise, providing the power to manage and improve AI offerings at scale.

- Phoenix OSS: An open-source tool created by AI engineers for AI engineers, offering great exploratory analysis and model debugging capabilities.

Use Cases

Arize AI is used by leading AI teams across various industries to:

- Improve AI Agent Performance: Continuously monitor and evaluate AI agent performance to identify areas for improvement.

- Optimize Prompts: Automatically optimize prompts to enhance the accuracy and efficiency of AI applications.

- Debug and Troubleshoot Issues: Trace agent behavior and debug issues in real time to ensure smooth operation.

- Scale AI Applications: Manage and improve AI offerings at scale with enterprise-grade observability.

- Ensure Data Quality: Evaluate data quality and catch problems as they occur, using AI to evaluate AI outputs.

Why is Arize AI important?

In the rapidly evolving landscape of AI, ensuring the reliability, accuracy, and performance of AI applications is crucial. Arize AI provides the tools and insights needed to build trustworthy, high-performing AI systems.

Who is Arize AI for?

Arize AI is for:

- AI Engineers: To trace, debug, and improve AI models.

- MLOps Engineers: To monitor and manage AI performance in production.

- Data Scientists: To evaluate and optimize prompts and agent actions.

- AI Product Managers: To gain visibility into AI performance and ensure alignment with business goals.

- Enterprises: To scale AI applications with confidence and manage risk.

Benefits of Using Arize AI

- Improved AI Performance: Arize AI helps you identify and fix issues quickly, leading to improved AI performance.

- Faster Development Cycles: Arize AI enables a data-driven iteration cycle, allowing you to develop and deploy AI applications faster.

- Enhanced Trust: Arize AI helps you build trustworthy AI systems by providing visibility into model behavior and performance.

- Reduced Costs: Arize AI helps you optimize your AI infrastructure and reduce costs by identifying inefficiencies.

- Open Source Flexibility: Arize AI is built on open source and open standards, giving you total control and transparency.

Testimonials

Leading companies across various industries trust Arize AI to power their AI initiatives:

- PepsiCo: “As we continue to scale GenAI across PepsiCo’s digital platforms, Arize gives us the visibility, control, and insights essential for building trustworthy, high-performing systems.”

- Handshake: “Arize gives us the observability we need to understand how these models behave in the wild—tracing outputs, monitoring quality, and managing cost.”

- Tripadvisor: “As we build out new AI products and capabilities, having the right infrastructure in place to evaluate and observe is important. Arize has been a valuable partner on that front.”

- Radiant Security: “Implementing Arize was one of the most impactful decisions we’ve made. It completely transformed how we understand and monitor our AI agents.”

- Siemens: “As we scale GenAI across Siemens, ensuring accuracy and trust is critical. Arize’s evaluation and monitoring capabilities help us catch potential issues early, giving our teams the confidence to roll out AI responsibly and effectively.”

Conclusion

Arize AI is a powerful platform that provides the observability, evaluation, and tools needed to build and maintain high-quality AI applications. Whether you are building AI agents, optimizing prompts, or monitoring model performance in production, Arize AI can help you achieve your goals.

Best Alternative Tools to "Arize AI"

HoneyHive provides AI evaluation, testing, and observability tools for teams building LLM applications. It offers a unified LLMOps platform.

The AI Engineer Pack by ElevenLabs is a starter pack for developers. It offers exclusive access to premium AI tools and services such as ElevenLabs, Mistral, and Perplexity.

Pydantic AI is a GenAI agent framework in Python, designed for building production-grade applications with Generative AI. It supports various models, offers seamless observability, and ensures type-safe development.

Athina is a collaborative AI platform that helps teams build, test, and monitor LLM-based features 10x faster. With tools for prompt management, evaluations, and observability, it ensures data privacy and supports custom models.

Velvet, since acquired by Arize, provided a developer gateway for analyzing, evaluating, and monitoring AI features. Arize itself is a unified platform for AI evaluation and observability that helps accelerate AI development.

PromptLayer is an AI engineering platform for prompt management, evaluation, and LLM observability. Collaborate with experts, monitor AI agents, and improve prompt quality with powerful tools.

Langtrace is an open-source observability and evaluations platform designed to improve the performance and security of AI agents. Track vital metrics, evaluate performance, and ensure enterprise-grade security for your LLM applications.

Vivgrid is an AI agent infrastructure platform that helps developers build, observe, evaluate, and deploy AI agents with safety guardrails and low-latency inference. It supports GPT-5, Gemini 2.5 Pro, and DeepSeek-V3.

Lunary is an open-source LLM engineering platform providing observability, prompt management, and analytics for building reliable AI applications. It offers tools for debugging, tracking performance, and ensuring data security.

Teammately is the AI Agent for AI Engineers, automating and fast-tracking every step of building reliable AI at scale. Build production-grade AI faster with prompt generation, RAG, and observability.

Future AGI is a unified LLM observability and AI agent evaluation platform. It states that its testing, evaluation, and optimization tools help enterprises achieve 99% accuracy in AI applications and support responsible AI from development to production.

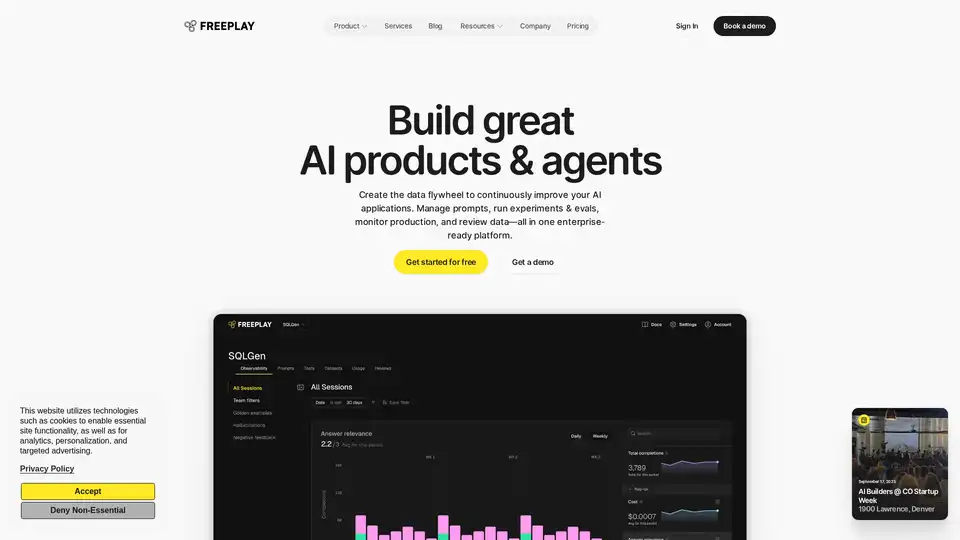

Freeplay is an AI platform designed to help teams build, test, and improve AI products through prompt management, evaluations, observability, and data review workflows. It streamlines AI development and ensures high product quality.

Vellum AI is an LLM orchestration and observability platform to build, evaluate, and productionize enterprise AI workflows and agents with a visual builder and SDK.