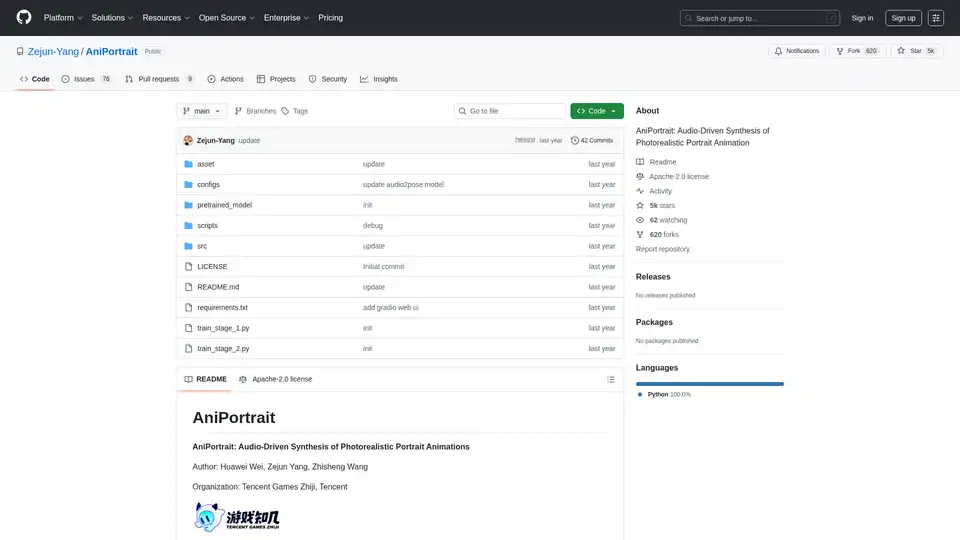

AniPortrait

Overview of AniPortrait

What is AniPortrait?

AniPortrait is an open-source framework for audio-driven synthesis of photorealistic portrait animations. Developed by Huawei Wei, Zejun Yang, and Zhisheng Wang of Tencent Games Zhiji, Tencent, it creates animated portraits from a single reference image plus an audio or video input. Whether you're animating a static portrait with speech audio or reenacting facial expressions from a source video, AniPortrait aims for lifelike results that capture details such as lip-sync and head movements. It targets content creators, game developers, and computer vision researchers, and differs from general AI video generation tools by focusing on portrait-specific animation.

Released on GitHub under the Apache-2.0 license, AniPortrait has garnered over 5,000 stars, reflecting its popularity in the AI community. The project emphasizes accessibility, with pre-trained models, detailed installation guides, and even a Gradio web UI for easy testing.

How Does AniPortrait Work?

At its core, AniPortrait employs a multi-stage pipeline that integrates diffusion models, audio processing, and pose estimation to generate animations. The framework builds on established models like Stable Diffusion V1.5 and wav2vec2 for feature extraction, ensuring robust handling of audio-visual synchronization.

Key Components and Workflow

- Input Processing: Start with a reference portrait image. For audio-driven mode, audio inputs are processed using wav2vec2-base-960h to extract speech features. In video modes, source videos are converted to pose sequences via keypoint extraction.

- Pose Generation: The audio2pose model generates head pose sequences (e.g., pose_temp.npy) from audio, enabling control over facial orientations. For face reenactment, a pose retarget strategy maps movements from the source video to the reference image, supporting substantial pose differences.

- Animation Synthesis: Utilizes denoising UNet, reference UNet, and motion modules to synthesize frames. The pose guider ensures alignment, while optional frame interpolation accelerates inference.

- Output Refinement: Generates videos at resolutions like 512x512, with options for acceleration using film_net_fp16.pt to reduce processing time.

This modular approach allows for self-driven animations (using predefined poses), face reenactment (transferring expressions), and fully audio-driven synthesis, making it versatile for various AI portrait animation scenarios.
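The idea behind the frame-interpolation speed-up can be sketched in a few lines. This is a minimal illustration, not AniPortrait's implementation: it assumes the expensive synthesis step produces only every `step`-th frame and that the frames in between are filled in afterwards. A plain linear blend stands in for the learned interpolation network (film_net_fp16.pt), and the names `blend` and `interpolate_keyframes` are hypothetical.

```python
from typing import List

Frame = List[float]  # a frame flattened to pixel intensities (illustrative only)


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly blend two frames; t=0 returns a, t=1 returns b."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolate_keyframes(keyframes: List[Frame], step: int) -> List[Frame]:
    """Insert step-1 blended frames between each pair of neighbouring keyframes."""
    if step < 1:
        raise ValueError("step must be >= 1")
    if not keyframes:
        return []
    frames: List[Frame] = []
    for current, following in zip(keyframes, keyframes[1:]):
        frames.append(current)
        # Linear blend is a stand-in for a learned interpolation model.
        for i in range(1, step):
            frames.append(blend(current, following, i / step))
    frames.append(keyframes[-1])
    return frames


# Three synthesized keyframes become seven output frames with step=3.
keyframes = [[0.0, 0.0], [3.0, 6.0], [6.0, 0.0]]
video = interpolate_keyframes(keyframes, step=3)
print(len(video))
```

With `step=3`, only about a third of the output frames need to come from the diffusion model, which is where the saving in processing time would come from under these assumptions.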

Core Features of AniPortrait

AniPortrait packs a range of powerful features tailored for realistic portrait animation:

- Audio-Driven Portrait Animation: Syncs lip movements and expressions to audio inputs, perfect for dubbing or virtual avatars.

- Face Reenactment: Transfers facial performances from a source video to a target portrait, for ethical reenactment applications in media.

- Pose Control and Retargeting: Updated strategies handle diverse head poses, including generation of custom pose files for precise control.

- High-Resolution Output: Produces photorealistic videos with support for longer sequences (300 frames or more).

- Acceleration Options: Frame interpolation with an FP16 model (film_net_fp16.pt) speeds up inference.

- Gradio Web UI: A user-friendly interface for quick demos, also hosted on Hugging Face Spaces for online access.

- Pre-Trained Models: Includes weights for audio2mesh, audio2pose, and diffusion components, downloadable from sources like Wisemodel.

These features make AniPortrait a go-to tool for AI-driven video synthesis, surpassing basic tools by focusing on portrait fidelity and audio-visual coherence.

Installation and Setup

Getting started is straightforward for users with Python >=3.10 and CUDA 11.7:

- Clone the repository: `git clone https://github.com/Zejun-Yang/AniPortrait`

- Install dependencies: `pip install -r requirements.txt`

- Download pre-trained weights to `./pretrained_weights/`, including Stable Diffusion components, wav2vec2, and custom models like `denoising_unet.pth` and `audio2pose.pt`.

- Organize files as per the directory structure in the README.
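Before running inference, it can help to confirm that the downloaded weights are actually in place. The sketch below is only an illustration: it searches the weights directory recursively for the file names mentioned in this article, because the exact subdirectory layout is defined in the README and is not assumed here. The helper name `missing_weights` and the `EXPECTED_FILES` list are hypothetical.

```python
from pathlib import Path
from typing import Iterable, List

# File names mentioned in this article; where they sit inside the weights
# directory is not assumed, so check the README for the real layout.
EXPECTED_FILES = ["denoising_unet.pth", "audio2pose.pt", "film_net_fp16.pt"]


def missing_weights(root: Path, expected: Iterable[str] = EXPECTED_FILES) -> List[str]:
    """Return the expected file names that are not found anywhere under root."""
    expected = list(expected)
    if not root.is_dir():
        return expected
    found = {p.name for p in root.rglob("*") if p.is_file()}
    return [name for name in expected if name not in found]


if __name__ == "__main__":
    missing = missing_weights(Path("./pretrained_weights"))
    if missing:
        print("Missing weights:", ", ".join(missing))
    else:
        print("All expected weight files were found.")
```

Extend `EXPECTED_FILES` with the remaining files listed in the README to make the check complete.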

For training, prepare datasets like VFHQ or CelebV-HQ by extracting keypoints and running preprocessing scripts. Training occurs in two stages using Accelerate for distributed processing.

How to Use AniPortrait?

Inference Modes

AniPortrait supports three primary modes via command-line scripts:

Self-Driven Animation:

`python -m scripts.pose2vid --config ./configs/prompts/animation.yaml -W 512 -H 512 -acc`

Customize with reference images or pose videos. Convert videos to poses using `python -m scripts.vid2pose --video_path input.mp4`.

Face Reenactment:

`python -m scripts.vid2vid --config ./configs/prompts/animation_facereenac.yaml -W 512 -H 512 -acc`

Edit the YAML to include source videos and references.

Audio-Driven Synthesis:

`python -m scripts.audio2vid --config ./configs/prompts/animation_audio.yaml -W 512 -H 512 -acc`

Add audios and images to the config. Enable audio2pose by removing pose_temp for automatic pose generation.

For head pose control, generate reference poses with `python -m scripts.generate_ref_pose`.

Web Demo

Launch the Gradio UI with `python -m scripts.app`, or try the online version on Hugging Face Spaces.

Users can experiment with sample videos such as 'cxk.mp4' or 'jijin.mp4', sourced from platforms like Bilibili, to see audio sync in action.

Training AniPortrait from Scratch

Advanced users can train custom models:

- Data Prep: Download datasets, preprocess with `python -m scripts.preprocess_dataset`, and update JSON paths.

- Stage 1: `accelerate launch train_stage_1.py --config ./configs/train/stage1.yaml`

- Stage 2: Download motion module weights, specify Stage 1 checkpoints, and run `accelerate launch train_stage_2.py --config ./configs/train/stage2.yaml`.

This process fine-tunes on portrait-specific data, enhancing generalization for AI animation tasks.
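Since Stage 2 depends on Stage 1 checkpoints, the two launches have to run in order. A minimal driver might look like the following. It is a sketch that uses the launch commands quoted in this article; the `run_stages` helper is hypothetical, and it does not edit the Stage 2 config for you.

```python
import subprocess
from typing import List, Sequence

# Launch commands as quoted in this article, in the order they must run.
STAGES = [
    ["accelerate", "launch", "train_stage_1.py", "--config", "./configs/train/stage1.yaml"],
    ["accelerate", "launch", "train_stage_2.py", "--config", "./configs/train/stage2.yaml"],
]


def run_stages(stages: Sequence[List[str]] = STAGES, dry_run: bool = True) -> List[str]:
    """Run the stages in order, stopping at the first failure."""
    executed = []
    for cmd in stages:
        line = " ".join(cmd)
        print(line)
        if not dry_run:
            # check=True raises CalledProcessError, so a failed Stage 1
            # prevents Stage 2 from starting.
            subprocess.run(cmd, check=True)
        executed.append(line)
    return executed


run_stages()
```

In practice the Stage 2 config still needs the Stage 1 checkpoint path and the motion module weights filled in between the two runs, so a fully unattended run would require that edit to be made beforehand.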

Why Choose AniPortrait?

In a crowded field of AI tools for video generation, AniPortrait stands out for its specialized focus on photorealistic portraits. Unlike general-purpose models, it targets audio-lip sync and subtle expressions specifically, which helps reduce artifacts in facial animations. The open-source nature allows customization, and recent updates, like the April 2024 audio2pose release and acceleration modules, keep it current. The project acknowledges related work such as EMO and AnimateAnyone, reflecting its roots in the wider research community.

Practical uses include faster prototyping for virtual influencers, educational videos, or game assets. An accompanying arXiv paper (eprint 2403.17694) also makes it a useful reference for researchers exploring audio-visual synthesis in computer vision.

Who is AniPortrait For?

- Content Creators and Filmmakers: For quick dubbing or expression transfers in short-form videos.

- Game Developers: Integrating animated portraits into interactive media.

- AI Researchers: Experimenting with diffusion-based animation and pose retargeting.

- Hobbyists and Educators: Using the web UI to teach AI concepts without heavy setup.

If you're seeking the best way to create audio-driven portrait animations, AniPortrait's balance of quality, speed, and accessibility makes it a top choice.

Potential Applications and Use Cases

- Virtual Avatars: Animate digital characters with synced speech for social media or metaverses.

- Educational Tools: Generate talking head videos for lectures or tutorials.

- Media Production: Ethical face reenactment for historical reenactments or ads.

- Research Prototyping: Benchmark audio-to-video models in CV papers.

Demonstrations include self-driven clips like 'solo.mp4' and audio examples like 'kara.mp4', showcasing seamless integration.

For troubleshooting, check the 76 open issues on GitHub or contribute via pull requests. Overall, AniPortrait empowers users to push boundaries in AI portrait animation with reliable, high-fidelity results.

Best Alternative Tools to "AniPortrait"

Lip Sync AI transforms static photos into talking videos using advanced AI lip sync technology. Upload a photo and audio file to generate realistic lip-synced videos with natural expressions.

Media.io is an all-in-one AI platform for video, image, and audio creation. It offers tools like AI video generator, image to video, text to music, and watermark remover, catering to both personal and commercial use.

Mango AI is an AI-powered video generator that creates talking photos, avatars, & face swaps effortlessly. Ideal for marketers, educators & content creators.

AIVidly is an all-in-one AI video maker app for iPhone that turns text into professional videos with AI voiceovers, effects, and optimizations for TikTok and YouTube Shorts—no editing skills required.

PICOAI.app offers cutting-edge AI tools to generate stunning images and videos. Create professional content effortlessly using the latest generative AI models.

MirrorizeAI is a vibrant AI art community empowering creators to generate stunning images, videos, and music with cinematic realism. Collaborate globally, iterate fast, and unlock your imagination without subscriptions.

Discover Slides to Videos, the AI tool that turns Google Slides into professional videos with AI images, animations, and narration. Ideal for content creators, marketers, and educators to produce engaging social media and YouTube content quickly.

Discover Wan 2.2 AI, a cutting-edge platform for text-to-video and image-to-video generation with cinema-grade controls, professional motion, and 720p resolution. Ideal for creators, marketers, and producers seeking high-quality AI video tools.

VidHex mixes various AI video tools together, such as Video Enhancer, efficiently and effortlessly improving content and optimizing visual experience. Transform blurry videos into high-quality visuals with one click.

CharGen is an AI-powered fantasy content generator that creates characters, NPCs, monsters, maps, and campaign tools for D&D, Pathfinder, and RPG enthusiasts.

Create stunning videos with Wondershare Filmora AI video editing software! Features include AI smart long video to short video, AI portrait matting, dynamic subtitles, multi-camera editing and more. Easy and fun for beginners and professionals!

Cliptics offers free AI tools for image editing, text to speech, background removal, and content creation. No signup, no watermarks. Enhance images, generate voiceovers, and create content effortlessly.

UniFab AI is an AI-powered solution enhancing video & audio quality. Features include video/audio enhancers, converter, editor. Upscale to 16K, denoise, colorize & more.

CREATUS.AI offers an AI-native workspace with autonomous team members, integrating AI features for SMEs to boost productivity and optimize resourcing costs. Try free AI tools and integrate with apps like Canva, Notion, and Zapier.