ExLlama

Overview of ExLlama

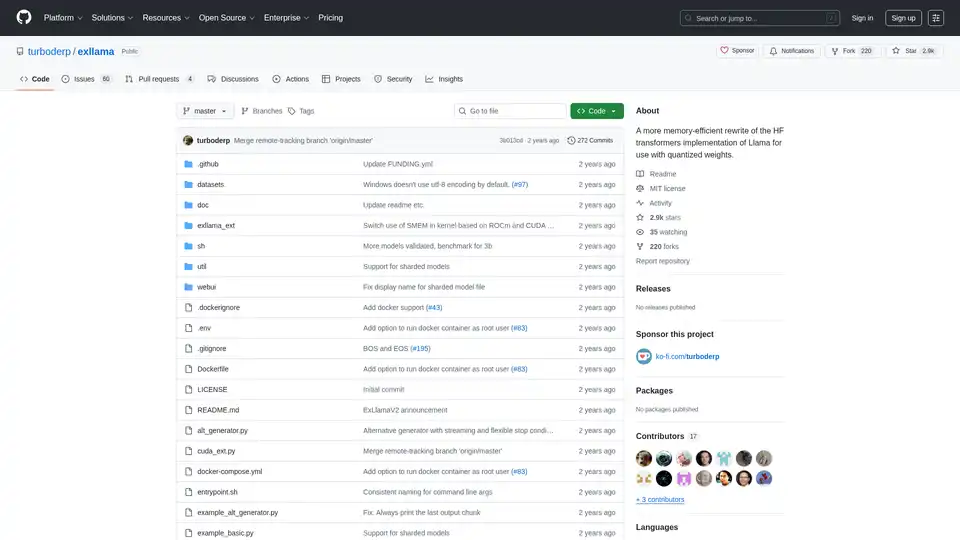

ExLlama: Memory-Efficient Llama Implementation for Quantized Weights

ExLlama is a standalone Python/C++/CUDA implementation of Llama designed for speed and memory efficiency when using 4-bit GPTQ weights on modern GPUs. This project aims to provide a faster and more memory-efficient alternative to the Hugging Face Transformers implementation, particularly for users working with quantized models.

What is ExLlama?

ExLlama is designed to be a high-performance inference engine for the Llama family of language models. It leverages CUDA for GPU acceleration and is optimized for 4-bit GPTQ quantized weights, enabling users to run large language models on GPUs with limited memory.

How does ExLlama work?

ExLlama optimizes memory usage and inference speed through several techniques:

- CUDA Implementation: Utilizes CUDA for efficient GPU computation.

- Quantization Support: Specifically designed for 4-bit GPTQ quantized weights.

- Memory Efficiency: Reduces memory footprint compared to standard implementations.

Key Features and Benefits:

- High Performance: Optimized for fast inference.

- Memory Efficiency: Allows running large models on less powerful GPUs.

- Standalone Implementation: No need for the Hugging Face Transformers library.

- Web UI: Includes a simple web UI for easy interaction with the model (JavaScript written by ChatGPT, so beware!).

- Docker Support: Can be run in a Docker container for easier deployment and security.

How to use ExLlama?

Installation:

- Clone the repository:

git clone https://github.com/turboderp/exllama - Navigate to the directory:

cd exllama - Install dependencies:

pip install -r requirements.txt

- Clone the repository:

Running the Benchmark:

python test_benchmark_inference.py -d <path_to_model_files> -p -ppl

Running the Chatbot Example:

python example_chatbot.py -d <path_to_model_files> -un "Jeff" -p prompt_chatbort.txt

Web UI:

- Install additional dependencies:

pip install -r requirements-web.txt - Run the web UI:

python webui/app.py -d <path_to_model_files>

- Install additional dependencies:

Why choose ExLlama?

ExLlama offers several advantages:

- Performance: Delivers faster inference speeds compared to other implementations.

- Accessibility: Enables users with limited GPU memory to run large language models.

- Flexibility: Can be integrated into other projects via the Python module.

- Ease of Use: Provides a simple web UI for interacting with the model.

Who is ExLlama for?

ExLlama is suitable for:

- Researchers and developers working with large language models.

- Users with NVIDIA GPUs (30-series and later recommended).

- Those seeking a memory-efficient and high-performance inference solution.

- Anyone interested in running Llama models with 4-bit GPTQ quantization.

Hardware Requirements:

- NVIDIA GPUs (RTX 30-series or later recommended)

- ROCm support is theoretical but untested

Dependencies:

- Python 3.9+

- PyTorch (tested on 2.0.1 and 2.1.0 nightly) with CUDA 11.8

- safetensors 0.3.2

- sentencepiece

- ninja

- flask and waitress (for web UI)

Docker Support:

ExLlama can be run in a Docker container for easier deployment and security. The Docker image supports NVIDIA GPUs.

Results and Benchmarks:

ExLlama demonstrates significant performance improvements compared to other implementations, especially in terms of tokens per second (t/s) during inference. Benchmarks are provided for various Llama model sizes (7B, 13B, 33B, 65B, 70B) on different GPU configurations.

Example usage

import torch

from exllama.model import ExLlama, ExLlamaCache, ExLlamaConfig

from exllama.tokenizer import ExLlamaTokenizer

## Initialize model and tokenizer

model_directory = "/path/to/your/model"

tokenizer_path = os.path.join(model_directory, "tokenizer.model")

model_config_path = os.path.join(model_directory, "config.json")

config = ExLlamaConfig(model_config_path)

config.model_path = os.path.join(model_directory, "model.safetensors")

tokenizer = ExLlamaTokenizer(tokenizer_path)

model = ExLlama(config)

cache = ExLlamaCache(model)

## Prepare input

prompt = "The quick brown fox jumps over the lazy"

input_ids = tokenizer.encode(prompt)

## Generate output

model.forward(input_ids, cache)

token = model.sample(temperature = 0.7, top_k = 50, top_p = 0.7)

output = tokenizer.decode([token])

print(prompt + output)

Compatibility and Model Support:

ExLlama is compatible with a range of Llama models, including Llama 1 and Llama 2. The project is continuously updated to support new models and features.

ExLlama is a powerful tool for anyone looking to run Llama models efficiently. Its focus on memory optimization and speed makes it an excellent choice for both research and practical applications.

Best Alternative Tools to "ExLlama"

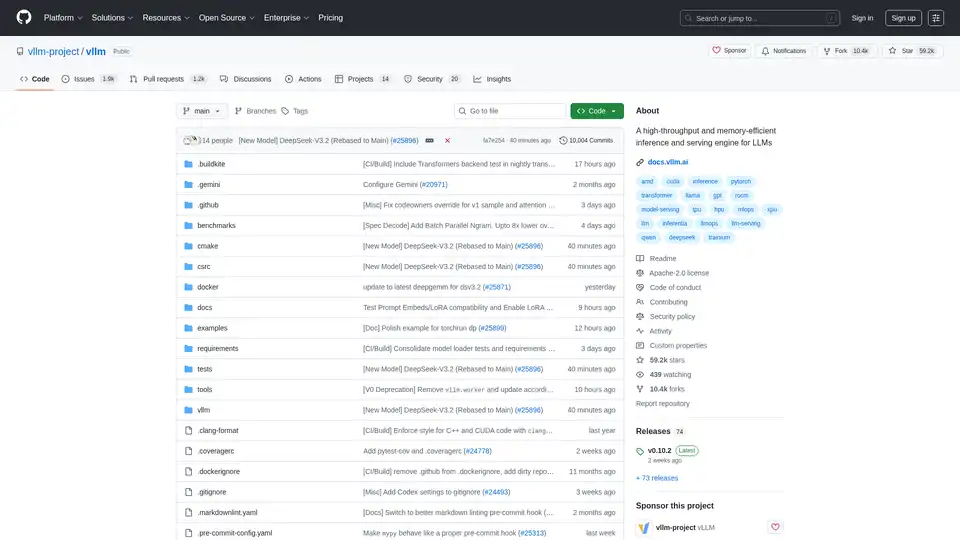

vLLM is a high-throughput and memory-efficient inference and serving engine for LLMs, featuring PagedAttention and continuous batching for optimized performance.

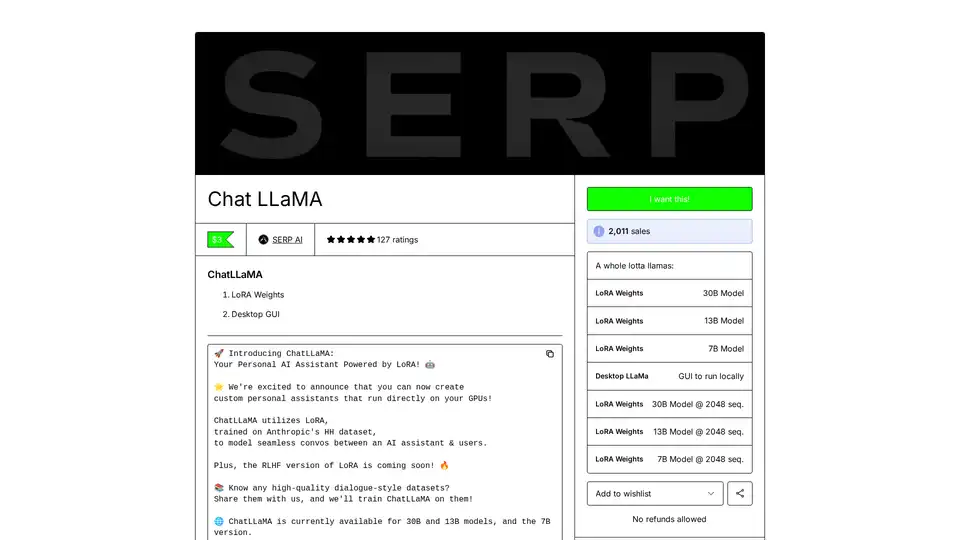

ChatLLaMA is a LoRA-trained AI assistant based on LLaMA models, enabling custom personal conversations on your local GPU. Features desktop GUI, trained on Anthropic's HH dataset, available for 7B, 13B, and 30B models.

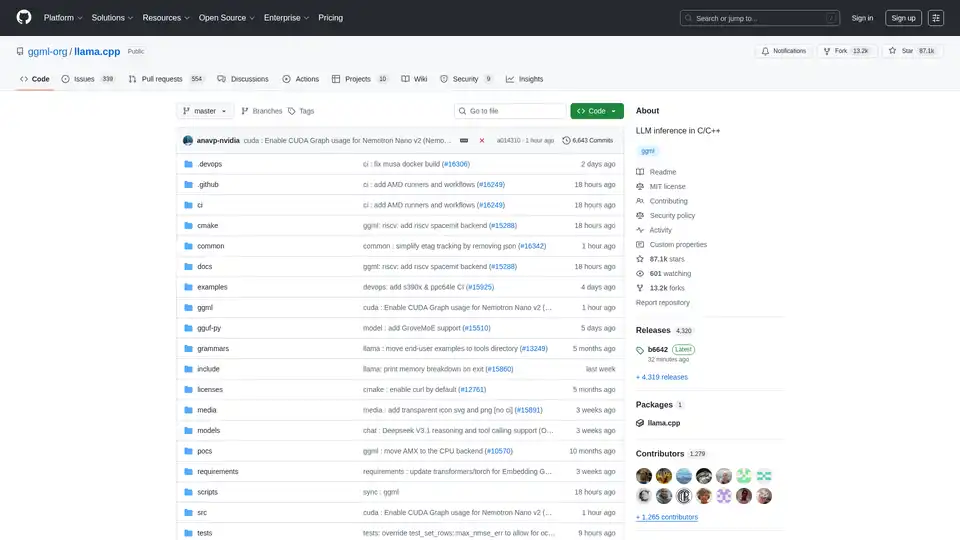

Enable efficient LLM inference with llama.cpp, a C/C++ library optimized for diverse hardware, supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.

Instantly run any Llama model from HuggingFace without setting up any servers. Over 11,900+ models available. Starting at $10/month for unlimited access.