Featherless.ai

Overview of Featherless.ai

What is Featherless.ai?

Featherless.ai is a serverless LLM hosting provider that gives you access to a vast library of open-source models from Hugging Face. Forget about the complexities of server management and operational overhead; Featherless handles it all, letting you focus on leveraging AI for your projects.

Key Features:

- Extensive Model Catalog: Access over 11,900 open-source models.

- Serverless Inference: Deploy models without managing servers.

- Flat Pricing: Predictable billing with unlimited tokens.

- Low Latency: Benefit from advanced model loading and GPU orchestration.

- LangChain Compatibility: Use Featherless from LangChain applications through its OpenAI SDK compatibility.

How to use Featherless.ai?

- Sign Up: Create an account on Featherless.ai.

- Explore Models: Browse the extensive catalog of models.

- Deploy: Instantly deploy models for fine-tuning, testing, or production.

- Integrate: Use the API to integrate models into your applications.
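The Integrate step can be sketched with the Python standard library alone. This is a minimal sketch, not the official client: it assumes the API follows the OpenAI chat-completions request shape (the feature list mentions OpenAI SDK compatibility), and the base URL, the `FEATHERLESS_API_KEY` variable name, and the model ID in the usage comment are assumptions to check against the Featherless documentation.

```python
import json
import urllib.request

# Assumption: base URL of the OpenAI-compatible endpoint; confirm it in the docs.
BASE_URL = "https://api.featherless.ai/v1"


def build_chat_request(model, user_message, api_key, base_url=BASE_URL):
    """Build (without sending) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the text of the first completion."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumes the OpenAI-style response layout: choices[0].message.content
    return body["choices"][0]["message"]["content"]


# Usage (needs a real key and network access; names below are placeholders):
#   import os
#   req = build_chat_request(
#       model="your-org/your-model-id",
#       user_message="Say hello in one sentence.",
#       api_key=os.environ["FEATHERLESS_API_KEY"],
#   )
#   print(send_chat_request(req))
```

Building the request separately from sending it makes it possible to inspect the URL, headers, and JSON body before any network call is made.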

Why choose Featherless.ai?

Featherless.ai offers a compelling alternative to other providers by combining a vast model catalog with serverless infrastructure and predictable pricing. It's the ideal solution for AI teams that want to leverage the power of open-source models without the hassle of server management.

Use Cases:

- OpenHands: Streamline software development with AI-powered coding tasks.

- NovelCrafter: Enhance creative writing with AI assistance throughout the novel-writing process.

- WyvernChat: Create unique characters with custom personalities using a wide range of open-source models.

Pricing:

Featherless.ai offers three pricing plans:

- Feather Basic: $10/month for models up to 15B parameters.

- Feather Premium: $25/month for access to DeepSeek and Kimi-K2 models.

- Feather Scale: $75/month for business plans with scalable concurrency.

FAQ:

What is Featherless?

Featherless is an LLM hosting provider that offers access to a continually expanding library of Hugging Face models.

Do you log my chat history?

No, prompts and completions sent to the API are not logged.

Which model architectures are supported?

A wide range of model architectures is supported, including Llama 2 and 3, Mistral, Qwen, and DeepSeek.

For more details, visit Featherless.ai and explore the documentation.

Best Alternative Tools to "Featherless.ai"

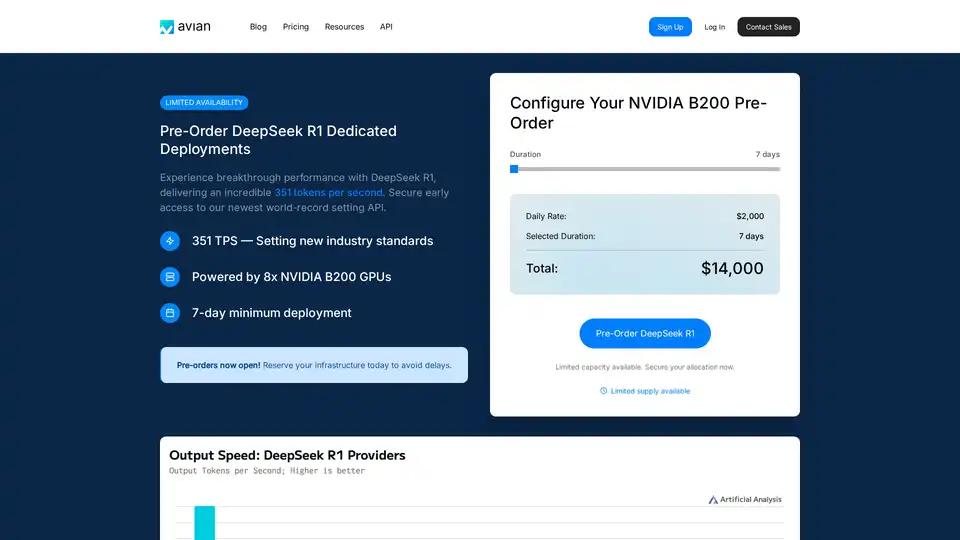

Avian API offers the fastest AI inference for open source LLMs, achieving 351 TPS on DeepSeek R1. Deploy any HuggingFace LLM at 3-10x speed with an OpenAI-compatible API. Enterprise-grade performance and privacy.

SiliconFlow is a lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

Batteries Included is a self-hosted AI platform that simplifies deploying LLMs, vector databases, and Jupyter notebooks. Build world-class AI applications on your infrastructure.

Awan LLM offers an unrestricted and cost-effective LLM inference API platform with unlimited tokens, ideal for developers and power users. Process data, complete code, and build AI agents without token limits.

Awan LLM provides an unlimited, unrestricted, and cost-effective LLM Inference API platform. It allows users and developers to access powerful LLM models without token limitations, ideal for AI agents, roleplay, data processing, and code completion.

Deep Infra is a platform for low-cost, scalable AI inference with 100+ ML models like DeepSeek-V3.2, Qwen, and OCR tools. Offers developer-friendly APIs, GPU rentals, zero data retention, and US-based secure infrastructure for production AI workloads.

Enable efficient LLM inference with llama.cpp, a C/C++ library optimized for diverse hardware, supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.

Phala Cloud offers a trustless, open-source cloud infrastructure for deploying AI agents and Web3 applications, powered by TEE. It ensures privacy, scalability, and is governed by code.

Falcon LLM is an open-source generative large language model family from TII, featuring models like Falcon 3, Falcon-H1, and Falcon Arabic for multilingual, multimodal AI applications that run efficiently on everyday devices.

Explore Qwen3 Coder, Alibaba Cloud's advanced AI code generation model. Learn about its features, performance benchmarks, and how to use this powerful, open-source tool for development.

Magic Loops is a no-code platform that combines LLMs and code to build professional AI-native apps in minutes. Automate tasks, create custom tools, and explore community apps without any coding skills.

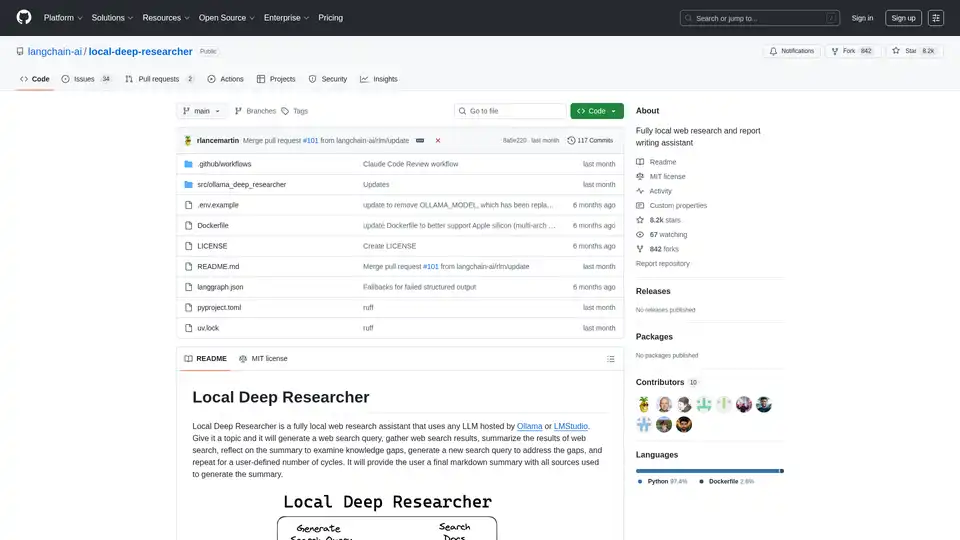

Local Deep Researcher is a fully local web research assistant that uses LLMs via Ollama or LMStudio to generate search queries, gather results, summarize findings, and create comprehensive research reports with proper citations.

CalStudio is a no-code platform that empowers creators to build, launch, and monetize custom AI apps in minutes. It offers seamless hosting, embedding, analytics, and access to leading AI models without API keys.

Vext builds custom AI solutions for enterprises, including LLM applications, model fine-tuning, managed hosting, and custom API services.