Overview of Mixpeek

Mixpeek: The Multimodal Data Warehouse for Developers

What is Mixpeek?

Mixpeek is a developer-first API for AI-native content understanding. It lets developers process, extract features from, and search across unstructured data such as text, images, video, audio, and PDFs.

How does Mixpeek work?

Mixpeek offers a unified API to search, monitor, classify, and cluster your unstructured data. Here's a simplified workflow:

- Upload Objects: Ingest unstructured data from sources such as AWS S3 buckets. Uploads can mix formats (PDF, images, video, audio), and Mixpeek detects each object's content type automatically.

- Extract Features: Use specialized extraction models to pull features from video, text, image, PDF, time series, tabular, and audio data.

- Enrich Features: Enhance the extracted features for better analysis and retrieval.

- Build Retrievers: Construct search indexes for faster content discovery.
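The four-step workflow above can be pictured with a toy, in-memory sketch. This is not Mixpeek's API: every name here (`Retriever`, `detect_modality`, `extract_features`, `enrich`) is hypothetical, the "embedding" is a plain bag-of-words count standing in for a real extraction model, and the text passed to `add` stands in for the caption or transcript such a model would produce.

```python
from __future__ import annotations

import math
import mimetypes
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Document:
    """One indexed object: its URI, detected modality, features, and metadata."""
    uri: str
    modality: str
    features: Counter
    metadata: dict = field(default_factory=dict)


def detect_modality(uri: str) -> str:
    """Step 1 (upload): guess the content type from the file extension."""
    mime, _ = mimetypes.guess_type(uri)
    if mime is None:
        return "unknown"
    if mime == "application/pdf":
        return "pdf"
    return mime.split("/")[0]  # e.g. "image", "video", "audio", "text"


def extract_features(text: str) -> Counter:
    """Step 2 (extract): a toy bag-of-words vector.

    Hypothetical stand-in for a real extraction model; `text` plays the role
    of a caption, transcript, or OCR output that such a model would produce.
    """
    return Counter(word.lower().strip(".,!?") for word in text.split())


def enrich(doc: Document) -> Document:
    """Step 3 (enrich): attach derived metadata, here just a modality tag."""
    doc.metadata["tags"] = [doc.modality]
    return doc


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class Retriever:
    """Step 4 (retrieve): a brute-force index ranked by cosine similarity."""

    def __init__(self) -> None:
        self.docs: list[Document] = []

    def add(self, uri: str, text: str) -> None:
        doc = Document(uri, detect_modality(uri), extract_features(text))
        self.docs.append(enrich(doc))

    def search(
        self, query: str, k: int = 3, modality: str | None = None
    ) -> list[tuple[str, float]]:
        q = extract_features(query)
        hits = [
            (d.uri, cosine(q, d.features))
            for d in self.docs
            if modality is None or d.modality == modality
        ]
        hits.sort(key=lambda h: h[1], reverse=True)
        return hits[:k]


# Usage: one index over mixed modalities, optionally filtered by modality.
r = Retriever()
r.add("s3://bucket/launch.mp4", "product launch keynote video with stage demo")
r.add("s3://bucket/report.pdf", "quarterly safety report for the factory floor")
r.add("s3://bucket/logo.png", "red brand logo on white background")
print(r.search("brand logo", k=1))  # the logo image ranks first
print(r.search("report", modality="pdf"))
```

A production system would swap the word counts for model embeddings and the linear scan for a vector index; the upload, extract, enrich, and retrieve stages would keep the same shape.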

Key Features:

- Unified Search: Semantic search across video, audio, images, and documents.

- Automated Classification: Custom models to classify content for moderation, targeting, and organization.

- Unsupervised Clustering: Automatically group similar content to discover trends and patterns.

- Feature Extractors: Specialized extraction models for every data type.

- Seamless Model Upgrades: Automatically upgrade to newer models without breaking existing queries.

- Cross-Model Compatibility: Query across multiple embedding spaces.

- A/B Testing Infrastructure: Compare embedding model performance with built-in testing tools.

Why is Mixpeek important?

Mixpeek simplifies the embedding lifecycle with incremental updates, version management, backward compatibility, and intelligent embedding translation, all managed for you.

Use Cases:

Mixpeek is suitable for a wide range of industries:

- Advertising & Media: Faster creative analysis and automated brand safety checks.

- Media & Entertainment: Improved content discovery and monetization, and dynamic video tagging.

- Retail & E-commerce: Visual product search and automated product tagging.

- Security & Surveillance: Faster security incident analysis and automated suspicious activity alerts.

- Healthcare & Life Sciences: Improved diagnostic efficiency and integrated multimodal patient analysis.

- Education Technology: Faster content organization and higher student engagement.

- Manufacturing & Industrial Operations: Fewer workplace accidents and lower defect rates.

- Legal & Compliance: Faster discovery processes and faster compliance.

- Dataset Engineering & Management: Accelerated dataset development cycles and improved dataset quality.

Pricing:

Mixpeek uses usage-based pricing: you pay only for the data you index, and you can run unlimited queries at no additional cost.

Get Started:

Visit the Mixpeek website to schedule a demo, explore the documentation, and start building powerful multimodal search and analytics applications today.

Best Alternative Tools to "Mixpeek"

Zensors is a physical AI platform that automates real-world processes using sensors, cameras, and data fusion. It delivers real-time operational support and accurate forecasts for enhanced efficiency and safety.

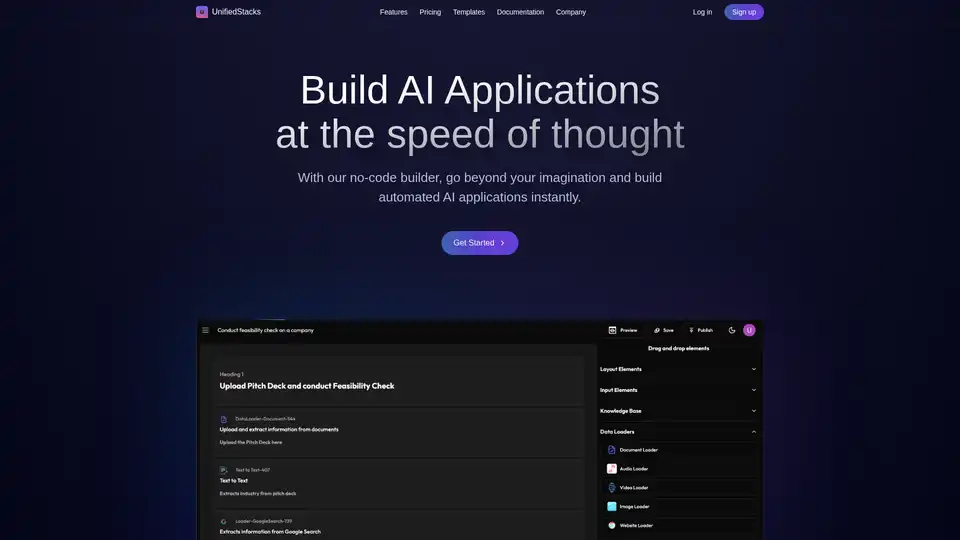

UnifiedStacks is a no-code AI platform for building automated AI applications. Users can drag, drop, and deploy production-ready AI solutions that integrate with internal and external data sources.

T-Rex Label is an AI-powered data annotation tool supporting Grounding DINO, DINO-X, and T-Rex models. It's compatible with COCO and YOLO datasets, offering features like bounding boxes, image segmentation, and mask annotation for efficient computer vision dataset creation.

Ocular AI is a multimodal data lakehouse platform that allows you to ingest, curate, search, annotate, and train custom AI models on unstructured data. It is built for the multimodal AI era.

Encord is an AI data management platform that accelerates and simplifies multimodal data curation, annotation, and model evaluation to get better AI into production faster.

DataChain is an AI-native platform for curating, enriching, and versioning multimodal datasets such as videos, audio, PDFs, and MRI scans. It gives teams ETL pipelines, data lineage, and scalable processing without data duplication.

Jina AI provides best-in-class embeddings, rerankers, a web reader, deep search, and small language models, forming a search AI solution for multilingual and multimodal data.

Roboto is an analytics engine designed for robotics and physical AI, enabling teams to efficiently search, transform, and analyze multimodal data from robots at scale, identify anomalies, and automate analysis.

BAGEL is an open-source unified multimodal AI model that combines image generation, editing, and understanding capabilities with advanced reasoning, offering photorealistic outputs and performance comparable to proprietary systems such as GPT-4o.

FiftyOne is the leading open-source visual AI and computer vision data platform trusted by top enterprises to maximize AI performance with better data. It covers data curation, smarter annotation, and model evaluation.

Innovatiana delivers expert data labeling and builds high-quality AI datasets for ML, DL, LLM, VLM, RAG, and RLHF, ensuring ethical and impactful AI solutions.

Orga AI is a conversational and multimodal AI platform for businesses, enhancing customer service and boosting productivity with human-like interactions.

Luma AI offers AI video generation with Ray2 and Dream Machine, letting users create realistic motion content from text, images, or video for storytelling.

PolygrAI Interviewer is an AI-first platform that automates, analyzes, and authenticates interviews, detecting deception and providing insights into candidate behavior.