MotionAgent

Overview of MotionAgent

What is MotionAgent?

MotionAgent is an innovative open-source AI assistant designed to convert creative ideas into engaging motion pictures. Powered by the ModelScope community, this deep learning tool simplifies the video production process by integrating multiple AI models for script creation, image generation, video synthesis, and music composition. Whether you're a storyteller, filmmaker, or content creator, MotionAgent streamlines the journey from concept to final output, making professional-quality videos accessible without extensive technical expertise.

At its core, MotionAgent leverages large language models (LLMs) like Qwen-7B-Chat for script generation, Stable Diffusion XL (SDXL) for movie stills, I2VGen-XL for transforming images into videos, and MusicGen for crafting custom background scores. This modular approach ensures that each step of video creation is handled by specialized, state-of-the-art models, resulting in cohesive and high-quality productions.

How Does MotionAgent Work?

MotionAgent operates through a user-friendly pipeline that breaks down video creation into intuitive stages. Here's a breakdown of its workflow:

Script Generation: Start by inputting a story theme or background details. The tool uses an LLM-based model, such as Qwen-7B-Chat, to produce detailed scripts in various styles. This step mimics the brainstorming phase of traditional filmmaking, generating dialogue, scene descriptions, and plot outlines tailored to your vision.
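Later stages work scene by scene, so the generated script has to be split into scenes before stills can be rendered. The sketch below assumes a hypothetical layout in which each scene starts with a line such as `Scene 1:`. MotionAgent's actual script format may differ, and `split_scenes` is an illustrative helper, not part of the project.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Scene:
    number: int
    text: str


# Hypothetical script layout: each scene begins on its own line with "Scene <n>:".
# This format is an assumption for illustration, not MotionAgent's documented output.
SCENE_HEADER = re.compile(r"^Scene\s+(\d+)\s*:\s*(.*)$", re.IGNORECASE)


def split_scenes(script: str) -> List[Scene]:
    """Group script lines under the most recent 'Scene <n>:' header."""
    scenes: List[Scene] = []
    for raw in script.splitlines():
        line = raw.strip()
        if not line:
            continue
        match = SCENE_HEADER.match(line)
        if match:
            # Start a new scene, keeping any text that follows the header on the same line.
            scenes.append(Scene(int(match.group(1)), match.group(2).strip()))
        elif scenes:
            # Continuation line: append it to the current scene's description.
            current = scenes[-1]
            current.text = (current.text + " " + line).strip()
        # Lines before the first header (for example a title) are ignored.
    return scenes


script = """A Sci-Fi Thriller
Scene 1: A neon-lit city in 2050.
An AI detective scans the crowd.
Scene 2: A rooftop chase at dawn."""

for scene in split_scenes(script):
    print(scene.number, scene.text)
```

Each resulting scene description can then serve as the prompt for one movie still.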

Movie Still Generation: Once the script is ready, MotionAgent creates visual representations of key scenes. Using SDXL 1.0, it generates high-fidelity images that serve as storyboards or stills, capturing the essence of your narrative with realistic or stylized aesthetics.

Video Generation: The magic happens here as the tool converts these static images into dynamic videos. Using I2VGen-XL, MotionAgent supports high-resolution video synthesis, adding motion, transitions, and fluidity to bring scenes to life. This image-to-video (I2V) capability is particularly powerful for short films, animations, or promotional clips.

Music Generation: To enhance the emotional impact, MotionAgent composes original background music in custom styles via MusicGen. Users can specify genres like orchestral, electronic, or ambient so that the audio complements the visuals.
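The score should run for the length of the finished video, so one small planning step is to derive the music length from the clip durations. This is a minimal sketch that assumes each clip's length in seconds is known. It also assumes a hypothetical cap of 30 s per music-generation request; that cap is an illustrative parameter, not a documented MotionAgent or MusicGen setting.

```python
from typing import List


def plan_music_segments(clip_seconds: List[float], max_segment: float = 30.0) -> List[float]:
    """Split the total video length into music segments no longer than max_segment.

    max_segment is a hypothetical per-request limit chosen for illustration.
    """
    if max_segment <= 0:
        raise ValueError("max_segment must be positive")
    remaining = sum(clip_seconds)
    segments: List[float] = []
    # Keep cutting full-length segments until the whole video is covered.
    while remaining > 1e-9:
        length = min(max_segment, remaining)
        segments.append(round(length, 3))
        remaining -= length
    return segments


# Four 4 s clips plus one 20 s clip give 36 s of video in total.
print(plan_music_segments([4.0, 4.0, 4.0, 4.0, 20.0]))  # [30.0, 6.0]
```

The segments would then be generated in the chosen style and joined, ideally with a short crossfade, so the music covers the full video.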

The entire process is orchestrated through a simple Python application (app.py), which can be run locally after cloning the GitHub repository. All models are sourced from the ModelScope platform, ensuring reliability and community-driven improvements.
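The four stages can be sketched as a simple sequential pipeline in which each stage consumes the previous stage's output. This minimal sketch shows only the data flow. `write_script`, `render_still`, `animate`, and `compose_music` are hypothetical stubs standing in for the real model calls (Qwen-7B-Chat, SDXL 1.0, I2VGen-XL, MusicGen), and none of these names come from MotionAgent's app.py.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Project:
    theme: str
    script: str = ""
    stills: List[str] = field(default_factory=list)
    clips: List[str] = field(default_factory=list)
    music: str = ""


# Hypothetical stubs: each returns a placeholder string instead of calling a model.
def write_script(theme: str) -> str:
    return f"Scene 1: {theme}, opening.\nScene 2: {theme}, climax."


def render_still(scene: str) -> str:
    return f"still<{scene}>"


def animate(still: str) -> str:
    return f"clip<{still}>"


def compose_music(style: str) -> str:
    return f"music<{style}>"


def run_pipeline(theme: str, music_style: str) -> Project:
    project = Project(theme)
    project.script = write_script(theme)                       # 1. script generation
    scenes = [s for s in project.script.splitlines() if s.strip()]
    project.stills = [render_still(s) for s in scenes]        # 2. one still per scene
    project.clips = [animate(img) for img in project.stills]  # 3. image-to-video
    project.music = compose_music(music_style)                # 4. background score
    return project


result = run_pipeline("A lighthouse keeper's last night", "ambient")
print(len(result.clips), result.music)  # 2 music<ambient>
```

Keeping every intermediate result on one object makes it easy to re-run a single stage, for example regenerating one still, without repeating the others.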

How to Use MotionAgent?

Getting started with MotionAgent is straightforward, especially for those comfortable with basic command-line operations. The tool targets Python 3.8, PyTorch 2.0.1, and CUDA 11.7, and the reference environment is Ubuntu 20.04 with a 40GB NVIDIA A100 GPU. It needs at least 36GB of GPU memory and 50GB of disk space for model downloads and outputs.
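These requirements can be checked before any models are downloaded. The preflight sketch below rests on a few assumptions. It treats Python 3.8 as a minimum, since the setup steps create a 3.8 environment, and it counts 1 GB as 1024³ bytes. Free disk space comes from `shutil.disk_usage`, and GPU memory comes from `torch.cuda.get_device_properties` only when PyTorch with CUDA is available. `preflight` and `gpu_memory_gb` are hypothetical helper names.

```python
import shutil
import sys
from typing import List, Optional, Sequence

# Minimums quoted above: Python 3.8, 36 GB of GPU memory, 50 GB of free disk.
MIN_PYTHON = (3, 8)
MIN_GPU_GB = 36.0
MIN_DISK_GB = 50.0


def gpu_memory_gb() -> Optional[float]:
    """Total memory of GPU 0 in GB, or None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory / 1024**3


def preflight(python_version: Sequence[int], free_disk_gb: float,
              gpu_gb: Optional[float]) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append(f"Python {python_version[0]}.{python_version[1]} is older than 3.8")
    if free_disk_gb < MIN_DISK_GB:
        problems.append(f"only {free_disk_gb:.0f} GB free disk (need {MIN_DISK_GB:.0f} GB)")
    if gpu_gb is None:
        problems.append("no CUDA GPU detected")
    elif gpu_gb < MIN_GPU_GB:
        problems.append(f"GPU has {gpu_gb:.0f} GB (need {MIN_GPU_GB:.0f} GB)")
    return problems


if __name__ == "__main__":
    free_gb = shutil.disk_usage(".").free / 1024**3
    issues = preflight(sys.version_info, free_gb, gpu_memory_gb())
    print("\n".join(issues) if issues else "all checks passed")
```

The checks are kept in a pure function so they can be tested without a GPU. Only the `__main__` block touches the real machine.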

Follow these steps for installation and usage:

- Set Up Environment: Create a Conda virtual environment with `conda create -n motion_agent python=3.8` and activate it using `conda activate motion_agent`.

- Clone Repository: Use `GIT_LFS_SKIP_SMUDGE=1 git clone https://github.com/modelscope/motionagent.git --depth 1` to download the project (the variable skips downloading Git LFS files during the clone), then navigate to the directory with `cd motionagent`.

- Install Dependencies: Run `pip3 install -r requirements.txt` to install the necessary libraries.

- Launch the App: Execute `python3 app.py` to start the web interface. On a machine with multiple GPUs, select a single device with `CUDA_VISIBLE_DEVICES=0 python3 app.py`. If storage is limited (e.g., under 100GB), add the `--clear_cache` flag to manage model downloads efficiently.
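If you script the launch, the options in the last step can be assembled programmatically. This minimal sketch uses only the Python standard library. `build_launch` and `launch` are hypothetical helper names, and the 100 GB threshold mirrors the storage guideline quoted above. The code also assumes it runs from the directory that contains the cloned `motionagent` folder.

```python
import os
import subprocess
from typing import Dict, List, Optional, Tuple

# Storage guideline from the setup steps: add --clear_cache below roughly 100 GB.
CLEAR_CACHE_THRESHOLD_GB = 100.0


def build_launch(gpu_index: Optional[int], free_disk_gb: float) -> Tuple[List[str], Dict[str, str]]:
    """Build the argv and environment for `python3 app.py`."""
    argv = ["python3", "app.py"]
    if free_disk_gb < CLEAR_CACHE_THRESHOLD_GB:
        argv.append("--clear_cache")
    env = dict(os.environ)
    if gpu_index is not None:
        # Same effect as prefixing the shell command with CUDA_VISIBLE_DEVICES=<index>.
        env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    return argv, env


def launch(gpu_index: Optional[int], free_disk_gb: float,
           cwd: str = "motionagent") -> subprocess.CompletedProcess:
    """Start the app in the cloned repository (hypothetical default path)."""
    argv, env = build_launch(gpu_index, free_disk_gb)
    return subprocess.run(argv, env=env, cwd=cwd, check=True)


if __name__ == "__main__":
    argv, env = build_launch(gpu_index=0, free_disk_gb=80.0)
    print(" ".join(argv), "| CUDA_VISIBLE_DEVICES =", env.get("CUDA_VISIBLE_DEVICES"))
```

Building the command separately from running it lets you inspect or log the exact invocation before starting a long model download.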

Open the URL printed in the console in a browser to use the web UI. Input your ideas, and the tool will guide you through script refinement, image creation, video rendering, and music addition. For experimentation, a demo Jupyter notebook (motion_agent_demo.ipynb) is included for step-by-step exploration.

Why Choose MotionAgent?

In a landscape crowded with AI tools, MotionAgent stands out for its end-to-end integration and open-source nature. Unlike standalone video editors or generators, it combines narrative scripting with multimedia synthesis, reducing the need for multiple subscriptions or software switches. Because it builds on proven models like Qwen-7B-Chat (for natural language processing) and I2VGen-XL (for video diffusion), it can produce results competitive with commercial alternatives, with no software cost beyond the hardware.

Key advantages include:

- Versatility: Supports diverse genres, from dramatic shorts to animated explainers.

- Efficiency: Automates time-consuming tasks like storyboarding and scoring, ideal for rapid prototyping.

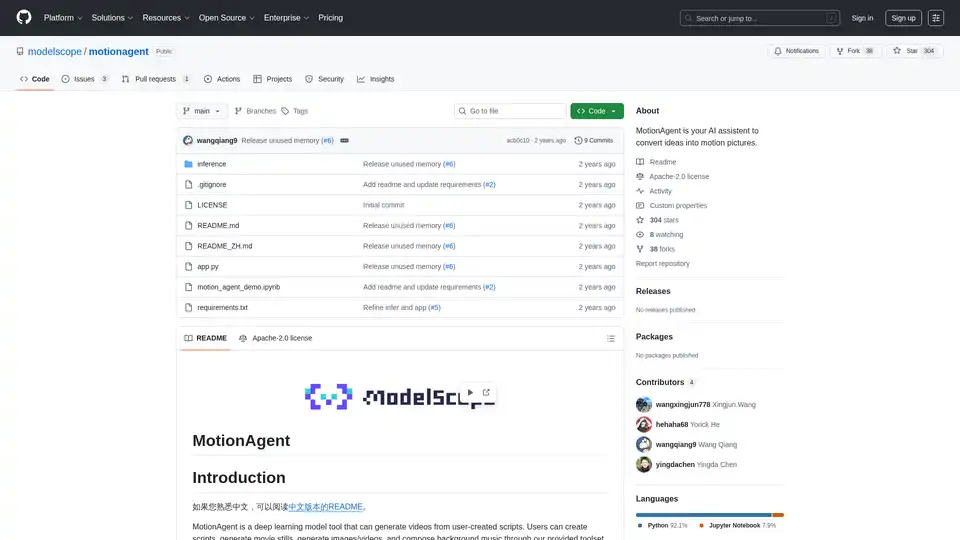

- Community Backing: At the time of writing, the GitHub repository had 304 stars and 38 forks, with contributions from developers like Wang Qiang and Yorick He. The project is released under the Apache 2.0 license and is actively maintained.

- Scalability: The current release targets a single GPU, though future updates could extend it to distributed computing.

Users report faster production cycles, generating a full short video in hours rather than days, which makes it a game-changer for indie creators facing tight deadlines.

Who is MotionAgent For?

MotionAgent is tailored for a wide audience in the creative and tech spaces:

- Filmmakers and Animators: Perfect for pre-production, turning raw concepts into polished demos.

- Content Creators and Marketers: Ideal for social media videos, ads, or educational clips where quick iteration is key.

- Educators and Students: Use it to visualize stories in classrooms or film studies projects.

- Developers and AI Enthusiasts: Leverage its open-source code to customize or integrate into larger pipelines.

It's especially valuable for those with access to high-end GPUs, though cloud alternatives like ModelScope Notebooks can bridge hardware gaps. Beginners may face a learning curve during setup, but the demo notebook eases onboarding.

Best Ways to Maximize MotionAgent's Potential

To get the most out of this tool:

- Refine Inputs: Provide detailed prompts for scripts (e.g., 'A sci-fi thriller set in 2050 with AI protagonists') to yield richer outputs.

- Iterate Visually: Generate multiple stills and select the best for video conversion to maintain consistency.

- Experiment with Music: Match audio styles to video tone—e.g., upbeat tracks for promotional content.

- Optimize Resources: On lower-end setups, downscale resolutions or use the `--clear_cache` flag to avoid running out of storage.
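The advice to generate several stills and keep the best is a best-of-N selection. This minimal sketch rests on stated assumptions. `fake_generate` and `keyword_score` are hypothetical stand-ins, and each "still" is represented as a comma-separated list of the visual elements it contains. A real workflow would call the image model instead and rely on a human reviewer or a separate scoring model.

```python
import random
from typing import Callable, List, Tuple

Candidate = Tuple[int, str]  # (seed, description of the generated still)


def best_of_n(prompt: str, n: int,
              generate: Callable[[str, int], str],
              score: Callable[[str], float]) -> Tuple[Candidate, List[Tuple[float, Candidate]]]:
    """Generate n stills with seeds 0..n-1 and keep the highest-scoring one (first wins ties)."""
    if n < 1:
        raise ValueError("n must be at least 1")
    scored: List[Tuple[float, Candidate]] = []
    for seed in range(n):
        still = generate(prompt, seed)
        scored.append((score(still), (seed, still)))
    best = max(scored, key=lambda pair: pair[0])[1]
    return best, scored


# Hypothetical stand-in for the image model: ignores the prompt and picks a
# seed-dependent random subset of visual elements.
ELEMENTS = ["detective", "neon", "rain", "crowd", "drone"]


def fake_generate(prompt: str, seed: int) -> str:
    rng = random.Random(seed)
    return ", ".join(e for e in ELEMENTS if rng.random() < 0.6)


def keyword_score(still: str, wanted: Tuple[str, ...] = ("detective", "neon", "rain")) -> float:
    """Fraction of wanted elements present: a crude proxy for consistency with the script."""
    present = set(still.split(", "))
    return sum(w in present for w in wanted) / len(wanted)


best, scored = best_of_n("AI detective in a neon-lit city, rain", 4, fake_generate, keyword_score)
print("picked seed", best[0], "->", best[1])
```

Recording the winning seed makes the chosen still reproducible when it is later passed to video conversion.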

Common use cases include creating explainer videos for tech products, animated book trailers, or even personal vlogs with AI-enhanced flair. For instance, a marketing team could input a product pitch, generate a scripted demo video with visuals and music, and deploy it across platforms in under a day.

Practical Value and Real-World Applications

MotionAgent democratizes video production by lowering barriers to entry. In an era where visual content drives engagement on platforms like YouTube and TikTok, as well as in corporate training, tools like this empower non-professionals to compete with studios. Its integration with ModelScope's ecosystem also opens doors to further AI resources, such as fine-tuning models or collaborating on extensions.

While it requires significant compute power, the payoff is immense: faster ideation, cost savings on stock assets, and endless creative possibilities. As AI video generation evolves, MotionAgent positions users at the forefront, ready to craft the next viral motion picture from a simple idea.

For more details, explore the GitHub repository at https://github.com/modelscope/motionagent, where you'll find the full codebase, requirements, and community discussions.

Best Alternative Tools to "MotionAgent"

MagicLight.ai is an AI-powered story video generator that effortlessly turns ideas into animated stories. It offers AI-powered script generation, seamless character consistency, and supports content of any genre up to 30 minutes long.

Shorts Generator is an AI-powered video generator that helps you create viral short videos in minutes. Transform your ideas into engaging content with its powerful AI features.

Turn PDFs, scripts, or audio into polished videos with Visla’s AI Video Generator, complete with voiceover, stock footage, and an optional AI Avatar. Create professional videos instantly without editing skills.

Generate video, images, music & sound with AI. Fast, realistic, fully controllable. Designed for creators, marketers, filmmakers, designers and teams.

Funy AI: Free AI Video Generator, Image to Video, Text to Video, AI Kissing Generator, Face Swap, AI Art Generator and AI Hairstyle! Free and No Sign Up!

All-in-One AI Creator Tools: Your One-Stop AI Platform for Text, Image, Video, and Digital Human Creation. Transform ideas into stunning visuals quickly with advanced AI features.

Discover Veo3.bot, a free Google Veo 3 AI video generator with native audio. Create high-quality 1080p videos from text or images, featuring precise lip sync and realistic physics, with no Gemini subscription needed.

Klyra AI is the ultimate all-in-one platform for creating videos, voiceovers, images, blogs, music, and more using advanced AI tools. Boost productivity with seamless content automation and powerful features.

Hypergro is an AI creative partner that turns ideas into high-performing image and video ads for Meta, YouTube, and Instagram in minutes. Ideal for marketers seeking time-saving, cost-effective ad creation with easy customization and multi-language support.

Discover Skelet AI, your all-in-one platform for generating AI-powered content, stunning images, and natural text-to-speech in 80+ languages. Free plan available with premium upgrades for HD features.

Experience the future of video creation with SuperMaker AI, an all-in-one AI Video Generator for AI music, image, and voice. Create cinema-quality videos effortlessly. Start free, no login required!

GenXi is an AI-powered platform that generates realistic images and videos from text. Easy to use with DALL App, ScriptToVid Tool, Imagine AI Tool, and AI Logo Maker. Try it free now!

StoryShort AI generates viral faceless videos for TikTok & YouTube Shorts using AI. Create and post daily videos without any effort.

CoCoClip.AI is an AI video editor for creating engaging content for YouTube Shorts, TikTok, and Instagram Reels. Effortlessly create captivating videos with AI.