Predibase

Overview of Predibase

Predibase: The Developer Platform for Fine-tuning and Serving LLMs

What is Predibase? Predibase is a comprehensive platform for developers to fine-tune and serve open-source Large Language Models (LLMs). Users can customize models that can outperform GPT-4 and serve them either in their own cloud or on Predibase's infrastructure.

How does Predibase work? Predibase provides end-to-end training and serving infrastructure, including reinforcement fine-tuning, LoRAX-powered multi-LoRA serving, and Turbo LoRA for faster throughput. It lets users train with significantly less data and serve models at high speed.
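To make the idea behind multi-LoRA serving concrete, here is a minimal sketch of the general LoRA technique applied to one linear layer. It is an illustration under stated assumptions, not LoRAX's implementation: a single base weight matrix is loaded once and shared, and each fine-tuned adapter adds only a small low-rank update, scaled by alpha / r, that is selected per request. The class names and the adapter IDs `support-bot` and `classifier` are hypothetical.

```python
"""Minimal sketch of multi-LoRA serving for a single linear layer.

Assumption: this shows the general LoRA idea (shared base weights plus
per-adapter low-rank updates). It is NOT LoRAX's actual implementation.
"""
from __future__ import annotations

from dataclasses import dataclass

Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


@dataclass
class LoraAdapter:
    a: Matrix     # shape (r, d_in): projects the input down to rank r
    b: Matrix     # shape (d_out, r): projects back up to the output size
    alpha: float  # scaling hyperparameter; the update is scaled by alpha / r

    @property
    def rank(self) -> int:
        return len(self.a)


class MultiLoraLayer:
    """Hypothetical layer whose base weights are shared by every adapter."""

    def __init__(self, base_weight: Matrix) -> None:
        self.base_weight = base_weight  # loaded once, reused for all requests
        self.adapters: dict[str, LoraAdapter] = {}

    def register(self, adapter_id: str, adapter: LoraAdapter) -> None:
        self.adapters[adapter_id] = adapter

    def forward(self, x: Vector, adapter_id: str | None = None) -> Vector:
        # Base output W0 x is computed identically for every request.
        out = matvec(self.base_weight, x)
        if adapter_id is None:
            return out
        adapter = self.adapters[adapter_id]
        # Low-rank update (alpha / r) * B (A x); cheap because r is small.
        delta = matvec(adapter.b, matvec(adapter.a, x))
        scale = adapter.alpha / adapter.rank
        return [o + scale * d for o, d in zip(out, delta)]


# Usage: two hypothetical adapters share one 2x2 identity base matrix.
layer = MultiLoraLayer(base_weight=[[1.0, 0.0], [0.0, 1.0]])
layer.register("support-bot", LoraAdapter(a=[[1.0, 1.0]], b=[[0.5], [0.0]], alpha=1.0))
layer.register("classifier", LoraAdapter(a=[[0.0, 1.0]], b=[[0.0], [2.0]], alpha=1.0))

print(layer.forward([1.0, 2.0]))                 # [1.0, 2.0] (base only)
print(layer.forward([1.0, 2.0], "support-bot"))  # [2.5, 2.0]
print(layer.forward([1.0, 2.0], "classifier"))   # [1.0, 6.0]
```

Because each adapter stores only the small `a` and `b` matrices, many adapters can sit alongside one copy of the base weights, which is the property that lets a multi-LoRA server host many fine-tuned variants on shared GPU memory.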

Key Features and Benefits:

- Reinforcement Fine-Tuning (RFT): Enables continuous learning through live reward functions, so task-specific models can reach high accuracy with limited training data and improve with each iteration. Reward functions can be adjusted in real time for immediate course correction.

- Turbo LoRA: Delivers 4x higher throughput than other serving solutions, providing ultra-fast serving without sacrificing accuracy.

- LoRAX-Powered Multi-LoRA Serving: Runs inference at massive scale and uses GPU capacity efficiently by serving hundreds of fine-tuned models on a single GPU.

- Effortless GPU Scaling: Dynamically scales GPUs in real time to absorb inference surges without slowdowns or wasted compute. Dedicated A100 and H100 GPUs can be reserved for enterprise-grade reliability.
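The reward functions that drive RFT are ordinary scoring functions. The sketch below is hypothetical: the `(prompt, completion, example) -> float` signature, the JSON `label` format, and the label values are assumptions for illustration, not Predibase's API. It also shows one common way a trainer can use such rewards, a GRPO-style normalization of rewards across several completions for the same prompt; the source does not say which algorithm Predibase uses.

```python
"""Hypothetical reward function for reinforcement fine-tuning (RFT).

Assumptions: the signature (prompt, completion, example) -> float and the
JSON output format are illustrative, not Predibase's API. The advantage
step is a GRPO-style group normalization, one common RFT approach.
"""
from __future__ import annotations

import json
import statistics


def classification_reward(prompt: str, completion: str, example: dict) -> float:
    """Score a completion that should be JSON like {"label": "..."}."""
    # `prompt` is unused here but kept so every reward shares one signature.
    try:
        parsed = json.loads(completion)
    except json.JSONDecodeError:
        return 0.0  # unparseable output earns nothing
    if not isinstance(parsed, dict) or "label" not in parsed:
        return 0.1  # valid JSON but wrong shape: small partial credit
    # Correct label earns full credit; a well-formed wrong label earns some.
    return 1.0 if parsed["label"] == example["label"] else 0.2


def group_advantages(rewards: list[float]) -> list[float]:
    """Normalize rewards within a group of completions for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0 for _ in rewards]  # no learning signal when all tie
    return [(r - mean) / std for r in rewards]


# Usage with a hypothetical prompt and four sampled completions.
prompt = "Classify this message: I want my money back."
example = {"label": "refund_request"}
completions = [
    '{"label": "refund_request"}',
    '{"label": "billing_question"}',
    '{"answer": "refund"}',
    "refund_request",
]
rewards = [classification_reward(prompt, c, example) for c in completions]
print(rewards)  # [1.0, 0.2, 0.1, 0.0]
print(group_advantages(rewards))
```

Graded partial credit, rather than a strict pass/fail, gives the trainer a signal even when few completions are fully correct, which is one reason reward functions can help when labeled data is limited. Adjusting the reward mid-training amounts to editing a function like `classification_reward`.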

Use Cases:

- Adapt and Serve Open-Source LLMs: Customize and deploy open-source LLMs for specific use cases on Predibase's platform.

- Precision Fine-Tuning: Use reward functions and minimal labeled data to train models that can outperform GPT-4.

- Seamless Enterprise-Grade Deployment: Deploy fine-tuned models without separate infrastructure for each model, keeping training and serving cost-effective.

Why is Predibase important?

Predibase addresses the cost and performance challenges of training and serving LLMs. It lets developers fine-tune models with less data, serve them faster, and scale efficiently.

Where can I use Predibase?

You can use Predibase in various scenarios, including:

- Customer Service: Build better products for your customers, leading to more transparent and efficient practices.

- Automation: Unlock new automation use cases that were previously uneconomical.

- Enterprise-Grade Applications: Deploy mission-critical AI applications with multi-region high availability, logging and metrics, and a 24/7 on-call rotation.

User Testimonials:

- Giuseppe Romagnuolo, VP of AI, Convirza: "Predibase provides the reliability we need for these high-volume workloads. The thought of building and maintaining this infrastructure on our own is daunting—thankfully, with Predibase, we don’t have to."

- Vlad Bukhin, Staff ML Engineer, Checkr: "By fine-tuning and serving Llama-3-8b on Predibase, we've improved accuracy, achieved lightning-fast inference and reduced costs by 5x compared to GPT-4."

- Paul Beswick, Global CIO, Marsh McLennan: "With Predibase, I didn’t need separate infrastructure for every fine-tuned model, and training became incredibly cost-effective—tens of dollars, not hundreds of thousands."

Predibase Platform Benefits:

- Most powerful way to train.

- Fastest way to serve.

- Smartest way to scale.

Pricing:

For detailed pricing information, please visit the Predibase Pricing page.

Best way to Fine-Tune and Serve LLMs? Predibase simplifies the process of fine-tuning and serving LLMs by offering a comprehensive platform with reinforcement fine-tuning, Turbo LoRA, and LoRAX. Its seamless enterprise-grade deployment, effortless GPU scaling, and flexible deployment options make it the best solution for developers looking to maximize the performance and efficiency of their AI models.

Best Alternative Tools to "Predibase"

BasicAI offers a leading data annotation platform and professional labeling services for AI/ML models, trusted by thousands in AV, ADAS, and Smart City applications. With 7+ years of expertise, it ensures high-quality, efficient data solutions.

SiliconFlow is a lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. Features H100 GPUs, per-second billing, and Python execution.

Stable Code Alpha is Stability AI's first LLM generative AI product for coding, designed to assist programmers and provide a learning tool for new developers.

Build task-oriented custom agents for your codebase that perform engineering tasks with high precision, using context from your own data. Build agents for use cases such as system design, debugging, integration testing, and onboarding.

Xander is an open-source desktop platform that enables no-code AI model training. Describe tasks in natural language for automated pipelines in text classification, image analysis, and LLM fine-tuning, ensuring privacy and performance on your local machine.

Dynamiq is an on-premise platform for building, deploying, and monitoring GenAI applications. Streamline AI development with features like LLM fine-tuning, RAG integration, and observability to cut costs and boost business ROI.

Unsloth AI offers open-source fine-tuning and reinforcement learning for LLMs like gpt-oss and Llama, boasting 30x faster training and reduced memory usage, making AI training accessible and efficient.

Label Studio is a flexible open-source data labeling platform for fine-tuning LLMs, preparing training data, and evaluating AI models. Supports various data types including text, images, audio and video.

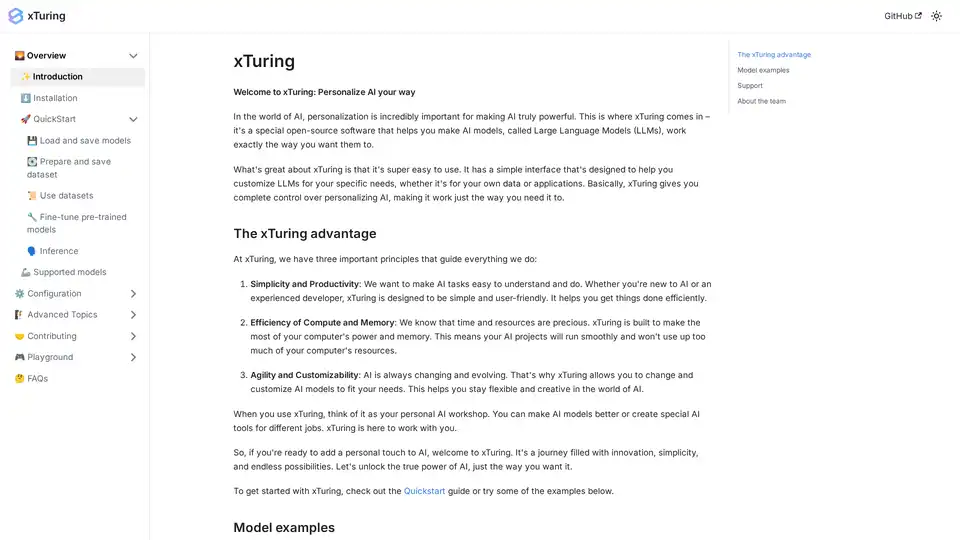

xTuring is an open-source library that empowers users to customize and fine-tune Large Language Models (LLMs) efficiently, focusing on simplicity, resource optimization, and flexibility for AI personalization.

UBIAI enables you to build powerful and accurate custom LLMs in minutes. Streamline your AI development process and fine-tune LLMs for reliable AI solutions.

Train, manage, and evaluate custom large language models (LLMs) quickly and efficiently on Entry Point AI, with no code required.

Metatext is a no-code NLP platform that enables users to create custom text classification and extraction models 10x faster using their own data and expertise.

Refact.ai, the #1 open-source AI agent for software development, automates coding, debugging, and testing with full context awareness. An open-source alternative to Cursor and Copilot.