SiliconFlow

Overview of SiliconFlow

What is SiliconFlow?

SiliconFlow is an AI infrastructure platform built for large language models (LLMs) and multimodal models. It lets developers and enterprises deploy, fine-tune, and run more than 200 optimized models with low-latency inference. Whether the workload is text generation, image processing, or video analysis, SiliconFlow offers one platform across serverless, reserved, and private-cloud deployments instead of a patchwork of separate tools. Integration goes through simple, OpenAI-compatible APIs, so teams can scale AI applications without managing the serving infrastructure themselves.

In an era where AI models are becoming increasingly complex, SiliconFlow addresses key pain points like latency, throughput, and cost predictability. From small development teams to large enterprises, it supports a wide range of use cases, ensuring high performance for both open-source and commercial LLMs. By focusing on speed and efficiency, SiliconFlow helps users accelerate their AI development, turning innovative ideas into deployable solutions faster than ever.

Core Features of SiliconFlow

SiliconFlow packs a robust set of features tailored for AI practitioners. Here's what makes it a go-to platform:

Optimized Model Library: Access to 200+ models, including popular LLMs like DeepSeek-V3.1, GLM-4.5, Qwen3 series, and multimodal options such as Qwen-Image and FLUX.1. These are pre-optimized for faster inference, supporting tasks from natural language processing to visual understanding.

High-Speed Inference: Achieve blazing-fast performance with lower latency and higher throughput. The platform's stack is engineered to handle both language and multimodal workloads efficiently, making it suitable for real-time applications.

Flexible Deployment Options: Choose from serverless inference for instant scalability, reserved GPUs for predictable performance, or custom private-cloud setups. No need to manage infrastructure—SiliconFlow handles the heavy lifting.

Fine-Tuning Capabilities: Adapt base models to your specific data with built-in monitoring and elastic compute resources. This feature is invaluable for customizing AI behavior without deep expertise in model training.

Privacy and Control: Your data remains yours, with no storage on the platform. Enjoy full control over model deployment, scaling, and fine-tuning, avoiding vendor lock-in.

Simplicity in Integration: A single API endpoint that's fully compatible with OpenAI standards simplifies development. Developers can switch or integrate models effortlessly, reducing onboarding time.

These features ensure SiliconFlow isn't just another hosting service—it's a comprehensive ecosystem for AI inference that prioritizes developer needs like reliability and cost-efficiency.

How Does SiliconFlow Work?

Getting started with SiliconFlow is straightforward, aligning with modern DevOps practices. The platform operates on a unified inference engine that abstracts away the complexities of model serving.

First, sign up for a free account and explore the model catalog. Select from LLMs for text-based tasks or multimodal models for image and video generation. For instance, to run an LLM like DeepSeek-V3, you simply call the API with your prompt—no setup required in serverless mode.
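As a minimal sketch of what "calling the API with your prompt" can look like, the snippet below builds an OpenAI-style chat completion request using only the Python standard library. The base URL, the model ID, and the helper names `build_chat_request` and `send` are assumptions made for illustration; confirm the actual values in the SiliconFlow documentation before use.

```python
import json
import urllib.request

# Assumed values for illustration: confirm both in the SiliconFlow docs.
BASE_URL = "https://api.siliconflow.com/v1"
MODEL = "deepseek-ai/DeepSeek-V3"


def build_chat_request(prompt, api_key, model=MODEL, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the generated text under choices[0].message.
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes the payload easy to inspect or log before any network call is made. A call would then look like `send(build_chat_request("Hello", api_key="<your key>"))`.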

Here's a step-by-step breakdown:

Model Selection: Browse the extensive library, which includes advanced models like Kimi-K2-Instruct and Qwen3-Coder for specialized coding tasks.

Deployment: Choose serverless for pay-per-use billing, which suits prototyping, or reserve GPUs for production workloads that need stable latency.

Inference Execution: Send requests via the OpenAI-compatible API. The platform optimizes routing to deliver results with minimal delay.

Fine-Tuning Process: Upload your dataset, configure parameters through the dashboard, and let SiliconFlow's elastic compute handle the training. Monitor progress with integrated tools to iterate quickly.

Scaling and Monitoring: As demands grow, seamlessly scale without interruptions. The system provides metrics on throughput and costs for informed decision-making.

This workflow minimizes friction, so teams can focus on innovation rather than operations. For multimodal tasks, such as editing images with Qwen-Image-Edit, the process mirrors LLM inference but also accepts visual inputs, using the same high-speed inference stack for modalities beyond text.
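The throughput metrics mentioned in the scaling step can also be approximated from the client side. The sketch below times a single call and derives output tokens per second. It assumes the response is a dict carrying an OpenAI-style `usage.completion_tokens` field; the helper names are hypothetical and not part of any SiliconFlow SDK.

```python
import time


def timed_call(fn, clock=time.perf_counter):
    """Run fn() and return (result, elapsed_seconds)."""
    start = clock()
    result = fn()
    return result, clock() - start


def tokens_per_second(response, elapsed_seconds):
    """Output tokens per second, read from an OpenAI-style usage block."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return response["usage"]["completion_tokens"] / elapsed_seconds
```

The `clock` parameter exists so the timing logic can be checked with a fake clock. Note that a client-side figure includes network time, so it will read lower than server-side throughput.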

Main Use Cases and Practical Value

SiliconFlow shines in scenarios where fast, reliable AI inference is crucial. Developers building chatbots, content generators, or recommendation systems can use its LLMs for accurate, low-latency responses. Enterprises in media or e-commerce benefit from multimodal features, such as image-to-video generation with Wan2.2 models, improving user experiences without hefty compute investments.

Key Use Cases:

- AI-Powered Applications: Integrate LLMs into apps for real-time natural language understanding, such as virtual assistants or automated customer support.

- Creative Workflows: Use multimodal models for image generation, editing, and video synthesis, speeding up design processes for artists and marketers.

- Research and Development: Fine-tune models on proprietary data for domain-specific AI, like financial analysis or medical imaging.

- High-Volume Inference: Reserved options suit scheduled jobs, such as batch processing large datasets for machine learning pipelines.

The practical value lies in cost-effectiveness (in serverless mode you pay only for what you use) and in performance gains over self-managed setups. Users report up to a 50% reduction in inference times, which makes it a sensible choice for budget-conscious teams aiming to stay competitive in AI-driven markets.

Who is SiliconFlow For?

This platform caters to a broad audience:

- Developers and Startups: Those needing quick prototyping without infrastructure costs.

- AI Researchers: Fine-tuning experts requiring elastic resources for experiments.

- Enterprises: Large teams handling high-scale inference with privacy guarantees.

- Multimodal Innovators: Creators in computer vision or generative AI pushing boundaries in image and video tech.

If you're tired of fragmented tools and seeking a reliable, scalable AI backbone, SiliconFlow is your ally. It's particularly valuable for teams transitioning from local setups to cloud-based inference, offering a smooth learning curve.

Why Choose SiliconFlow Over Competitors?

In the crowded AI infrastructure space, SiliconFlow differentiates through its developer-centric design. Unlike rigid cloud providers, it offers flexibility without lock-in, combined with superior speed for multimodal tasks. Pricing is transparent and usage-based, avoiding surprise bills, while the OpenAI compatibility eases migration from existing workflows.

User feedback highlights ease of integration: "The API simplicity saved us weeks in integration," notes one developer. On reliability, the platform advertises 99.9% uptime with built-in redundancy. Because it does not store user data, it is positioned to fit GDPR and enterprise security requirements, which builds trust.

Frequently Asked Questions

What Types of Models Can I Deploy on SiliconFlow?

SiliconFlow supports a vast array of LLMs (e.g., DeepSeek, Qwen3) and multimodal models (e.g., FLUX.1 for images, Wan2.2 for videos), covering text, image, and video domains.

How Does Pricing Work?

Pricing is flexible: serverless inference is billed per token or per use, while reserved GPUs are billed at fixed rates, which keeps costs predictable for sustained volume.
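To make the trade-off between the two pricing modes concrete, the sketch below computes a serverless bill and the monthly token volume at which a fixed reserved fee would cost the same. Every price in it is a hypothetical placeholder, not a SiliconFlow rate, and the function names are illustrative.

```python
def serverless_cost(tokens, price_per_million):
    """Pay-per-token cost for a given number of tokens."""
    return tokens / 1_000_000 * price_per_million


def break_even_tokens(reserved_monthly_cost, price_per_million):
    """Monthly token volume at which reserved and serverless cost the same."""
    if price_per_million <= 0:
        raise ValueError("price_per_million must be positive")
    return reserved_monthly_cost / price_per_million * 1_000_000


# Hypothetical numbers: $0.50 per million tokens, $2,000 per month reserved.
volume = break_even_tokens(reserved_monthly_cost=2000, price_per_million=0.50)
print(f"Break-even volume: {volume:,.0f} tokens per month")
```

Below the break-even volume, pay-per-token is cheaper under these assumptions; above it, the fixed reserved rate is.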

Can I Fine-Tune Models?

Yes, with easy data upload and monitoring—no infrastructure management needed.
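Before uploading, training data usually needs to be serialized into a line-oriented file. The sketch below writes prompt and answer pairs as JSON Lines in an OpenAI-style chat format. That format is an assumption here, since this page does not specify what SiliconFlow expects, so check the fine-tuning documentation for the required schema. The helper name `to_jsonl` is hypothetical.

```python
import json


def to_jsonl(examples):
    """Serialize (prompt, answer) pairs as chat-format JSON Lines.

    The record layout (a "messages" list with user and assistant turns)
    is an assumed, OpenAI-style schema, not a confirmed SiliconFlow one.
    """
    lines = []
    for prompt, answer in examples:
        record = {
            "messages": [
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    # One JSON object per line, each terminated by a newline.
    return "".join(line + "\n" for line in lines)
```

The returned string can be written to a `.jsonl` file with `open(path, "w", encoding="utf-8")` and uploaded from there.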

What Support is Available?

Comprehensive docs, API references, and sales contact for enterprises, plus community resources.

Is It Compatible with OpenAI?

Yes. The API follows the OpenAI standard, so you can keep the same SDKs and request formats and point them at SiliconFlow's endpoint.
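In practice, compatibility of this kind usually means changing only the API key and base URL passed to the OpenAI client. The sketch below shows that pattern. The default base URL and the environment variable names `SILICONFLOW_API_KEY` and `SILICONFLOW_BASE_URL` are assumptions chosen for illustration, and `make_client` requires the `openai` package (version 1.0 or later).

```python
import os

# Assumed default: confirm the base URL in the SiliconFlow docs.
DEFAULT_BASE_URL = "https://api.siliconflow.com/v1"


def client_settings(env=None):
    """Collect the two settings that differ from a stock OpenAI setup."""
    env = os.environ if env is None else env
    return {
        "api_key": env["SILICONFLOW_API_KEY"],  # hypothetical variable name
        "base_url": env.get("SILICONFLOW_BASE_URL", DEFAULT_BASE_URL),
    }


def make_client(env=None):
    """Create an OpenAI SDK client that talks to the configured base URL."""
    from openai import OpenAI  # imported lazily; install with: pip install openai

    return OpenAI(**client_settings(env))
```

With a client in hand, existing code such as `client.chat.completions.create(model=..., messages=[...])` should work unchanged apart from the model ID.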

Ready to supercharge your AI projects? Start with SiliconFlow's free tier today and experience the difference in speed and simplicity.

Best Alternative Tools to "SiliconFlow"

Nexa SDK enables fast and private on-device AI inference for LLMs, multimodal, ASR & TTS models. Deploy to mobile, PC, automotive & IoT devices with production-ready performance across NPU, GPU & CPU.

Awan LLM provides an unlimited, unrestricted, and cost-effective LLM Inference API platform. It allows users and developers to access powerful LLM models without token limitations, ideal for AI agents, roleplay, data processing, and code completion.

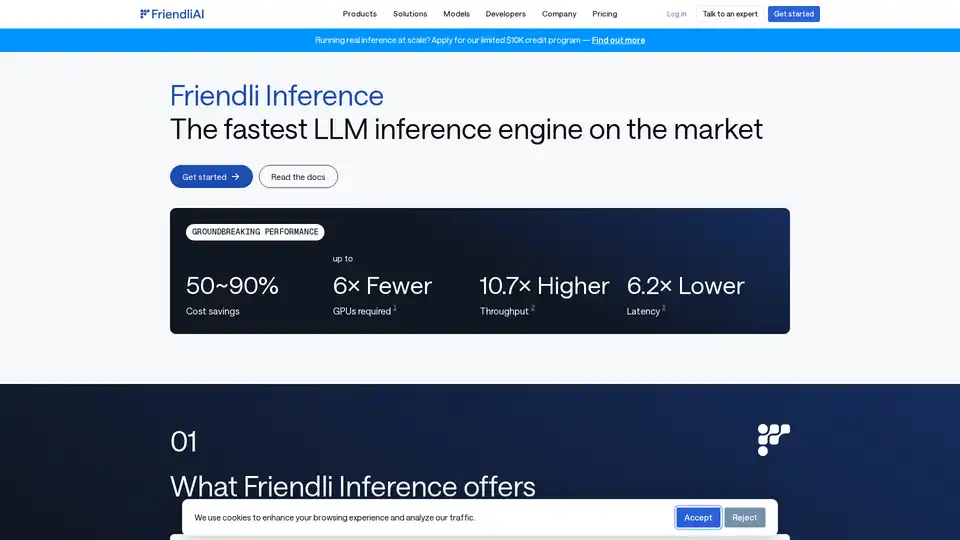

Friendli Inference bills itself as the fastest LLM inference engine, optimized for speed and cost-effectiveness, and claims to cut GPU costs by 50-90% while delivering high throughput and low latency.

Awan LLM offers an unrestricted and cost-effective LLM inference API platform with unlimited tokens, ideal for developers and power users. Process data, complete code, and build AI agents without token limits.

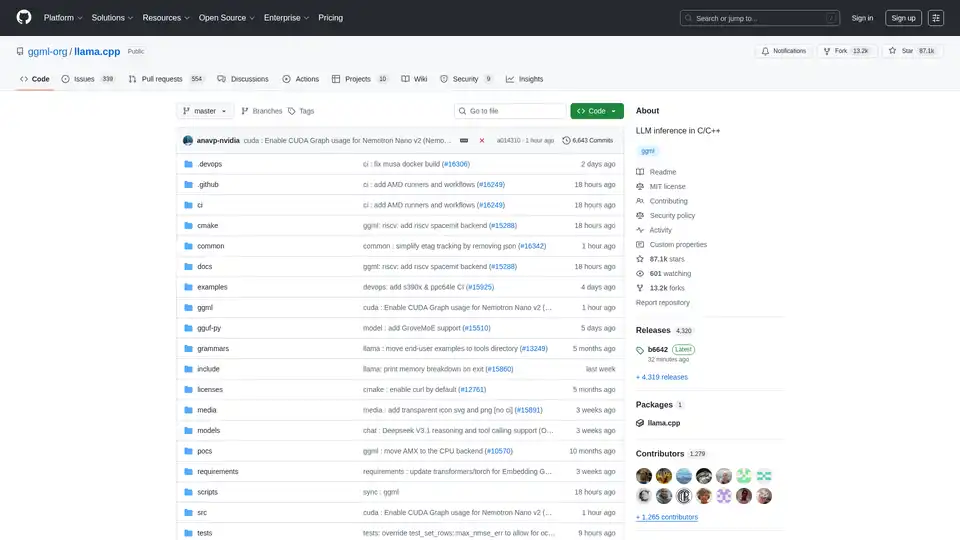

llama.cpp is a C/C++ library for efficient LLM inference, optimized for diverse hardware and supporting quantization, CUDA, and GGUF models. It suits both local and cloud deployment.

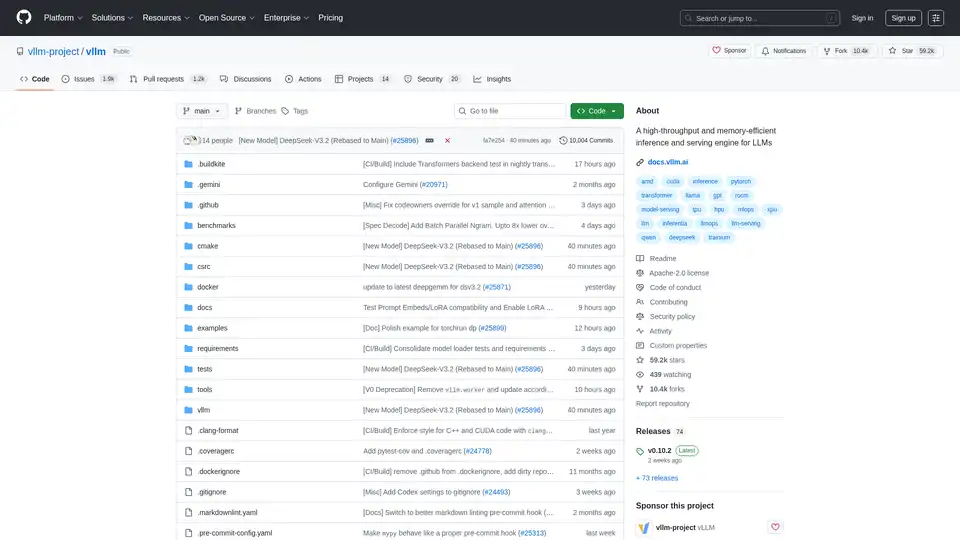

vLLM is a high-throughput and memory-efficient inference and serving engine for LLMs, featuring PagedAttention and continuous batching for optimized performance.

JudgeAI is the world's first independent AI arbitration system, offering unbiased resolutions for economic disputes using advanced legal algorithms and multimodal AI for evidence analysis.

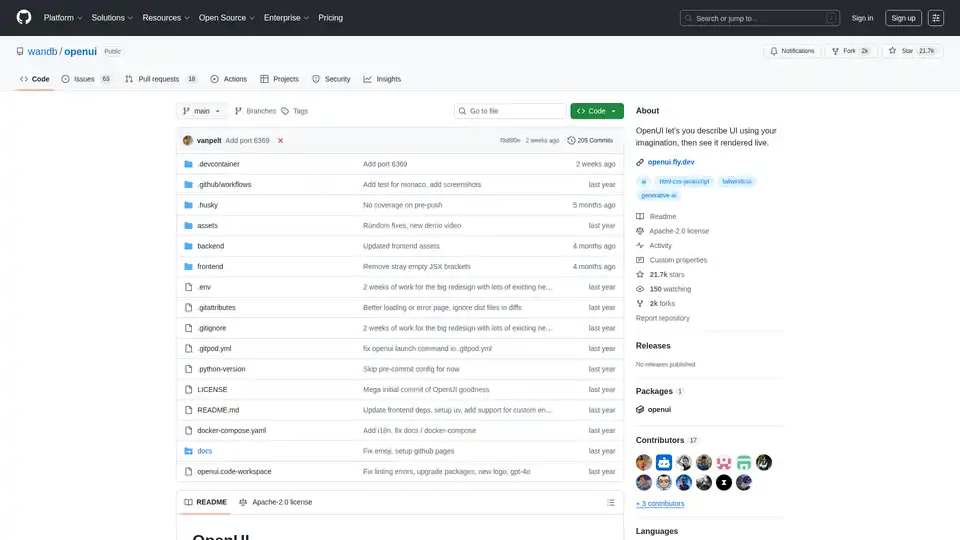

OpenUI is an open-source tool that lets you describe UI components in natural language and renders them live using LLMs. Convert descriptions to HTML, React, or Svelte for fast prototyping.

Falcon LLM is an open-source generative large language model family from TII, featuring models like Falcon 3, Falcon-H1, and Falcon Arabic for multilingual, multimodal AI applications that run efficiently on everyday devices.

mistral.rs is a blazingly fast LLM inference engine written in Rust, supporting multimodal workflows and quantization. Offers Rust, Python, and OpenAI-compatible HTTP server APIs.

Oda Studio offers AI-powered solutions for complex data analysis, transforming unstructured data into actionable insights for construction, finance, and media industries. Experts in Vision-Language AI & knowledge graphs.

Mindgard provides automated red teaming and security testing for AI systems, helping teams identify and resolve AI-specific risks in their models and applications.

Beyond Limits offers Industrial Enterprise AI powered by Hybrid AI: secure, scalable solutions for industries such as oil and gas, manufacturing, and healthcare, aimed at optimizing operations and supporting data-driven decisions.

LM-Kit provides enterprise-grade toolkits for local AI agent integration, combining speed, privacy, and reliability to power next-generation applications. Leverage local LLMs for faster, cost-efficient, and secure AI solutions.