Prompt Token Counter for OpenAI Models

Prompt Token Counter: A Simple Tool for OpenAI Model Token Estimation

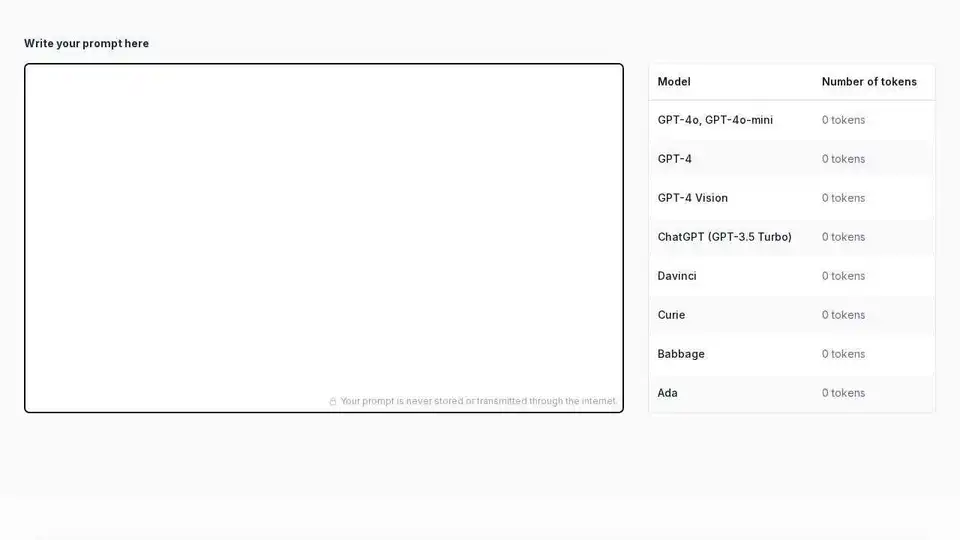

What is Prompt Token Counter?

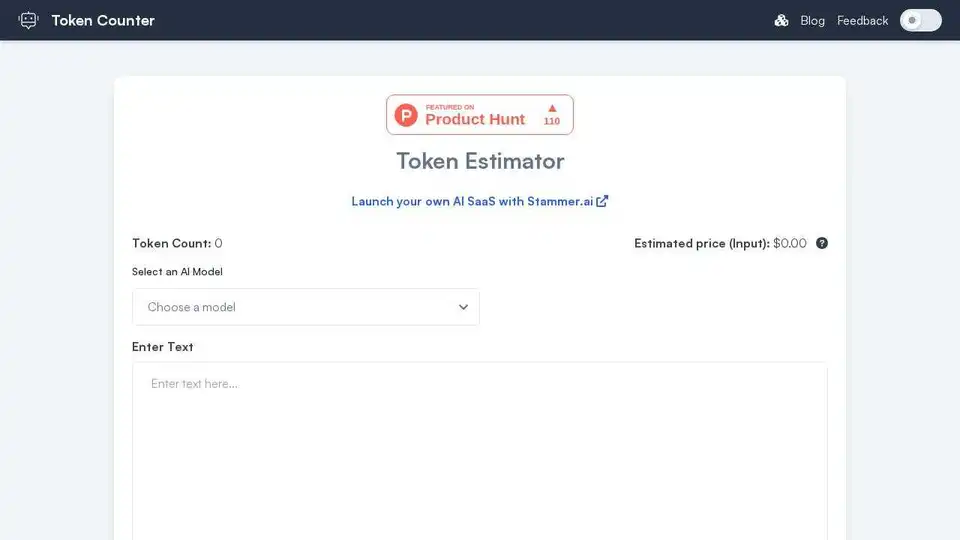

Prompt Token Counter is an online tool that helps users estimate how many tokens a prompt will consume with OpenAI models such as GPT-3.5 and GPT-4. Understanding token usage is crucial for staying within model limits and managing costs effectively.

How does Prompt Token Counter work?

Type or paste your prompt into the provided text area. The tool calculates and displays the token count for several OpenAI models, including GPT-4o, GPT-4, ChatGPT (GPT-3.5 Turbo), Davinci, Curie, Babbage, and Ada. The counter updates in real time as you type, so you see the effect of each change to the prompt immediately.
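The page does not describe how the tool computes its counts, so the following is only a rough stand-in: a minimal sketch that estimates tokens from character count, assuming the common rule of thumb of about four characters per token for English text. The names `CHARS_PER_TOKEN` and `estimate_tokens` are hypothetical. For exact, per-model counts, a real tokenizer is needed.

```python
import math

# Rule-of-thumb assumption: roughly 4 characters per token for English text.
# Real tokenizers will give different numbers, especially for code or other languages.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate based only on the length of the text."""
    if not text:
        return 0
    return math.ceil(len(text) / CHARS_PER_TOKEN)


prompt = "Write a short poem about the ocean"
print(f"About {estimate_tokens(prompt)} tokens (heuristic estimate)")
```

An estimate like this is useful for quick sanity checks, but it cannot show the per-model differences that a tokenizer-based counter reports.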

Why is Prompt Token Counter important?

- Stay within model limits: OpenAI models have limits on the number of tokens they can process in a single interaction. Exceeding these limits can result in errors or truncated outputs.

- Cost control: OpenAI charges based on token usage. Estimating token count helps you manage costs and avoid unexpected expenses.

- Efficient prompt engineering: Understanding token counts allows you to craft concise and effective prompts that maximize the model's capabilities without exceeding limits.
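As a rough illustration of the cost point above, the sketch below turns token counts into a dollar estimate. The function name `estimate_cost` and the per-million-token prices are hypothetical placeholders, not OpenAI's actual rates; it also assumes input and output tokens are priced separately, so substitute current values from the official pricing page.

```python
def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Estimate the cost in dollars of one request from its token counts."""
    input_cost = prompt_tokens / 1_000_000 * input_price_per_million
    output_cost = completion_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


# Placeholder prices (dollars per million tokens), chosen only for illustration.
cost = estimate_cost(1_000, 500,
                     input_price_per_million=2.0,
                     output_price_per_million=8.0)
print(f"Estimated cost: ${cost:.4f}")
```

Multiplying the per-request estimate by the expected number of requests gives a first approximation of a monthly budget.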

Key Features:

- Real-time token counting: The token count updates instantly as you type, providing immediate feedback.

- Support for multiple OpenAI models: The tool covers a wide range of OpenAI models, from GPT-4o, GPT-4, and ChatGPT (GPT-3.5 Turbo) to older models such as Davinci and Ada.

- Privacy-focused: Your prompts are never stored or transmitted over the internet, ensuring your privacy.

How to Use Prompt Token Counter:

- Visit the Prompt Token Counter website.

- Type or paste your prompt into the text area.

- Observe the token count for your desired OpenAI model.

- Adjust your prompt as needed to stay within token limits.
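The last step above, adjusting a prompt to fit, comes down to simple arithmetic once you have a token count. Here is a minimal sketch, assuming the model's limit covers the prompt and the generated output together. The function name `check_budget` is hypothetical, and the limit of 8,192 tokens is used purely as an illustrative value; check the documentation for the model you actually use.

```python
def check_budget(prompt_tokens: int, context_limit: int,
                 reserved_for_output: int = 0) -> int:
    """Return the tokens left after the prompt and the reserved output space.

    A negative result is the number of tokens by which the prompt must shrink.
    """
    return context_limit - reserved_for_output - prompt_tokens


# Illustrative numbers only: a 7,500-token prompt, an 8,192-token limit,
# and 1,000 tokens set aside for the model's reply.
remaining = check_budget(prompt_tokens=7_500, context_limit=8_192,
                         reserved_for_output=1_000)
if remaining < 0:
    print(f"Over budget: trim about {-remaining} tokens from the prompt.")
else:
    print(f"Within budget: {remaining} tokens to spare.")
```

Reserving space for the reply matters because a prompt that fits on its own can still leave too little room for a complete answer.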

Understanding Tokens:

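In natural language processing, a token is the smallest unit of text that a model processes. Tokens can be words, characters, or subwords, depending on the tokenization method used. OpenAI models use byte pair encoding (BPE), a subword method, and different model families use different vocabularies, so the same text can produce a different token count from one model to another. This is why the tool reports a separate count for each model.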
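To make the three granularities concrete, the sketch below splits text into words, characters, and subwords. The subword splitter is a toy greedy longest-match over a small hypothetical vocabulary (`TOY_VOCAB`); it is not byte pair encoding and not any OpenAI tokenizer, and is meant only to show why token counts depend on the method and vocabulary.

```python
def word_tokens(text: str) -> list[str]:
    """Split on whitespace: one token per word."""
    return text.split()


def char_tokens(text: str) -> list[str]:
    """One token per character, including spaces."""
    return list(text)


def subword_tokens(word: str, vocab: set[str]) -> list[str]:
    """Greedily take the longest vocabulary piece at each position.

    Characters not covered by any vocabulary piece become single-character tokens.
    """
    tokens = []
    i = 0
    while i < len(word):
        for j in range(len(word), i, -1):  # try the longest candidate first
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            tokens.append(word[i])  # fallback: emit one character
            i += 1
    return tokens


# Hypothetical vocabulary, chosen only for this illustration.
TOY_VOCAB = {"token", "ization", "count", "er"}

print(word_tokens("count tokens"))
print(char_tokens("count"))
print(subword_tokens("tokenization", TOY_VOCAB))
print(subword_tokens("counters", TOY_VOCAB))
```

The same word yields one token at word level, several at subword level, and one per letter at character level, which is the reason a count is only meaningful for a specific tokenizer.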

What is a Prompt?

A prompt is the input you provide to a language model to initiate a task or generate a response. It sets the context for the model's output. For example, “Write a short poem about the ocean” is a prompt. A well-crafted prompt is clear, concise, and includes all the information the model needs to produce the desired output; its quality and specificity greatly influence the result.

Staying Within Token Limits:

Staying within token limits is crucial for several reasons:

- Preventing Errors: Exceeding token limits can cause errors or incomplete responses from the model.

- Managing Costs: OpenAI charges based on token usage, so staying within limits helps control expenses.

- Optimizing Performance: Shorter, more focused prompts can sometimes yield better results than lengthy, rambling ones.

By using Prompt Token Counter, you can ensure your prompts are optimized for cost and performance when using OpenAI models.

Best Alternative Tools to "Prompt Token Counter for OpenAI Models"

AskCodi is an AI-powered API platform that simplifies code development by providing access to multiple AI models like GPT-4, Claude, and Gemini through a single interface. Streamline your workflow and build smarter applications.

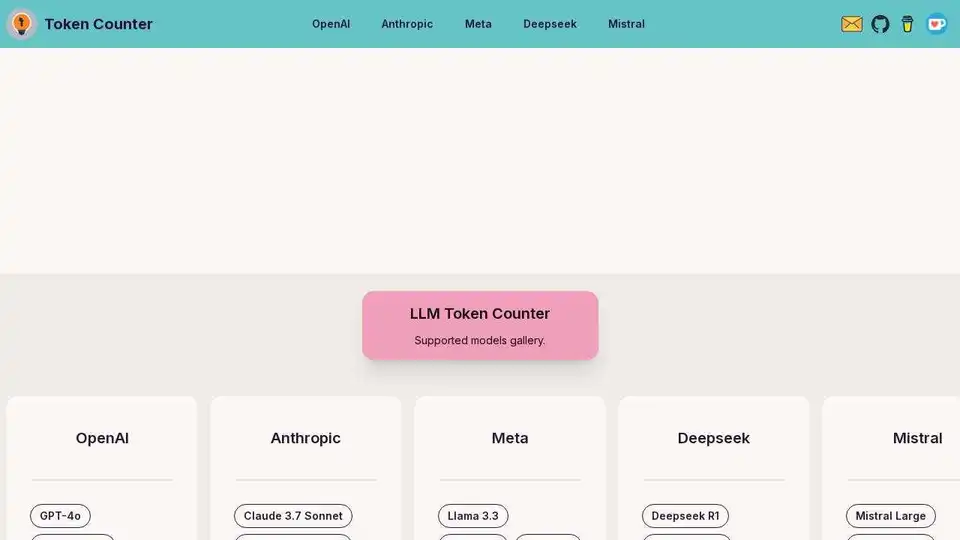

Calculate tokens of prompt for all popular LLMs including GPT-4, Claude-3, Llama-3 using browser-based Tokenizer.

16x Prompt is an AI coding tool for managing code context, customizing prompts, and shipping features faster with LLM API integrations. Ideal for developers seeking efficient AI-assisted coding.

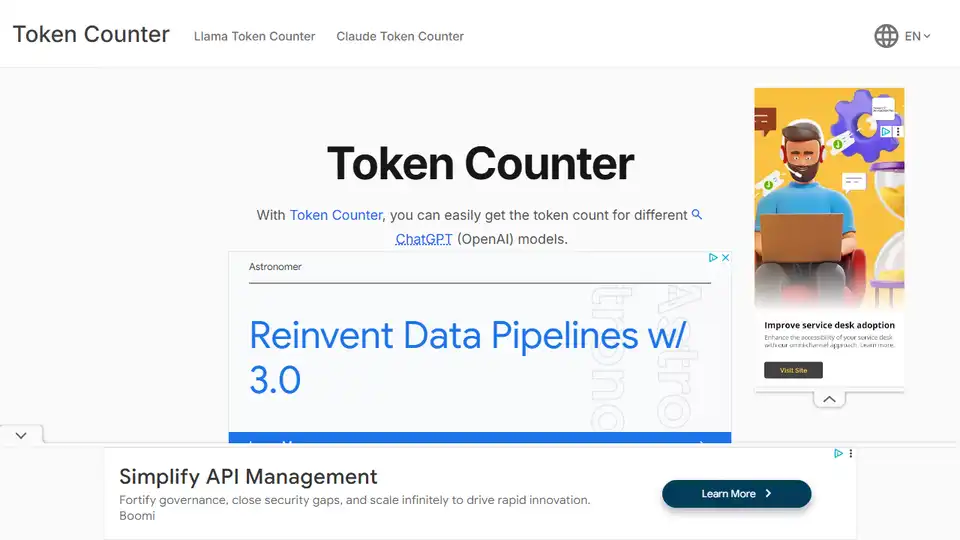

Token Counter: Count tokens, estimate costs for any AI model. Optimize prompts, manage budget, maximize efficiency in AI interactions.

Token Counter helps estimate AI model costs for ChatGPT & GPT-3. Input text, get token count & cost, boosting efficiency & preventing wastage.

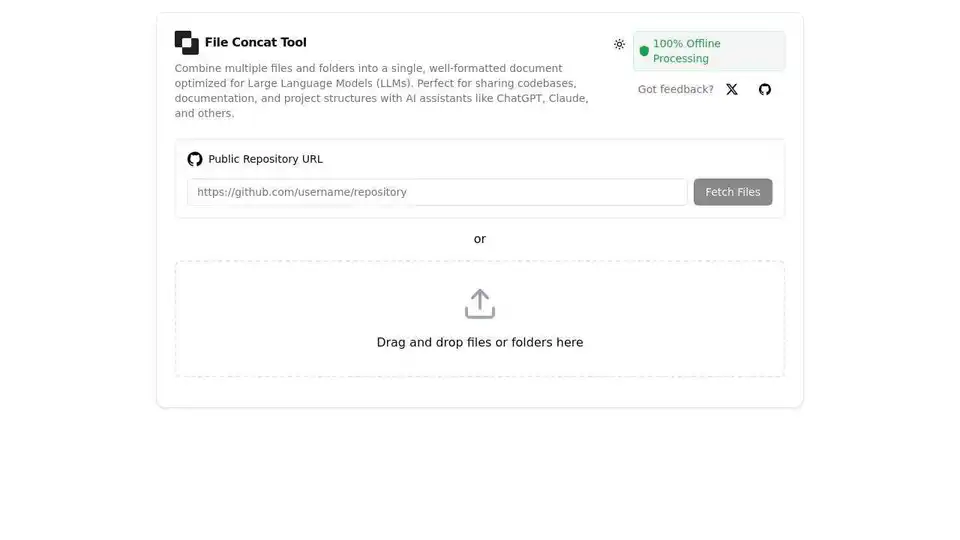

Free online file concatenation tool for AI assistants. Combine files into optimized format for ChatGPT, Claude, Gemini & other LLMs.

Deck AI is a powerful Clash Royale deck-builder that creates the best decks by specializing its results to your cards, card levels, arena, and league.

ChatLLaMA is a LoRA-trained AI assistant based on LLaMA models, enabling custom personal conversations on your local GPU. Features desktop GUI, trained on Anthropic's HH dataset, available for 7B, 13B, and 30B models.

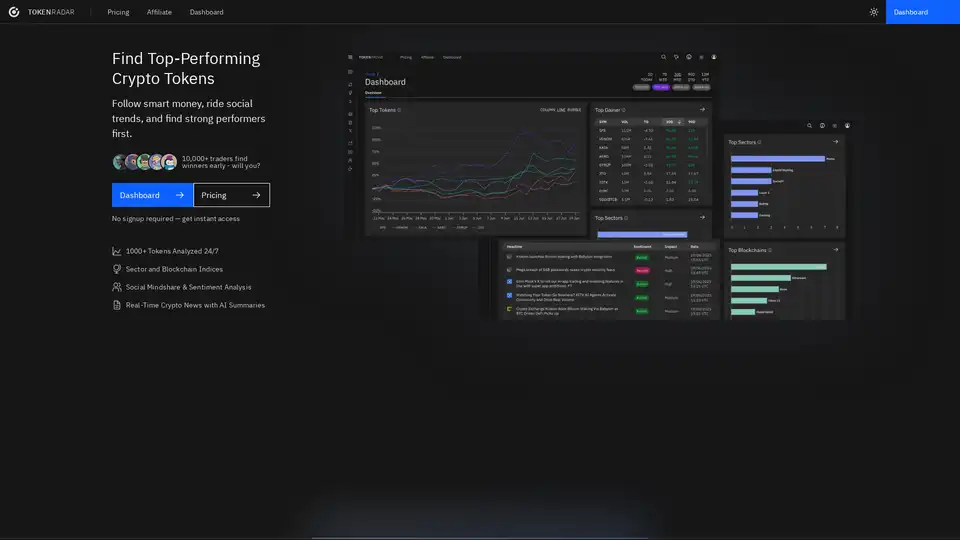

Token Radar is an AI-powered platform for tracking top-performing crypto tokens, analyzing sectors and blockchains, with real-time sentiment and social mindshare insights to spot trends early.

AiPrice offers an API for calculating OpenAI token pricing. Estimate prompt token count accurately for various LLM models. Free plan available, no credit card needed.

Awan LLM offers an unrestricted and cost-effective LLM inference API platform with unlimited tokens, ideal for developers and power users. Process data, complete code, and build AI agents without token limits.

Awan LLM provides an unlimited, unrestricted, and cost-effective LLM Inference API platform. It allows users and developers to access powerful LLM models without token limitations, ideal for AI agents, roleplay, data processing, and code completion.

Explore Grok 4 Code, xAI's AI coding assistant, boasting a 131k token context window. Features advanced code generation, debugging, and seamless IDE integration for developers.

Email 5 is rebuilding email from the protocol up with HTML5-native, token-powered, and privacy-first design. Built on open standards for a resilient and future-proof messaging solution.