Awan LLM

Overview of Awan LLM

Awan LLM: Unleash the Power of Unlimited LLM Inference

What is Awan LLM? Awan LLM is a cutting-edge Large Language Model (LLM) inference API platform designed for power users and developers who need unrestricted access at a predictable cost. Instead of charging per token like traditional providers, Awan LLM offers unlimited tokens, so you can scale your AI applications without worrying about escalating costs.

Key Features and Benefits:

- Unlimited Tokens: Say goodbye to token limits and hello to boundless creativity and processing power. There is no cap on the total number of tokens you send and receive, although each individual request is still bounded by the model's context length.

- Unrestricted Access: Use LLMs without constraints or censorship and explore the full potential of AI.

- Cost-Effective: Enjoy predictable monthly pricing instead of unpredictable per-token charges. Perfect for projects with high usage demands.
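Whether flat monthly pricing actually saves money depends on volume: a flat fee F beats a per-token rate of p per million tokens once monthly usage exceeds F / p million tokens. The minimal Python sketch below works through that arithmetic. The fee and rate are placeholder inputs chosen for illustration, not Awan LLM's or any other provider's actual prices.

```python
def break_even_tokens(monthly_fee: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which per-token billing costs as much as a flat fee.

    Both arguments are hypothetical inputs; substitute real prices from the
    plans being compared.
    """
    if price_per_million_tokens <= 0:
        raise ValueError("per-token price must be positive")
    return monthly_fee / price_per_million_tokens * 1_000_000


def cheaper_option(tokens_per_month: float, monthly_fee: float,
                   price_per_million_tokens: float) -> str:
    """Return "flat" if the flat fee is strictly cheaper, else "per-token"."""
    per_token_cost = tokens_per_month / 1_000_000 * price_per_million_tokens
    return "flat" if monthly_fee < per_token_cost else "per-token"


if __name__ == "__main__":
    # Illustrative numbers only: a fee of 20 per month vs. 0.50 per million
    # tokens (same currency).
    print(break_even_tokens(20.0, 0.50))              # 40000000.0
    print(cheaper_option(100_000_000, 20.0, 0.50))    # flat
```

With these placeholder numbers, per-token billing is cheaper below 40 million tokens a month and the flat plan is cheaper above it.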

How does Awan LLM work?

Awan LLM owns its datacenters and GPUs, which allows it to provide unlimited token generation without the high costs associated with renting resources from other providers.

Use Cases:

- AI Assistants: Provide unlimited assistance to your users with AI-powered support.

- AI Agents: Enable your agents to work on complex tasks without token concerns.

- Roleplay: Immerse yourself in uncensored and limitless roleplaying experiences.

- Data Processing: Process massive datasets efficiently and without restrictions.

- Code Completion: Accelerate code development with unlimited code completions.

- Applications: Create profitable AI-powered applications without paying per-token costs.

How to use Awan LLM?

- Sign up for an account on the Awan LLM website.

- Check the Quick-Start page to get familiar with the API endpoints.
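Once you have an API key, a request is an ordinary authenticated HTTP POST. The Python sketch below builds a single-turn chat request using only the standard library. It assumes an OpenAI-style chat-completions interface; the endpoint URL, the model name, and the JSON payload shape are all assumptions here, so confirm each of them on the Quick-Start page before use.

```python
import json
import urllib.request

# ASSUMPTION: the endpoint URL, model name, and payload shape follow the common
# OpenAI-style chat-completions convention. Verify all three against the
# Awan LLM Quick-Start page.
API_URL = "https://api.awanllm.com/v1/chat/completions"  # hypothetical
MODEL = "Meta-Llama-3-8B-Instruct"                       # hypothetical


def build_chat_request(api_key: str, prompt: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_chat_request("YOUR_API_KEY", "Hello!")
    print(req.get_method(), req.full_url)
    # Uncomment once the endpoint and key are confirmed:
    # print(send(req))
```

Keeping request construction separate from sending makes the payload easy to inspect before any network call is made.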

Why choose Awan LLM?

Awan LLM stands out from other LLM API providers due to its unique approach to pricing and resource management. By owning its infrastructure, Awan LLM can provide unlimited token generation at a significantly lower cost than providers that charge based on token usage. This makes it an ideal choice for developers and power users who need high-volume LLM inference without per-token costs that grow with usage.

Frequently Asked Questions:

- How can you provide unlimited token generation? Awan LLM owns its datacenters and GPUs, so it avoids the cost of renting compute from other providers.

- How do I contact Awan LLM support? Contact them at contact.awanllm@gmail.com or use the contact button on the website.

- Do you keep logs of prompts and generations? No. Awan LLM does not log any prompts or generations, as explained in its Privacy Policy.

- Is there a hidden limit imposed? Request rate limits are explained on the Models and Pricing page.
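Because tokens are unlimited but request rates are not, high-volume clients typically retry rate-limited requests with exponential backoff. The Python sketch below assumes that rate-limited requests are rejected with the standard HTTP 429 (Too Many Requests) status; the retry count and delays are illustrative defaults, not values published by Awan LLM. Check the Models and Pricing page for the actual limits.

```python
import time
import urllib.error
from typing import Callable, TypeVar

T = TypeVar("T")


def with_backoff(call: Callable[[], T], max_retries: int = 5,
                 base_delay: float = 1.0, max_delay: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` when the server answers HTTP 429 (Too Many Requests).

    ASSUMPTION: the API signals rate limiting with status 429.
    Delays double after each rejection (1 s, 2 s, 4 s, ...) up to `max_delay`.
    Any other error, or a 429 after `max_retries` retries, is re-raised.
    """
    for attempt in range(max_retries + 1):
        try:
            return call()
        except urllib.error.HTTPError as err:
            if err.code != 429 or attempt == max_retries:
                raise
            sleep(min(base_delay * 2 ** attempt, max_delay))
    raise ValueError("max_retries must be non-negative")
```

Passing `sleep` as a parameter keeps the helper testable without real waiting; in production the default `time.sleep` is used, and `call` can wrap any function that sends a request.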

- Why use the Awan LLM API instead of self-hosting LLMs? According to Awan LLM, it costs significantly less than renting GPUs in the cloud or running your own.

- What if I want to use a model that's not here? Contact Awan LLM to request the addition of the model.

Who is Awan LLM for?

Awan LLM is ideal for:

- Developers building AI-powered applications.

- Power users who require high-volume LLM inference.

- Researchers working on cutting-edge AI projects.

- Businesses looking to reduce the cost of LLM usage.

With its unlimited tokens, unrestricted access, and cost-effective pricing, Awan LLM empowers you to unlock the full potential of Large Language Models. Start for free and experience the future of AI inference.

Best Alternative Tools to "Awan LLM"

Awan LLM provides an unlimited, unrestricted, and cost-effective LLM Inference API platform. It allows users and developers to access powerful LLM models without token limitations, ideal for AI agents, roleplay, data processing, and code completion.

Try DeepSeek V3 online for free with no registration. This powerful open-source AI model features 671B parameters, supports commercial use, and offers unlimited access via browser demo or local installation on GitHub.

Instantly run any Llama model from HuggingFace without setting up any servers. Over 11,900 models are available, starting at $10/month for unlimited access.

Meteron AI is an all-in-one AI toolset that handles LLM and generative AI metering, load-balancing, and storage, freeing developers to focus on building AI-powered products.

DeepSeek-v3 is an AI model based on a Mixture-of-Experts (MoE) architecture, providing stable and fast AI solutions with extensive training and support for multiple languages.

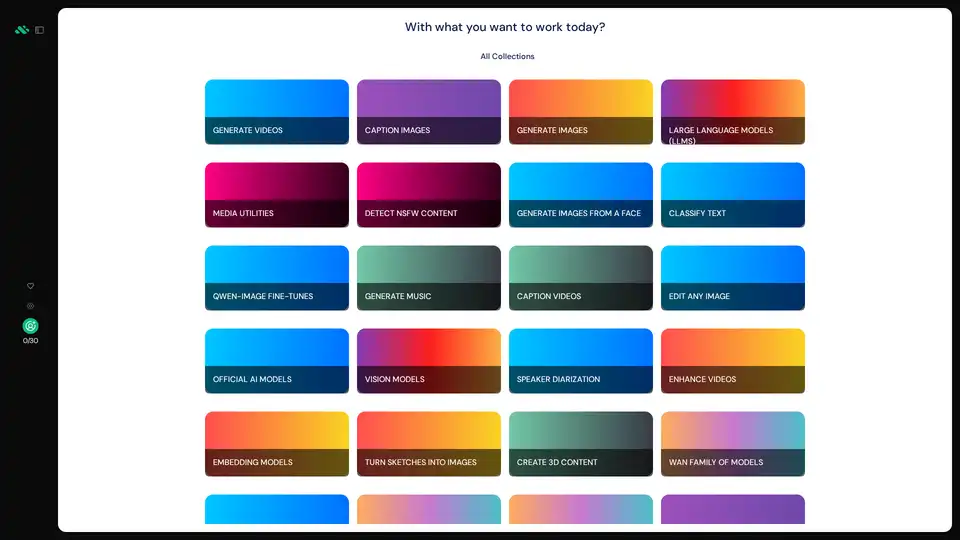

AIverse is an all-in-one platform granting access to thousands of AI models for image/video generation, LLMs, speech-to-text, music creation, and more. Enjoy unlimited use for $20/month with easy integration.

Magic Loops is a no-code platform that combines LLMs and code to build professional AI-native apps in minutes. Automate tasks, create custom tools, and explore community apps without any coding skills.

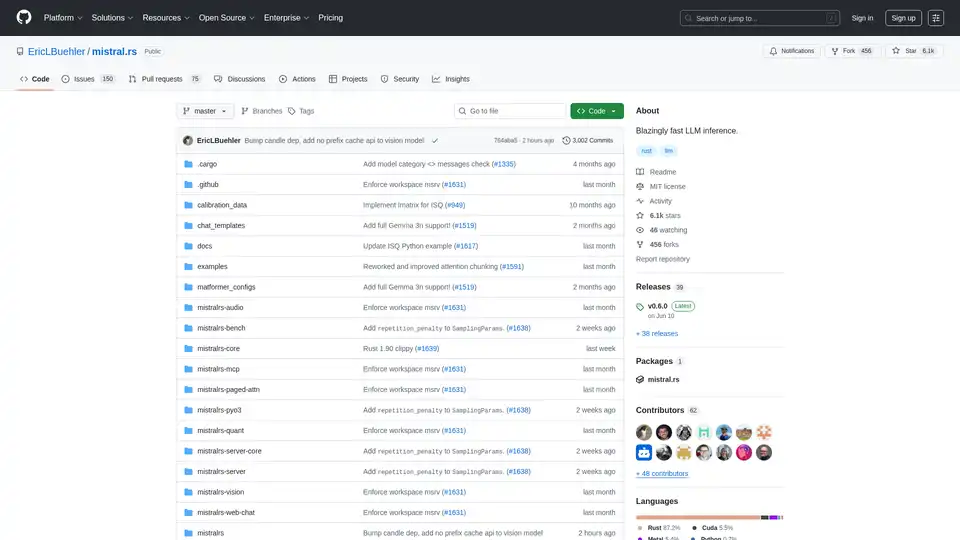

mistral.rs is a blazingly fast LLM inference engine written in Rust, supporting multimodal workflows and quantization. Offers Rust, Python, and OpenAI-compatible HTTP server APIs.

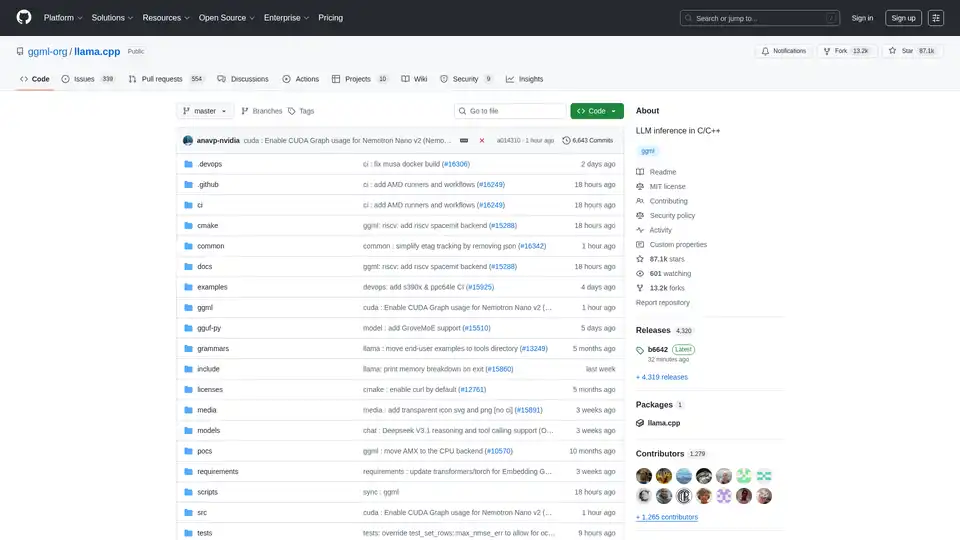

Enable efficient LLM inference with llama.cpp, a C/C++ library optimized for diverse hardware, supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.

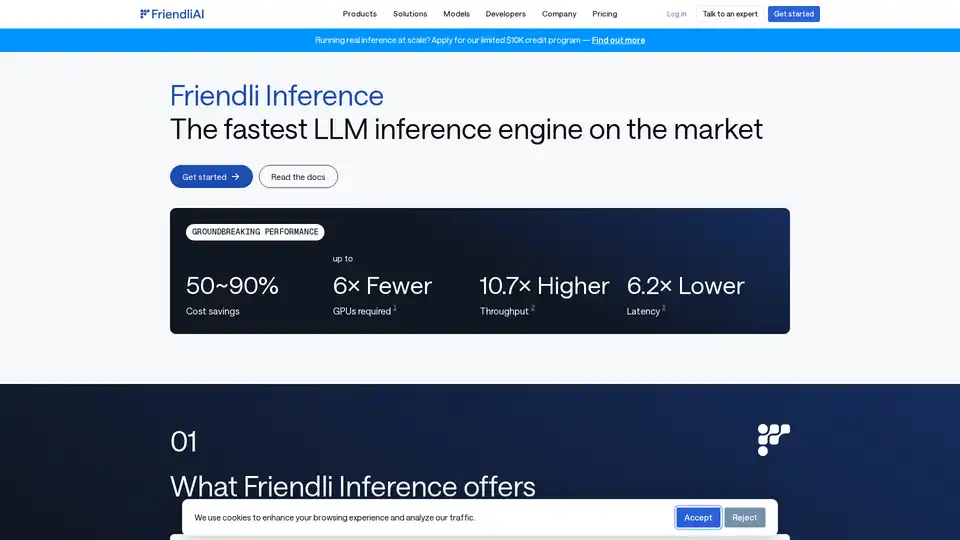

Friendli Inference bills itself as the fastest LLM inference engine, optimized for speed and cost-effectiveness, and claims to cut GPU costs by 50-90% while delivering high throughput and low latency.

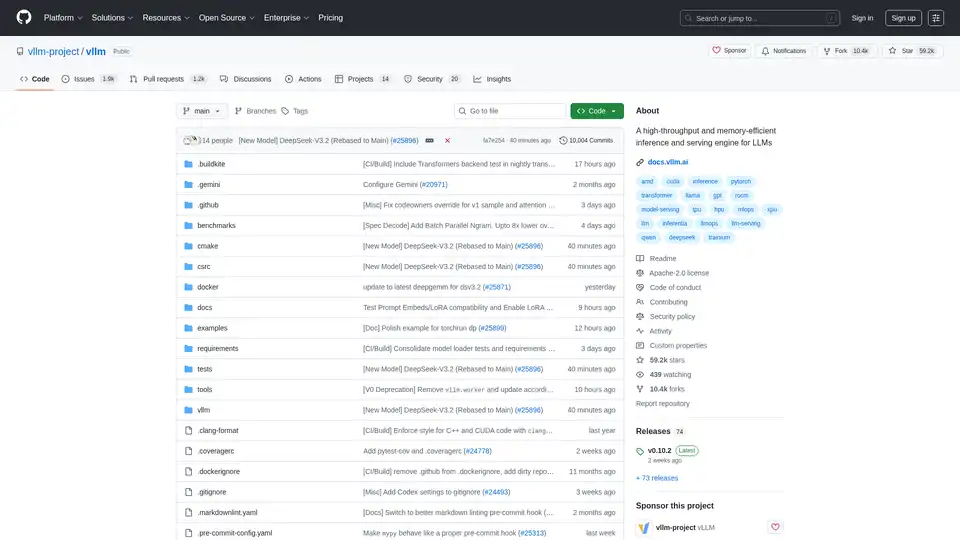

vLLM is a high-throughput and memory-efficient inference and serving engine for LLMs, featuring PagedAttention and continuous batching for optimized performance.

Private LLM is a local AI chatbot for iOS and macOS that works offline, keeping your information completely on-device, safe and private. Enjoy uncensored chat on your iPhone, iPad, and Mac.

Nebius is an AI cloud platform designed to democratize AI infrastructure, offering flexible architecture, tested performance, and long-term value with NVIDIA GPUs and optimized clusters for training and inference.

SiliconFlow is a lightning-fast AI platform for developers: deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.