Stable Diffusion

Overview of Stable Diffusion

What is Stable Diffusion AI?

Stable Diffusion is an open-source AI system that generates images from text descriptions. It was developed by the CompVis group at Ludwig Maximilian University of Munich in collaboration with Runway ML and Stability AI. It uses a latent diffusion model for text-to-image generation, image editing, and related tasks. Unlike proprietary tools, Stable Diffusion's code and pretrained weights are publicly released. The original models use the CreativeML OpenRAIL-M license, which permits free use subject to use-based restrictions. As a result, users can run it on a single consumer GPU on their own devices. This accessibility has brought high-quality image generation within reach of artists, designers, and hobbyists who lack enterprise-level resources.

At its core, Stable Diffusion produces detailed images from short prompts. Its native resolution depends on the version: 512x512 pixels for v1.x, up to 768x768 for v2.x, and 1024x1024 for SDXL. It handles landscapes, portraits, abstract art, and conceptual designs. For newcomers to AI art, it is an accessible entry point that offers broad creative freedom. Users should remember, however, that outputs can reflect biases in its training data.

How Does Stable Diffusion Work?

Stable Diffusion operates on a Latent Diffusion Model (LDM) architecture, which efficiently compresses and processes images in a latent space rather than full pixel space, reducing computational demands. The system comprises three key components:

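- Variational Autoencoder (VAE): The encoder compresses an image into a compact latent representation, turning a 512x512 image into a 64x64x4 latent. This keeps the semantic content while discarding fine pixel-level detail. The decoder maps latents back to pixels.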

- U-Net: This is the denoising backbone, built from ResNet blocks. It predicts the Gaussian noise present in a latent so that the noise can be removed step by step. Cross-attention layers inject the text-prompt embeddings, steering generation toward the user's description.

- Text Encoder: A frozen CLIP text encoder (CLIP ViT-L/14 in v1.x) converts the prompt into embeddings that condition each denoising step.
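The components above can be made concrete with a few lines of plain Python. The sketch makes two simplifying assumptions. First, it uses the VAE layout of the public SD checkpoints: 8x downsampling per side into 4 latent channels. Second, it shows a bare single-head cross-attention with the learned query/key/value projections omitted. It illustrates the mechanisms only and is not Stable Diffusion's actual implementation.

```python
import math


# Assumption: the VAE downsamples 8x per side into 4 latent channels.
def latent_shape(height, width, channels=4, factor=8):
    """Return (channels, height // factor, width // factor) for an RGB image."""
    if height % factor or width % factor:
        raise ValueError("height and width must be divisible by the VAE factor")
    return (channels, height // factor, width // factor)


def compression_ratio(height, width):
    """Pixel values (H x W x 3) per latent value."""
    c, h, w = latent_shape(height, width)
    return (height * width * 3) / (c * h * w)


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d)) V with learned projections omitted.

    queries: one vector per latent position (from the U-Net)
    keys, values: one vector per text token (from the text encoder)
    """
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of token values: text information flows into this position.
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

A 512x512 RGB image holds 786,432 values, while its 4x64x64 latent holds 16,384, a 48x reduction. This is why running the diffusion process in latent space is so much cheaper.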

Text-to-image generation starts from pure random noise in latent space. Image-to-image starts instead from a noised version of an encoded input image. The U-Net then reverses the diffusion step by step, refining the latent until a coherent image emerges. Finally, the VAE decoder converts the denoised latent into a pixel image. Because the expensive iterative work happens on small latents, this pipeline delivers high-fidelity results at modest cost, even for complex prompts involving styles, compositions, or subjects.
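The denoise-then-decode loop described above can be sketched in plain Python. This is a toy built on three assumptions:

- The noise schedule uses the "scaled linear" betas commonly cited for SD v1 (0.00085 to 0.012 over 1,000 steps).
- The sampler is a deterministic DDIM update, one of several samplers that SD front ends offer.
- The trained U-Net is replaced by a hypothetical `oracle` that already knows the clean latent, so the loop should recover it exactly.

Real generation starts from pure noise and relies on the U-Net's learned noise estimate.

```python
import math
import random

# Assumed schedule: "scaled linear" betas, 0.00085 -> 0.012 over 1,000 steps.
T = 1000
BETA_START, BETA_END = 0.00085, 0.012
_lo, _hi = math.sqrt(BETA_START), math.sqrt(BETA_END)
betas = [(_lo + i * (_hi - _lo) / (T - 1)) ** 2 for i in range(T)]

# alphas_cumprod[t] = product of (1 - beta_s) for s <= t ("alpha bar").
alphas_cumprod = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alphas_cumprod.append(running)


def add_noise(x0, eps, t):
    """Forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    a = alphas_cumprod[t]
    return [math.sqrt(a) * x + math.sqrt(1 - a) * e for x, e in zip(x0, eps)]


def ddim_step(x_t, eps_hat, t, t_prev):
    """Deterministic DDIM update (eta = 0) from timestep t to t_prev."""
    a_t = alphas_cumprod[t]
    a_prev = alphas_cumprod[t_prev] if t_prev >= 0 else 1.0  # t_prev < 0: fully clean
    # Estimate the clean latent, then re-noise it to the lower noise level.
    x0_hat = [(x - math.sqrt(1 - a_t) * e) / math.sqrt(a_t) for x, e in zip(x_t, eps_hat)]
    return [math.sqrt(a_prev) * c + math.sqrt(1 - a_prev) * e for c, e in zip(x0_hat, eps_hat)]


def sample(predict_noise, x_start, timesteps):
    """Denoise from the noisiest timestep to the cleanest, one step at a time."""
    x = x_start
    for i, t in enumerate(timesteps):
        t_prev = timesteps[i + 1] if i + 1 < len(timesteps) else -1
        x = ddim_step(x, predict_noise(x, t), t, t_prev)
    return x


# Demo with a toy 4-value "latent".
random.seed(0)
x0 = [0.5, -1.0, 2.0, 0.0]
eps = [random.gauss(0.0, 1.0) for _ in x0]
steps = list(range(999, -1, -50))  # 20 timesteps: 999, 949, ..., 49
x_start = add_noise(x0, eps, steps[0])


def oracle(x_t, t):
    # Hypothetical stand-in for the trained U-Net: it knows x0, so it can
    # recover the exact noise. A real U-Net only estimates it.
    a = alphas_cumprod[t]
    return [(x - math.sqrt(a) * c) / math.sqrt(1 - a) for x, c in zip(x_t, x0)]


print(sample(oracle, x_start, steps))  # approximately x0
```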

Stable Diffusion was trained on subsets of the LAION-5B dataset, which contains billions of image-text pairs scraped from the web. This scale allows it to learn diverse visual concepts. The training data was filtered for quality, resolution, and aesthetics. During training, the text conditioning is occasionally dropped, which enables classifier-free guidance at sampling time and strengthens prompt adherence. However, this web-sourced data skews toward English-language and Western content, which users should consider when generating diverse representations.
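Classifier-free guidance combines two U-Net noise predictions at every sampling step: one with the prompt and one without. The sketch below shows that arithmetic under two assumptions. The default scale of 7.5 matches the default in Hugging Face diffusers' Stable Diffusion pipeline. `maybe_drop_caption` is a hypothetical helper that illustrates training-time caption dropout, which lets one network produce both predictions; the SD v1 model card describes dropping the text conditioning 10% of the time.

```python
import random


def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale=7.5):
    """Guided noise estimate: eps_u + s * (eps_c - eps_u).

    eps_uncond: U-Net prediction for the empty (or negative) prompt.
    eps_cond:   U-Net prediction for the user's prompt.
    guidance_scale: 1.0 gives the plain conditional prediction; larger
    values push the sample harder toward the prompt.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def maybe_drop_caption(caption, p_drop=0.1, rng=random):
    """Training-time sketch: replace the caption with "" with probability
    p_drop, so the same network also learns the unconditional prediction."""
    return "" if rng.random() < p_drop else caption


print(classifier_free_guidance([0.0, 1.0], [1.0, 1.0]))  # [7.5, 1.0]
```

In most pipelines, a negative prompt is encoded in place of the empty prompt. The guidance step then pushes the sample away from the negative prompt as well as toward the positive one.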

Core Features and Capabilities of Stable Diffusion

Stable Diffusion isn't just about basic image creation; it offers a suite of advanced features:

- Text-to-Image Generation: Input a descriptive prompt like "a serene mountain landscape at sunset" and generate original artwork in seconds.

- Image Editing Tools: Use inpainting to fill in or modify parts of an image (e.g., changing backgrounds) and outpainting to expand beyond original borders.

- Image-to-Image Translation: Redraw existing photos with new textual guidance, preserving structure while altering styles or elements.

- ControlNet Integration: Maintain geometric structures, poses, or edges from reference images while applying stylistic changes.

- High-Resolution Support: Stable Diffusion XL 1.0 (SDXL) pairs a 3.5-billion-parameter base model with an optional refiner, for 6.6 billion parameters in the full ensemble. It enables native 1024x1024 outputs, better text rendering in images, and simpler prompting for more realistic results.
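Image-to-image translation reuses the same sampler but skips the noisiest steps. The input image is encoded, noised to an intermediate timestep, and denoised from there. The sketch below shows how a `strength` setting can decide how many steps run. The arithmetic mirrors our understanding of Hugging Face diffusers' img2img pipeline; the function name is hypothetical.

```python
def img2img_schedule(num_inference_steps, strength):
    """Return (first_step_index, steps_run) for an image-to-image run.

    strength near 0 keeps the input image almost unchanged; 1.0 noises it
    completely, which behaves like text-to-image.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    init_steps = min(int(num_inference_steps * strength), num_inference_steps)
    first = max(num_inference_steps - init_steps, 0)
    # The encoded input is noised to the timestep at index `first`,
    # and only the remaining steps are denoised.
    return first, num_inference_steps - first


print(img2img_schedule(50, 0.8))  # (10, 40)
```

With 50 steps and strength 0.8, only the last 40 steps run, so part of the original composition survives.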

LoRAs (Low-Rank Adaptations) fine-tune the model for specific details, such as faces, clothing, or anime styles, without retraining the entire model. Embeddings (textual inversion) learn new prompt tokens that capture a visual style or concept, which keeps outputs consistent. Negative prompts exclude unwanted elements such as distortions or extra limbs.
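A LoRA leaves a base weight matrix W frozen and learns a low-rank update B·A. That update is added back scaled by alpha/rank, the convention from the original LoRA paper. In UIs that support it, the update is also scaled by a user-chosen multiplier. The pure-Python sketch below assumes that convention, and its function names are hypothetical. Real LoRA files patch many specific layers, typically attention projections, rather than a single matrix.

```python
def matmul(a, b):
    """Plain-Python matrix product of an (m x k) and a (k x n) matrix."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(W, A, B, alpha, multiplier=1.0):
    """Merge a LoRA update into a frozen weight: W + multiplier * (alpha / r) * (B @ A).

    W: d_out x d_in base weight (frozen)
    B: d_out x r and A: r x d_in (the only trained parameters)
    multiplier: user-chosen strength, e.g. the number in a prompt tag
    """
    rank = len(A)
    scale = multiplier * alpha / rank
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


def lora_param_count(d_out, d_in, rank):
    """Trainable values in B and A, versus d_out * d_in for full fine-tuning."""
    return rank * (d_out + d_in)


W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [0.0]]  # 2 x 1
A = [[0.0, 2.0]]    # 1 x 2, so rank r = 1
print(apply_lora(W, A, B, alpha=1.0, multiplier=0.5))  # [[1.0, 1.0], [0.0, 1.0]]
print(lora_param_count(768, 768, 4), 768 * 768)        # 6144 589824
```

For a 768x768 layer, a rank-4 LoRA trains 6,144 values instead of 589,824, which is why LoRA files are small. A multiplier of 0 leaves the base model untouched.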

How to Use Stable Diffusion AI

Getting started with Stable Diffusion is straightforward, whether online or offline.

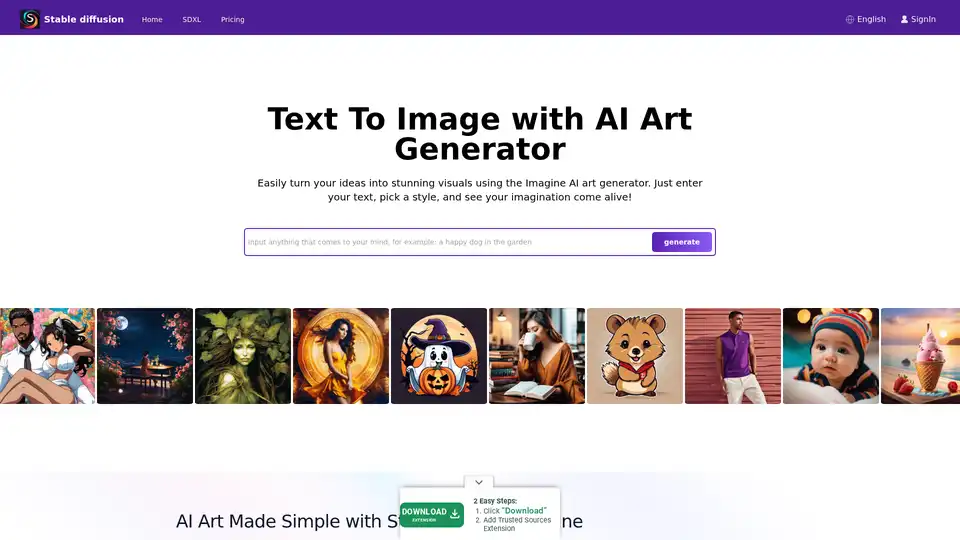

Online Access via Platforms

For beginners, platforms like Stablediffusionai.ai provide a user-friendly web interface:

- Visit stablediffusionai.ai and sign in.

- Enter your text prompt in the input field.

- Select a model and resolution (e.g., SDXL for high resolution), a style, and parameters such as sampling steps.

- Click "Generate" or "Dream" to create images.

- Refine with negative prompts (e.g., "blurry, low quality") and download favorites.

This no-install option is ideal for quick experiments, though it requires an internet connection.

Local Installation and Download

For full control and offline use:

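- Download a local interface such as AUTOMATIC1111's stable-diffusion-webui from GitHub (github.com/AUTOMATIC1111/stable-diffusion-webui), either with git clone or via "Code" > "Download ZIP". The original reference code is at github.com/CompVis/stable-diffusion, but it ships only command-line scripts. Allow roughly 10GB of disk space for the software and at least one model.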

- Install prerequisites: Python 3.10 (the version the web UI targets), Git, and a GPU with at least 4GB of VRAM (NVIDIA recommended).

- If you downloaded the ZIP, extract it. Then place model checkpoints (e.g., from Hugging Face) in the models/Stable-diffusion folder.

- Run webui-user.bat (Windows) or webui.sh (Linux/macOS) to launch the local UI. The first run downloads the remaining dependencies.

- Enter prompts, adjust settings such as the number of sampling steps (20-50 is a common balance between speed and quality), and generate.
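The sampling-steps setting chooses which of the model's 1,000 training timesteps the sampler visits. The sketch assumes "leading" spacing with an offset of 1, modeled on Hugging Face diffusers' DDIM scheduler as configured for SD v1. Other samplers space their steps differently, and the function name is hypothetical.

```python
def leading_timesteps(num_inference_steps, num_train_timesteps=1000, steps_offset=1):
    """Training timesteps visited by the sampler, noisiest first ("leading" spacing)."""
    if not 0 < num_inference_steps <= num_train_timesteps:
        raise ValueError("num_inference_steps must be between 1 and num_train_timesteps")
    step_ratio = num_train_timesteps // num_inference_steps
    timesteps = [i * step_ratio + steps_offset for i in range(num_inference_steps)]
    return timesteps[::-1]


ts = leading_timesteps(20)
print(ts[:3], ts[-1], len(ts))  # [951, 901, 851] 1 20
```

With 20 steps, the sampler jumps 50 timesteps at a time. That is faster but coarser than 50 steps that move 20 timesteps each.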

Front ends such as AUTOMATIC1111's web UI add features like batch processing and support community extensions. Once it is set up and the models are downloaded, everything runs offline, which keeps your prompts and images private.

Training Your Own Stable Diffusion Model

Advanced users can customize Stable Diffusion by fine-tuning it on their own data:

- Gather a dataset of image-text pairs (e.g., for niche styles).

- Prepare data by cleaning and captioning.

- Modify configs for your dataset and hyperparameters (batch size, learning rate).

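- Fine-tune with training scripts, renting cloud GPUs for heavy workloads. Most workflows update only the U-Net, sometimes also the text encoder, and keep the VAE frozen. Lightweight methods such as LoRA or DreamBooth can adapt the model from a small set of images.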

- Evaluate and fine-tune iteratively.

This process demands technical know-how but unlocks tailored models for specific domains like fashion or architecture.
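Whichever components are trained, fine-tuning typically keeps the latent diffusion objective. First, the image x is encoded with the VAE encoder E and noised to a random timestep t. The U-Net ε_θ is then trained to predict the added noise, conditioned on the caption y through the text encoder τ_θ. Written out as a sketch of the standard objective, rather than any particular training script:

```latex
\begin{aligned}
z_0 &= \mathcal{E}(x), \qquad z_t = \sqrt{\bar{\alpha}_t}\, z_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon, \qquad \epsilon \sim \mathcal{N}(0, I) \\
\mathcal{L} &= \mathbb{E}_{x,\,y,\,\epsilon,\,t}\Big[\big\lVert \epsilon - \epsilon_\theta\big(z_t,\, t,\, \tau_\theta(y)\big)\big\rVert_2^2\Big]
\end{aligned}
```

Only the parameters of ε_θ receive gradients, plus those of τ_θ if the text encoder is unfrozen. The VAE encoder stays fixed.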

Stable Diffusion XL: The Upgraded Version

Released by Stability AI in July 2023, SDXL 1.0 builds on the original with a much larger model for finer detail. It needs shorter prompts to produce good results and renders legible text within images more reliably than earlier versions. Online front ends also often ship built-in style presets. For professionals, SDXL is available through dedicated online platforms or local installs and delivers high-resolution outputs for marketing visuals, game assets, or prints. It is a step up for those seeking photorealism or intricate designs, at the cost of more VRAM and longer generation times than v1.5.

Using LoRAs, Embeddings, and Negative Prompts

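- LoRAs: Download specialized LoRA files (e.g., for portraits). In AUTOMATIC1111's web UI, activate them with a prompt tag such as <lora:portrait_style:1.0>, where the number sets the strength. They add detail efficiently without replacing the base model.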

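- Embeddings: Train a textual-inversion embedding on a few example images of a style or concept. Then invoke it by including its name in the prompt for thematic consistency; in AUTOMATIC1111's web UI, this is the embedding file's name.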

- Negative Prompts: List unwanted traits such as "deformed, ugly" to minimize flaws and improve overall output quality.
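To make the LoRA prompt syntax concrete, here is a hypothetical helper that separates `<lora:name:weight>` tags from the rest of the prompt. It is not AUTOMATIC1111's own parser. It handles only the simple forms and treats a missing weight as 1.0, which is an assumption.

```python
import re

# Hypothetical helper, not AUTOMATIC1111's own parser. Handles only the
# simple <lora:name> and <lora:name:weight> forms.
LORA_TAG = re.compile(r"<lora:([^:>]+)(?::(-?[0-9]*\.?[0-9]+))?>")


def split_lora_tags(prompt):
    """Return (prompt_without_tags, [(lora_name, weight), ...])."""
    loras = [(name, float(weight) if weight else 1.0)  # assumed default weight
             for name, weight in LORA_TAG.findall(prompt)]
    cleaned = " ".join(LORA_TAG.sub("", prompt).split())  # collapse leftover spaces
    return cleaned, loras


print(split_lora_tags("a portrait photo, soft light <lora:portrait_style:0.8>"))
# ('a portrait photo, soft light', [('portrait_style', 0.8)])
```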

Practical Applications and Use Cases

Stable Diffusion shines in various scenarios:

- Artists and Designers: Prototype concepts, generate references, or experiment with styles for digital art, illustrations, or UI/UX mockups.

- Marketing and Media: Create custom visuals for ads, social media, or content without stock photos—ideal for e-commerce product renders.

- Education and Hobbyists: Teach AI concepts or create personalized art as a hobby, like family portraits in fantasy settings.

- Game Development: Asset creation for characters, environments, or textures, especially with ControlNet for pose control.

Its offline capability suits remote creators, while API access via Stability AI's DreamStudio or Hugging Face integrates it into existing workflows.

Who is Stable Diffusion For?

This tool targets creative users, from novice digital artists to experienced developers. Beginners appreciate the intuitive interfaces, while experts value customization options like fine-tuning. It suits those who prioritize open-source values and local privacy over cloud dependencies. However, it is less suited to non-creative tasks or to users without a basic technical setup.

Limitations and Best Practices

Despite its strengths, Stable Diffusion has limitations:

- Biases: Outputs may favor Western aesthetics; diverse prompts and fine-tuning help mitigate.

- Anatomical Challenges: Hands and faces are often rendered distorted; negative prompts, LoRAs, or inpainting can help.

- Resource Needs: Local runs require decent hardware; cloud alternatives like Stablediffusionai.ai bridge gaps.

Always review for ethical issues, such as copyright in training data. Communities on Civitai or Reddit offer models and tips to overcome flaws.

Why Choose Stable Diffusion?

In a crowded AI landscape, Stable Diffusion's open-source nature fosters innovation, and the community releases frequent updates. Compared to closed tools like DALL-E, a local install imposes no generation quotas, and the model license claims no rights over the images you generate. For high-resolution needs, SDXL delivers professional-level quality affordably. Whether sparking ideas or finalizing projects, it lets users blend human ingenuity with AI efficiency.

Pricing and Access

Core Stable Diffusion is free to download and use. Platforms like Stablediffusionai.ai may offer free tiers with paid upgrades for faster generation or advanced features. Stability AI's DreamStudio uses credits that are inexpensive to start with and scale with heavier use. Local setups avoid ongoing service fees, making them economical for sustained creative work.

In essence, Stable Diffusion redefines AI art generation by putting power in users' hands. Dive into its ecosystem via GitHub or online demos, and unlock endless possibilities for visual storytelling.
