Denvr Dataworks

Overview of Denvr Dataworks

Denvr Dataworks: High-Performance AI Services

Denvr Dataworks offers high-performance computing services designed for developers and operators working with artificial intelligence. It provides on-demand and dedicated access to recent GPU architectures, optimized for both AI training and inference. Think of it as a GPU cloud service tailored for AI workloads.

What is Denvr Dataworks?

Denvr Dataworks is a vertically integrated provider of software, hardware, and data centers, with facilities in both Canada and the USA. It focuses on delivering AI compute, AI inference, and AI platform solutions to accelerate AI development and deployment.

How does Denvr Dataworks work?

Denvr Dataworks offers several key AI services:

- AI Compute: Provides on-demand or reserved AI compute resources across virtualized and bare metal environments. This includes support for powerful GPUs like NVIDIA H100 and A100, as well as Intel Gaudi HPUs.

- AI Inference: Runs foundation models or custom models with high-speed, low-cost inference. Fully managed API endpoints are available for easy deployment.

- AI Platform: Facilitates the deployment of a private AI factory, providing pre-integrated AI compute, networking, and storage.

Key Features and Benefits:

- High-Performance GPU Clusters: Scale to clusters of up to 1,024 GPUs built on NVIDIA HGX H100, A100, and Intel Gaudi AI processors. Benefit from high-speed InfiniBand or RoCE v2 interconnects at up to 3.2 Tbps.

- Orchestration Layer: Utilize Intel Xeon CPU nodes for job scheduling, system operations, and platform services, fully compatible with Kubernetes, SLURM, or custom orchestration stacks.

- Fast, Scalable Filesystems: Support AI workloads with petabyte-scale network filesystems providing over 10 GB/s bandwidth, built on NVMe SSD.

- Developer Platform: Provides Jupyter Notebooks for data exploration and rapid development. Includes pre-loaded ML machine images with GPU drivers, Docker, and container toolkits. APIs and CLI tools support automation, with language bindings for Python and Terraform. Comprehensive documentation is available with user guides and FAQs.
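
The bandwidth figures in the list above use different units (terabits per second for the interconnect, gigabytes per second for the filesystem), so a quick conversion can help put them side by side. The following is a minimal sketch using decimal units; the function names and the 1 TB dataset size are illustrative assumptions, not figures or tooling from Denvr Dataworks, and the results are ideal upper bounds that ignore protocol overhead and contention.

```python
# Back-of-the-envelope conversions for the quoted bandwidth figures.
# Decimal units throughout: 1 Tbps = 1e12 bits/s, 1 GB = 1e9 bytes.

INTERCONNECT_TBPS = 3.2      # "up to 3.2 Tbps" from the feature list
FILESYSTEM_GB_PER_S = 10.0   # "over 10 GB/s" from the feature list


def tbps_to_gigabytes_per_second(tbps: float) -> float:
    """Convert terabits per second to gigabytes per second."""
    bits_per_second = tbps * 1e12
    return bits_per_second / 8 / 1e9


def seconds_to_transfer(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal transfer time, ignoring protocol overhead and contention."""
    return size_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    interconnect_gb_s = tbps_to_gigabytes_per_second(INTERCONNECT_TBPS)
    print(f"Interconnect peak: {interconnect_gb_s:.0f} GB/s")

    dataset_gb = 1_000.0  # hypothetical 1 TB dataset, for illustration only
    read_time_s = seconds_to_transfer(dataset_gb, FILESYSTEM_GB_PER_S)
    print(f"Ideal 1 TB read at 10 GB/s: {read_time_s:.0f} s")
```

Under these assumptions, the quoted interconnect peak works out to roughly 400 GB/s, and a 1 TB dataset would take on the order of 100 seconds to stream from the filesystem at the quoted 10 GB/s floor. Real throughput depends on workload, file layout, and cluster configuration.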

Startup Program

Denvr Dataworks offers the AI Ascend startup program for early-stage companies. The program provides up to $500,000 in AI compute credits, and new applicants receive an initial $1,000 credit so they can begin immediately. It is designed to help AI startups bring their technology to market faster by providing access to advanced GPUs optimized for AI workloads.

Infrastructure Highlights

Denvr Dataworks' full-stack AI infrastructure combines high-performance compute clusters, ultra-fast networking, and networked storage. This infrastructure is engineered to accelerate training, inference, and production workloads at any scale.

Technical Support

Denvr Dataworks provides comprehensive technical support:

- High-Touch Technical Support: Access expert staff to support developers and operators.

- Global Help Desk: Contact the support team via web ticketing or email, with options for Slack or Teams support for enterprise tiers.

- ML Experts: Collaborate directly with Denvr’s team of Solution Engineers for platform integration, best practices, and troubleshooting.

- Real-time Monitoring: The support team is notified of anomalies in real time and often resolves problems before they impact workflows.

- Security & Compliance: Support processes follow industry best practices with SOC 2-compliant controls.

Partnerships

Denvr Dataworks has partnerships with key technology providers:

- NVIDIA Cloud Partner: Offers optimized AI infrastructure designed for production workloads, supporting everything from foundation model training to generative AI and next-gen AI agents.

- Intel Partner Alliance: Works with Intel to bring the latest processors and HPUs to market faster, providing cost-efficient Xeon and Gaudi systems.

Why is Denvr Dataworks important?

Denvr Dataworks matters because it supplies the compute, networking, and storage infrastructure that organizations need to develop and deploy AI solutions. Its vertically integrated approach and focus on high performance are intended to shorten the path from model development to production.

Where can I use Denvr Dataworks?

Denvr Dataworks can be used for a wide range of AI applications, including:

- Model training

- AI inference

- Data science

- Generative AI

How to get started with Denvr Dataworks?

To get started with Denvr Dataworks, visit the company's website and explore its products and services. It offers a startup program with AI compute credits as well as a free trial. You can also contact the sales team to discuss your specific requirements.

Best Alternative Tools to "Denvr Dataworks"

Runpod is an all-in-one AI cloud platform that simplifies building and deploying AI models. It offers on-demand GPU resources, serverless autoscaling, and enterprise-grade uptime for training, fine-tuning, and deploying models.

Rent high-performance GPUs at low cost with Vast.ai. Instantly deploy GPU rentals for AI, machine learning, deep learning, and rendering. Flexible pricing & fast setup.

Massed Compute offers on-demand GPU and CPU cloud computing infrastructure for AI, machine learning, and data analysis. Access high-performance NVIDIA GPUs with flexible, affordable plans.

Deep Infra is a platform for low-cost, scalable AI inference with 100+ ML models like DeepSeek-V3.2, Qwen, and OCR tools. Offers developer-friendly APIs, GPU rentals, zero data retention, and US-based secure infrastructure for production AI workloads.

Modal: Serverless platform for AI and data teams. Run CPU, GPU, and data-intensive compute at scale with your own code.

Anyscale, powered by Ray, is a platform for running and scaling all ML and AI workloads on any cloud or on-premises. Build, debug, and deploy AI applications with ease and efficiency.

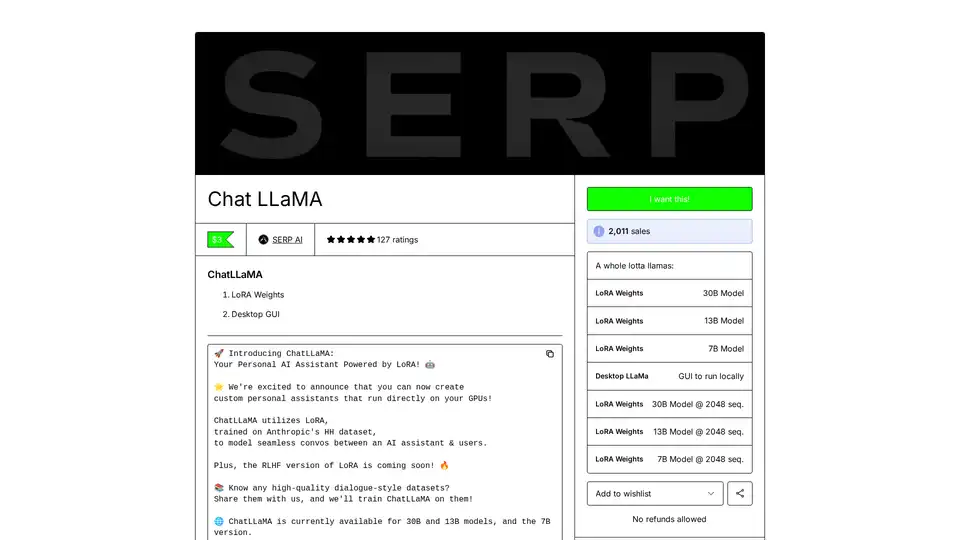

ChatLLaMA is a LoRA-trained AI assistant based on LLaMA models, enabling custom personal conversations on your local GPU. Features desktop GUI, trained on Anthropic's HH dataset, available for 7B, 13B, and 30B models.

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. Features H100 GPUs, per-second billing, and Python execution.

Lightning AI is an all-in-one cloud workspace designed to build, deploy, and train AI agents, data & AI apps. Get model APIs, GPU training, and multi-cloud deployment in one subscription.

Juice enables GPU-over-IP, allowing you to network-attach and pool your GPUs with software for AI and graphics workloads.

Predibase is a developer platform for fine-tuning and serving open-source LLMs. Achieve unmatched accuracy and speed with end-to-end training and serving infrastructure, featuring reinforcement fine-tuning.

DeepSeek V3 can be tried online for free with no registration. This open-source AI model features 671B parameters, supports commercial use, and is accessible via a browser demo or local installation from GitHub.

Phala Cloud offers a trustless, open-source cloud infrastructure for deploying AI agents and Web3 applications, powered by TEE. It ensures privacy, scalability, and is governed by code.