FramePack

Overview of FramePack

FramePack: Revolutionizing Video Generation on Consumer GPUs

What is FramePack? FramePack is a groundbreaking, open-source video diffusion technology designed to run high-quality video generation on consumer-grade GPUs with as little as 6GB of VRAM. Its frame context packing approach keeps memory use low, making AI video creation more accessible than ever before.

Key Features and Benefits:

- Low VRAM Requirements: Generate high-quality videos on laptops and mid-range systems with just 6GB of VRAM.

- Anti-Drifting Technology: Maintain consistent quality over long video sequences using FramePack's bi-directional sampling approach.

- Local Execution: Generate videos directly on your hardware, eliminating the need for cloud processing or expensive GPU rentals.
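The anti-drifting idea can be pictured as a generation order. The sketch below assumes one variant of bi-directional sampling in which the clip is produced from its last section back toward its first, and every step is conditioned on the user's input image so it always sees an undegraded reference frame. The function name and the "anchor"/"sectionN" labels are hypothetical illustrations, not FramePack's actual sampler.

```python
from typing import List, Tuple


def inverted_section_order(num_sections: int) -> List[Tuple[int, List[str]]]:
    """Hypothetical anti-drifting schedule (illustration only, not FramePack's sampler).

    Sections are generated from the last one back to the first. Each step is
    conditioned on the user's input image ("anchor") plus the later sections
    that are already finished, so every step sees an undegraded reference frame.
    """
    schedule: List[Tuple[int, List[str]]] = []
    finished: List[int] = []  # section indices already generated, kept in time order
    for section in reversed(range(num_sections)):
        context = ["anchor"] + [f"section{s}" for s in finished]
        schedule.append((section, context))
        finished.insert(0, section)  # the new section is earlier in time than all finished ones
    return schedule


for section, context in inverted_section_order(3):
    print(f"generate section {section} using {context}")
```

In this ordering the end of the clip is produced before the beginning, and the input image appears in every step's context rather than only in the first one.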

How FramePack Works

FramePack offers an intuitive workflow for generating high-quality video content:

- Installation and Setup: Clone the FramePack repository from GitHub and set up your Python environment.

- Define Your Initial Frame: Start with an image or generate one from a text prompt to begin your video sequence.

- Create Motion Prompts: Describe the desired movement and action in natural language to guide the video generation.

- Generate and Review: Watch as FramePack generates your video frame by frame with impressive temporal consistency.

Core Technologies Explained

- Frame Context Packing: Efficiently compress and utilize frame context information to enable processing on consumer hardware. This is key to FramePack's low VRAM requirement.

- Local Video Generation: Generate videos directly on your device without sending data to external servers, ensuring privacy and control.

- Bi-Directional Sampling: Maintain consistency across long video sequences with anti-drifting technology. This prevents the video quality from degrading over time.

- Optimized Performance: Generate frames at approximately 1.5 seconds per frame on high-end GPUs such as the RTX 4090 with TeaCache optimization. On lower-end hardware, generation is slower but still usable for prototyping.

- Open Source Access: Benefit from a fully open-source implementation that allows for customization and community contributions. This fosters innovation and ensures long-term support.

- Multimodal Input: Use both text prompts and image inputs to guide your video generation, providing flexibility and control over the creative process.
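Why packing keeps memory bounded can be checked with simple arithmetic. The sketch below assumes a simplified scheme in which each progressively older frame is compressed by a further factor of 2, and an assumed budget of 1,536 tokens per full-resolution frame; both numbers are illustrative assumptions, and FramePack's actual compression schedule may differ.

```python
def packed_context_length(
    num_past_frames: int,
    tokens_per_frame: int = 1536,  # assumed token count for one full-resolution frame
    ratio: int = 2,                # assumed extra compression per step back in time (>= 2)
) -> int:
    """Total context tokens when each older frame is compressed by another factor of `ratio`.

    The newest frame keeps all of its tokens, the next keeps 1/ratio, the next
    1/ratio**2, and so on. Very old frames round down to zero tokens, so the
    total stops growing and stays below tokens_per_frame * ratio / (ratio - 1).
    """
    total = 0
    for age in range(num_past_frames):
        total += tokens_per_frame // (ratio ** age)
    return total


def naive_context_length(num_past_frames: int, tokens_per_frame: int = 1536) -> int:
    """Context tokens if every past frame were kept at full resolution."""
    return num_past_frames * tokens_per_frame


for n in (1, 10, 100):
    print(n, naive_context_length(n), packed_context_length(n))
```

With these assumptions, 100 past frames would need 153,600 tokens if kept at full resolution, while the packed context stays at 3,070 tokens, no matter how long the video gets.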

Why is FramePack Important?

FramePack democratizes AI video generation by making it accessible to users with limited hardware resources. The ability to run video generation locally is a significant advantage for privacy-conscious users and those with limited internet bandwidth. The open-source nature of FramePack encourages community collaboration and continuous improvement.

User Testimonials

- Emily Johnson, Independent Animator: "FramePack has transformed how I create animations. Being able to generate high-quality video on my laptop means I can work from anywhere, and the results are impressive enough for client presentations."

- Michael Rodriguez, VFX Specialist: "As someone who works with multiple creative teams, FramePack has been a game-changer. It provides a fast, efficient way to prototype video concepts without waiting for render farms, saving us countless hours in production."

- Sarah Chen, AI Researcher: "This tool has transformed how we approach video generation research. FramePack's innovative frame context packing allows us to experiment with longer sequences on standard lab equipment, dramatically accelerating our research cycle."

FAQ

- What exactly is FramePack and how does it work? FramePack is an open-source video diffusion technology that enables next-frame prediction on consumer GPUs. It works by efficiently packing frame context information and using a constant-length input format, allowing it to generate high-quality videos frame-by-frame even on hardware with limited VRAM.

- What are the system requirements for FramePack? FramePack requires an NVIDIA GPU with at least 6GB VRAM (like RTX 3060), CUDA support, PyTorch 2.6+, and runs on Windows or Linux. For optimal performance, an RTX 30 or 40 series GPU with 8GB+ VRAM is recommended.

- How fast can FramePack generate videos? On high-end GPUs like the RTX 4090, FramePack can generate frames at approximately 1.5 seconds per frame with TeaCache optimization. On laptops with 6GB VRAM, generation is 4-8x slower but still usable for prototyping.

- Is FramePack free to use? FramePack offers a free open-source version with full functionality. Premium tiers may provide additional features, priority support, and extended capabilities for professional users and teams.

- What is 'frame context packing' in FramePack? Frame context packing is FramePack's core innovation: it compresses information from previous frames into a constant-length input format. This lets the model maintain temporal consistency without memory use growing as the video lengthens.

- How does FramePack compare to other video generation tools? Unlike cloud-based solutions, FramePack runs entirely locally on your hardware. While some cloud services may offer faster generation, FramePack provides superior privacy, no usage limits, and the ability to generate longer sequences with consistent quality.
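The speed figures above translate into rough wall-clock estimates. The sketch below multiplies frame count by the quoted 1.5 seconds per frame and the quoted 4-8x laptop slowdown; the 5-second, 30 fps clip length is an assumption chosen only for illustration, and real timings will vary with settings and hardware.

```python
def estimate_generation_seconds(
    num_frames: int,
    seconds_per_frame: float = 1.5,  # quoted RTX 4090 figure with TeaCache
    slowdown: float = 1.0,           # hardware multiplier, e.g. 4-8 for 6GB laptops
) -> float:
    """Rough wall-clock estimate: frames x seconds per frame x hardware slowdown."""
    return num_frames * seconds_per_frame * slowdown


# Assumption: a 5-second clip at 30 fps.
frames = 5 * 30  # 150 frames

desktop = estimate_generation_seconds(frames)
laptop_best = estimate_generation_seconds(frames, slowdown=4.0)
laptop_worst = estimate_generation_seconds(frames, slowdown=8.0)

print(f"RTX 4090-class: ~{desktop / 60:.2f} min")                          # ~3.75 min
print(f"6GB laptop: ~{laptop_best / 60:.0f}-{laptop_worst / 60:.0f} min")  # ~15-30 min
```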

Conclusion

FramePack represents a significant step forward in AI video generation. Its low VRAM requirements, open-source nature, and frame context packing technology make it a valuable tool for both hobbyists and professionals. Whether you're creating animations, prototyping video concepts, or conducting research, FramePack offers an efficient and accessible way to generate high-quality videos on consumer GPUs, making it a strong contender for local video generation.

Best Alternative Tools to "FramePack"

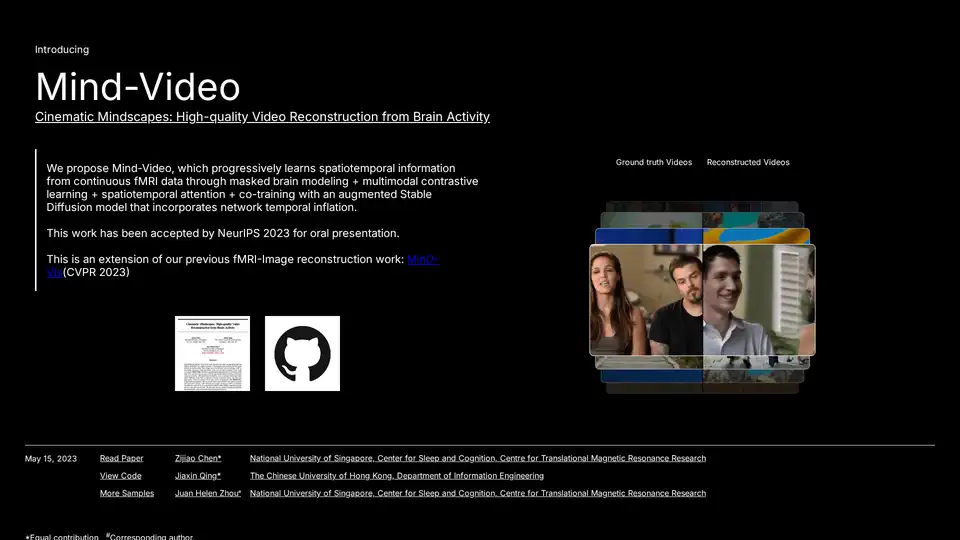

Mind-Video uses AI to reconstruct videos from brain activity captured via fMRI. This innovative tool combines masked brain modeling, multimodal contrastive learning, and spatiotemporal attention to generate high-quality video.

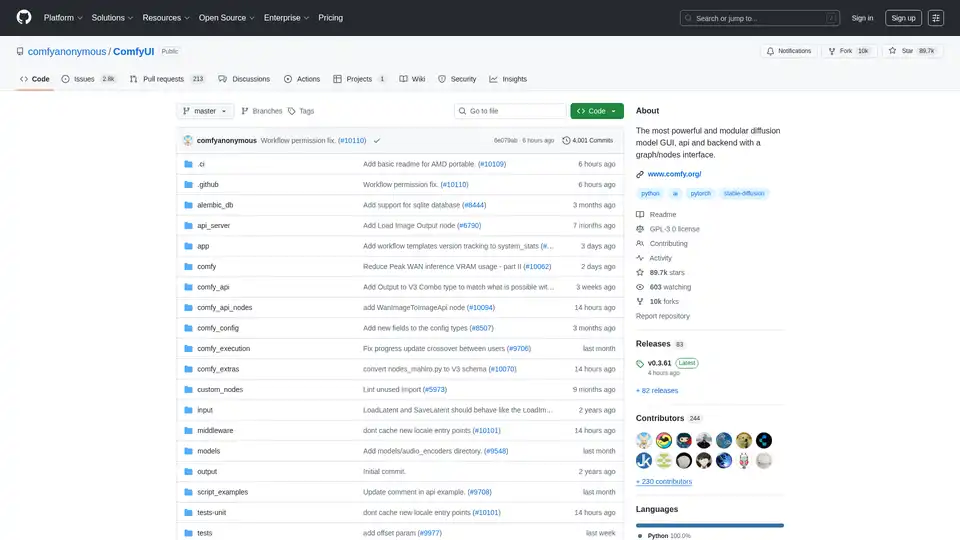

ComfyUI is a powerful, modular, visual AI engine for designing and executing advanced Stable Diffusion pipelines using a graph/nodes interface. Available on Windows, Linux, and macOS.

Lumiere, by Google Research, is a space-time diffusion model for video generation. It supports text-to-video, image-to-video, video stylization, cinemagraphs, and inpainting, generating realistic and coherent motion.

Discover NightCafe, a free AI art generator with top models like Flux and DALL-E 3, a vibrant community, and daily challenges for endless creativity.

Explore AI Library, the comprehensive catalog of over 2150 neural networks and AI tools for generative content creation. Discover top AI art models, tools for text-to-image, video generation, and more to boost your creative projects.

Hypergro is an AI creative partner that turns ideas into high-performing image and video ads for Meta, YouTube, and Instagram in minutes. Ideal for marketers seeking time-saving, cost-effective ad creation with easy customization and multi-language support.

Prodia turns complex AI infrastructure into fast, scalable, developer-friendly, production-ready workflows.

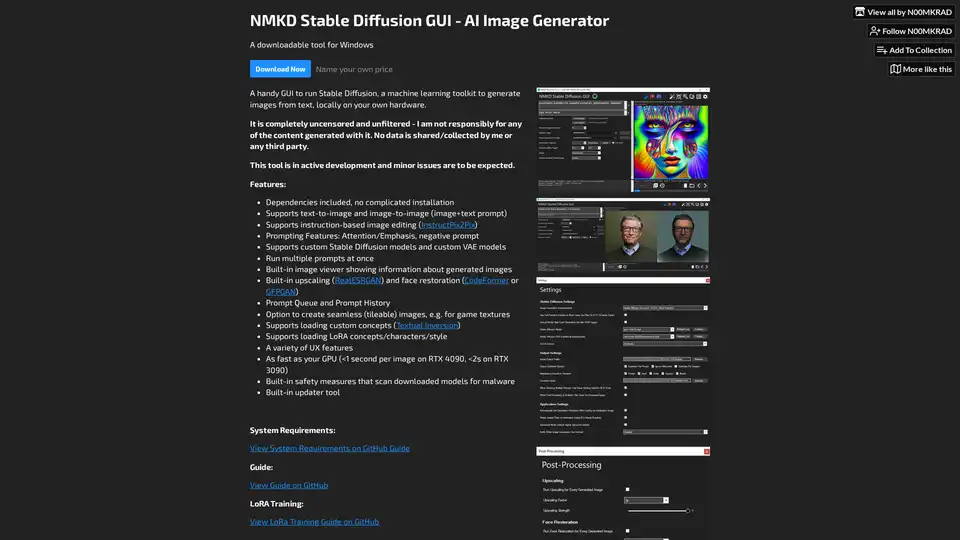

NMKD Stable Diffusion GUI is a free, open-source tool for generating AI images locally on your GPU using Stable Diffusion. It supports text-to-image, image editing, upscaling, and LoRA models with no censorship or data collection.

Use Pollo AI, a free, all-in-one AI image and video generator, to create images and videos from text prompts, images, or videos. Turn your ideas into high-resolution, high-quality images and videos.

Discover Stock Imagery AI, the easiest free tool to generate hyper-realistic images, motion videos, text-to-video content, and upscale photos. Perfect for creators needing quick, high-quality stock visuals for blogs, social media, and more.

Discover Wan 2.2 AI, a cutting-edge platform for text-to-video and image-to-video generation with cinema-grade controls, professional motion, and 720p resolution. Ideal for creators, marketers, and producers seeking high-quality AI video tools.

PayPerQ (PPQ.AI) offers instant access to leading AI models like GPT-4o using Bitcoin and crypto. Pay per query with no subscriptions or registration required, supporting text, image, and video generation.

Wan 2.2 is Alibaba's leading AI video generation model, now open-source. It offers cinematic visual control, supports text-to-video and image-to-video generation, and provides efficient high-definition hybrid text-image-to-video (TI2V) generation.

Gen-Image is an AI image generator that uses Stable Diffusion 3.5 and other models to generate images instantly.