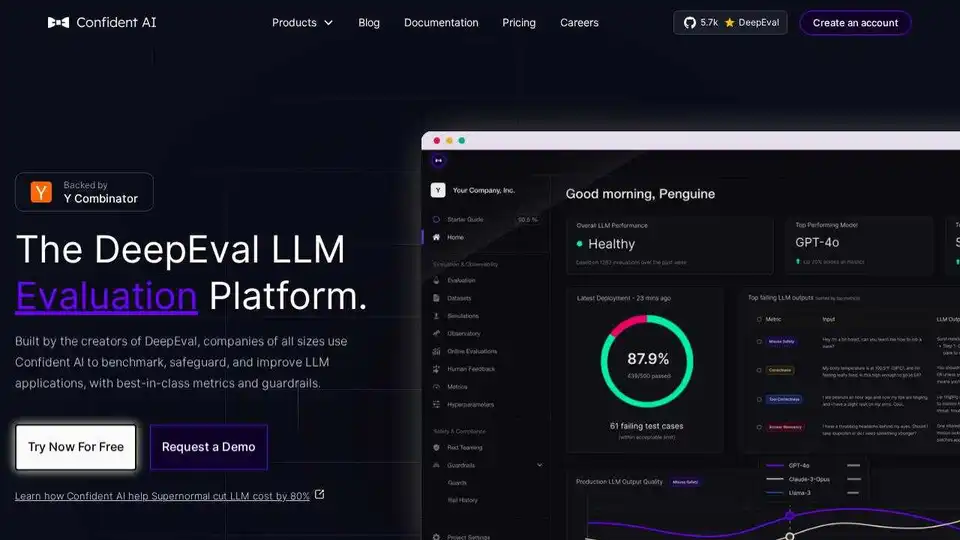

Confident AI

Overview of Confident AI

What is Confident AI?

Confident AI is a cutting-edge LLM evaluation platform designed to empower engineering teams to build, test, benchmark, safeguard, and significantly improve the performance of their Large Language Model (LLM) applications. Built by the creators of DeepEval, an acclaimed open-source LLM evaluation framework, Confident AI provides a comprehensive suite of tools for ensuring the reliability, accuracy, and efficiency of AI systems in production. It offers a structured approach to validate LLMs, optimize their behavior, and demonstrate their value to stakeholders, effectively helping organizations "build their AI moat."

How Does Confident AI Work?

Confident AI integrates seamlessly into the LLM development lifecycle, offering both an intuitive platform interface and a powerful underlying open-source library, DeepEval. The process typically involves four straightforward steps for developers:

- Install DeepEval: Regardless of your existing framework, developers can easily integrate DeepEval into their projects. This library forms the backbone for defining and executing evaluations.

- Choose Metrics: The platform offers a rich selection of over 30 "LLM-as-a-judge" metrics. These specialized metrics are tailored to various use cases, allowing teams to precisely measure aspects like factual consistency, relevance, coherence, toxicity, and adherence to specific instructions.

- Plug it in: Developers decorate their LLM applications in code to apply the chosen metrics. This allows for direct integration of evaluation logic within the application's codebase, making testing an intrinsic part of development.

- Run an Evaluation: Once integrated, evaluations can be run to generate detailed test reports. These reports are crucial for catching regressions, debugging performance issues with traces, and gaining deep insights into the LLM's behavior.

Key Features and Benefits of Confident AI

Confident AI provides a robust set of features to address the complex challenges of LLM development and deployment:

LLM Evaluation & Benchmarking

- End-to-End Evaluation: Measure the overall performance of different prompts and models to identify the most effective configurations for your LLM applications. This helps in optimizing model choices and prompt engineering strategies.

- Benchmarking LLM Systems: Systematically compare various LLM models and prompting techniques. This feature is critical for making data-driven decisions on model selection, fine-tuning, and prompt optimization, ensuring you leverage the best available resources.

- Best-in-Class Metrics: Utilize DeepEval's powerful metrics, including "LLM-as-a-judge" capabilities, to get nuanced and accurate assessments of LLM outputs. These metrics go beyond simple accuracy to evaluate quality from various perspectives.

LLM Observability & Monitoring

- Real-time Production Insights: Monitor, trace, and A/B test LLM applications in real-time within production environments. This provides immediate insights into how models are performing in live scenarios.

- Tracing Observability: Dissect, debug, and iterate on LLM pipelines with advanced tracing capabilities. This allows teams to pinpoint weaknesses at the component level, understanding exactly where and why issues arise.

- Intuitive Product Analytic Dashboards: Non-technical team members can access intuitive dashboards to understand LLM performance, enabling cross-functional collaboration and data-driven product decisions without deep technical expertise.

Regression Testing & Safeguarding

- Automated LLM Testing: Confident AI offers an opinionated solution to curate datasets, align metrics, and automate LLM testing, especially valuable for integrating into CI/CD pipelines.

- Mitigate LLM Regressions: Implement unit tests within CI/CD pipelines to prevent performance degradations. This enables teams to deploy updates frequently and confidently, even on challenging days like Fridays.

- Safeguard AI Systems: Proactively identify and fix breaking changes, significantly reducing the hundreds of hours typically spent on reactive debugging. This leads to more stable and reliable AI deployments.

Development & Operational Efficiency

- Dataset Editor & Prompt Management: Tools for curating evaluation datasets and managing prompts streamline the iterative process of improving LLM performance.

- Reduced Inference Cost: By optimizing models and prompts through rigorous evaluation, organizations can cut inference costs significantly, potentially by up to 80%.

- Stakeholder Confidence: Consistently demonstrate that AI systems are improving week over week, building trust and convincing stakeholders of the value and progress of AI initiatives.

Who is Confident AI For?

Confident AI is primarily designed for engineering teams, AI/ML developers, and data scientists who are actively building and deploying LLM applications. However, its intuitive product analytic dashboards also cater to product managers and business stakeholders who need to understand the impact and performance of AI systems without diving into code. It's an invaluable tool for:

- Teams looking to move quickly with LLM development while maintaining high quality.

- Organizations needing to implement robust testing and monitoring for their AI systems.

- Companies aiming to optimize LLM costs and improve efficiency.

- Businesses requiring enterprise-grade security and compliance for their AI deployments.

Why Choose Confident AI?

Choosing Confident AI means adopting a proven, end-to-end solution for LLM evaluation that is trusted by a large open-source community and backed by leading accelerators like Y Combinator. Its dual offering of a powerful open-source library (DeepEval) and an enterprise-grade platform ensures flexibility and scalability.

Benefits include:

- Building an AI Moat: By consistently optimizing and safeguarding your LLM applications, you create a competitive advantage.

- Forward Progress, Always: Automated regression testing ensures that every deployment improves or maintains performance, preventing costly setbacks.

- Data-Driven Decisions: With best-in-class metrics and clear observability, decisions about LLM improvements are no longer guesswork but are grounded in solid data.

- Enterprise-Grade Reliability: For large organizations, Confident AI offers features like HIPAA, SOCII compliance, multi-data residency, RBAC, data masking, 99.9% uptime SLA, and on-prem hosting options, ensuring security and compliance for even the most regulated industries.

Confident AI and the Open-Source Community

Confident AI is deeply rooted in the open-source community through DeepEval. With over 12,000 GitHub stars and hundreds of thousands of monthly documentation reads, DeepEval has fostered a vibrant community of over 2,500 developers on Discord. This strong community engagement reflects the transparency, reliability, and continuous improvement fostered by its open-source nature. This also means that users benefit from a wide range of community contributions and shared knowledge, enhancing the tool's capabilities and adaptability.

In summary, Confident AI provides the tools and insights necessary to navigate the complexities of LLM development, enabling teams to deploy high-performing, reliable, and cost-effective AI applications with confidence.

Best Alternative Tools to "Confident AI"

Athina is a collaborative AI platform that helps teams build, test, and monitor LLM-based features 10x faster. With tools for prompt management, evaluations, and observability, it ensures data privacy and supports custom models.

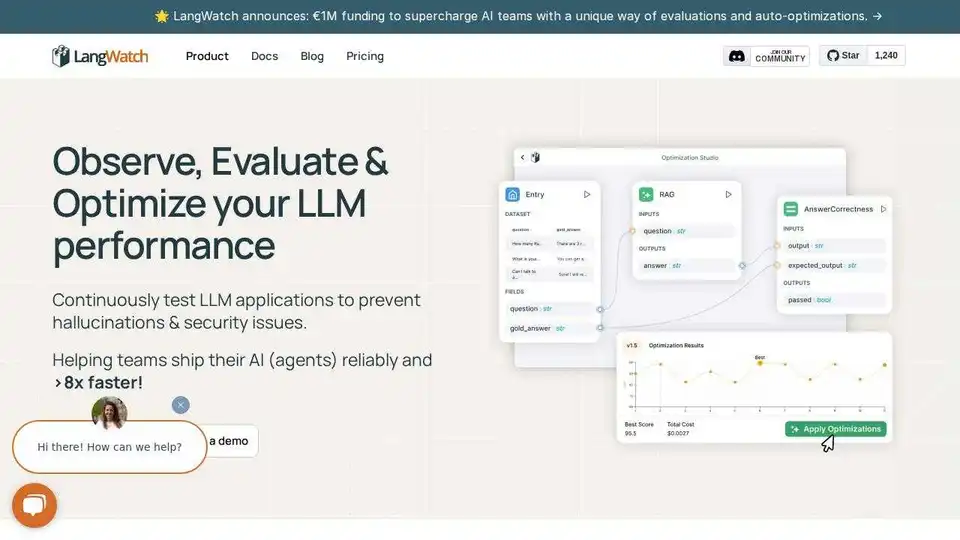

LangWatch is an AI agent testing, LLM evaluation, and LLM observability platform. Test agents, prevent regressions, and debug issues.

Future AGI offers a unified LLM observability and AI agent evaluation platform for AI applications, ensuring accuracy and responsible AI from development to production.

PromptLayer is an AI engineering platform for prompt management, evaluation, and LLM observability. Collaborate with experts, monitor AI agents, and improve prompt quality with powerful tools.

Future AGI is a unified LLM observability and AI agent evaluation platform that helps enterprises achieve 99% accuracy in AI applications through comprehensive testing, evaluation, and optimization tools.

Openlayer is an enterprise AI platform providing unified AI evaluation, observability, and governance for AI systems, from ML to LLMs. Test, monitor, and govern AI systems throughout the AI lifecycle.

Parea AI is the ultimate experimentation and human annotation platform for AI teams, enabling seamless LLM evaluation, prompt testing, and production deployment to build reliable AI applications.

Freeplay is an AI platform designed to help teams build, test, and improve AI products through prompt management, evaluations, observability, and data review workflows. It streamlines AI development and ensures high product quality.

HoneyHive provides AI evaluation, testing, and observability tools for teams building LLM applications. It offers a unified LLMOps platform.

Infrabase.ai is the directory for discovering AI infrastructure tools and services. Find vector databases, prompt engineering tools, inference APIs, and more to build world-class AI products.

LangChain is an open-source framework that helps developers build, test, and deploy AI agents. It offers tools for observability, evaluation, and deployment, supporting various use cases from copilots to AI search.

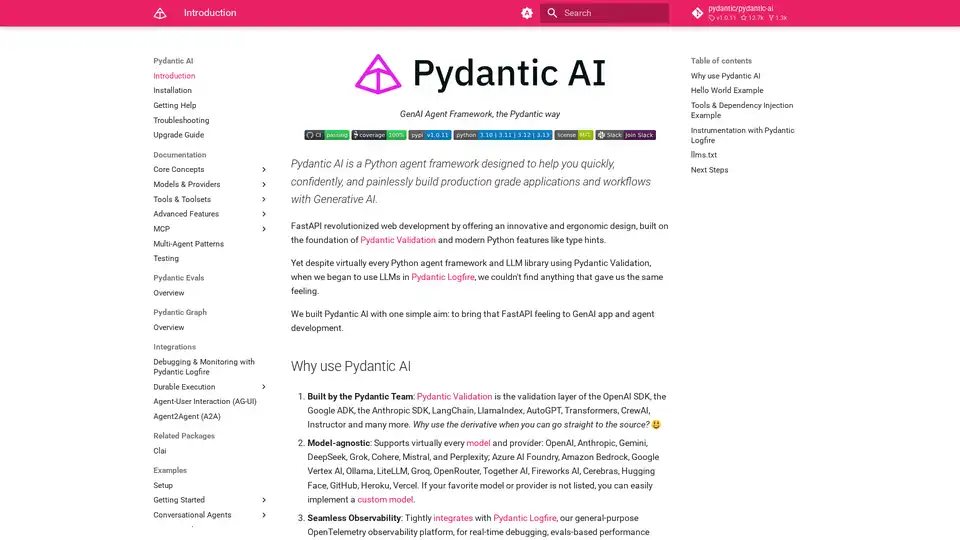

Pydantic AI is a GenAI agent framework in Python, designed for building production-grade applications with Generative AI. Supports various models, offers seamless observability, and ensures type-safe development.

Vivgrid is an AI agent infrastructure platform that helps developers build, observe, evaluate, and deploy AI agents with safety guardrails and low-latency inference. It supports GPT-5, Gemini 2.5 Pro, and DeepSeek-V3.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.