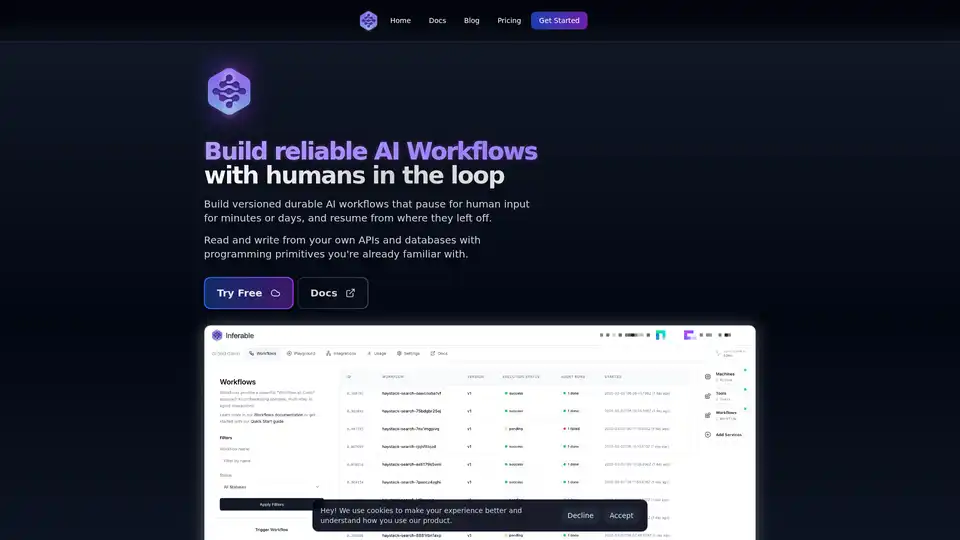

Inferable

Overview of Inferable

What is Inferable?

Inferable is an open-source platform designed to streamline the creation of AI agents, allowing developers to integrate their existing codebases, APIs, and data seamlessly. It focuses on building reliable AI workflows, especially those requiring human-in-the-loop validation.

How does Inferable work?

Inferable provides a set of production-ready LLM primitives that handle many of the complexities involved in building AI workflows. Key features include:

- Workflow Versioning: Lets long-running workflows evolve in a backward-compatible way. As requirements change, you define new versions of the same workflow, and executions already in progress continue on the version they started with until they complete.

- Managed State: Inferable manages all the state required for durable workflows, eliminating the need for developers to provision and manage databases.

- Human-in-the-loop: Build AI workflows that pause for human input for minutes or days, and resume from where they left off.

- Observability: Offers end-to-end observability with a developer console and the ability to plug into existing observability stacks.

- On-Premise Execution: Workflows run on the user's infrastructure, removing the need for a deployment step.

- No Inbound Connections: Your infrastructure only makes outbound connections, so you do not need to open any inbound ports to use Inferable.

- Open Source: Inferable is completely open source, offering full transparency and control over the codebase.
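The Managed State and Human-in-the-loop features fit together: a workflow can only wait days for a person if its progress is saved somewhere. The TypeScript sketch below is a conceptual model, not Inferable's implementation or API. It assumes a key-value store (an in-memory `Map` standing in for the state Inferable manages) that records each completed step's result. When the workflow reaches an approval nobody has given yet, it suspends; once the decision is recorded, re-running the workflow replays saved results instead of repeating the work and continues from the approval point. `DurableContext`, `step`, `approval`, and `run` are hypothetical names.

```typescript
// Conceptual sketch only: this is NOT Inferable's implementation or API.
// A Map stands in for the managed state store (an assumption for illustration).
type StepStore = Map<string, unknown>;

// Thrown (as a plain object, not an Error subclass) when the workflow needs a
// human decision that has not been recorded yet.
class AwaitingApproval {
  constructor(readonly stepName: string) {}
}

class DurableContext {
  constructor(private readonly store: StepStore) {}

  // Runs fn the first time; on later runs, returns the saved result instead.
  step<T>(name: string, fn: () => T): T {
    if (this.store.has(name)) {
      return this.store.get(name) as T;
    }
    const result = fn();
    this.store.set(name, result);
    return result;
  }

  // Returns the recorded human decision, or suspends the workflow if there is none.
  approval(name: string): boolean {
    const key = `approval:${name}`;
    if (!this.store.has(key)) {
      throw new AwaitingApproval(name);
    }
    return this.store.get(key) as boolean;
  }
}

type RunResult<T> =
  | { status: "done"; value: T }
  | { status: "paused"; waitingOn: string };

// Executes (or re-executes) the workflow against the saved state.
function run<T>(store: StepStore, workflow: (ctx: DurableContext) => T): RunResult<T> {
  try {
    return { status: "done", value: workflow(new DurableContext(store)) };
  } catch (err) {
    if (err instanceof AwaitingApproval) {
      return { status: "paused", waitingOn: err.stepName };
    }
    throw err;
  }
}

// Usage: a refund that needs human sign-off.
let fetchCalls = 0;
const refundWorkflow = (ctx: DurableContext): string => {
  const amount = ctx.step("fetchAmount", () => {
    fetchCalls++;
    return 120;
  });
  const approved = ctx.approval("refund");
  return approved ? `refunded ${amount}` : "rejected";
};

const store: StepStore = new Map();
const first = run(store, refundWorkflow); // pauses, waiting on "refund"
store.set("approval:refund", true); // a reviewer approves, possibly much later
const second = run(store, refundWorkflow); // resumes; fetchAmount is replayed, not re-run
```

In this model nothing has to stay in memory between the paused run and the resumed one except what the store holds, which is why the wait can last minutes or days.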

Code Example:

The platform uses a simple, code-driven approach:

```typescript
import { Inferable } from "inferable";
import { z } from "zod";

// fetchCustomerData and fetchTransactionHistory are assumed to be your own
// application functions defined elsewhere; they are not part of the Inferable SDK.

const inferable = new Inferable({
  apiSecret: require("./cluster.json").apiKey,
});

const workflow = inferable.workflows.create({
  name: "customerDataProcessor",
  inputSchema: z.object({
    executionId: z.string(),
    customerId: z.string(),
  }),
});

// Initial version of the workflow
workflow.version(1).define(async (ctx, input) => {
  const customerData = await fetchCustomerData(input.customerId);

  // Process the data with a simple analysis
  const analysis = await ctx.llm.structured({
    input: JSON.stringify(customerData),
    schema: z.object({
      riskLevel: z.enum(["low", "medium", "high"]),
      summary: z.string(),
    }),
  });

  return { analysis };
});

// Enhanced version with more detailed analysis
workflow.version(2).define(async (ctx, input) => {
  const customerData = await fetchCustomerData(input.customerId);
  const transactionHistory = await fetchTransactionHistory(input.customerId);

  // Process the data with more advanced analysis
  const analysis = await ctx.llm.structured({
    input: JSON.stringify({ customerData, transactionHistory }),
    schema: z.object({
      riskLevel: z.enum(["low", "medium", "high"]),
      summary: z.string(),
      recommendations: z.array(z.string()),
      factors: z.array(
        z.object({
          name: z.string(),
          impact: z.enum(["positive", "negative", "neutral"]),
          weight: z.number(),
        }),
      ),
    }),
  });

  return {
    analysis,
    version: 2,
    processedAt: new Date().toISOString(),
  };
});
```

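This example defines and versions a workflow for processing customer data with LLM-based analysis. Version 1 sends the customer record to the model and asks for a risk level and a summary; version 2 also passes the transaction history and additionally requests recommendations and weighted risk factors.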
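One way to picture how in-flight executions stay on their original version is to pin each execution to the version that was current when it started. The sketch below is a hypothetical in-memory model, not Inferable's internals: `VersionedWorkflow`, `define`, and `run` are illustrative names, and it assumes each execution is identified by an ID such as the `executionId` field in the example's input schema.

```typescript
// Hypothetical model of version pinning; NOT Inferable's internals or API.
type Input = { customerId: string };
type Handler = (input: Input) => string;

class VersionedWorkflow {
  private readonly versions = new Map<number, Handler>();
  // executionId -> version the execution started on
  private readonly pinned = new Map<string, number>();

  define(version: number, handler: Handler): void {
    this.versions.set(version, handler);
  }

  // Starts or resumes an execution. New executions take the latest version;
  // existing ones keep the version they were pinned to.
  run(executionId: string, input: Input): string {
    let version = this.pinned.get(executionId);
    if (version === undefined) {
      if (this.versions.size === 0) {
        throw new Error("no workflow versions defined");
      }
      version = Math.max(...Array.from(this.versions.keys()));
      this.pinned.set(executionId, version);
    }
    // Versions are never removed in this sketch, so a pinned version always exists.
    const handler = this.versions.get(version)!;
    return handler(input);
  }
}

// Usage
const wf = new VersionedWorkflow();
wf.define(1, (input) => `v1 analysis for ${input.customerId}`);
const startA = wf.run("exec-a", { customerId: "c1" }); // exec-a is pinned to version 1

wf.define(2, (input) => `v2 analysis for ${input.customerId}`);
const resumeA = wf.run("exec-a", { customerId: "c1" }); // still runs version 1
const startB = wf.run("exec-b", { customerId: "c2" }); // new execution starts on version 2
```

Pinning by execution ID means a new version only affects executions that start after it is defined, which is what keeps the change backward-compatible for work that is already running.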

Why choose Inferable?

- Flexibility: Works with existing programming primitives for control flow, without inverting the programming model.

- Control: Full control over data and compute by self-hosting on your own infrastructure.

- Transparency: Benefit from full transparency and control over the codebase due to its open-source nature.

- Enhanced Security: Outbound-only connections keep your infrastructure closed to inbound traffic.
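Outbound-only connectivity is commonly achieved by having workers poll a control plane for work and post results back, instead of exposing an endpoint the platform calls into. The sketch below models that general pattern in memory. It is an assumption about the approach, not Inferable's actual wire protocol, and `ControlPlane`, `InMemoryControlPlane`, and `drain` are hypothetical names.

```typescript
// Conceptual sketch of an outbound-only worker; NOT Inferable's actual protocol.
interface Job {
  id: string;
  toolName: string;
  input: number;
}

interface JobResult {
  jobId: string;
  output: number;
}

// Everything the worker calls. In a real deployment these would be outbound
// HTTPS requests to a hosted control plane (an assumption for illustration).
interface ControlPlane {
  poll(): Job | undefined;
  submit(result: JobResult): void;
}

// In-memory stand-in so the sketch runs without a network.
class InMemoryControlPlane implements ControlPlane {
  readonly results: JobResult[] = [];
  constructor(private readonly queue: Job[]) {}

  poll(): Job | undefined {
    return this.queue.shift();
  }

  submit(result: JobResult): void {
    this.results.push(result);
  }
}

type Tool = (input: number) => number;

// Pulls jobs until none are left and reports each result back.
// A long-running worker would keep polling, with a delay between empty polls.
function drain(plane: ControlPlane, tools: Record<string, Tool>): number {
  let handled = 0;
  for (let job = plane.poll(); job !== undefined; job = plane.poll()) {
    const tool = tools[job.toolName];
    if (tool === undefined) {
      continue; // unknown tool; a real worker would report an error instead
    }
    plane.submit({ jobId: job.id, output: tool(job.input) });
    handled++;
  }
  return handled;
}

// Usage
const plane = new InMemoryControlPlane([
  { id: "j1", toolName: "double", input: 21 },
  { id: "j2", toolName: "square", input: 3 },
]);
const handled = drain(plane, {
  double: (n) => n * 2,
  square: (n) => n * n,
});
```

Because every request originates from the worker, a firewall in this setup only needs to allow outbound traffic to the control plane.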

Who is Inferable for?

Inferable is ideal for startups and scale-ups looking to build reliable AI-powered workflows with human-in-the-loop validation. It's particularly useful for those who need to:

- Automate complex processes with AI.

- Maintain control over their data and infrastructure.

- Ensure compliance with data privacy regulations.

What is the best way to get started with Inferable?

- Explore the Documentation: Comprehensive documentation is available to guide you through setup and usage.

- Self-Hosting Guide: Provides instructions for deploying Inferable on your own infrastructure.

- GitHub Repository: Access the complete open-source codebase.

Inferable offers a way to implement AI workflows that are versioned, durable, and observable, with the added benefit of human oversight, making it a valuable tool for companies looking to leverage AI in their operations.


Best Alternative Tools to "Inferable"

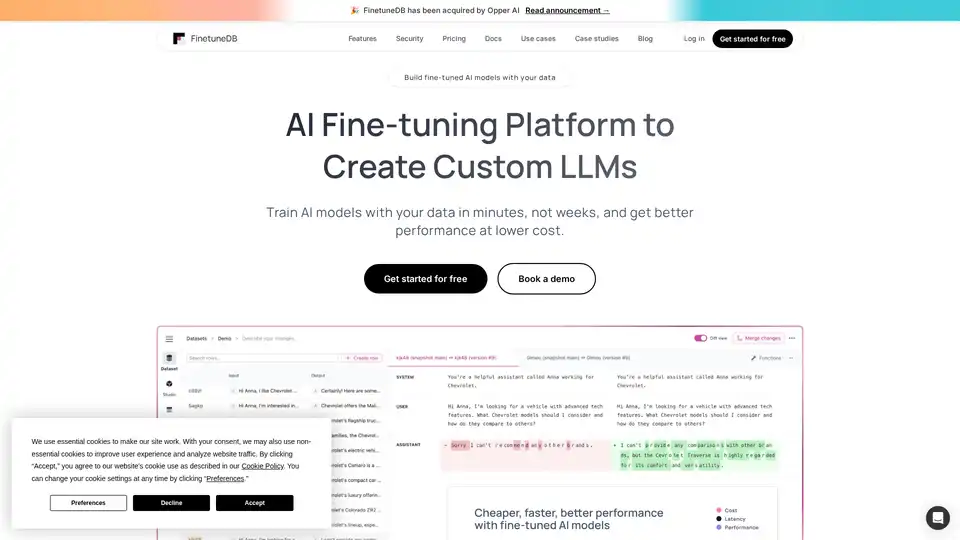

FinetuneDB is an AI fine-tuning platform that lets you create and manage datasets to train custom LLMs quickly and cost-effectively, improving model performance with production data and collaborative tools.

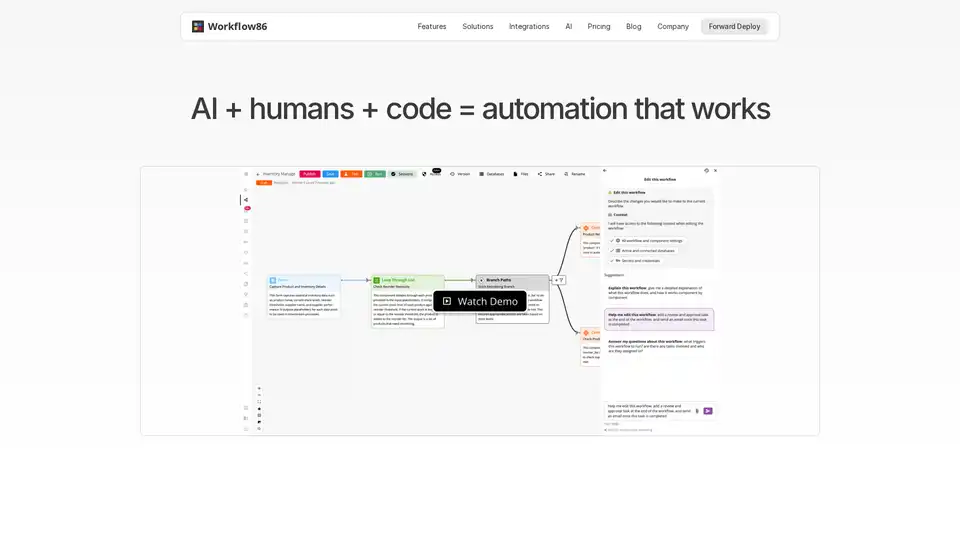

Workflow86 is an AI-powered platform for automating business processes. It combines AI, human-in-the-loop, and code to create flexible workflows. Features include AI assistants, task management, integrations, and custom code execution.

AlphaWatch uses AI agents to analyze complex enterprise data, offering multilingual models, human-in-the-loop automation, and integrations for better decision-making.

Langdock is an all-in-one AI platform designed for enterprises, offering AI chat, assistants, integrations, and workflow automation. It empowers employees and developers to leverage AI effectively.