Runpod

Overview of Runpod

Runpod: The Cloud Built for AI

Runpod is an all-in-one cloud platform designed to streamline the process of training, fine-tuning, and deploying AI models. It caters to AI developers by providing simplified GPU infrastructure and an end-to-end AI cloud solution.

What is Runpod?

Runpod is a comprehensive cloud platform that simplifies the complexities of building and deploying AI models. It offers a range of GPU resources and tools that enable developers to focus on innovation rather than infrastructure management.

How does Runpod work?

Runpod simplifies the AI workflow into a single, cohesive flow, allowing users to move from idea to deployment seamlessly. Here’s how it works:

- Spin Up: Launch a GPU pod in seconds, eliminating provisioning delays.

- Build: Train models, render simulations, or process data without limitations.

- Iterate: Experiment with confidence using instant feedback and safe rollbacks.

- Deploy: Auto-scale across regions with zero idle costs and zero downtime.

Key Features and Benefits:

- On-Demand GPU Resources:

  - Supports over 30 GPU SKUs, from B200s to RTX 4090s.

  - Provides fully loaded, GPU-enabled environments in under a minute.

- Global Deployment:

  - Run workloads across 8+ regions worldwide.

  - Ensures low-latency performance and global reliability.

- Serverless Scaling:

  - Adapts to your workload in real time, scaling from 0 to 100 compute workers.

  - Pay only for what you use.

- Enterprise-Grade Uptime:

  - Handles failovers, ensuring workloads run smoothly.

- Managed Orchestration:

  - The serverless layer queues and distributes tasks for you.

- Real-Time Logs:

  - Provides real-time logs, monitoring, and metrics.
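The "scaling from 0 to 100 compute workers" behaviour listed above can be pictured with a small sketch. This is not Runpod's actual scheduler, whose logic is not described here; it is a minimal illustration assuming a queue-depth rule, and the function name `desired_workers` and the `jobs_per_worker` parameter are hypothetical.

```python
import math


def desired_workers(queue_depth: int,
                    jobs_per_worker: int = 4,
                    min_workers: int = 0,
                    max_workers: int = 100) -> int:
    """Return how many workers a queue of this depth would call for.

    Hypothetical rule: one worker per `jobs_per_worker` queued jobs,
    clamped to the range [min_workers, max_workers].
    """
    if queue_depth < 0:
        raise ValueError("queue_depth must be non-negative")
    if jobs_per_worker <= 0:
        raise ValueError("jobs_per_worker must be positive")

    needed = math.ceil(queue_depth / jobs_per_worker)
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    # An empty queue scales to zero; a very deep queue is capped at the maximum.
    for depth in (0, 3, 40, 1000):
        print(f"{depth} queued jobs -> {desired_workers(depth)} workers")
```

With an empty queue the rule returns zero workers, which is what makes "pay only for what you use" possible; the upper clamp mirrors the 100-worker ceiling mentioned in the list.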

Why choose Runpod?

- Cost-Effective:

  - Runpod is designed to maximize throughput, accelerate scaling, and increase efficiency, so every dollar works harder.

- Flexibility and Scalability:

  - Runpod’s scalable GPU infrastructure provides the flexibility needed to match customer traffic and model complexity.

- Developer-Friendly:

  - Runpod simplifies every step of the AI workflow, allowing developers to focus on building and innovating.

- Reliability:

  - Offers enterprise-grade uptime and handles failovers, so workloads keep running smoothly even when underlying resources fail.

Who is Runpod for?

Runpod is designed for:

- AI developers

- Machine learning engineers

- Data scientists

- Researchers

- Startups

- Enterprises

How to use Runpod?

- Sign Up: Create an account on the Runpod platform.

- Launch a GPU Pod: Choose from a variety of GPU SKUs and launch a fully-loaded environment in seconds.

- Build and Train: Use the environment to train models, render simulations, or process data.

- Deploy: Scale your workloads across multiple regions with zero downtime.

Customer Success Stories:

Many developers and companies have found success using Runpod. Here are a few examples:

- InstaHeadshots: Saved 90% on their infrastructure bill by using bursty compute whenever needed.

- Coframe: Scaled up effortlessly to meet demand at launch, thanks to the flexibility offered by Runpod.
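To see why bursty, pay-per-use compute can cut a bill so sharply, here is a rough arithmetic sketch. The hourly rate and usage figures are made-up placeholders, not Runpod prices and not the customers' actual numbers, and the function names are hypothetical.

```python
def always_on_cost(hourly_rate: float, hours: float) -> float:
    """Cost of keeping one GPU reserved for the whole period."""
    return hourly_rate * hours


def pay_per_use_cost(hourly_rate: float, busy_seconds: float) -> float:
    """Cost when billed only for the seconds the GPU is actually busy."""
    return hourly_rate * busy_seconds / 3600


def savings_fraction(reserved: float, on_demand: float) -> float:
    """Fraction of the reserved bill saved by switching to pay-per-use."""
    if reserved <= 0:
        raise ValueError("reserved cost must be positive")
    return 1 - on_demand / reserved


if __name__ == "__main__":
    # Assumed figures: a $2.00/hour GPU that is busy 3 hours out of 24.
    rate = 2.00
    reserved = always_on_cost(rate, hours=24)
    bursty = pay_per_use_cost(rate, busy_seconds=3 * 3600)
    print(f"always-on: ${reserved:.2f}, pay-per-use: ${bursty:.2f}")
    print(f"savings: {savings_fraction(reserved, bursty):.1%}")
```

The savings depend entirely on utilisation: the lower the share of time the GPU is busy, the larger the gap between reserved and per-use billing.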

Real-world Applications

Runpod is versatile and supports various applications, including:

- Inference

- Fine-tuning

- AI Agents

- Compute-heavy tasks

By choosing Runpod, organizations can:

- Reduce infrastructure management overhead.

- Accelerate AI development cycles.

- Achieve cost-effective scaling.

- Ensure reliable performance.

Runpod makes infrastructure management its job, allowing you to focus on building what’s next. Whether you’re a startup or an enterprise, Runpod’s AI cloud platform provides the resources and support needed to bring your AI projects to life.

In summary, Runpod offers a comprehensive, cost-effective, and scalable solution for AI development and deployment. It is an ideal platform for developers looking to build, train, and scale machine learning models efficiently.

Best Alternative Tools to "Runpod"

Novita AI provides 200+ Model APIs, custom deployment, GPU Instances, and Serverless GPUs. Scale AI, optimize performance, and innovate with ease and efficiency.

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. Features H100 GPUs, per-second billing, and Python execution.

Modal is a serverless platform for AI and data teams. Run CPU, GPU, and data-intensive compute at scale with your own code.

GreenNode offers comprehensive AI-ready infrastructure and cloud solutions with H100 GPUs, starting from $2.34/hour. Access pre-configured instances and a full-stack AI platform for your AI journey.

Lumino is an easy-to-use SDK for AI training on a global cloud platform. Reduce ML training costs by up to 80% and access GPUs not available elsewhere. Start training your AI models today!

Design and generate floor plans with AI for free using Floor Plan AI. Turn text or sketches into layouts and 3D-ready visuals in just a few clicks. No signup needed.

Denvr Dataworks provides high-performance AI compute services, including on-demand GPU cloud, AI inference, and a private AI platform. Accelerate your AI development with NVIDIA H100, A100 & Intel Gaudi HPUs.

Anyscale, powered by Ray, is a platform for running and scaling all ML and AI workloads on any cloud or on-premises. Build, debug, and deploy AI applications with ease and efficiency.

Nebius is an AI cloud platform designed to democratize AI infrastructure, offering flexible architecture, tested performance, and long-term value with NVIDIA GPUs and optimized clusters for training and inference.

Enable efficient LLM inference with llama.cpp, a C/C++ library optimized for diverse hardware, supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.

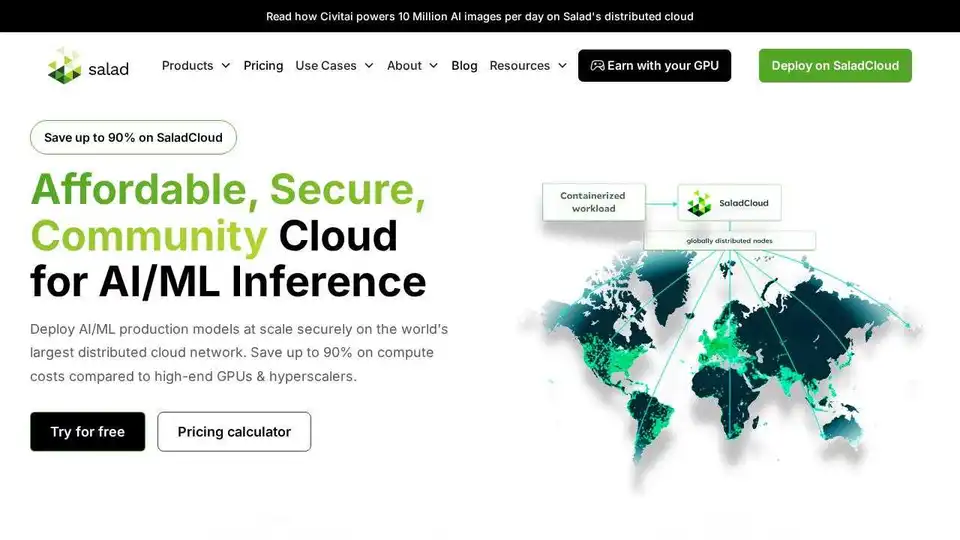

SaladCloud offers affordable, secure, and community-driven distributed GPU cloud for AI/ML inference. Save up to 90% on compute costs. Ideal for AI inference, batch processing, and more.

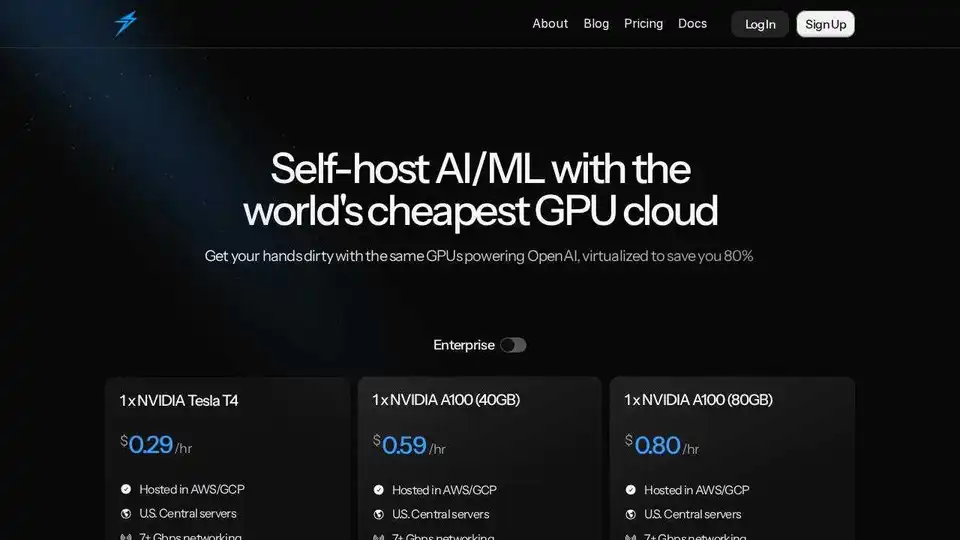

Thunder Compute is a GPU cloud platform for AI/ML, offering one-click GPU instances in VSCode at prices 80% lower than competitors. Perfect for researchers, startups, and data scientists.

Rent high-performance GPUs at low cost with Vast.ai. Instantly deploy GPU rentals for AI, machine learning, deep learning, and rendering. Flexible pricing & fast setup.