Nebius AI Studio Inference Service

Overview of Nebius AI Studio Inference Service

What is Nebius AI Studio Inference Service?

Nebius AI Studio Inference Service is a powerful platform designed to help developers and enterprises run state-of-the-art open-source AI models with enterprise-grade performance. Launched as a key product from Nebius, it simplifies the deployment of large language models (LLMs) for inference tasks, eliminating the need for complex MLOps setups. Whether you're building AI applications, prototypes, or scaling to production, this service provides endpoints for popular models like Meta's Llama series, DeepSeek-R1, and Mistral variants, ensuring high accuracy, low latency, and cost efficiency.

At its core, the service hosts these models on optimized infrastructure in Europe (Finland) with an efficient serving pipeline. This setup is designed for low latency, especially time to first token, which makes it suitable for real-time applications such as chatbots, RAG (Retrieval-Augmented Generation), and contextual AI scenarios. The service also promises unlimited scalability, so you can move from initial testing to high-volume production without performance bottlenecks or hidden limits.

How Does Nebius AI Studio Inference Service Work?

The service operates through a straightforward API that's compatible with familiar libraries like OpenAI's SDK, making integration seamless for developers already using similar tools. To get started, sign up for free credits and access the Playground—a user-friendly web interface for testing models without coding. From there, you can switch to API calls for programmatic use.
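Because the API is OpenAI-compatible, the SDK is a convenience rather than a requirement. The following is a minimal standard-library sketch, assuming the usual OpenAI REST layout (a POST to `/chat/completions` under the base URL, authenticated with a `Bearer` API key); `build_chat_request` is a hypothetical helper name. It only builds the request, so it runs without a key or network access.

```python
import json
import os
import urllib.request

# Assumption: the endpoint follows the OpenAI REST layout, i.e. chat requests
# are POSTed to {BASE_URL}/chat/completions with a Bearer token.
BASE_URL = "https://api.studio.nebius.com/v1"


def build_chat_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(
        "What is the answer to all questions?",
        "meta-llama/Meta-Llama-3.1-8B-Instruct-fast",
        os.environ.get("NEBIUS_API_KEY", ""),
    )
    # Sending it requires a valid key and network access:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
    print(req.get_method(), req.full_url)
```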

Here's a basic example of how to interact with it using Python:

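```python
import os

import openai

# The endpoint is OpenAI-compatible, so the official OpenAI SDK works
# once it is pointed at the Nebius base URL.
client = openai.OpenAI(
    api_key=os.environ.get("NEBIUS_API_KEY"),
    base_url="https://api.studio.nebius.com/v1",
)

completion = client.chat.completions.create(
    model="meta-llama/Meta-Llama-3.1-8B-Instruct-fast",  # "-fast" selects the fast flavor
    messages=[{"role": "user", "content": "What is the answer to all questions?"}],
)

# Print the model's reply.
print(completion.choices[0].message.content)
```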

This snippet queries Meta-Llama-3.1-8B-Instruct in its 'fast' flavor. The service offers two flavors: 'fast', which costs more and suits speed-critical tasks, and 'base', which is more economical and suits bulk workloads. Nebius tests every model to verify output quality; in its benchmarks, Llama-3.1-405B rivals proprietary models such as GPT-4o while costing up to 3x less on input tokens.
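Choosing a flavor per request can be wrapped in a small helper. This is a sketch under an assumption: the only naming evidence here is the example ID ending in "-fast", so the helper assumes the 'fast' variant is the base model ID plus a "-fast" suffix and the 'base' variant is the ID without it. Check the actual IDs in the Playground before relying on this; `model_id_for` and `pick_flavor` are hypothetical names.

```python
# Assumption: inferred from the example model ID; verify against the
# model list before use.
FAST_SUFFIX = "-fast"


def model_id_for(base_model: str, flavor: str) -> str:
    """Return the model ID for the requested flavor ('fast' or 'base')."""
    if flavor not in ("fast", "base"):
        raise ValueError(f"unknown flavor: {flavor!r}")
    # Strip an existing suffix so the helper works on either form of the ID.
    if base_model.endswith(FAST_SUFFIX):
        base_model = base_model[: -len(FAST_SUFFIX)]
    return base_model + FAST_SUFFIX if flavor == "fast" else base_model


def pick_flavor(latency_sensitive: bool) -> str:
    """Interactive traffic goes to 'fast'; bulk or offline jobs go to 'base'."""
    return "fast" if latency_sensitive else "base"


if __name__ == "__main__":
    print(model_id_for("meta-llama/Meta-Llama-3.1-8B-Instruct", pick_flavor(True)))
    print(model_id_for("meta-llama/Meta-Llama-3.1-8B-Instruct", pick_flavor(False)))
```

Routing by latency sensitivity keeps chat-style traffic on the faster endpoint while batch jobs such as document summarization use the cheaper one.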

Data security is a priority, with servers in Finland adhering to strict European regulations. No data leaves the infrastructure unnecessarily, and users can request dedicated instances for enhanced isolation via the self-service console or support team.

Core Features and Main Advantages

Nebius AI Studio stands out with several key features that address common pain points in AI inference:

Unlimited Scalability Guarantee: Run models without quotas or throttling. Seamlessly scale from prototypes to production, handling diverse workloads effortlessly.

Cost Optimization: Pay only for what you use, with input-token pricing up to 3x cheaper than competitors. New accounts start with $1 in free credits, and the 'base' flavor keeps costs low for RAG and long-context applications.
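Why the input-token price matters so much for RAG can be shown with simple per-million-token arithmetic. The prices below are placeholders, not actual Nebius or competitor rates; they only model one provider charging 3x more for input tokens at the same output price.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 usd_per_m_input: float, usd_per_m_output: float) -> float:
    """USD cost of one request under per-million-token pricing."""
    return (input_tokens * usd_per_m_input
            + output_tokens * usd_per_m_output) / 1_000_000


# Placeholder prices in USD per million tokens (input, output); NOT real rates.
# Provider B charges 3x more for input tokens and the same for output tokens.
PROVIDER_A = (0.10, 0.30)
PROVIDER_B = (0.30, 0.30)

# (input_tokens, output_tokens): a short chat turn vs. a RAG query whose
# prompt carries a long retrieved context.
WORKLOADS = {"chat": (200, 400), "RAG": (8_000, 300)}

for name, (n_in, n_out) in WORKLOADS.items():
    a = request_cost(n_in, n_out, *PROVIDER_A)
    b = request_cost(n_in, n_out, *PROVIDER_B)
    print(f"{name}: A={a:.6f} USD, B={b:.6f} USD, B/A={b / a:.2f}x")
```

With these numbers the RAG request is about 2.8x cheaper on provider A, while the output-heavy chat turn is only about 1.3x cheaper: an input-token discount pays off most when prompts are long.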

Ultra-Low Latency: Optimized pipelines deliver a fast time to first token, particularly for users in Europe. Nebius's benchmark results show better performance than rival providers, even on complex reasoning tasks.
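To check time to first token for your own workload rather than relying on published benchmarks, you can time a streamed response. This is a minimal sketch: `measure_stream` is a hypothetical helper that takes a callable which sends the request and returns an iterable of text pieces, so the request itself falls inside the timed window.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_stream(open_stream: Callable[[], Iterable[str]]) -> Tuple[float, float, str]:
    """Time a streamed completion.

    `open_stream` should send the request and return an iterable of text
    pieces. Returns (time_to_first_token_s, total_time_s, full_text); the
    first non-empty piece counts as the first token.
    """
    start = time.perf_counter()
    first = None
    parts = []
    for piece in open_stream():
        if piece and first is None:
            first = time.perf_counter() - start
        parts.append(piece)
    total = time.perf_counter() - start
    # If no non-empty piece arrived, fall back to the total time.
    return (first if first is not None else total), total, "".join(parts)
```

With the OpenAI SDK client from the earlier example, `open_stream` could call `client.chat.completions.create(..., stream=True)` and return `(c.choices[0].delta.content or "" for c in stream if c.choices)`. Taking a callable rather than an already-open stream matters because the SDK sends the request when `create` is called, before iteration starts.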

Verified Model Quality: Each model is tested for accuracy across math, code, reasoning, and multilingual capabilities. Available models include:

- Meta Llama-3.3-70B-Instruct: enhanced text performance (128k context).

- Meta Llama-3.1-405B-Instruct: performance comparable to GPT-4 (128k context).

- DeepSeek-R1: MIT-licensed; excels at math and code (128k context).

- Mixtral-8x22B-Instruct-v0.1: mixture-of-experts (MoE) model for coding and math, with multilingual support (65k context).

- OLMo-7B-Instruct: fully open, with published training data (2k context).

- Phi-3-mini-4k-instruct: strong at reasoning (4k context).

- Mistral-Nemo-Instruct-2407: compact 12B model that outperforms some larger models (128k context).

More models are added regularly—check the Playground for the latest.
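The context lengths above decide which models can take a given prompt. The sketch below checks fit using the values from the list (reading "128k" as 131,072 tokens, "65k" as 65,536, and so on) and a rough 4-characters-per-token heuristic; both the heuristic and the dictionary keys (display names from the list, not necessarily API model IDs) are assumptions, and a real tokenizer will give different counts.

```python
from typing import List

# Context windows from the model list, in tokens. Keys are display names,
# not necessarily the model IDs the API expects.
CONTEXT_TOKENS = {
    "Meta Llama-3.3-70B-Instruct": 131_072,
    "Meta Llama-3.1-405B-Instruct": 131_072,
    "DeepSeek-R1": 131_072,
    "Mixtral-8x22B-Instruct-v0.1": 65_536,
    "OLMo-7B-Instruct": 2_048,
    "Phi-3-mini-4k-instruct": 4_096,
    "Mistral-Nemo-Instruct-2407": 131_072,
}

CHARS_PER_TOKEN = 4  # rough heuristic for English text; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Approximate token count using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits(prompt: str, model: str, max_output_tokens: int = 512) -> bool:
    """True if the estimated prompt plus reserved output fits the window."""
    return estimate_tokens(prompt) + max_output_tokens <= CONTEXT_TOKENS[model]


def models_that_fit(prompt: str, max_output_tokens: int = 512) -> List[str]:
    return sorted(m for m in CONTEXT_TOKENS if fits(prompt, m, max_output_tokens))
```

Reserving room for the output matters: a prompt that exactly fills the window leaves the model no space to answer.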

No MLOps Required: Pre-configured infrastructure means you focus on building, not managing servers or deployments.

Simple UI and API: The Playground offers a no-code environment for experimentation, while the API supports easy integration into apps.

These features make the service not just efficient but also accessible, backed by benchmarks showing better speed and cost for models like Llama-405B.

Who is Nebius AI Studio Inference Service For?

This service targets a wide range of users, from individual developers prototyping AI apps to enterprises handling large-scale production workloads. It's ideal for:

App Builders and Startups: Simplify foundation model integration without heavy infrastructure costs. The free credits and Playground lower the entry barrier.

Enterprises in Gen AI, RAG, and ML Inference: Perfect for industries like biotech, media, entertainment, and finance needing reliable, scalable AI for data preparation, fine-tuning, or real-time processing.

Researchers and ML Engineers: Access top open-source models with verified quality, supporting tasks in reasoning, coding, math, and multilingual applications. Programs like Research Cloud Credits add value for academic pursuits.

Teams Seeking Cost Efficiency: Businesses tired of expensive proprietary APIs will appreciate savings of up to 3x on input tokens and flexible pricing, especially for contextual scenarios.

The service is built for production workloads, with options for custom models (via request forms) and dedicated instances.

Why Choose Nebius AI Studio Over Competitors?

In a crowded AI landscape, Nebius differentiates through its focus on open-source excellence. Unlike proprietary APIs that lock you into vendor ecosystems, Nebius offers freedom with models under licenses like Apache 2.0, MIT, and Llama-specific terms—all while matching or exceeding performance. Users save on costs without sacrificing speed or accuracy, as evidenced by benchmarks: faster time-to-first-token in Europe and comparable quality to GPT-4o.

Community engagement via X/Twitter, LinkedIn, and Discord provides updates, technical support, and discussions, fostering a collaborative environment. For security-conscious users, European hosting ensures compliance, and the service avoids unnecessary data tracking.

How to Get Started with Nebius AI Studio

Getting up to speed is quick:

- Sign Up: Create an account and claim $1 in free credits.

- Explore the Playground: Test models interactively via the web UI.

- Integrate via API: Use the OpenAI-compatible endpoint with your API key.

- Scale and Optimize: Choose flavors, request models, or contact sales for enterprise needs.

- Monitor and Adjust: Track usage to stay within budget, with options for dedicated resources.
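For the monitoring step, one option is to accumulate the token counts each response reports and compare estimated spend against a budget. The sketch below assumes OpenAI-style responses, where `completion.usage.prompt_tokens` and `completion.usage.completion_tokens` carry the counts; `UsageTracker` is a hypothetical class, and its prices must be filled in from the AI Studio pricing page.

```python
from dataclasses import dataclass


@dataclass
class UsageTracker:
    """Accumulates token usage and estimated spend against a budget.

    Prices are USD per million tokens; take them from the pricing page.
    """
    usd_per_m_input: float
    usd_per_m_output: float
    budget_usd: float
    input_tokens: int = 0
    output_tokens: int = 0

    def record(self, prompt_tokens: int, completion_tokens: int) -> None:
        """Add the token counts reported for one request."""
        self.input_tokens += prompt_tokens
        self.output_tokens += completion_tokens

    @property
    def spent_usd(self) -> float:
        return (self.input_tokens * self.usd_per_m_input
                + self.output_tokens * self.usd_per_m_output) / 1_000_000

    @property
    def remaining_usd(self) -> float:
        return self.budget_usd - self.spent_usd

    @property
    def over_budget(self) -> bool:
        return self.spent_usd > self.budget_usd
```

After each call, `tracker.record(completion.usage.prompt_tokens, completion.usage.completion_tokens)` updates the totals; setting `budget_usd=1.0` mirrors the free starting credit.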

For custom requests, log in and use the form to suggest additional open-source models. Pricing details are transparent—check the AI Studio pricing page for endpoint costs based on speed vs. economy.

Real-World Use Cases and Practical Value

Nebius AI Studio powers diverse applications:

RAG Systems: Economical token handling for retrieval-augmented queries in search or knowledge bases.

Chatbots and Assistants: Low-latency responses for customer service or virtual agents.

Code Generation and Math Solvers: Leverage models like DeepSeek-R1 or Mixtral for developer tools.

Content Creation: Multilingual support in Mistral models for global apps.
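For the RAG case, the request sent to the chat endpoint is an ordinary messages list with the retrieved passages placed in the prompt. This is a minimal sketch: the word-overlap retriever is a toy stand-in for a real embedding search, and `top_k_passages` and `build_rag_messages` are hypothetical names.

```python
import re
from typing import Dict, List, Set


def _terms(text: str) -> Set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_k_passages(question: str, passages: List[str], k: int = 2) -> List[str]:
    """Rank passages by word overlap with the question (a toy retriever)."""
    q = _terms(question)
    ranked = sorted(passages, key=lambda p: len(q & _terms(p)), reverse=True)
    return ranked[:k]


def build_rag_messages(question: str, passages: List[str], k: int = 2) -> List[Dict[str, str]]:
    """Assemble chat messages that ground the answer in retrieved passages."""
    chosen = top_k_passages(question, passages, k)
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(chosen))
    return [
        {"role": "system",
         "content": "Answer using only the numbered context passages. "
                    "If the answer is not there, say so."},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]
```

The returned list can be passed as `messages=` to `client.chat.completions.create`. Since retrieved context makes prompts input-heavy, this is the kind of workload the 'base' flavor is pitched at.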

The practical value lies in its balance of performance and affordability, enabling faster innovation. Users report seamless scaling and reliable outputs, reducing development time and costs. For instance, in media and entertainment, it accelerates Gen AI services; in biotech, it supports data analysis without MLOps overhead.

In summary, Nebius AI Studio Inference Service is the go-to for anyone seeking high-performance open-source AI inference. It empowers users to build smarter applications with ease, delivering real ROI through efficiency and scalability. Switch to Nebius today and experience the difference in speed, savings, and simplicity.

Best Alternative Tools to "Nebius AI Studio Inference Service"

Baseten is a platform for deploying and scaling AI models in production. It offers performant model runtimes, cross-cloud high availability, and seamless developer workflows, powered by the Baseten Inference Stack.

CHAI AI is a leading conversational AI platform focused on research and development of generative AI models. It offers tools and infrastructure for building and deploying social AI applications, emphasizing user feedback and incentives.

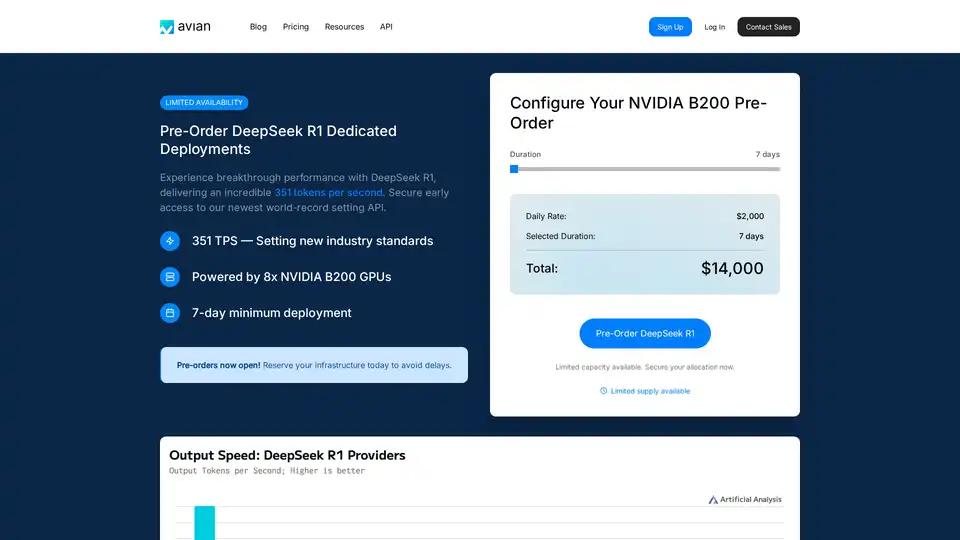

Avian API offers the fastest AI inference for open source LLMs, achieving 351 TPS on DeepSeek R1. Deploy any Hugging Face LLM at 3-10x speed with an OpenAI-compatible API. Enterprise-grade performance and privacy.

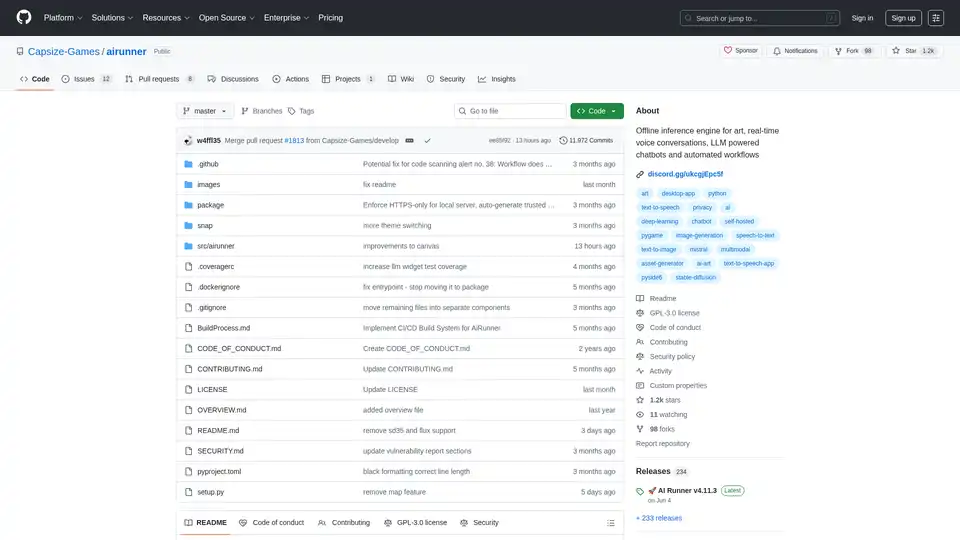

AI Runner is an offline AI inference engine for art, real-time voice conversations, LLM-powered chatbots, and automated workflows. Run image generation, voice chat, and more locally!

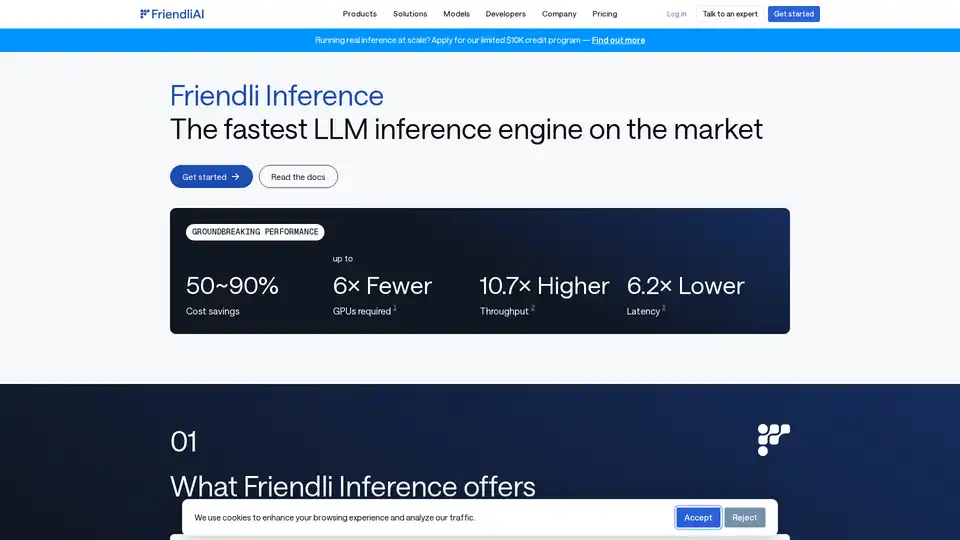

Friendli Inference is the fastest LLM inference engine, optimized for speed and cost-effectiveness, slashing GPU costs by 50-90% while delivering high throughput and low latency.

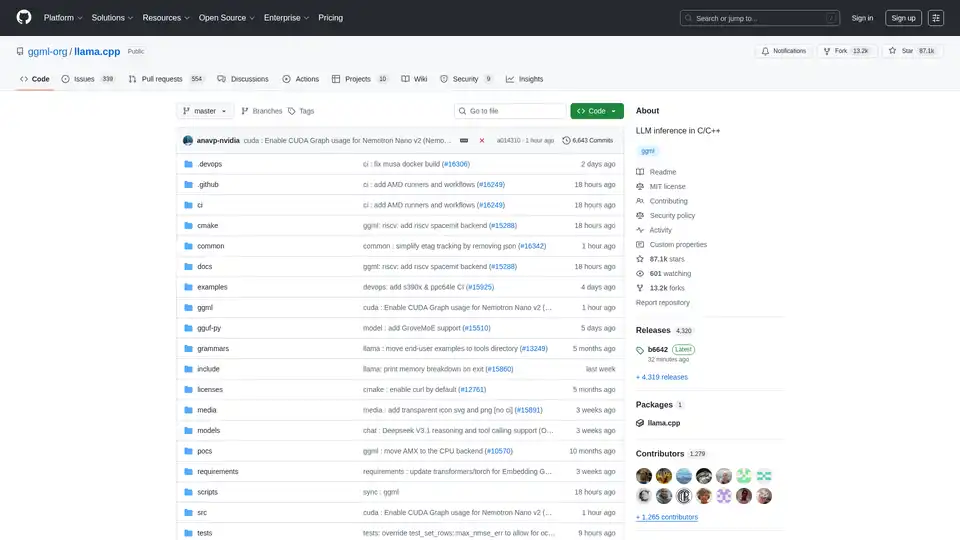

llama.cpp is a C/C++ library for efficient LLM inference, optimized for diverse hardware and supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.

Gnothi is an AI-powered journal that provides personalized insights and resources for self-reflection, behavior tracking, and personal growth through intelligent analysis of your entries.

SiliconFlow is a lightning-fast AI platform for developers: deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.


xTuring is an open-source library that empowers users to customize and fine-tune Large Language Models (LLMs) efficiently, focusing on simplicity, resource optimization, and flexibility for AI personalization.

Falcon LLM is an open-source generative large language model family from TII, featuring models like Falcon 3, Falcon-H1, and Falcon Arabic for multilingual, multimodal AI applications that run efficiently on everyday devices.

PremAI is an applied AI research lab providing secure, personalized AI models, encrypted inference with TrustML™, and open-source tools like LocalAI for running LLMs locally.

Predibase is a developer platform for fine-tuning and serving open-source LLMs. Achieve unmatched accuracy and speed with end-to-end training and serving infrastructure, featuring reinforcement fine-tuning.

Fireworks AI delivers blazing-fast inference for generative AI using state-of-the-art, open-source models. Fine-tune and deploy your own models at no extra cost. Scale AI workloads globally.