AnimateDiff

Overview of AnimateDiff

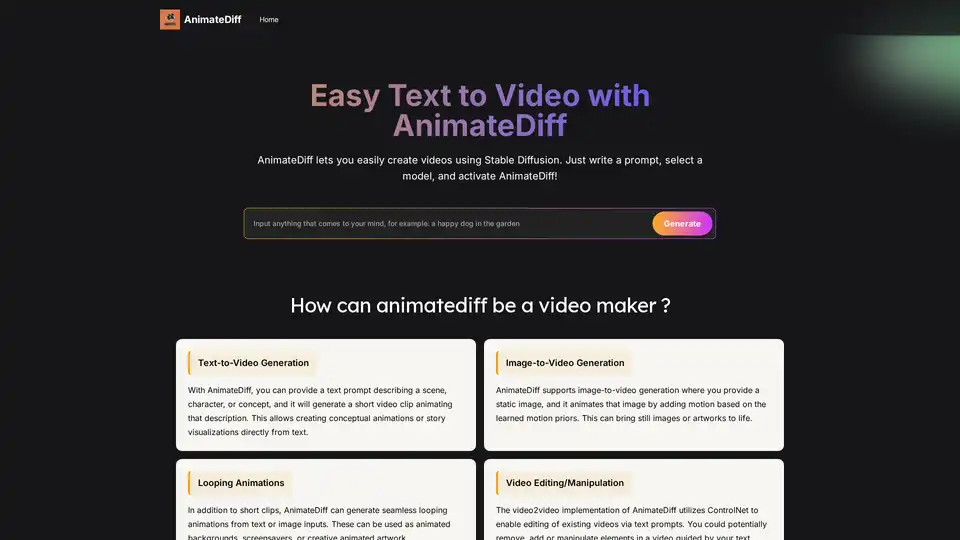

What is AnimateDiff?

AnimateDiff is an innovative AI tool that transforms static images or text prompts into dynamic animated videos by generating smooth sequences of frames. Built on the foundation of Stable Diffusion, it integrates specialized motion modules to predict and apply realistic movements, making it a game-changer for AI-driven video creation. Whether you're an artist sketching ideas or a developer prototyping visuals, AnimateDiff streamlines the process of turning concepts into engaging animations without the need for manual frame-by-frame work. This open-source framework, available via extensions like those for AUTOMATIC1111's WebUI, empowers users to leverage diffusion models for text-to-video and image-to-video generation, opening doors to efficient content creation in fields like art, gaming, and education.

How Does AnimateDiff Work?

At its core, AnimateDiff combines pre-trained text-to-image or image-to-image diffusion models, such as Stable Diffusion, with a dedicated motion module. The base model stays frozen; only the motion module is trained, on diverse real-world video clips, to capture common motion patterns, dynamics, and transitions so that animations feel natural and lifelike.
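The motion module is built around temporal attention: at each spatial position, the features of every frame attend to the features of the other frames, which is how frames share information about movement. The toy sketch below illustrates only that idea in pure Python. It assumes identity query/key/value projections and leaves out the learned weights, positional encodings, and residual connections of the real module, so treat it as an illustration, not AnimateDiff's implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(frames: List[List[Vector]]) -> List[List[Vector]]:
    """Toy temporal self-attention (identity Q/K/V, no learned weights).

    frames[f][p] is the feature vector of frame f at spatial position p.
    At each position, every frame's vector is replaced by an attention-weighted
    average of that same position's vectors across all frames.
    """
    num_frames = len(frames)
    num_positions = len(frames[0])
    dim = len(frames[0][0])
    scale = 1.0 / math.sqrt(dim)
    out: List[List[Vector]] = [[[] for _ in range(num_positions)] for _ in range(num_frames)]
    for p in range(num_positions):
        # One spatial position gathered across all frames: the temporal sequence.
        sequence = [frames[f][p] for f in range(num_frames)]
        for f, query in enumerate(sequence):
            scores = [scale * sum(q * k for q, k in zip(query, key)) for key in sequence]
            weights = softmax(scores)
            out[f][p] = [sum(w * vec[d] for w, vec in zip(weights, sequence)) for d in range(dim)]
    return out


if __name__ == "__main__":
    # Two frames, one spatial position, 1-D features: frame 0 "dark", frame 1 "bright".
    mixed = temporal_attention([[[0.0]], [[2.0]]])
    print(mixed)  # each frame's value is pulled toward the other frame's value
```

Because each output is a weighted average over frames, identical frames pass through unchanged, while differing frames are nudged toward one another, a crude stand-in for the temporal consistency the trained module learns.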

Text-to-Video Process

- Input Prompt: Start with a descriptive text prompt outlining the scene, characters, actions, or concepts, e.g., "a serene forest with dancing fireflies at dusk."

- Base Model Generation: The Stable Diffusion backbone determines what each frame looks like (subject, style, composition) based on the prompt.

- Motion Integration: Motion module layers inserted into the backbone attend across frames, so the whole batch of frames is denoised together and consecutive frames stay consistent, producing smooth transitions instead of unrelated stills.

- Output Rendering: The denoised frames are decoded and assembled into a short video clip or GIF, typically 16-24 frames at 8-16 FPS, showcasing animated elements in motion.
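Clip length follows directly from frame count and frame rate (duration = frames / FPS), so the typical settings of 16-24 frames at 8-16 FPS give clips of roughly 1 to 3 seconds. A minimal Python sketch of that arithmetic; the function name is illustrative and not part of AnimateDiff.

```python
def clip_duration_seconds(num_frames: int, fps: float) -> float:
    """Length of an animation in seconds: frame count divided by frames per second."""
    if num_frames <= 0 or fps <= 0:
        raise ValueError("num_frames and fps must be positive")
    return num_frames / fps


if __name__ == "__main__":
    # Corners of the typical range: 16-24 frames at 8-16 FPS.
    for frames in (16, 24):
        for fps in (8, 16):
            print(f"{frames} frames @ {fps} FPS -> {clip_duration_seconds(frames, fps):.2f} s")
```

For example, 16 frames at 8 FPS play for 2 seconds, while the same 16 frames at 16 FPS last only 1 second, so raising FPS without adding frames shortens the clip.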

Image-to-Video Process

For animating existing visuals:

- Upload Image: Provide a static photo, artwork, or AI-generated image.

- Image Conditioning: Stable Diffusion's img2img uses the image as the starting point, so the generated frames stay close to the original's content and style.

- Motion Application: The motion module adds inferred dynamics, animating elements like objects or backgrounds.

- Final Video: The result is a clip in which the original image comes alive, ideal for animating digital art or personal photos.
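In img2img generally (this is standard Stable Diffusion behavior, not something specific to AnimateDiff), how closely the output follows the uploaded image depends on the denoising strength. In the convention used by Hugging Face diffusers' img2img pipelines, a strength between 0 and 1 sets how many of the scheduled denoising steps actually run: lower strength means fewer steps and output closer to the source image. The sketch below reproduces only that arithmetic; the function name is hypothetical.

```python
def img2img_denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Number of denoising steps actually run for a given img2img strength.

    Mirrors the diffusers-style rule: min(int(steps * strength), steps).
    strength=0 runs no denoising steps; strength=1 runs the full schedule,
    starting from (nearly) pure noise.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)


if __name__ == "__main__":
    for strength in (0.3, 0.5, 0.75, 1.0):
        print(f"strength {strength}: {img2img_denoising_steps(25, strength)} of 25 steps")
```

This is why a low strength keeps the animation recognizably tied to the original image, while a high strength gives the model (and the motion module) more freedom to change it.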

This plug-and-play approach means the base model does not need to be retrained: you simply add the motion modules to your existing Stable Diffusion setup. Users can refine outputs with advanced options like Motion LoRA for camera effects (panning, zooming) or ControlNet for guiding motion from reference videos, improving controllability and creativity.
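Motion LoRAs apply LoRA (low-rank adaptation) to the motion module: rather than retraining it, a small pair of low-rank matrices is trained for one camera movement and added on top of the frozen weights, scaled by a user-chosen weight. The pure-Python sketch below shows only the generic LoRA update, W' = W + scale * (alpha / r) * (B A), on plain nested lists. The names and tiny matrices are illustrative; real implementations apply this to the network's attention layers using tensor libraries.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product (rows of a times columns of b)."""
    inner = len(b)
    cols = len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)] for i in range(len(a))]


def apply_lora(weight: Matrix, up: Matrix, down: Matrix, alpha: float, scale: float = 1.0) -> Matrix:
    """Return W + scale * (alpha / r) * (up @ down).

    weight: d_out x d_in frozen base weight
    up:     d_out x r  ("B" in the LoRA paper's notation)
    down:   r x d_in   ("A" in the LoRA paper's notation)
    scale:  user-facing strength, e.g. a LoRA weight slider (illustrative)
    """
    rank = len(down)
    delta = matmul(up, down)
    factor = scale * alpha / rank
    return [[w + factor * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(weight, delta)]


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0]]
    up = [[1.0], [0.0]]    # 2 x 1
    down = [[0.5, 0.5]]    # 1 x 2, so the update has rank 1
    print(apply_lora(base, up, down, alpha=1.0, scale=0.8))
```

Setting `scale` to 0 recovers the original weights exactly, which is why a LoRA weight of zero behaves as if the LoRA were not loaded.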

Key Features of AnimateDiff

- Plug-and-Play Integration: Works with Stable Diffusion v1.5 models via extensions, with no heavy setup needed for basic use.

- Versatile Generation Modes: Supports text-to-video, image-to-video, looping animations, and even video-to-video editing with text guidance.

- Personalization Options: Combine with DreamBooth or LoRA to animate custom subjects trained on your datasets.

- Advanced Controls: Adjust FPS, frame count, and context batch size for smoother motion; enable closed loops for seamless cycles or add reverse frames for extended fluidity.

- Efficiency: Because it reuses existing image models, AnimateDiff avoids training a monolithic video model from scratch and generates short clips quickly on capable hardware.
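The context batch size and closed-loop options exist because a motion module attends over a fixed number of frames at once (the SD v1.5 modules were trained on 16-frame clips), so longer animations are typically processed as overlapping windows of frames whose results are blended where they overlap; a closed loop lets the last window wrap around to the first frames. The sketch below shows one simple way to schedule such windows, plus a ping-pong helper in the spirit of the reverse-frames option. The function names, the `overlap` parameter, and the exact scheduling are assumptions for illustration; the extension's own scheduler differs in its details.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def context_windows(num_frames: int, context_size: int = 16, overlap: int = 4,
                    closed_loop: bool = False) -> List[List[int]]:
    """Split frame indices into overlapping windows of context_size frames.

    Hypothetical scheduler: consecutive windows share `overlap` frames.
    With closed_loop=True, windows wrap past the last frame back to frame 0,
    so the end of the clip is processed together with its beginning.
    """
    if overlap < 0 or context_size <= overlap:
        raise ValueError("need 0 <= overlap < context_size")
    if num_frames <= context_size:
        return [list(range(num_frames))]  # everything fits in a single window
    stride = context_size - overlap
    if closed_loop:
        return [[(start + i) % num_frames for i in range(context_size)]
                for start in range(0, num_frames, stride)]
    starts = list(range(0, num_frames - context_size + 1, stride))
    if starts[-1] != num_frames - context_size:
        starts.append(num_frames - context_size)  # last window ends exactly at the final frame
    return [list(range(start, start + context_size)) for start in starts]


def add_reverse_frames(frames: Sequence[T]) -> List[T]:
    """Ping-pong: play the frames forward, then backward.

    The first and last frames are not repeated, so the sequence loops
    without a stutter at either turnaround.
    """
    if len(frames) < 3:
        return list(frames)
    return list(frames) + list(frames[-2:0:-1])


if __name__ == "__main__":
    for window in context_windows(32, context_size=16, overlap=4):
        print(window[0], "...", window[-1])
    print(add_reverse_frames(list(range(5))))
```

Overlapping windows trade extra computation for smoother transitions between windows; the ping-pong helper nearly doubles clip length without generating any new frames.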

These features make AnimateDiff a flexible tool for rapid prototyping, reducing the time from idea to animated output.

How to Use AnimateDiff

Getting started is straightforward, especially with the free online version at animatediff.org, which requires no installation.

Online Usage (No Setup Required)

- Visit animatediff.org.

- Enter your text prompt (e.g., "a cat jumping over a rainbow").

- Select a model and motion style if available.

- Click generate; the AI processes the prompt server-side and delivers a downloadable GIF or video.

- Ideal for beginners or quick tests, fully online without local resources.

Local Installation for Advanced Users

To unlock full potential:

- Install AUTOMATIC1111's Stable Diffusion WebUI.

- Go to Extensions > Install from URL, paste: https://github.com/continue-revolution/sd-webui-animatediff.

- Download motion modules (e.g., mm_sd_v15_v2.ckpt) and place them in the extensions/sd-webui-animatediff/model folder.

- Restart WebUI; AnimateDiff appears in txt2img/img2img tabs.

- Input prompt, enable AnimateDiff, set frames/FPS, and generate.
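One quick way to confirm the motion modules are where the extension expects them is to list the folder's contents. The helper below is a hypothetical convenience script, not part of the extension. It assumes motion modules use the .ckpt or .safetensors extensions and that the extension was cloned into extensions/sd-webui-animatediff (the repository's name) under the WebUI root; adjust the path to match your install.

```python
from pathlib import Path
from typing import List, Union

# Assumed file types for motion-module weights (e.g. mm_sd_v15_v2.ckpt).
MOTION_MODULE_SUFFIXES = {".ckpt", ".safetensors"}


def find_motion_modules(model_dir: Union[str, Path]) -> List[str]:
    """Return the sorted names of candidate motion-module files in model_dir.

    Returns an empty list if the folder does not exist.
    """
    folder = Path(model_dir)
    if not folder.is_dir():
        return []
    return sorted(
        entry.name
        for entry in folder.iterdir()
        if entry.is_file() and entry.suffix.lower() in MOTION_MODULE_SUFFIXES
    )


if __name__ == "__main__":
    # Assumed layout; change webui_root to your own installation path.
    webui_root = Path("stable-diffusion-webui")
    model_dir = webui_root / "extensions" / "sd-webui-animatediff" / "model"
    found = find_motion_modules(model_dir)
    if found:
        print("Motion modules found:", ", ".join(found))
    else:
        print(f"No motion modules found in {model_dir}")
```

If the list comes back empty, check the folder name and file extension before restarting the WebUI again.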

For Google Colab users, notebooks are available for cloud-based runs. Beyond basic setup, no coding expertise is needed; tutorials walk through dependencies such as Python and NVIDIA CUDA.

System Requirements

- GPU: Nvidia with 8GB+ VRAM (10GB+ for video-to-video); RTX 3060 or better recommended.

- OS: Windows/Linux primary; macOS via Docker.

- RAM/Storage: 16GB RAM, 1TB storage for models and outputs.

- Compatibility: Designed primarily for Stable Diffusion v1.5 checkpoints; support for other base models such as SDXL is more limited, so check GitHub for updates.

With these, generation times drop to minutes per clip, scaling with hardware power.

Potential Use Cases and Applications

AnimateDiff shines in scenarios demanding quick, AI-assisted animations for efficient visual storytelling.

Art and Animation

Artists can prototype sketches or storyboards from text, saving hours on manual drawing. For instance, visualize a character's walk cycle instantly, iterating faster in creative workflows.

Game Development

Rapidly generate asset animations for prototypes—e.g., enemy movements or UI transitions—accelerating pre-production without full animation teams.

Education and Visualization

Turn abstract concepts into engaging videos, like animating historical events or scientific processes, making learning interactive and memorable.

Social Media and Marketing

Create eye-catching posts or ads: describe a product reveal, and get a looping animation ready for Instagram or TikTok, boosting engagement with minimal effort.

Motion Graphics and Pre-Visualization

Produce dynamic intros for videos or preview complex scenes before committing to costly renders or film shoots, ideal for filmmakers or AR/VR developers.

In augmented reality, it animates characters with natural motions; in advertising, it crafts personalized promo clips from brand images.

Why Choose AnimateDiff?

Compared to traditional tools like Adobe After Effects, AnimateDiff automates much of the heavy lifting, making animation accessible without professional skills. Its motion priors, learned from real videos, help produce realistic movement, while prompts and add-ons such as Motion LoRA and ControlNet give users control over the result. Free and open-source, it's cost-effective for hobbyists and pros alike, with community-driven updates via GitHub. Though not suited to Hollywood-level complexity, it's a strong choice for ideation and short-form content.

For anyone looking for a text-to-video AI tool or a way to animate images with Stable Diffusion, AnimateDiff is a dependable option, backed by its diffusion model heritage and trained motion modules.

Who is AnimateDiff For?

- Creative Professionals: Artists, animators, and designers needing fast visualizations.

- Developers and Gamers: For prototyping interactive elements.

- Educators/Content Creators: Building explanatory or entertaining media.

- Marketers/Social Influencers: Quick, customizable animated assets.

- Hobbyists: Anyone curious about AI animation without deep technical barriers.

It's particularly suited for those familiar with Stable Diffusion, but the online demo lowers the entry point.

Limitations and Tips for Best Results

While powerful, AnimateDiff has constraints:

- Motion Scope: Best for simple, training-data-aligned movements; complex actions may need tuning.

- Artifacts: Larger or faster motions can introduce glitches; start with lower frame counts.

- Length/Coherence: Excels at short clips (under 50 frames); long videos risk inconsistency.

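- Model Limits: Most motion modules target SD v1.5, so other base models may be unsupported or only partially supported; watch the GitHub releases for broader compatibility.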

Tips: Use detailed prompts with action descriptors (e.g., "slowly rotating camera"), experiment with LoRAs for styles, and post-process in tools like Premiere for polish. As diffusion tech evolves, AnimateDiff's community continues refining these, promising even smoother outputs.

In summary, AnimateDiff changes how animations can be created, blending Stable Diffusion's image generation with learned motion prediction. For anyone exploring AI video generation, it's a must-try tool that turns ideas into motion with little manual effort.

Best Alternative Tools to "AnimateDiff"

BrainFever AI is a creative studio app that uses AI to generate images from text prompts and animate them. Available on iOS and Mac, it offers powerful image models, video animation, and a wide range of styles.

OpenArt is a free AI image and video generator with 100+ models & styles. Create art, edit images/videos, and train personalized AI models. Popular apps include text to image, image to video & more!

Anime Feet AI is an AI-powered platform that generates high-quality anime feet images and animations from text or existing images. Create stunning anime feet art in various styles easily.

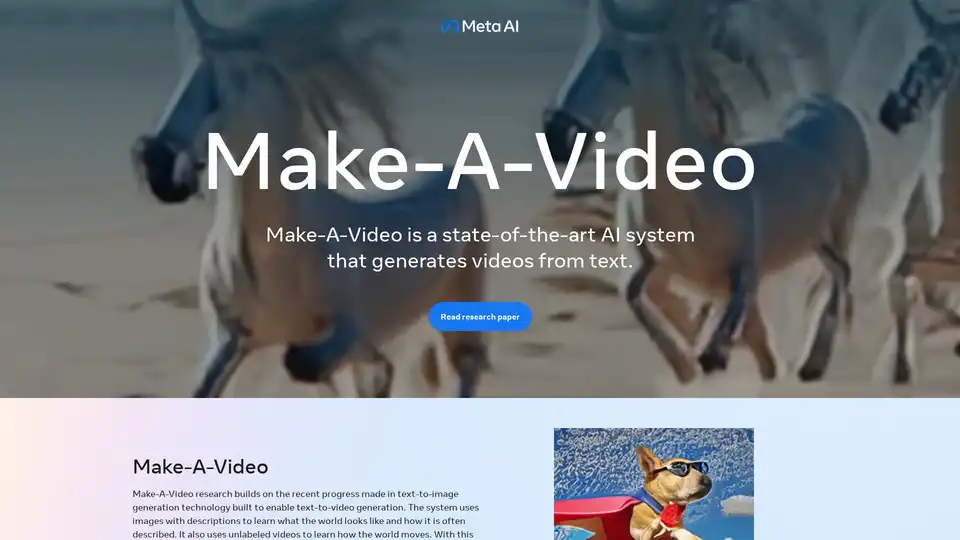

Make-A-Video is a state-of-the-art AI system by Meta AI that generates whimsical, one-of-a-kind videos from text. Bring your imagination to life with AI video generation!

Transform images into professional videos in seconds with Grok Video. AI-powered tool for easy video creation. Try it free today!

VisionFX is an all-in-one AI creative studio that generates images, videos, music, and voice content using advanced AI technology. Perfect for content creators, designers, and marketers.

Transform static images into dynamic videos with advanced AI technology. Quick 30-120 second conversion, high-quality output, and user-friendly interface for effortless video creation.

Create standout videos with Videoleap, your intuitive video editor and video maker. Explore premade templates, advanced features, and AI tools. Start today.

All-in-One AI Creator Tools: Your One-Stop AI Platform for Text, Image, Video, and Digital Human Creation. Transform ideas into stunning visuals quickly with advanced AI features.

Discover Veo3.bot, a free Google Veo 3 AI video generator with native audio. Create high-quality 1080p videos from text or images, featuring precise lip sync and realistic physics—no Gemini subscription needed.

Discover Wan 2.2 AI, a cutting-edge platform for text-to-video and image-to-video generation with cinema-grade controls, professional motion, and 720p resolution. Ideal for creators, marketers, and producers seeking high-quality AI video tools.

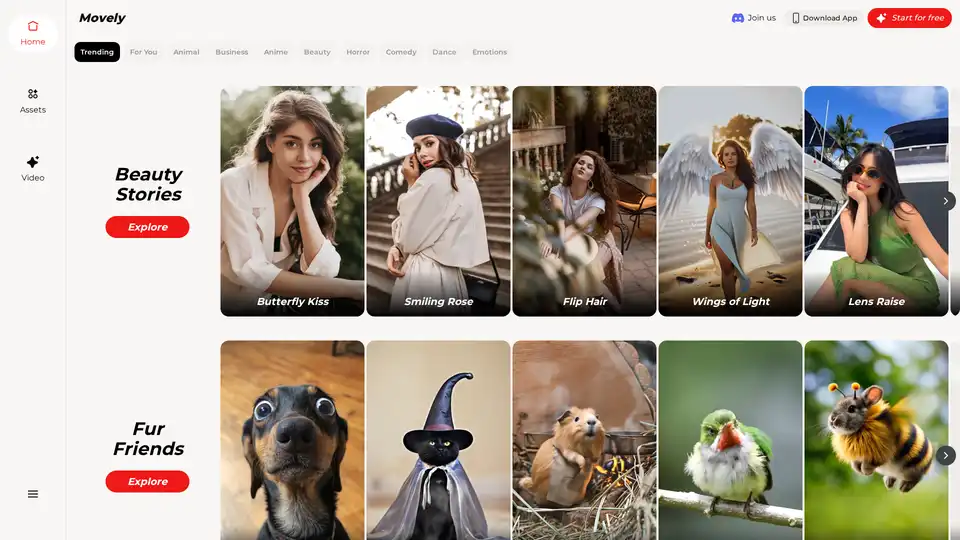

From static photos to dynamic videos in seconds! Movely uses advanced AI technology to transform your images into engaging content and edit photos with simple text commands.

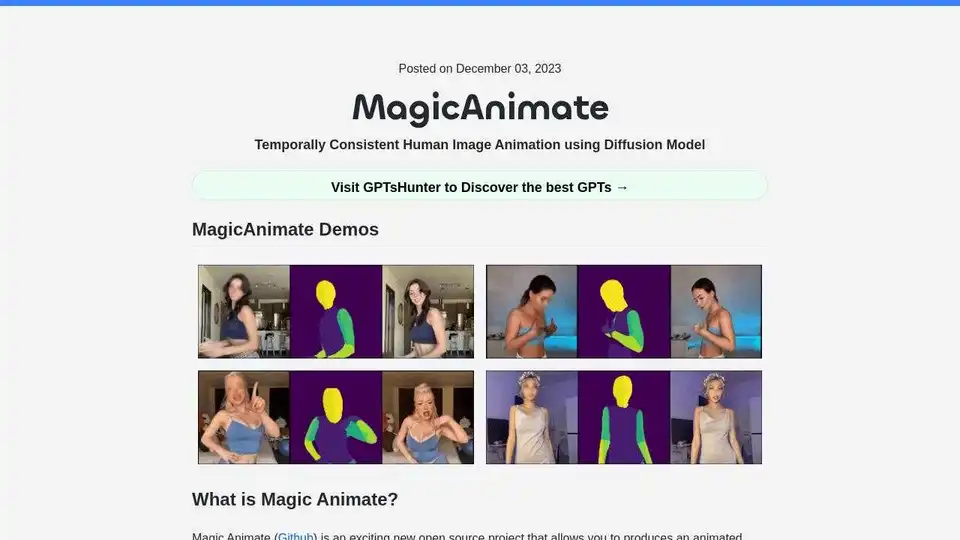

MagicAnimate is an open-source diffusion-based framework for creating temporally consistent human image animation from a single image and a motion video. Generate animated videos with enhanced fidelity.

Create professional-quality videos and images from text, photos, or videos with Magi-1.video. All-in-one AI Video Generator & Image Creator platform.