APIPark

Overview of APIPark

APIPark: The Open-Source LLM Gateway and API Developer Portal

What is APIPark?

APIPark is an open-source, all-in-one LLM gateway designed to streamline the management of Large Language Models (LLMs) in production environments. It enhances the safety, stability, and operational integrity of enterprise applications utilizing LLMs. It also provides a solution for building API portals, enabling businesses to securely share internal APIs with partners and streamline collaboration.

Key Features and Benefits

- Multi-LLM Management: Connect to 200+ LLMs and easily switch between them without modifying existing code using OpenAI's API signature.

- Cost Optimization: Optimize LLM costs with fine-grained, visual management of LLM usage.

- Load Balancer: Ensures seamless switching between LLMs by efficiently distributing requests across multiple LLM instances, enhancing system responsiveness and reliability.

- Traffic Control: Configure LLM traffic quotas for each tenant and prioritize specific LLMs to ensure optimal resource allocation.

- Real-time Monitoring: Provides dashboards for real-time insights into LLM interactions, helping you understand and optimize performance.

- Semantic Caching: Reduces latency from upstream LLM calls, improves response times, and reduces LLM resource usage. (Coming Soon)

- Flexible Prompt Management: Offers flexible templates for easy management and modification of prompts, unlike traditional methods that hardcode prompts.

- API Conversion: Quickly combine AI models and prompts into new APIs and share them with collaborating developers for immediate use.

- Data Masking: Protects against LLM attacks, abuse, and leaks of sensitive internal data by masking sensitive content and applying related safeguards.

- API Open Portal: Streamlined solution for building API portals, enabling businesses to securely share internal APIs with partners and streamline collaboration.

- API Billing: Efficiently track each user’s API usage and drive API monetization for your business.

- Access Control: Offers end-to-end API access management, ensuring your APIs are shared and used in compliance with enterprise policies.

- AI Agent Integration: Expands the scenarios for AI applications by integrating with AI Agents.
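The prompt-template and data-masking features above can be pictured with a small sketch. This is not APIPark's implementation: the template text, the two regular expressions, and the names `SUMMARY_PROMPT` and `mask_sensitive` are all hypothetical, chosen only to show the idea of keeping prompts out of application code and masking sensitive values before a prompt leaves your network.

```python
import re
from string import Template

# Hypothetical prompt template: the wording lives in one place and is
# filled in at request time instead of being hardcoded at each call site.
SUMMARY_PROMPT = Template("Summarize this $doc_type in $max_words words:\n$body")

# Hypothetical masking patterns for illustration only; a real deployment
# would rely on the gateway's own masking rules.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
LONG_DIGITS_RE = re.compile(r"\b\d{12,19}\b")  # card-like digit runs


def mask_sensitive(text: str) -> str:
    """Replace email addresses and long digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return LONG_DIGITS_RE.sub("[NUMBER]", text)


body = "Contact jane.doe@example.com about card 4111111111111111."
rendered = SUMMARY_PROMPT.substitute(doc_type="email", max_words=50, body=body)
masked_prompt = mask_sensitive(rendered)
print(masked_prompt)
```

Masking after rendering, as done here, means values injected through template variables are covered as well as the template text itself.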

How does APIPark Work?

APIPark provides a unified API signature (using OpenAI's API signature) that allows you to connect multiple LLMs simultaneously without any code modification. It also provides load balancing solutions to optimize the distribution of requests across multiple LLM instances.
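As a rough illustration of the two ideas in this paragraph, the sketch below builds an OpenAI-style chat completions request and shows a simple round-robin picker. The gateway address, API key, model names, instance names, and the `RoundRobinBalancer` class are assumptions made for the example, not values or algorithms taken from APIPark's documentation; only the `/chat/completions` path and the `model`/`messages` body follow OpenAI's published format.

```python
import itertools
import json
import urllib.request

# Assumed values for illustration only: replace with your own gateway
# address and credential.
GATEWAY_BASE_URL = "http://localhost:8080/v1"
API_KEY = "YOUR_API_KEY"


def build_chat_request(model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


class RoundRobinBalancer:
    """Hypothetical round-robin picker over upstream LLM instances."""

    def __init__(self, instances):
        instances = list(instances)
        if not instances:
            raise ValueError("at least one instance is required")
        self._cycle = itertools.cycle(instances)

    def next_instance(self) -> str:
        return next(self._cycle)


# Switching models changes only the "model" field (names are placeholders).
req_a = build_chat_request("model-a", "Hello")
req_b = build_chat_request("model-b", "Hello")

# A gateway could spread requests across instances in a similar way.
balancer = RoundRobinBalancer(["llm-1", "llm-2", "llm-3"])
picks = [balancer.next_instance() for _ in range(4)]
```

To actually send a request, pass it to `urllib.request.urlopen` while a gateway is running at the address you configured. Round-robin is only one possible strategy; the source does not say which one APIPark uses.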

How to Use APIPark

Deploy your LLM Gateway & Developer Portal in 5 minutes with a single command:

curl -sSO https://download.apipark.com/install/quick-start.sh; bash quick-start.sh

Why is APIPark Important?

APIPark addresses the challenges of managing and optimizing LLMs in production environments. It provides a secure, stable, and cost-effective solution for enterprises looking to leverage the power of LLMs.

Who is APIPark For?

APIPark is designed for:

- Enterprises using LLMs in production.

- Developers building AI-powered applications.

- Businesses looking to share internal APIs with partners.

APIPark vs. Other Solutions

APIPark delivers superior performance compared to Kong and Nginx, handling high concurrency and large requests with low latency and high throughput. It also offers a developer-centric design with simple APIs, clear documentation, and a flexible plugin architecture. Seamless integration with existing tech stacks and strong security features make APIPark a compelling choice.

F.A.Q

- What is an LLM / AI gateway?

- What problems does APIPark solve?

- Why should I use APIPark to deploy LLMs?

Best Alternative Tools to "APIPark"

Sagify is an open-source Python tool that streamlines machine learning pipelines on AWS SageMaker, offering a unified LLM Gateway for seamless integration of proprietary and open-source large language models to boost productivity.

LiteLLM is an LLM Gateway that simplifies model access, spend tracking, and fallbacks across 100+ LLMs, all in the OpenAI format.

LM Studio is a user-friendly desktop application for downloading and running open-source large language models (LLMs) like LLaMA and Gemma locally on your computer. It features an in-app chat UI and an OpenAI-compatible server for offline AI model interaction, making advanced AI accessible without programming skills.

AI Library is a comprehensive catalog of over 2150 neural networks and AI tools for generative content creation. It covers top AI art models and tools for text-to-image, video generation, and more.

Dialoq AI is a unified API platform that allows developers to access and run 200+ AI models with ease, reducing development time and costs. It offers features like caching, load balancing, and automatic fallbacks for reliable AI app development.

Portkey equips AI teams with a production stack: Gateway, Observability, Guardrails, Governance, and Prompt Management in one platform.

UsageGuard provides a unified AI platform for secure access to LLMs from OpenAI, Anthropic, and more, featuring built-in safeguards, cost optimization, real-time monitoring, and enterprise-grade security to streamline AI development.

NextReady is a ready-to-use Next.js template with Prisma, TypeScript, and shadcn/ui, designed to help developers build web applications faster. It includes authentication, payments, and an admin panel.

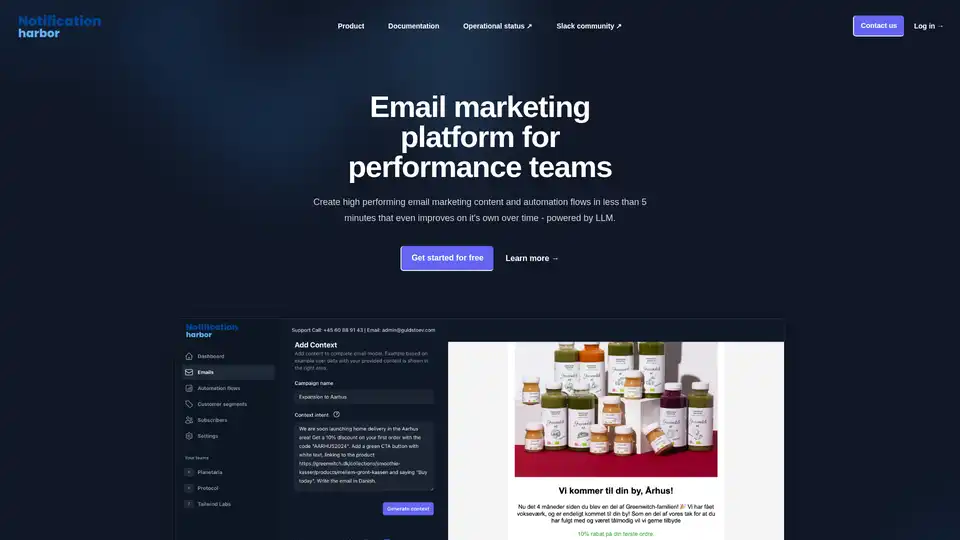

Notification Harbor is an AI-powered email marketing platform that uses LLMs to quickly create high-performing email content and automation flows. It offers AI-generated templates and real-time personalization to optimize email campaigns.

PromptMage is a Python framework that simplifies LLM application development. It offers prompt testing, version control, and an auto-generated API for easy integration and deployment.

Alan AI is an Adaptive App AI platform that enables self-coding intelligence for enterprise applications. Deliver features on demand with a self-coding system, reducing developer effort and transforming user experiences.

xMem supercharges LLM apps with hybrid memory, combining long-term knowledge and real-time context for smarter AI.

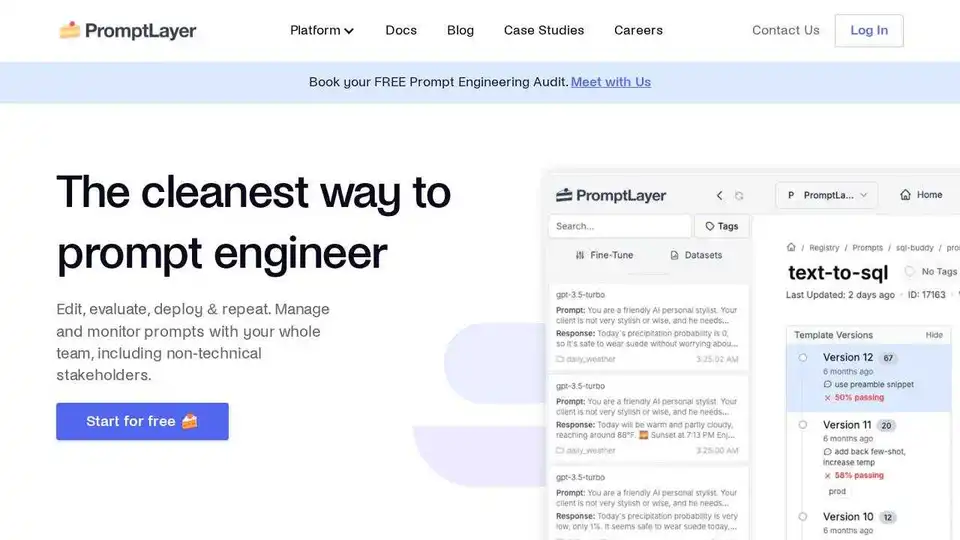

PromptLayer is an AI engineering platform for prompt management, evaluation, and LLM observability. Collaborate with experts, monitor AI agents, and improve prompt quality with powerful tools.