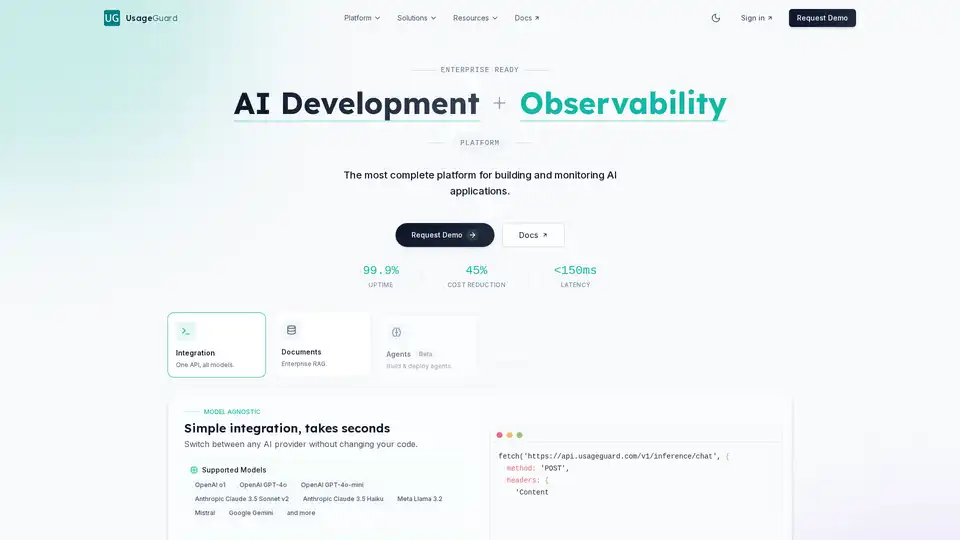

UsageGuard

Overview of UsageGuard

What is UsageGuard?

UsageGuard is an enterprise AI platform for building, deploying, and monitoring AI applications. It serves as a unified gateway to large language models (LLMs) from providers such as OpenAI, Anthropic, Meta, and Google, and adds security safeguards, cost management, and real-time observability on top. Instead of stitching together separate tools, teams get AI development, governance, and analytics in one interface. This makes it a fit for organizations scaling AI initiatives without compromising on performance or compliance.

At its core, UsageGuard addresses common pain points in AI adoption: the complexity of integrating multiple models, the risks of insecure deployments, escalating costs from unchecked usage, and a lack of visibility into AI operations. By acting as an intermediary layer between your applications and LLM providers, it aims to make AI usage safe, efficient, and cost-effective. Whether you're developing web apps, mobile solutions, or APIs, its model-agnostic design lets you switch between models such as GPT-4o, Claude 3.5 Sonnet, or Llama 3.2 without rewriting code.
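
To make the model-agnostic idea concrete, here is a minimal Python sketch of how one request shape can target different models by changing only the model name. The routing table, the model identifier strings, and the `build_chat_payload` and `provider_for` helpers are hypothetical illustrations, not UsageGuard's documented API.

```python
# Hypothetical routing table: model identifier -> upstream provider.
# The identifier strings are illustrative, not UsageGuard's documented names.
MODEL_PROVIDERS = {
    "gpt-4o": "openai",
    "claude-3-5-sonnet": "anthropic",
    "llama-3.2": "meta",
}


def build_chat_payload(model: str, user_text: str) -> dict:
    """Build one provider-neutral payload; only the `model` field varies."""
    if model not in MODEL_PROVIDERS:
        raise ValueError(f"unknown model: {model}")
    return {"model": model, "messages": [{"role": "user", "content": user_text}]}


def provider_for(model: str) -> str:
    """Return the provider a gateway would forward this model's requests to."""
    return MODEL_PROVIDERS[model]


# Switching providers is a one-string change in the calling code.
for name in ("gpt-4o", "claude-3-5-sonnet", "llama-3.2"):
    payload = build_chat_payload(name, "Summarize this contract.")
    print(provider_for(name), payload["model"])
```

The application code never branches on the provider; the gateway owns the mapping from model name to upstream API.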

How Does UsageGuard Work?

Getting started with UsageGuard requires minimal setup, often just a few minutes of integration work with your existing infrastructure. The platform acts as a proxy for AI API calls: your application sends requests to UsageGuard's unified endpoint (e.g., https://api.usageguard.com/v1/inference/chat), which routes each request to the chosen LLM, applies security policies, monitors the interaction, and returns the response. UsageGuard states that responses come back with low latency (typically under 150ms), with the proxy adding only 50-100ms of overhead.
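
A minimal sketch of such a call, using only Python's standard library. The endpoint URL is the one given in the text; the header names (`Authorization` with a bearer token and a hypothetical `x-connection-id` header) and the OpenAI-style JSON body are assumptions for illustration, so consult the UsageGuard quickstart for the actual request format. The request is built but not sent.

```python
import json
import urllib.request

# Unified endpoint named in the text.
USAGEGUARD_CHAT_URL = "https://api.usageguard.com/v1/inference/chat"


def build_usageguard_request(api_key: str, connection_id: str, model: str,
                             prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request routed through the gateway.

    ASSUMPTION: the header names and body shape are illustrative,
    not taken from UsageGuard's documentation.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        "x-connection-id": connection_id,      # hypothetical header name
        "Content-Type": "application/json",
    }
    return urllib.request.Request(
        USAGEGUARD_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


req = build_usageguard_request("sk-demo", "conn-123", "gpt-4o", "Hello!")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would actually send it; production code would also handle HTTP errors and, for streamed responses, read the body incrementally.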

Here's a step-by-step breakdown of its workflow:

- Integration Phase: Point your API endpoint at UsageGuard and add your API key and connection ID. A single unified API covers all supported models and provides real-time streaming, session management for stateful conversations, and request monitoring for full visibility.

- Request Processing: As requests flow through, UsageGuard sanitizes inputs to prevent prompt injection attacks, filters content for moderation, and protects personally identifiable information (PII). It also tracks usage patterns to enforce budgets and limits.

- Response and Monitoring: Responses are streamed back rapidly, while backend analytics capture metrics like latency, token usage, and error rates. This data feeds into dashboards for real-time insights, helping developers debug issues or optimize performance.

- Governance Layer: Security and compliance controls, backed by SOC2 Type II certification and GDPR compliance, provide enterprise-grade protection, and custom policies can be set per project, team, or environment (dev, staging, production).
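
The Response and Monitoring step records per-request metrics such as latency, token usage, and error rates. The following Python sketch shows one plausible way to aggregate such records into dashboard-style figures, including gateway overhead computed as total latency minus time spent at the provider. The `RequestRecord` fields, the `summarize` helper, and the sample values are hypothetical; UsageGuard's own telemetry schema may differ.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestRecord:
    """One logged request (hypothetical schema)."""
    model: str
    latency_ms: float     # end-to-end time observed by the client
    upstream_ms: float    # time spent waiting on the LLM provider
    prompt_tokens: int
    completion_tokens: int
    ok: bool


def summarize(records: list[RequestRecord]) -> dict:
    """Aggregate records into the kind of figures a monitoring dashboard shows."""
    if not records:
        return {"requests": 0}
    total_tokens = sum(r.prompt_tokens + r.completion_tokens for r in records)
    errors = sum(1 for r in records if not r.ok)
    return {
        "requests": len(records),
        "avg_latency_ms": mean(r.latency_ms for r in records),
        # Gateway overhead = total latency minus time spent at the provider.
        "avg_overhead_ms": mean(r.latency_ms - r.upstream_ms for r in records),
        "total_tokens": total_tokens,
        "error_rate": errors / len(records),
    }


# Illustrative sample data, not real measurements.
log = [
    RequestRecord("gpt-4o", 900.0, 820.0, 120, 300, True),
    RequestRecord("gpt-4o", 1100.0, 1030.0, 80, 250, True),
    RequestRecord("claude-3-5-sonnet", 400.0, 340.0, 60, 0, False),
]
print(summarize(log))
```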

This intermediary model not only simplifies multi-provider usage but also isolates your data with end-to-end encryption and minimal retention practices, preventing unauthorized access.

Key Features of UsageGuard

UsageGuard takes an all-in-one approach that covers every stage of the AI application lifecycle. Its primary features are:

AI Development Tools

- Unified Inference: Access over a dozen models through one API, including OpenAI's o1 and GPT-4o-mini, Anthropic's Claude variants, Meta's Llama 3.2, Mistral, and Google Gemini. Switch providers effortlessly for the best fit per task.

- Enterprise RAG (Retrieval-Augmented Generation): Process documents intelligently, enhancing responses with your proprietary data without exposing it to external providers.

- Agents (Beta): Build and deploy autonomous AI agents for complex workflows, like multi-step reasoning or tool integration.
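
Retrieval-augmented generation works by fetching the passages most relevant to a question and placing them in the prompt before the model is called. The sketch below illustrates the idea with simple word-overlap scoring in pure Python. It is a toy stand-in for the embedding-based retrieval that production RAG systems typically use, and the `retrieve` and `build_rag_prompt` helpers are hypothetical; nothing here describes how UsageGuard implements its RAG feature.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    scored = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return scored[:k]


def build_rag_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Prepend retrieved context so the model answers from your own data."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = [
    "Refunds are processed within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Return requests must include the original order number.",
]
print(build_rag_prompt("How long do refunds take after a return?", docs))
```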

Observability and Analytics

- Real-Time Monitoring: Track performance metrics, usage patterns, and system health through logging, tracing, and metrics dashboards that support proactive debugging. The platform itself advertises 99.9% uptime.

- Session Management: Maintain context in conversations, ideal for chatbots or interactive apps.
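
Session management amounts to keeping each conversation's message history and resending it (or a trimmed window of it) with every new turn. A minimal in-memory Python sketch follows; the `SessionStore` class, its `max_messages` window, and the message format are hypothetical, and a hosted service would typically persist sessions in durable storage rather than in a local dictionary.

```python
from collections import defaultdict


class SessionStore:
    """Keep per-session chat history, trimmed to a fixed window (hypothetical design)."""

    def __init__(self, max_messages: int = 6):
        self.max_messages = max_messages
        self._history: dict[str, list[dict]] = defaultdict(list)

    def add(self, session_id: str, role: str, content: str) -> None:
        """Append a message and drop the oldest ones beyond the window."""
        history = self._history[session_id]
        history.append({"role": role, "content": content})
        if len(history) > self.max_messages:
            del history[: len(history) - self.max_messages]

    def context(self, session_id: str) -> list[dict]:
        """Messages to send with the next request for this session."""
        return list(self._history[session_id])


store = SessionStore(max_messages=4)
store.add("user-42", "user", "My name is Ada.")
store.add("user-42", "assistant", "Nice to meet you, Ada.")
store.add("user-42", "user", "What is my name?")
# Earlier turns travel with the new question, giving the model the context it needs.
print(store.context("user-42"))
```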

Security and Governance

- Built-in Safeguards: Content filtering, PII protection, and prompt sanitization mitigate risks like injection attacks or harmful outputs.

- Compliance Tools: SOC2 Type II certified, GDPR compliant, with options for data isolation and custom policies.
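
Content filtering and PII protection are commonly implemented as checks that run on a prompt before it is forwarded to a model. The Python sketch below masks email addresses and US-style phone numbers with regular expressions and rejects prompts containing a few well-known injection phrases. The patterns, the phrase list, and the helper names are illustrative assumptions, far less thorough than a production filter, and are not UsageGuard's actual rules.

```python
import re

# Illustrative patterns only; real PII detection covers many more formats.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")

# A tiny, hypothetical deny-list of common prompt-injection phrasings.
INJECTION_MARKERS = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "reveal your system prompt",
)


def redact_pii(text: str) -> str:
    """Replace detected emails and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def looks_like_injection(text: str) -> bool:
    """Flag prompts containing known injection phrases (case-insensitive)."""
    lowered = text.lower()
    return any(marker in lowered for marker in INJECTION_MARKERS)


def sanitize_prompt(text: str) -> str:
    """Reject suspected injections, otherwise return the redacted prompt."""
    if looks_like_injection(text):
        raise ValueError("prompt rejected by injection filter")
    return redact_pii(text)


print(sanitize_prompt("Email jane.doe@example.com or call 555-123-4567."))
```

Phrase matching like this is easy to evade, which is why dedicated guardrail layers usually combine several detection techniques.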

Cost Control and Optimization

- Usage Tracking: Monitor token consumption, set budgets, and receive alerts to avoid overruns. Users report cost reductions of up to 45%.

- Automated Management: Enforce limits per connection, optimizing spend across projects.
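
Budget enforcement can be thought of as a running counter per connection that is checked before each request and updated after it. The sketch below keeps one tracker per connection, records token spend against a limit, and raises an alert the first time spend crosses a threshold. The `BudgetTracker` class, the per-token price, and the 80% alert threshold are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BudgetTracker:
    """Per-connection spend limit with a soft alert threshold (hypothetical design)."""
    limit_usd: float
    alert_fraction: float = 0.8
    spent_usd: float = 0.0
    alerts: list[str] = field(default_factory=list)

    def allow(self, estimated_cost_usd: float) -> bool:
        """Check, before calling the model, whether the request fits the budget."""
        return self.spent_usd + estimated_cost_usd <= self.limit_usd

    def record(self, tokens: int, usd_per_1k_tokens: float) -> float:
        """Add a completed request's cost; alert the first time spend passes the threshold."""
        cost = tokens / 1000 * usd_per_1k_tokens
        self.spent_usd += cost
        if self.spent_usd >= self.alert_fraction * self.limit_usd and not self.alerts:
            self.alerts.append(f"spend at {self.spent_usd:.2f} of {self.limit_usd:.2f} USD")
        return cost


# Hypothetical connection: $10 budget, illustrative price of $0.01 per 1K tokens.
budget = BudgetTracker(limit_usd=10.0)
for _ in range(9):
    budget.record(tokens=100_000, usd_per_1k_tokens=0.01)  # $1.00 per request
print(budget.spent_usd, budget.alerts, budget.allow(2.0))
```

In a gateway, a check like `allow` would sit in the request path so that over-budget calls are blocked rather than merely reported after the fact.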

Deployment Flexibility

- Private Cloud and On-Premise: Host on your AWS infrastructure (US, Europe, Middle East regions) for full control and air-gapped security.

- Global Availability: Low-latency access worldwide, ensuring reliability and data residency compliance.

Compared with alternatives such as Langfuse, OpenAI's native tools, or AWS Bedrock, UsageGuard's own feature comparison highlights its strengths in multi-LLM support, comprehensive observability, and integrated spend management.

Use Cases and Practical Value

UsageGuard is particularly valuable for enterprises building production-grade AI applications. For example:

- Collaborative AI Platforms: Teams at companies like Spanat use it to create trusted, secure environments for shared AI tools, saving months on custom development for monitoring and compliance.

- Scaling Enterprise Software: Leaders at CorporateStack integrate it into ERP systems to expand AI features while controlling costs and performance, which is essential for high-volume operations.

- R&D and Prototyping: Developers can experiment with multiple models quickly, using observability to iterate faster without security worries.

In terms of practical value, it reduces integration time from weeks to minutes, cuts costs through intelligent tracking (e.g., avoiding unnecessary high-end model calls), and enhances reliability with 99.9% uptime and <150ms latency. For businesses facing AI governance challenges, it provides peace of mind via customizable policies and dedicated 24/7 support with SLAs.

Who is UsageGuard For?

This platform targets mid-to-large enterprises and development teams serious about AI:

- Engineering Leads: Needing secure, scalable AI infrastructure without vendor lock-in.

- DevOps and Security Pros: Focused on compliance, PII protection, and cost governance.

- Product Managers: Building customer-facing apps like chatbots, analytics tools, or document processors.

- Startups Scaling Fast: Wanting enterprise features without the overhead.

It's not ideal for hobbyists due to its enterprise focus, but any organization deploying AI at scale will benefit from its robust toolkit.

Why Choose UsageGuard?

In a crowded AI landscape, UsageGuard differentiates itself by being truly model-agnostic and feature-complete. Testimonials underscore its impact: "UsageGuard's security features were crucial in helping us build a collaborative AI platform that our enterprise customers could trust," notes Eden Köhler, Head of Engineering at Spanat. The platform's minimal code changes, global deployment options, and proactive cost tools make it a strategic investment for long-term AI success.

For implementation details, check the quickstart guide in the docs or request a demo. With continuous expansions to supported models and features, UsageGuard evolves with the AI ecosystem, ensuring your applications stay ahead.

Frequently Asked Questions

How does UsageGuard ensure data privacy?

UsageGuard employs data isolation, end-to-end encryption, and customizable retention to safeguard information, never sharing it with third parties.

Does it support custom LLMs?

Yes, alongside major providers, it accommodates custom models for tailored integrations.

What if I encounter issues?

Access troubleshooting guides, the status page, or 24/7 support for quick resolutions.

By leveraging UsageGuard, businesses can transform AI from a risky experiment into a reliable driver of innovation, all while maintaining control over security, costs, and performance.

Best Alternative Tools to "UsageGuard"

Cloudflare Workers AI allows you to run serverless AI inference tasks on pre-trained machine learning models across Cloudflare's global network, offering a variety of models and seamless integration with other Cloudflare services.

Dialoq AI is a unified API platform that allows developers to access and run 200+ AI models with ease, reducing development time and costs. It offers features like caching, load balancing, and automatic fallbacks for reliable AI app development.

MultiChat AI lets you chat with top LLMs such as GPT-4, Claude 3, and Gemini 1.5 Pro in one place. It also offers AI image generation and editing tools.

AI Library is a catalog of over 2150 neural networks and AI tools for generative content creation, including top AI art models and tools for text-to-image and video generation.

Xander is an open-source desktop platform that enables no-code AI model training. Describe tasks in natural language for automated pipelines in text classification, image analysis, and LLM fine-tuning, ensuring privacy and performance on your local machine.

xTuring is an open-source library that empowers users to customize and fine-tune Large Language Models (LLMs) efficiently, focusing on simplicity, resource optimization, and flexibility for AI personalization.

Sagify is an open-source Python tool that streamlines machine learning pipelines on AWS SageMaker, offering a unified LLM Gateway for seamless integration of proprietary and open-source large language models to boost productivity.


Qwen AI offers advanced AI for text, code generation, image recognition, and more, with a free trial that requires no credit card.

APIPark is an open-source LLM gateway and API developer portal for managing LLMs in production, ensuring stability and security. Optimize LLM costs and build your own API portal.

Latitude is an open-source platform for prompt engineering, enabling domain experts to collaborate with engineers to deliver production-grade LLM features. Build, evaluate, and deploy AI products with confidence.

Portkey equips AI teams with a production stack: Gateway, Observability, Guardrails, Governance, and Prompt Management in one platform.

Helicone AI Gateway provides routing and monitoring for reliable AI apps as part of an LLMOps platform aimed at fast-growing AI companies.

LiteLLM is an LLM Gateway that simplifies model access, spend tracking, and fallbacks across 100+ LLMs, all in the OpenAI format.