Pydantic AI

Overview of Pydantic AI

Pydantic AI: The GenAI Agent Framework

Pydantic AI is a Python agent framework crafted to facilitate the rapid, confident, and seamless construction of production-grade applications and workflows leveraging Generative AI. It aims to bring the ergonomic design of FastAPI to GenAI application and agent development.

What is Pydantic AI?

Pydantic AI is a tool designed to help developers build applications with Generative AI quickly and efficiently. It leverages Pydantic Validation and modern Python features to provide a smooth development experience.

Key Features:

- Built by the Pydantic Team: Direct access to the source of Pydantic Validation, which is used in many popular AI SDKs.

- Model-Agnostic: Supports a wide range of models and providers, including OpenAI, Anthropic, Gemini, and more. Custom models can also be easily implemented.

- Seamless Observability: Integrates with Pydantic Logfire for real-time debugging, performance monitoring, and cost tracking. Also supports other OpenTelemetry-compatible platforms.

- Fully Type-safe: Designed to provide comprehensive context for auto-completion and type checking, reducing runtime errors.

- Powerful Evals: Enables systematic testing and evaluation of agent performance and accuracy.

- MCP, A2A, and AG-UI: Integrates standards for agent access to external tools and data, interoperability with other agents, and building interactive applications.

- Human-in-the-Loop Tool Approval: Allows flagging tool calls that require approval before execution.

- Durable Execution: Enables building agents that can maintain progress across API failures and application restarts.

- Streamed Outputs: Provides continuous streaming of structured output with immediate validation.

- Graph Support: Offers a powerful way to define graphs using type hints for complex applications.

How does Pydantic AI work?

Pydantic AI works by providing a framework that simplifies the development of AI agents. It uses Pydantic models to define the structure of the agent's inputs and outputs, and it provides tools for connecting the agent to various LLMs and other services.
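To make the role of Pydantic models concrete, here is a minimal sketch that uses only Pydantic Validation, not the agent framework itself. The `Answer` model, its fields, and the two JSON strings are hypothetical; the strings stand in for text a model might return.

```python
from pydantic import BaseModel, Field, ValidationError


class Answer(BaseModel):
    """Hypothetical output schema that a model response must satisfy."""

    text: str = Field(description='The answer shown to the user')
    confidence: float = Field(description='Confidence score', ge=0, le=1)


# Made-up raw responses standing in for LLM output.
good_json = '{"text": "Paris", "confidence": 0.9}'
bad_json = '{"text": "Paris", "confidence": 7}'

# A well-formed response is parsed into a typed object.
parsed = Answer.model_validate_json(good_json)

# A response that breaks a constraint is rejected with a ValidationError.
try:
    Answer.model_validate_json(bad_json)
    rejected = False
except ValidationError as exc:
    rejected = True
    failed_fields = [err['loc'][0] for err in exc.errors()]

print(parsed.text, rejected, failed_fields)
```

Declaring the schema once gives both a typed object for downstream code and a clear error when a response does not fit it.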

How to use Pydantic AI?

- Installation: Install the `pydantic_ai` package.

- Basic Usage: Create an `Agent` instance with a specified model and instructions.

```python
from pydantic_ai import Agent

agent = Agent(
    'anthropic:claude-sonnet-4-0',
    instructions='Be concise, reply with one sentence.',
)

result = agent.run_sync('Where does "hello world" come from?')
print(result.output)
"""
The first known use of "hello, world" was in a 1974 textbook about the C programming language.
"""
```

- Advanced Usage: Add tools, dynamic instructions, and structured outputs to build more powerful agents.

Who is Pydantic AI for?

Pydantic AI is ideal for:

- Developers looking to build GenAI applications and workflows efficiently.

- Teams requiring robust, type-safe, and observable AI agents.

- Projects that need to integrate with various LLMs and services.

Tools & Dependency Injection Example

Pydantic AI supports dependency injection, which is useful when you need to pass data or services into your tools and instructions. You define a dependency class and pass it to the agent. The sample code below builds a support agent for a bank with Pydantic AI:

```python
from dataclasses import dataclass

from pydantic import BaseModel, Field
from pydantic_ai import Agent, RunContext

from bank_database import DatabaseConn


# Dependencies injected into instructions and tools at run time.
@dataclass
class SupportDependencies:
    customer_id: int
    db: DatabaseConn


# Structured output the agent must return; validated by Pydantic.
class SupportOutput(BaseModel):
    support_advice: str = Field(description='Advice returned to the customer')
    block_card: bool = Field(description="Whether to block the customer's card")
    risk: int = Field(description='Risk level of query', ge=0, le=10)


support_agent = Agent(
    'openai:gpt-5',
    deps_type=SupportDependencies,
    output_type=SupportOutput,
    instructions=(
        'You are a support agent in our bank, give the '
        'customer support and judge the risk level of their query.'
    ),
)


# Dynamic instructions: looks up the customer's name through the injected db.
@support_agent.instructions
async def add_customer_name(ctx: RunContext[SupportDependencies]) -> str:
    customer_name = await ctx.deps.db.customer_name(id=ctx.deps.customer_id)
    return f"The customer's name is {customer_name!r}"


# Tool the model can call; the docstring serves as the tool description.
@support_agent.tool
async def customer_balance(
    ctx: RunContext[SupportDependencies], include_pending: bool
) -> float:
    """Returns the customer's current account balance."""
    return await ctx.deps.db.customer_balance(
        id=ctx.deps.customer_id,
        include_pending=include_pending,
    )
```
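The sample imports `DatabaseConn` from a `bank_database` module that is not shown here. To experiment locally, a hypothetical in-memory stand-in might look like the sketch below. The method names and keyword arguments mirror the calls made in the sample; the stored data and the error handling are assumptions for illustration only.

```python
from __future__ import annotations

import asyncio
from dataclasses import dataclass, field


@dataclass
class DatabaseConn:
    """Hypothetical in-memory stand-in for bank_database.DatabaseConn."""

    # Made-up sample data: customer id -> (name, settled balance, pending amount)
    customers: dict[int, tuple[str, float, float]] = field(
        default_factory=lambda: {123: ('John', 100.0, 25.5)}
    )

    async def customer_name(self, *, id: int) -> str | None:
        # Return None for unknown customers instead of raising.
        record = self.customers.get(id)
        return record[0] if record else None

    async def customer_balance(self, *, id: int, include_pending: bool) -> float:
        if id not in self.customers:
            raise ValueError(f'Unknown customer id: {id}')
        _, settled, pending = self.customers[id]
        return settled + pending if include_pending else settled


async def demo() -> tuple[str | None, float, float]:
    db = DatabaseConn()
    name = await db.customer_name(id=123)
    settled = await db.customer_balance(id=123, include_pending=False)
    total = await db.customer_balance(id=123, include_pending=True)
    return name, settled, total


name, settled, total = asyncio.run(demo())
print(name, settled, total)
```

With a stand-in like this, the dependencies can be built as `SupportDependencies(customer_id=123, db=DatabaseConn())` and passed to the agent through the `deps` argument of `support_agent.run(...)`.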

Instrumentation with Pydantic Logfire

To monitor agents in action, Pydantic AI integrates with Pydantic Logfire, an OpenTelemetry observability platform that enables real-time debugging, evals-based performance monitoring, and behavior, tracing, and cost tracking. The sample code:

```python
...
from pydantic_ai import Agent, RunContext

from bank_database import DatabaseConn

import logfire

logfire.configure()  # set up the Logfire SDK
logfire.instrument_pydantic_ai()  # trace agent runs
logfire.instrument_asyncpg()  # trace asyncpg database queries

...

support_agent = Agent(
    'openai:gpt-4o',
    deps_type=SupportDependencies,
    output_type=SupportOutput,
    system_prompt=(
        'You are a support agent in our bank, give the '
        'customer support and judge the risk level of their query.'
    ),
)
```

Why Choose Pydantic AI?

Pydantic AI offers a streamlined approach to building GenAI applications. Its foundation on Pydantic Validation, model-agnostic design, seamless observability, and type-safe environment makes it a compelling choice for developers aiming for efficiency and reliability.

Next Steps

To explore Pydantic AI further:

- Install Pydantic AI and follow the examples.

- Refer to the documentation for a deeper understanding.

- Check the API Reference to understand Pydantic AI's interface.

- Join the Slack channel or file an issue on GitHub for any questions.

Best Alternative Tools to "Pydantic AI"

Lunary is an open-source LLM engineering platform providing observability, prompt management, and analytics for building reliable AI applications. It offers tools for debugging, tracking performance, and ensuring data security.

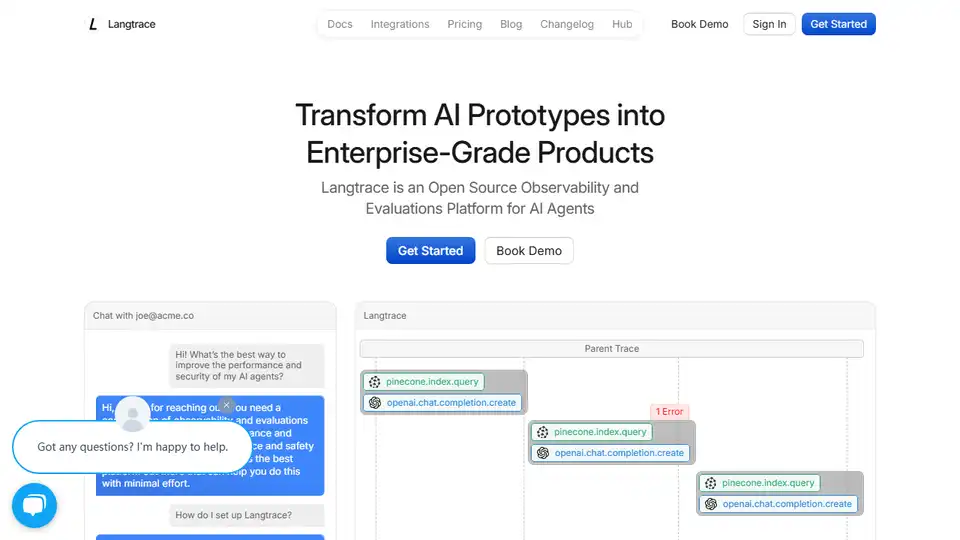

Langtrace is an open-source observability and evaluations platform designed to improve the performance and security of AI agents. Track vital metrics, evaluate performance, and ensure enterprise-grade security for your LLM applications.

Rierino is a powerful low-code platform accelerating ecommerce and digital transformation with AI agents, composable commerce, and seamless integrations for scalable innovation.

Ask On Data is an open-source, GenAI-powered chat based ETL tool for data engineering. Simplify data migration, cleaning, and analysis with an intuitive chat interface.

Discover top prompt engineering jobs on our niche job board. Find AI prompt engineer roles, remote AI jobs, and machine learning opportunities to advance your AI career.

Firecrawl is the leading web crawling, scraping, and search API designed for AI applications. It turns websites into clean, structured, LLM-ready data at scale, powering AI agents with reliable web extraction without proxies or headaches.

Denvr Dataworks provides high-performance AI compute services, including on-demand GPU cloud, AI inference, and a private AI platform. Accelerate your AI development with NVIDIA H100, A100 & Intel Gaudi HPUs.

Abacus.AI is an AI super assistant for enterprises and professionals. It aims to automate an entire enterprise with AI building AI.

Nebius AI Studio Inference Service offers hosted open-source models for faster, cheaper, and more accurate results than proprietary APIs. Scale seamlessly with no MLOps needed, ideal for RAG and production workloads.

Discover Botco.ai's GenAI chatbots, designed to automate compliance, streamline healthcare navigation, and elevate customer engagement with innovative conversational AI solutions.

KaneAI is a GenAI-Native testing agent for high-speed Quality Engineering teams. It enables planning, authoring, and evolving tests using natural language. Discover efficient AI-driven test automation today.

Floatbot.AI is a no-code GenAI platform for building & deploying AI Voice & Chat Agents for enterprise contact center automation and real-time agent assist, integrating with any data source or service.

GenWorlds is the event-based communication framework for building multi-agent systems and a vibrant community of AI enthusiasts.

Gemini CLI is an open-source AI agent that brings the power of Gemini directly into your terminal. Access Gemini models, automate tasks, and integrate with GitHub.