EvalsOne

Overview of EvalsOne

What is EvalsOne?

EvalsOne is a comprehensive platform for iteratively developing and optimizing generative AI applications. It provides an intuitive evaluation toolbox that helps teams streamline LLMOps workflows, build confidence in their applications, and gain a competitive edge in the AI landscape.

How to use EvalsOne?

EvalsOne offers a one-stop evaluation toolbox suitable for crafting LLM prompts, fine-tuning RAG processes, and evaluating AI agents. Here's a breakdown of how to use it:

- Prepare Eval Samples with Ease: Build samples from templates and variable values, run evaluation sample sets from OpenAI Evals, or copy and paste code from the Playground.

- Comprehensive Model Integration: Supports generation and evaluation based on models deployed in various cloud and local environments, including OpenAI, Claude, Gemini, Mistral, Azure, Bedrock, Hugging Face, Groq, Ollama, Coze, FastGPT, and Dify.

- Evaluators Out-of-the-Box: Integrates industry-leading evaluators and allows for the creation of personalized evaluators suitable for complex scenarios.
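The template-and-variables idea from the first bullet can be illustrated with a minimal Python sketch. This is not EvalsOne's API: the function name `expand_samples`, the `$name` placeholder syntax, and the example values are all assumptions made for illustration. It shows one plausible way a prompt template and lists of variable values expand into a set of evaluation samples.

```python
from itertools import product
from string import Template


def expand_samples(template: str, variables: dict[str, list[str]]) -> list[str]:
    """Fill the template with every combination of the variable values.

    Hypothetical helper for illustration; not part of any EvalsOne SDK.
    """
    names = list(variables)
    prompts = []
    # product() yields one tuple per combination, in the order the lists are given.
    for combo in product(*(variables[name] for name in names)):
        prompts.append(Template(template).substitute(dict(zip(names, combo))))
    return prompts


# Two variables with two values each give four evaluation samples.
samples = expand_samples(
    "Summarize the following $doc_type in a $tone tone.",
    {"doc_type": ["email", "report"], "tone": ["formal", "casual"]},
)
for prompt in samples:
    print(prompt)
```

Each resulting prompt can then be sent to whichever model is under test, so that the same template is evaluated across all variable combinations.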

Why is EvalsOne important?

EvalsOne is important because it helps teams across the AI lifecycle streamline their LLMOps workflow. For developers, researchers, and domain experts alike, EvalsOne provides an intuitive process and interface that supports:

- Easy creation of evaluation runs and organization of runs into levels

- Quick iteration and in-depth analysis through forked runs

- Creation of multiple prompt versions for comparison and optimization

- Clear and intuitive evaluation reports

Where can I use EvalsOne?

You can use EvalsOne in various LLMOps stages, from development to production environments. It is applicable for:

- Crafting LLM prompts

- Fine-tuning RAG processes

- Evaluating AI agents

What is the best way to evaluate your generative AI apps?

The best way to evaluate your generative AI apps with EvalsOne is to combine rule-based and LLM-based approaches and to bring in human evaluation where expert judgment is needed. EvalsOne supports multiple judging methods, such as rating, scoring, and pass/fail, and it reports the reasoning behind each judgment as well as the result.
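As a rough illustration of combining a rule-based check with an LLM-based judge, the Python sketch below runs a cheap rule first and only calls the judge when the rule passes, returning a pass/fail result, a score, and the reasoning. Everything here is hypothetical: `Verdict`, `rule_check`, `evaluate`, the 0.7 threshold, and `stub_judge` (a stand-in that scores by word count instead of calling a real model) are assumptions for the example, not EvalsOne functionality.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    passed: bool
    score: float
    reasoning: str


def rule_check(output: str, required_terms: list[str]) -> Verdict:
    """Rule-based evaluator: every required term must appear in the output."""
    missing = [t for t in required_terms if t.lower() not in output.lower()]
    if missing:
        return Verdict(False, 0.0, f"missing required terms: {', '.join(missing)}")
    return Verdict(True, 1.0, "all required terms present")


def evaluate(
    output: str,
    required_terms: list[str],
    judge: Callable[[str], Verdict],
    threshold: float = 0.7,  # assumed pass mark, chosen for illustration
) -> Verdict:
    """Run the rule first; consult the judge only if the rule passes."""
    rule = rule_check(output, required_terms)
    if not rule.passed:
        return rule
    judged = judge(output)
    reasoning = f"{rule.reasoning}; judge: {judged.reasoning}"
    return Verdict(judged.score >= threshold, judged.score, reasoning)


def stub_judge(output: str) -> Verdict:
    """Stand-in for an LLM judge: scores by word count, capped at 1.0."""
    words = len(output.split())
    score = min(words / 10, 1.0)
    return Verdict(score >= 0.7, score, f"{words} words")


good = evaluate(
    "Paris is the capital of France and its largest city.",
    ["Paris", "France"],
    stub_judge,
)
bad = evaluate("London is big.", ["Paris"], stub_judge)
print(good)
print(bad)
```

In a real setup the `judge` callable would wrap a model call, and borderline verdicts could be routed to a human reviewer; running the rule first simply avoids spending a model call on outputs that already fail a hard requirement.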

Best Alternative Tools to "EvalsOne"

HoneyHive provides AI evaluation, testing, and observability tools for teams building LLM applications. It offers a unified LLMOps platform.

UpTrain is a full-stack LLMOps platform providing enterprise-grade tooling to evaluate, experiment, monitor, and test LLM applications. Host on your own secure cloud environment and scale AI confidently.

Tryolabs is an AI and machine learning consulting company that helps businesses create value by providing tailored AI solutions, data engineering, and MLOps.

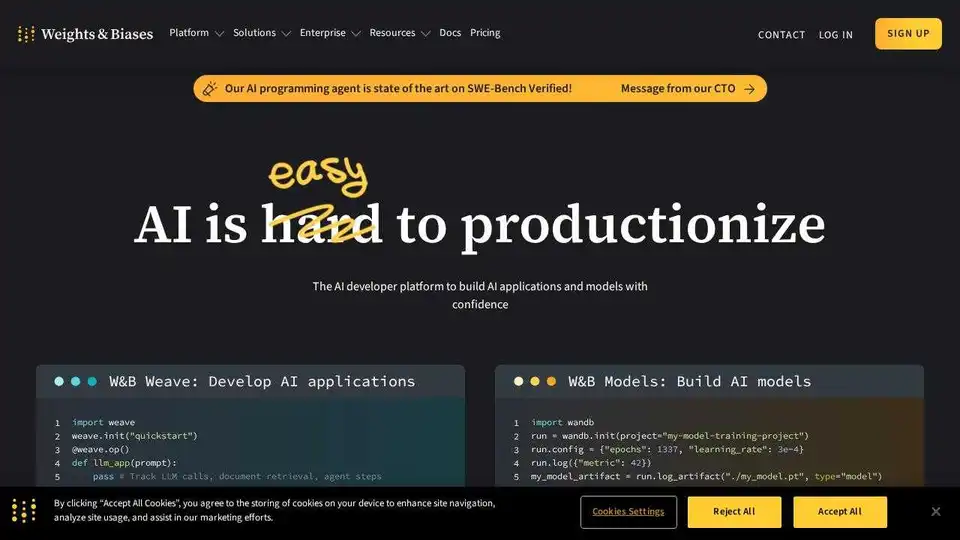

Weights & Biases is the AI developer platform to train and fine-tune models, manage models, and track GenAI applications. Build AI agents and models with confidence.

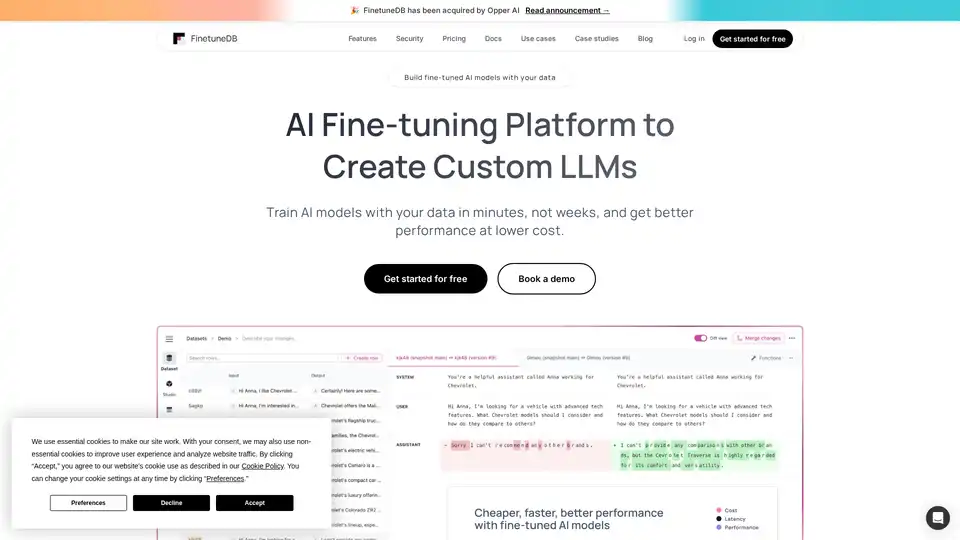

FinetuneDB is an AI fine-tuning platform that lets you create and manage datasets to train custom LLMs quickly and cost-effectively, improving model performance with production data and collaborative tools.

UBIAI enables you to build powerful and accurate custom LLMs in minutes. Streamline your AI development process and fine-tune LLMs for reliable AI solutions.

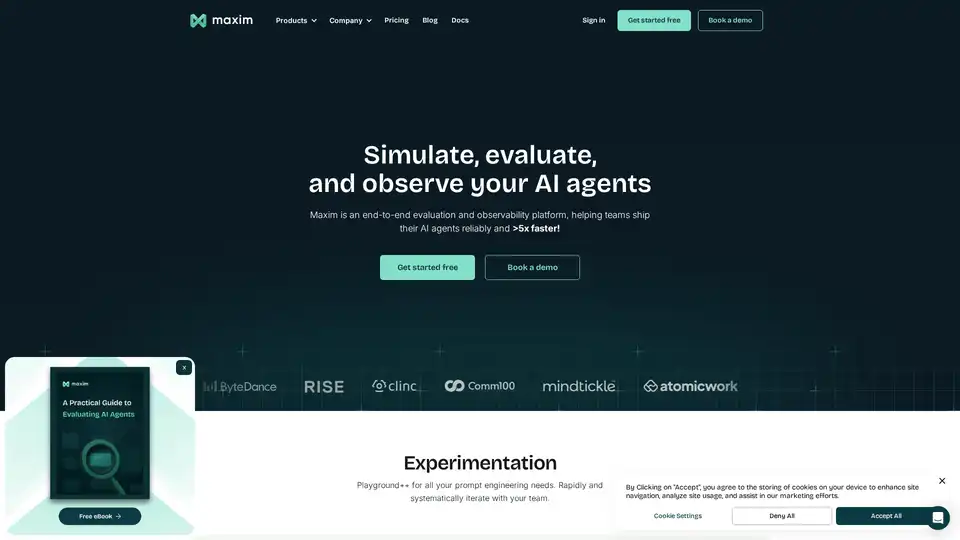

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

Openlayer is an enterprise AI platform providing unified AI evaluation, observability, and governance for AI systems, from ML to LLMs. Test, monitor, and govern AI systems throughout the AI lifecycle.

Selene by Atla AI provides precise judgments on your AI app's performance. Explore open source LLM Judge models for industry-leading accuracy and reliable AI evaluation.

DomainScore.ai is an AI-powered tool providing comprehensive domain name evaluation and scoring based on relevance, brandability, trustworthiness, SEO, and simplicity.

Arize AI provides a unified LLM observability and agent evaluation platform for AI applications, from development to production. Optimize prompts, trace agents, and monitor AI performance in real time.

Future AGI offers a unified LLM observability and AI agent evaluation platform for AI applications, ensuring accuracy and responsible AI from development to production.

AnswerWriting offers free UPSC Mains answer writing practice with AI evaluation to improve structure, clarity, and relevance instantly.

Velvet, acquired by Arize, provided a developer gateway for analyzing, evaluating, and monitoring AI features. Arize is a unified platform for AI evaluation and observability, helping accelerate AI development.