Selene

Overview of Selene

Selene by Atla AI: Frontier AI Evaluation Models

What is Selene?

Selene is a suite of open-source LLM Judge models developed by Atla AI, designed to provide precise and reliable evaluations of AI application performance. It helps developers build trust with customers by ensuring the reliability of their generative AI apps through detailed scores and actionable critiques.

How does Selene work?

Selene models act as LLM-as-a-Judge evaluators: they analyze AI responses and return scores and critiques. You can use the Selene models through Hugging Face Transformers, Ollama, or GitHub.
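As a rough illustration of the score-and-critique flow, the sketch below builds an evaluation prompt and parses a judge reply. The prompt layout, the 1-5 scale, the `Score: N` line convention, and the names `build_judge_prompt`, `parse_judgment`, and `Judgment` are all assumptions made for this example; they are not Atla's official prompt template or output format.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Judgment:
    score: Optional[int]  # None when no score line could be found
    critique: str


def build_judge_prompt(user_input: str, response: str, criteria: str) -> str:
    """Assemble a plain-text evaluation prompt (hypothetical layout, not an official template)."""
    return (
        "You are evaluating an AI response.\n\n"
        f"Evaluation criteria:\n{criteria}\n\n"
        f"User input:\n{user_input}\n\n"
        f"AI response:\n{response}\n\n"
        "Reply with a critique, then a final line of the form 'Score: <1-5>'."
    )


def parse_judgment(text: str) -> Judgment:
    """Split judge output into critique and integer score, assuming a 'Score: N' line."""
    match = re.search(r"^\s*Score:\s*(\d+)\s*$", text, flags=re.MULTILINE | re.IGNORECASE)
    if match is None:
        # No recognizable score line: keep the whole text as the critique.
        return Judgment(score=None, critique=text.strip())
    critique = (text[:match.start()] + text[match.end():]).strip()
    return Judgment(score=int(match.group(1)), critique=critique)
```

The string returned by `build_judge_prompt` would be sent to the judge model as the user message, and `parse_judgment` would be applied to the decoded reply. If the model's real output format differs, only the regular expression needs to change.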

Selene Models

Choose the size that fits your evaluation needs from two primary models:

- Selene 1: The flagship model offering industry-leading accuracy across a wide variety of evaluation tasks. Ideal for pre-production evaluations.

- Selene 1 Mini: A lean, optimized version perfect for running evaluations at inference time, prioritizing speed and efficiency.

Key Features and Benefits

- High Accuracy: Selene is designed to provide the most accurate evaluations available.

- Versatile Evaluation: Suitable for a wide variety of eval tasks.

- Optimized for Speed: Selene 1 Mini is optimized for running evals quickly during inference.

- Open Source: The models are open source and available through Hugging Face Transformers, Ollama, or GitHub.

How to Use Selene

To use Selene, you can leverage the Hugging Face Transformers library. Here's a simple example:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "AtlaAI/Selene-1-Mini-Llama-3.1-8B"

model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto") # place the weights on the available device(s)

tokenizer = AutoTokenizer.from_pretrained(model_id)

prompt = "I heard you can evaluate my responses?" # replace with your eval prompt

messages = [{"role": "user", "content": prompt}]

text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

model_inputs = tokenizer([text], return_tensors="pt").to(model.device) # move the inputs to the model's device

generated_ids = model.generate(**model_inputs, max_new_tokens=512, do_sample=True) # passes input_ids and attention_mask

generated_ids = [output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

Use Cases

- Evaluating Agent Performance: Use Selene to evaluate the performance of AI agents, track errors, and gain instant insights.

- Building Trust: Ensure the reliability of your generative AI app to build trust with customers.

- Pre-Production Evals: Use Selene 1 for rigorous evaluations before deploying your AI application.

- Inference-Time Evals: Use Selene 1 Mini for quick evaluations during inference.
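An inference-time eval can be sketched as a gate that only releases a draft response when the judge scores it highly enough. This is a minimal sketch under stated assumptions: `guarded_reply`, `stub_judge`, the `(score, critique)` return shape, the threshold of 4, and the fallback message are hypothetical names and values chosen for illustration. In practice the judge callable would wrap a call to Selene 1 Mini.

```python
from typing import Callable, Tuple

# A judge is any callable that returns (score, critique) for an input/response pair.
Judge = Callable[[str, str], Tuple[int, str]]

DEFAULT_FALLBACK = "Sorry, I can't give a reliable answer to that."


def guarded_reply(user_input: str, draft: str, judge: Judge,
                  min_score: int = 4, fallback: str = DEFAULT_FALLBACK) -> str:
    """Return the draft only if the judge scores it at or above min_score."""
    score, _critique = judge(user_input, draft)
    return draft if score >= min_score else fallback


def stub_judge(user_input: str, response: str) -> Tuple[int, str]:
    """Stand-in judge for demonstration only: penalizes empty responses."""
    if not response.strip():
        return 1, "The response is empty."
    return 5, "The response addresses the input."
```

Keeping the judge behind a plain callable means the same gate can be exercised with a stub in tests and with a real model in production.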

Why is Selene important?

As AI applications become more prevalent, ensuring their reliability and trustworthiness is crucial. Selene provides a robust and accurate means of evaluating AI performance, empowering developers to create safer and more reliable AI systems. It is particularly important for building trust with customers, especially in generative AI applications where outputs can be unpredictable.

Where can I use Selene?

You can integrate Selene into your AI development workflow using Hugging Face Transformers. You can also explore Agent Evals by Atla to evaluate and track agents.

By providing open-source evaluation models, Atla AI contributes to a future with safe and reliable AI.

Best Alternative Tools to "Selene"

Parea AI is an AI experimentation and annotation platform that helps teams confidently ship LLM applications. It offers features for experiment tracking, observability, human review, and prompt deployment.

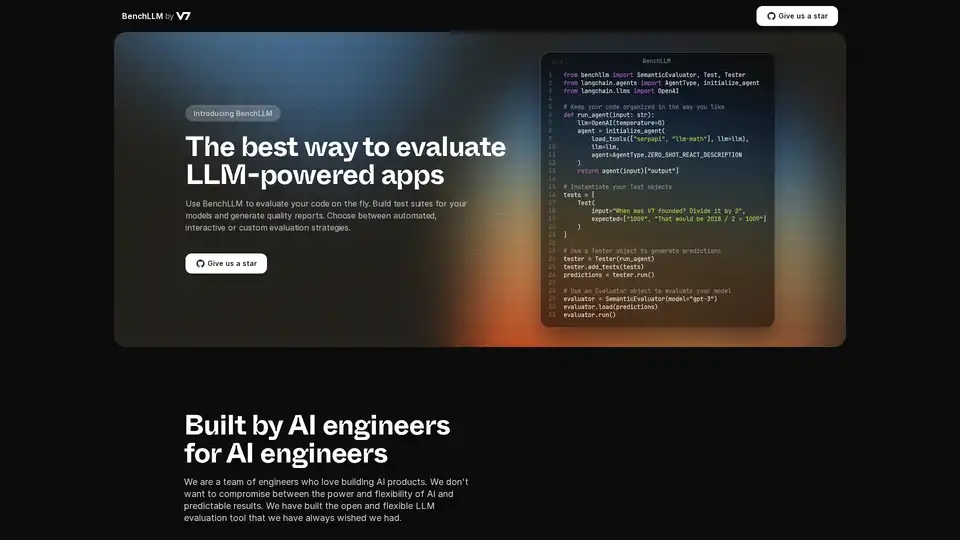

BenchLLM is an open-source tool for evaluating LLM-powered apps. Build test suites, generate reports, and monitor model performance with automated, interactive, or custom strategies.

Teammately is the AI Agent for AI Engineers, automating and fast-tracking every step of building reliable AI at scale. Build production-grade AI faster with prompt generation, RAG, and observability.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

Pydantic AI is a GenAI agent framework in Python, designed for building production-grade applications with Generative AI. Supports various models, offers seamless observability, and ensures type-safe development.

Arize AI provides a unified LLM observability and agent evaluation platform for AI applications, from development to production. Optimize prompts, trace agents, and monitor AI performance in real time.

Bolt Foundry provides context engineering tools to make AI behavior predictable and testable, helping you build trustworthy LLM products. Test LLMs like you test code.

Latitude is an open-source platform for prompt engineering, enabling domain experts to collaborate with engineers to deliver production-grade LLM features. Build, evaluate, and deploy AI products with confidence.

Openlayer is an enterprise AI platform providing unified AI evaluation, observability, and governance for AI systems, from ML to LLMs. Test, monitor, and govern AI systems throughout the AI lifecycle.

Monitor, analyze, and protect AI agents, LLMs, and ML models with Fiddler AI. Gain visibility and actionable insights with the Fiddler Unified AI Observability Platform.

Confident AI is the DeepEval LLM evaluation platform for testing, benchmarking, and improving LLM application performance.

LangWatch is an AI agent testing, LLM evaluation, and LLM observability platform. Test agents, prevent regressions, and debug issues.

Future AGI offers a unified LLM observability and AI agent evaluation platform for AI applications, ensuring accuracy and responsible AI from development to production.