FILM Frame Interpolation

Overview of FILM Frame Interpolation

What is FILM Frame Interpolation?

FILM, short for Frame Interpolation for Large Motion, is a neural network developed by Google Research that generates smooth intermediate frames in videos, particularly those with significant scene motion. Unlike methods that rely on additional pre-trained networks for optical flow or depth estimation, FILM uses a single unified network that can be trained directly from frame triplets. Released as an open-source TensorFlow 2 implementation, it is accessible to developers and researchers who want to improve video fluidity without a complex setup.

The model stands out for its ability to handle large scene motions, such as fast-moving objects or quick camera pans, where conventional interpolation techniques often produce artifacts or blurring. By sharing convolution weights across its multi-scale feature extractors, FILM achieves state-of-the-art benchmark scores while keeping the architecture lightweight and trainable from frames alone.

How Does FILM Work?

At its core, FILM processes two input frames (frame1 and frame2) to predict intermediate frames at specified timestamps. The process begins with a multi-scale feature extraction stage, where convolutional layers analyze the inputs at different resolutions to capture both fine details and broader motion patterns. These features are then fused and refined through a series of upsampling and blending operations to generate the output frame or video sequence.
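The multi-scale idea can be illustrated with a small, dependency-free sketch. It is not FILM's actual extractor: the 2x2 average pooling, the `horizontal_gradient` stand-in for a learned convolution, and all function names are assumptions made for illustration. What it shows is one extractor being reused, unchanged, at every resolution of an image pyramid.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def downsample_2x(image: Image) -> Image:
    """Halve height and width by averaging each 2x2 block of pixels."""
    height, width = len(image) // 2, len(image[0]) // 2
    return [
        [
            (image[2 * r][2 * c] + image[2 * r][2 * c + 1]
             + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(width)
        ]
        for r in range(height)
    ]


def build_pyramid(image: Image, levels: int) -> List[Image]:
    """Return [full resolution, 1/2, 1/4, ...] with `levels` entries."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid


def horizontal_gradient(image: Image) -> Image:
    """Toy stand-in for a learned convolution (an assumption, not FILM's filter)."""
    return [[row[c + 1] - row[c] for c in range(len(row) - 1)] for row in image]


def extract_multiscale(image: Image, levels: int,
                       extractor: Callable[[Image], Image]) -> List[Image]:
    """Apply the same extractor at every pyramid level (shared weights)."""
    return [extractor(level) for level in build_pyramid(image, levels)]


frame = [[float(r + c) for c in range(8)] for r in range(8)]
features = extract_multiscale(frame, levels=3, extractor=horizontal_gradient)
for level, feat in enumerate(features):
    print(f"level {level}: {len(feat)} x {len(feat[0])} feature map")
```

Coarser levels see the same motion as a smaller pixel displacement, which is one intuition for why a shared extractor across scales can help with large motion.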

Key to its design is the avoidance of external pre-trained networks. Traditional frame interpolation pipelines often compute pixel-level correspondences with a separate optical flow model, whereas FILM learns motion inside its own network. During inference, you control the interpolation depth with the 'times_to_interpolate' parameter: set it to 1 for a single midpoint frame (at t=0.5), or higher (up to 8) for a video. The video output contains 2^times_to_interpolate + 1 frames in total and plays at 30 FPS. Each additional level recursively interpolates between every pair of adjacent frames, which keeps transitions smooth even in challenging scenarios like occlusions or rapid deformations.
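The recursive scheme and the 2^times_to_interpolate + 1 frame count can be checked with a short sketch. The `blend_midpoint` function below is a placeholder that simply averages pixels; it stands in for the network's midpoint prediction and is not what FILM computes. The function names are likewise hypothetical.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # toy frame: a flat list of pixel intensities


def blend_midpoint(a: Sequence[float], b: Sequence[float]) -> Frame:
    """Placeholder midpoint predictor: a plain average, NOT FILM's network."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


def interpolate_recursively(
    frame1: Sequence[float],
    frame2: Sequence[float],
    times_to_interpolate: int,
    midpoint: Callable[[Sequence[float], Sequence[float]], Frame] = blend_midpoint,
) -> List[Frame]:
    """Return both inputs plus all in-between frames, in temporal order."""
    if times_to_interpolate == 0:
        return [list(frame1), list(frame2)]
    middle = midpoint(frame1, frame2)
    left = interpolate_recursively(frame1, middle, times_to_interpolate - 1, midpoint)
    right = interpolate_recursively(middle, frame2, times_to_interpolate - 1, midpoint)
    return left + right[1:]  # the middle frame appears in both halves; keep one copy


frames = interpolate_recursively([0.0], [8.0], times_to_interpolate=3)
print(len(frames))  # 2**3 + 1 frames
print([f[0] for f in frames])
```

Each level doubles the number of gaps between frames, so `n` levels turn one gap into 2^n gaps bounded by 2^n + 1 frames.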

The underlying technical report from 2022 details how the model was trained on diverse video datasets, optimizing for perceptual quality over pixel-perfect accuracy. The resulting frames are meant to look natural to a human viewer rather than to minimize per-pixel error, which suits practical video enhancement as well as research benchmarks.

How to Use FILM Frame Interpolation?

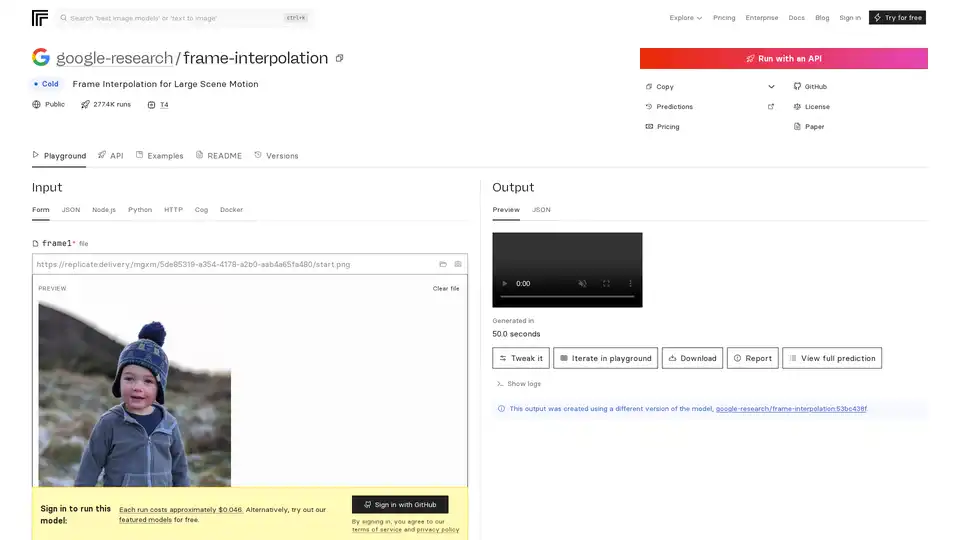

Getting started with FILM is straightforward, thanks to its deployment on platforms like Replicate for API access or its GitHub repository for local runs.

Via Replicate API: Upload two image files as frame1 and frame2. Adjust 'times_to_interpolate' (default 1) to define output complexity. Predictions run on Nvidia T4 GPUs, typically completing in under 4 minutes, with costs around $0.046 per run (about 21 runs per dollar). Outputs include preview images, downloadable videos, or JSON metadata. No sign-in required for free trials on featured models, but GitHub login unlocks full access.
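A call through the Replicate Python client might look like the sketch below. The input names `frame1`, `frame2`, and `times_to_interpolate` come from the description above; the helper names, the 1 to 8 range check, and the cost constant are assumptions for illustration. The version identifier quoted on this page is abbreviated, so the full model reference is left as a parameter. Running `run_prediction` requires the third-party `replicate` package and a `REPLICATE_API_TOKEN` environment variable.

```python
from typing import Any, Dict

COST_PER_RUN_USD = 0.046  # approximate figure quoted above; subject to change


def build_inputs(frame1_path: str, frame2_path: str,
                 times_to_interpolate: int = 1) -> Dict[str, Any]:
    """Validate and collect the three inputs described in the text."""
    if not 1 <= times_to_interpolate <= 8:
        raise ValueError("times_to_interpolate must be between 1 and 8")
    return {
        "frame1": frame1_path,
        "frame2": frame2_path,
        "times_to_interpolate": times_to_interpolate,
    }


def run_prediction(model_ref: str, inputs: Dict[str, Any]) -> Any:
    """Send one prediction to Replicate; model_ref is 'owner/name:<full version id>'."""
    import replicate  # third-party client: pip install replicate

    with open(inputs["frame1"], "rb") as f1, open(inputs["frame2"], "rb") as f2:
        return replicate.run(
            model_ref,
            input={
                "frame1": f1,
                "frame2": f2,
                "times_to_interpolate": inputs["times_to_interpolate"],
            },
        )


def estimate_cost(runs: int) -> float:
    """Rough cost in USD for a number of runs at the quoted per-run price."""
    return runs * COST_PER_RUN_USD


def runs_per_dollar() -> int:
    """Whole runs that fit in one dollar at the quoted price."""
    return int(1 / COST_PER_RUN_USD)


print(build_inputs("frame1.png", "frame2.png", times_to_interpolate=3))
print(f"~{runs_per_dollar()} runs per dollar, 10 runs cost about ${estimate_cost(10):.2f}")
```

Keeping the payload builder separate from the network call makes the inputs easy to validate before any paid prediction is started.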

Local Setup with Docker: Clone the GitHub repo at https://github.com/google-research/frame-interpolation. Use Docker for easy environment isolation—pull the image and run predictions via command-line or integrate into Python/Node.js scripts. The Cog framework supports custom inputs, making it extensible for batch processing.
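For a local run, a prediction through Cog could be launched as sketched here. This assumes Docker and the Cog CLI are installed and that the cloned checkout contains a Cog configuration exposing the same three inputs as the hosted model; the file names and the helper function are hypothetical. The sketch only builds and prints the command, and the commented line shows how it might be executed.

```python
import shlex
from typing import List


def cog_predict_command(frame1: str, frame2: str,
                        times_to_interpolate: int = 1) -> List[str]:
    """Build a `cog predict` invocation; `@` marks a value as a file path."""
    return [
        "cog", "predict",
        "-i", f"frame1=@{frame1}",
        "-i", f"frame2=@{frame2}",
        "-i", f"times_to_interpolate={times_to_interpolate}",
    ]


command = cog_predict_command("frame1.png", "frame2.png", times_to_interpolate=2)
print(shlex.join(command))

# To execute from inside the repository checkout (assumption: cog.yaml is present):
#   import subprocess
#   subprocess.run(command, check=True)
```

Building the command as a list avoids shell quoting problems when frame paths contain spaces, which matters once this is wrapped in a batch-processing loop.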

Input Requirements: Frames should be sequential images (e.g., JPEG/PNG). For video interpolation, process pairs recursively. Webcam capture is supported for quick tests.

Examples on Replicate showcase real-world uses, like interpolating sports footage or animated sequences, demonstrating artifact-free results.

Why Choose FILM for Your Projects?

FILM excels where other tools falter, offering superior handling of large motions without the computational overhead of multi-model pipelines. Benchmark scores from the ECCV 2022 paper highlight its edge over competitors in metrics like interpolation PSNR and SSIM. It's open-source under a permissive license, fostering community contributions—forks and related models like zsxkib/film-frame-interpolation-for-large-motion adapt it for video-specific tasks.

Cost-effectiveness is another draw: free local runs versus affordable cloud predictions. Plus, its YouTube demos and paper provide transparent validation, building trust for production use. If you're dealing with jerky low-FPS videos from drones or action cams, FILM turns them into cinematic experiences effortlessly.

Who is FILM Frame Interpolation For?

This tool targets AI enthusiasts, video editors, and machine learning practitioners focused on computer vision.

Developers and Researchers: Ideal for experimenting with neural interpolation in papers or prototypes, especially in areas like video compression or animation.

Content Creators: Filmmakers and YouTubers can increase frame rates for smoother playback, enhancing mobile or web videos without expensive hardware.

Industry Pros: In gaming (e.g., boosting the frame rate of pre-rendered animations) or surveillance (interpolating sparse footage), FILM's efficiency shines. Its prediction times make it unsuitable for real-time applications, but it works well for offline enhancement.

Related models on Replicate, such as pollinations/rife-video-interpolation or zsxkib/st-mfnet, complement FILM by offering video-to-video workflows, but FILM's focus on large motion gives it a niche advantage.

Practical Value and Use Cases

The real power of FILM lies in its versatility. In education, it aids in creating slow-motion analyses for physics demos. For marketing, interpolate product shots to showcase fluid rotations. User feedback from GitHub praises its ease in handling occlusions, common in real footage.

Consider a case: A wildlife videographer with 15 FPS clips from a shaky handheld camera. Using FILM, they generate 30 FPS outputs, preserving details in fast animal movements—transforming raw footage into professional reels.
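The arithmetic behind such a conversion can be sketched as follows, assuming every consecutive pair of source frames is interpolated with the same 'times_to_interpolate' value and shared boundary frames are counted once. The function names and the 10-second clip length are illustrative assumptions.

```python
def output_frame_count(input_frames: int, times_to_interpolate: int) -> int:
    """Frames after interpolating every consecutive pair, shared frames counted once."""
    if input_frames < 2:
        raise ValueError("need at least two frames to interpolate")
    gaps = input_frames - 1
    return gaps * 2 ** times_to_interpolate + 1


def output_fps(input_fps: float, times_to_interpolate: int) -> float:
    """Playback rate that keeps the clip's duration unchanged."""
    return input_fps * 2 ** times_to_interpolate


# A hypothetical 10-second clip at 15 FPS has 150 frames.
source_frames, source_fps = 150, 15
result_frames = output_frame_count(source_frames, times_to_interpolate=1)
result_fps = output_fps(source_fps, times_to_interpolate=1)
print(f"{source_frames} frames at {source_fps} FPS -> "
      f"{result_frames} frames at {result_fps} FPS")
```

One interpolation level per pair is enough to go from 15 to 30 FPS; a second level would give 60 FPS at roughly four times the original frame count.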

Pricing is transparent: Replicate's pay-per-run model scales with usage, while self-hosting eliminates ongoing costs. For FAQs, check the README for help with troubleshooting inputs or version differences (e.g., the current version is google-research/frame-interpolation:53bc438f).

In summary, FILM represents Google's commitment to accessible AI for media enhancement. Whether you're optimizing workflows or pushing research boundaries, it's a reliable choice for superior frame interpolation. Dive into the GitHub repo or Replicate playground to see it in action—your videos will never look the same.

Best Alternative Tools to "FILM Frame Interpolation"

VideoProc Converter AI is a one-stop AI media processing solution for video/image/audio enhancement, converting, editing, compressing, downloading, and recording with GPU acceleration. Supports 4K/8K videos, DVDs, and online media.

WinXDVD: Multimedia solution for DVD ripping, AI video enhancement, and iPhone data management. Enhance videos, rip DVDs quickly, and transfer iPhone data with ease. Trusted by millions worldwide.

VIDIO simplifies video editing with AI, reducing time and making it accessible for beginners. Features include AI-powered motion graphics, highlight creation, object transformation, and video enhancement. Compatible with cloud storage and desktop editors.

Enhance video quality to 8K with HitPaw VikPea, the AI video enhancer that unblurs, restores, and colorizes your videos in one click. Experience fast, stable, and large-scale video enhancement.

Enhance your videos with Topaz Video, an AI-powered software for upscaling, denoising, stabilizing, and smoothing footage. Trusted by creative pros for cinema-grade results.

Winxvideo AI is a comprehensive AI video toolkit to upscale video/image to 4K, stabilize shaky video, boost fps, convert, compress, edit video, and record screen with GPU acceleration.

AVCLabs Video Enhancer AI uses advanced AI technology to enhance video quality, upscale resolution from SD to 8K, restore old footage, colorize black-and-white videos, and stabilize shaky footage with professional-grade results.

AniPortrait is an open-source AI framework for generating photorealistic portrait animations driven by audio or video inputs. It supports self-driven, face reenactment, and audio-driven modes for high-quality video synthesis.

Discover bigmp4, a cutting-edge AI tool for lossless video enlargement to 2K/4K/8K, black-and-white colorization, AI interpolation for smooth 60-240fps, and silky slow motion. Supports MP4, MOV, and more for vivid enhancements.

AnyEnhancer is an AI video enhancer that transforms videos into high quality by upscaling to 4K, denoising, colorizing, smoothing, and restoring faces. Enhance your video now!

Create your own TV shows and movies with Focal's AI-powered video creation software. Generate from a script, edit with chat, and use the latest AI models for video extension, frame interpolation, and more.

Anvsoft offers AI-powered video and photo tools, including AVCLabs Video Enhancer AI, designed to enhance multimedia experiences.

ComfyOnline provides an online environment for running ComfyUI workflows, generating APIs for AI application development.

Aiarty offers AI image/video enhancement and matting software to upscale, enhance, restore images/videos, remove or change backgrounds.