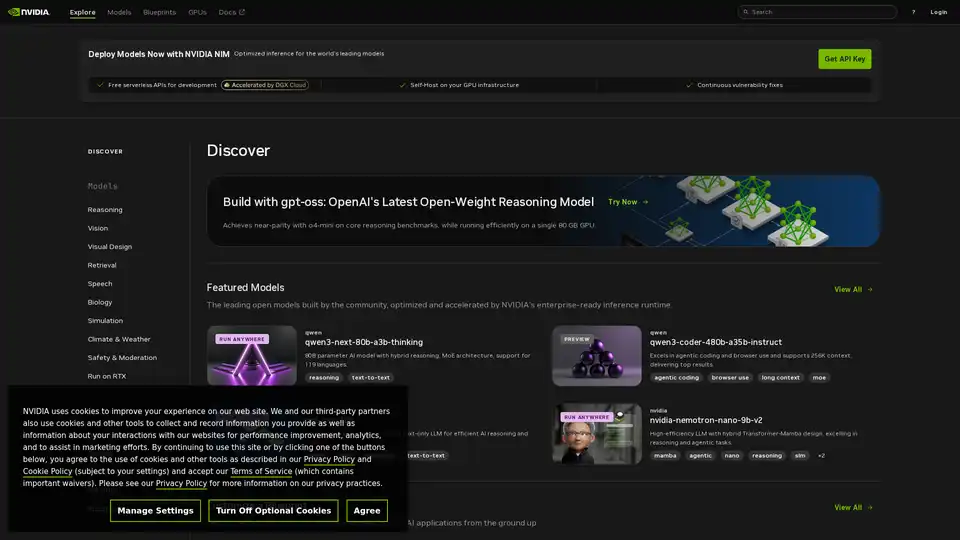

NVIDIA NIM

Overview of NVIDIA NIM

NVIDIA NIM APIs: Accelerating Enterprise Generative AI

NVIDIA NIM (NVIDIA Inference Microservices) APIs are designed to provide optimized inference for leading AI models, enabling developers to build and deploy enterprise-grade generative AI applications. These APIs offer flexibility through both serverless deployment for development and self-hosting options on your own GPU infrastructure.

What is NVIDIA NIM?

NVIDIA NIM is a suite of inference microservices that accelerates the deployment of AI models. It is optimized for performance, security, and reliability, which makes it suitable for enterprise applications. NIM also receives continuous vulnerability fixes, which helps keep the environment that runs your models secure and stable.

How does NVIDIA NIM work?

NVIDIA NIM works by providing optimized inference for a variety of AI models, including reasoning, vision, visual design, retrieval, speech, biology, simulation, climate & weather, and safety & moderation models. It supports different models like gpt-oss, qwen, and nvidia-nemotron-nano-9b-v2 to fit various use cases.
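As an illustration of working with the model names mentioned above, the following minimal sketch filters a model catalog by keyword. It assumes the catalog arrives as an OpenAI-style JSON listing (`{"data": [{"id": ...}]}`). That response shape, the `SAMPLE_CATALOG` payload, and the `find_models` helper are hypothetical and are not taken from NVIDIA's documentation.

```python
import json

# Hypothetical catalog payload. The OpenAI-style shape is an assumption;
# the model names are the ones mentioned in this overview.
SAMPLE_CATALOG = json.dumps({
    "data": [
        {"id": "gpt-oss"},
        {"id": "qwen"},
        {"id": "nvidia-nemotron-nano-9b-v2"},
    ]
})


def find_models(catalog_json: str, keyword: str) -> list[str]:
    """Return the sorted model IDs that contain `keyword` (case-insensitive)."""
    catalog = json.loads(catalog_json)
    needle = keyword.lower()
    return sorted(
        entry["id"]
        for entry in catalog.get("data", [])
        if needle in entry.get("id", "").lower()
    )


if __name__ == "__main__":
    print(find_models(SAMPLE_CATALOG, "nemotron"))
```

In a real integration, the catalog string would come from whatever model-listing endpoint or page your deployment exposes, rather than from a hard-coded sample.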

Key functionalities include:

- Optimized Inference: NVIDIA's enterprise-ready inference runtime optimizes and accelerates open models built by the community.

- Flexible Deployment: Run models anywhere, with options for serverless APIs for development or self-hosting on your GPU infrastructure.

- Continuous Security: Benefit from continuous vulnerability fixes, ensuring a secure environment for running AI models.
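The flexible deployment option above can be sketched as a small configuration switch, so that application code does not care whether it talks to a serverless API or a self-hosted instance. Both base URLs below are placeholders, and the `Endpoint` type, the `resolve_endpoint` helper, and the idea that a self-hosted instance needs no API key are assumptions made for illustration only.

```python
from dataclasses import dataclass

# Placeholder URLs (assumptions). Substitute the values from your own setup.
SERVERLESS_BASE_URL = "https://serverless.example.com/v1"
SELF_HOSTED_BASE_URL = "http://localhost:8000/v1"


@dataclass(frozen=True)
class Endpoint:
    base_url: str
    requires_api_key: bool


def resolve_endpoint(mode: str) -> Endpoint:
    """Map a deployment mode to the endpoint settings the application should use."""
    if mode == "serverless":
        # The serverless APIs are accessed with an API key.
        return Endpoint(SERVERLESS_BASE_URL, requires_api_key=True)
    if mode == "self-hosted":
        # Assumption: a private, self-hosted instance is reached without a key.
        return Endpoint(SELF_HOSTED_BASE_URL, requires_api_key=False)
    raise ValueError(f"unknown deployment mode: {mode!r}")


if __name__ == "__main__":
    print(resolve_endpoint("serverless"))
    print(resolve_endpoint("self-hosted"))
```

Keeping the choice in one function means a project can start on the serverless APIs during development and move to its own GPU infrastructure later by changing a single setting.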

Key Features and Benefits

- Free Serverless APIs: Access free serverless APIs for development purposes.

- Self-Hosting: Deploy on your own GPU infrastructure for greater control and customization.

- Broad Model Support: Supports a wide range of models, including qwen, gpt-oss, and nvidia-nemotron-nano-9b-v2.

- Optimized for NVIDIA RTX: Designed to run efficiently on NVIDIA RTX GPUs.

How to use NVIDIA NIM?

- Get API Key: Obtain an API key to access the serverless APIs.

- Explore Models: Discover the available models for reasoning, vision, speech, and more.

- Choose Deployment: Select between serverless deployment or self-hosting on your GPU infrastructure.

- Integrate into Applications: Integrate the APIs into your AI applications to leverage optimized inference.
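The steps above can be sketched with the Python standard library alone. This is a minimal sketch, not NVIDIA's documented client: it assumes the API accepts OpenAI-style chat completion requests with a bearer token. The `BASE_URL` placeholder, the `/chat/completions` path, the `NIM_API_KEY` environment variable, and the helper names are all hypothetical.

```python
import json
import os
import urllib.request

# Placeholder base URL (assumption). Replace with your real endpoint.
BASE_URL = "https://nim.example.com/v1"


def build_chat_request(
    api_key: str, model: str, prompt: str, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build (but do not send) a chat request in the assumed OpenAI-style format."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


if __name__ == "__main__":
    # Step 1: read the API key from the environment (hypothetical variable name).
    key = os.environ.get("NIM_API_KEY", "demo-key")
    # Steps 2 and 3: pick a model and target the chosen deployment.
    request = build_chat_request(key, "qwen", "Summarize what an inference microservice is.")
    # Step 4: inspect the request; call send(request) once BASE_URL is real.
    print(request.full_url)
    print(request.data.decode("utf-8"))
```

Separating request construction from sending keeps the example inspectable without network access, and the same builder works for a self-hosted instance by passing a different `base_url`.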

Who is NVIDIA NIM for?

NVIDIA NIM is ideal for:

- Developers: Building generative AI applications.

- Enterprises: Deploying AI models at scale.

- Researchers: Experimenting with state-of-the-art AI models.

Use Cases

NVIDIA NIM can be used in various industries, including:

- Automotive: Developing AI-powered driving assistance systems.

- Gaming: Enhancing game experiences with AI.

- Healthcare: Accelerating medical research and diagnostics.

- Industrial: Optimizing manufacturing processes with AI.

- Robotics: Creating intelligent robots for various applications.

Blueprints

NVIDIA offers blueprints to help you get started with building AI applications:

- AI Agent for Enterprise Research: Build a custom deep researcher to process and synthesize multimodal enterprise data.

- Video Search and Summarization (VSS) Agent: Ingest and extract insights from massive volumes of video data.

- Enterprise RAG Pipeline: Extract, embed, and index multimodal data for fast, accurate semantic search.

- Safety for Agentic AI: Improve safety, security, and privacy of AI systems.

Why choose NVIDIA NIM?

NVIDIA NIM provides a comprehensive solution for deploying AI models with optimized inference, flexible deployment options, and continuous security. By leveraging NVIDIA's expertise in AI and GPU technology, NIM enables you to build and deploy enterprise-grade generative AI applications more efficiently.

Whether you are building AI agents, video summarization tools, or enterprise search applications, NVIDIA NIM supplies the inference runtime, model support, and deployment options described above.

In short: NVIDIA NIM is a suite of inference microservices for deploying AI models. It works by serving optimized inference through APIs, with blueprints as starting points. To use it, get an API key, pick a model, and integrate it into your enterprise AI application.

Best Alternative Tools to "NVIDIA NIM"

Rierino is a powerful low-code platform accelerating ecommerce and digital transformation with AI agents, composable commerce, and seamless integrations for scalable innovation.

Fireworks AI delivers blazing-fast inference for generative AI using state-of-the-art, open-source models. Fine-tune and deploy your own models at no extra cost. Scale AI workloads globally.

Groq offers a hardware and software platform (LPU Inference Engine) for fast, high-quality, and energy-efficient AI inference. GroqCloud provides cloud and on-prem solutions for AI applications.

Spice.ai is an open source data and AI inference engine for building AI apps with SQL query federation, acceleration, search, and retrieval grounded in enterprise data.

Inferless offers blazing fast serverless GPU inference for deploying ML models. It provides scalable, effortless custom machine learning model deployment with features like automatic scaling, dynamic batching, and enterprise security.

Nebius AI Studio Inference Service offers hosted open-source models for faster, cheaper, and more accurate results than proprietary APIs. Scale seamlessly with no MLOps needed, ideal for RAG and production workloads.

GPUX is a serverless GPU inference platform that enables 1-second cold starts for AI models like StableDiffusionXL, ESRGAN, and AlpacaLLM with optimized performance and P2P capabilities.

Awan LLM offers an unrestricted and cost-effective LLM inference API platform with unlimited tokens, ideal for developers and power users. Process data, complete code, and build AI agents without token limits.

llama.cpp is a C/C++ library for efficient LLM inference, optimized for diverse hardware and supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.

ExLlama is a memory-efficient, standalone Python/C++/CUDA implementation of Llama for fast inference with 4-bit GPTQ quantized weights on modern GPUs.

Local AI is a free, open-source native application that simplifies experimenting with AI models locally. It offers CPU inferencing, model management, and digest verification, and doesn't require a GPU.

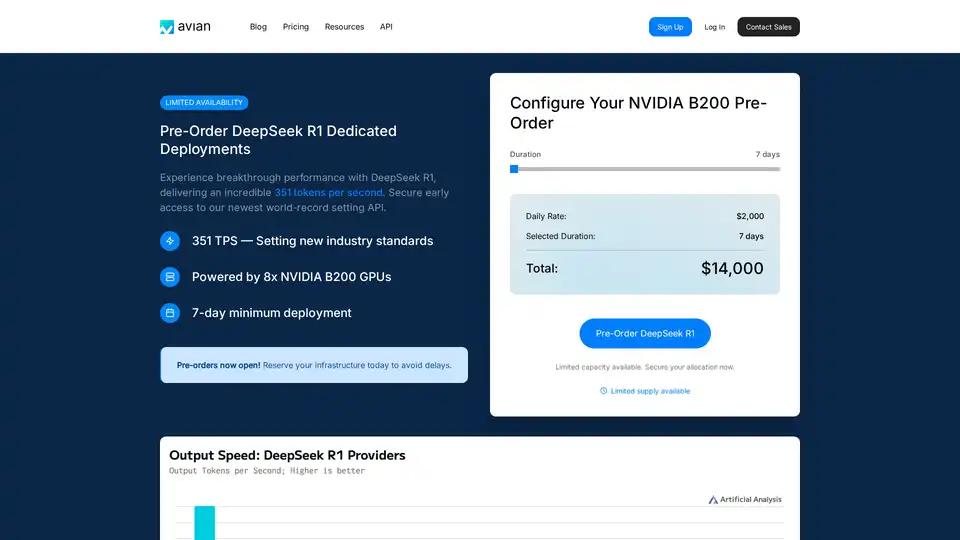

Avian API offers the fastest AI inference for open source LLMs, achieving 351 TPS on DeepSeek R1. Deploy any HuggingFace LLM at 3-10x speed with an OpenAI-compatible API. Enterprise-grade performance and privacy.

Mirai is an on-device AI platform enabling developers to deploy high-performance AI directly within their apps with zero latency, full data privacy, and no inference costs. It offers a fast inference engine and smart routing for optimized performance.

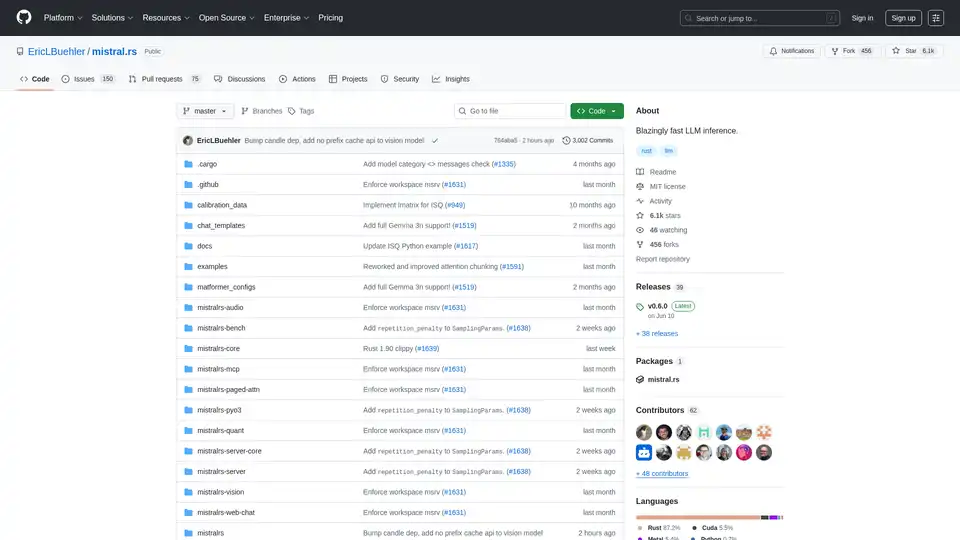

mistral.rs is a blazingly fast LLM inference engine written in Rust, supporting multimodal workflows and quantization. Offers Rust, Python, and OpenAI-compatible HTTP server APIs.