Friendli Inference

Overview of Friendli Inference

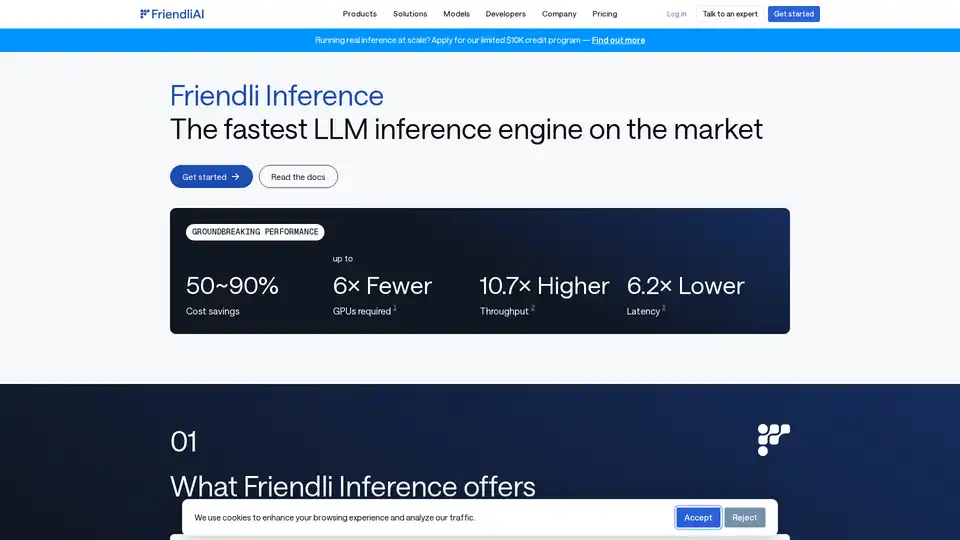

Friendli Inference: The Fastest LLM Inference Engine

What is Friendli Inference?

Friendli Inference is a highly optimized engine designed to accelerate the serving of Large Language Models (LLMs), reducing serving costs by 50-90%. It stands out as the fastest LLM inference engine on the market, outperforming vLLM and TensorRT-LLM in performance testing.

How does Friendli Inference work?

Friendli Inference achieves its remarkable performance through several key technologies:

- Iteration Batching: This batching technology schedules concurrent generation requests one generation iteration at a time, so finished requests leave the batch and new ones join without waiting for the whole batch to complete. It achieves up to tens of times higher LLM inference throughput than conventional batching while meeting the same latency requirements, and it is protected by patents in the US, Korea, and China.

- DNN Library: Friendli DNN Library is a set of GPU kernels optimized specifically for generative AI. It speeds up LLM inference across various tensor shapes and data types and supports quantization, Mixture of Experts (MoE), and LoRA adapters.

- Friendli TCache: This intelligent caching system identifies and stores frequently used computational results, reducing the workload on GPUs by leveraging the cached results.

- Speculative Decoding: Friendli Inference natively supports speculative decoding, an optimization technique that speeds up LLM and LMM (large multimodal model) inference by guessing several future tokens and verifying those guesses in parallel with the main model. Because the main model checks every guess before it is kept, outputs are identical to those of normal decoding while inference takes a fraction of the time.
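
To make the batching idea concrete, here is a toy simulation that counts decode steps under two schedulers. It assumes every request needs a known number of decode steps and that each step costs the same regardless of batch size; the `Request` type and both function names are hypothetical. It illustrates iteration-level scheduling in general, not Friendli's patented implementation.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    rid: str
    decode_steps: int  # hypothetical: tokens this request must generate (>= 1)


def request_level_batching(requests: List[Request], batch_size: int) -> int:
    """Conventional batching: a batch runs until its longest request is done,
    and queued requests wait for the whole batch to drain."""
    queue = deque(requests)
    steps = 0
    while queue:
        batch = [queue.popleft() for _ in range(min(batch_size, len(queue)))]
        steps += max(r.decode_steps for r in batch)
    return steps


def iteration_level_batching(requests: List[Request], batch_size: int) -> int:
    """Iteration-level scheduling: the batch is rebuilt before every decode
    iteration, so finished requests leave and queued ones join immediately."""
    queue = deque(requests)
    running: Dict[str, int] = {}  # rid -> remaining decode steps
    steps = 0
    while queue or running:
        # Fill any free slots before this iteration.
        while queue and len(running) < batch_size:
            r = queue.popleft()
            running[r.rid] = r.decode_steps
        # One decode iteration: every running request emits one token.
        steps += 1
        for rid in list(running):
            running[rid] -= 1
            if running[rid] == 0:
                del running[rid]  # free the slot right away
    return steps


# One long request followed by seven one-token requests, two batch slots.
workload = [Request("long", 8)] + [Request(f"short{i}", 1) for i in range(7)]
print("request-level:", request_level_batching(workload, 2))
print("iteration-level:", iteration_level_batching(workload, 2))
```

With this workload the request-level scheduler needs 11 steps and the iteration-level scheduler needs 8, because the short requests no longer wait behind the long one.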
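
The identical-output guarantee follows from how verification works. The sketch below shows greedy speculative decoding with two toy deterministic "models", plain Python functions that stand in (hypothetically) for a small draft model and the full target model. The draft guesses `k` tokens, the target keeps the longest agreeing run plus one token of its own, and the result always matches plain greedy decoding with the target. Sampling-based variants use a probabilistic acceptance rule instead; this is a generic illustration, not Friendli's implementation.

```python
from typing import Callable, List, Tuple

# A toy "model": given the token context, return the greedy next token.
Model = Callable[[List[int]], int]


def greedy_decode(target: Model, prompt: List[int], n: int) -> List[int]:
    """Baseline: one target-model step per generated token."""
    out = list(prompt)
    for _ in range(n):
        out.append(target(out))
    return out[len(prompt):]


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       n: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy draft-and-verify; returns (tokens, verification rounds)."""
    out = list(prompt)
    rounds = 0
    while len(out) - len(prompt) < n:
        # 1. The cheap draft model guesses k future tokens one at a time.
        guesses: List[int] = []
        for _ in range(k):
            guesses.append(draft(out + guesses))
        # 2. The target checks the guesses. A real engine scores all k
        #    positions in one batched forward pass; the toy loops instead.
        rounds += 1
        for guess in guesses:
            token = target(out)
            out.append(token)  # always keep the target's own token
            if token != guess:
                break  # first disagreement: drop the remaining guesses
        else:
            out.append(target(out))  # every guess accepted: one bonus token
    return out[len(prompt):len(prompt) + n], rounds


# Hypothetical toy models: the draft agrees with the target except at
# every context length divisible by 4.
def target(ctx: List[int]) -> int:
    return (ctx[-1] * 3 + len(ctx)) % 10


def draft(ctx: List[int]) -> int:
    t = target(ctx)
    return (t + 1) % 10 if len(ctx) % 4 == 0 else t


prompt = [1]
tokens, rounds = speculative_decode(target, draft, prompt, n=12, k=4)
assert tokens == greedy_decode(target, prompt, n=12)  # identical output
print(tokens, "verification rounds:", rounds)  # 3 rounds instead of 12 steps
```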

Key Features and Benefits

- Significant Cost Savings: Reduce LLM serving costs by 50-90%.

- Multi-LoRA Serving: Serve multiple LoRA adapters simultaneously on fewer GPUs, even on a single GPU.

- Wide Model Support: Supports a wide range of generative AI models, including quantized models and MoE.

- Groundbreaking Performance:

  - Up to 6x fewer GPUs required.

  - Up to 10.7x higher throughput.

  - Up to 6.2x lower latency.
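
Multi-LoRA serving is possible because a LoRA adapter is a small low-rank update on top of frozen base weights: a layer computes y = W x + scale * B (A x), and only A and B differ between adapters. The sketch below, in plain Python, keeps one shared base matrix and several adapters, so requests using different adapters are served from the same weights. The class name, adapter names, and tiny matrices are hypothetical; the text does not describe how Friendli schedules adapters on the GPU.

```python
from typing import Dict, List, Optional, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class MultiLoRALinear:
    """One linear layer: a single shared base weight W plus per-adapter
    low-rank factors A (r x d_in) and B (d_out x r)."""

    def __init__(self, base: Matrix):
        self.base = base
        self.adapters: Dict[str, Tuple[Matrix, Matrix, float]] = {}

    def add_adapter(self, name: str, a: Matrix, b: Matrix, scale: float = 1.0) -> None:
        self.adapters[name] = (a, b, scale)

    def forward(self, x: Vector, adapter: Optional[str] = None) -> Vector:
        y = matvec(self.base, x)  # shared computation, same for every request
        if adapter is not None:
            a, b, scale = self.adapters[adapter]
            delta = matvec(b, matvec(a, x))  # cheap rank-r correction
            y = [yi + scale * di for yi, di in zip(y, delta)]
        return y


layer = MultiLoRALinear(base=[[1.0, 0.0], [0.0, 1.0]])
layer.add_adapter("adapter_a", a=[[1.0, 1.0]], b=[[1.0], [0.0]])  # rank 1
layer.add_adapter("adapter_b", a=[[0.0, 1.0]], b=[[0.0], [2.0]])  # rank 1

# A mixed batch: each request names its own adapter (or none).
batch = [([2.0, 3.0], "adapter_a"), ([2.0, 3.0], "adapter_b"), ([2.0, 3.0], None)]
for x, name in batch:
    print(name, layer.forward(x, name))
```

For a d x d layer, each rank-r adapter stores 2 * d * r numbers instead of d * d, which is why many adapters can sit alongside a single copy of the base model.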

Highlights

- Running Quantized Mixtral 8x7B on a Single GPU: Friendli Inference can run a quantized Mixtral-8x7B-Instruct-v0.1 model on a single NVIDIA A100 80GB GPU, achieving at least 4.1x faster response time and 3.8x to 23.8x higher token throughput than a baseline vLLM system.

- Quantized Llama 2 70B on a Single GPU: Seamlessly run AWQ-quantized LLMs, such as a 4-bit Llama 2 70B, on a single A100 80GB GPU, enabling efficient deployment without sacrificing accuracy.

- Even Faster TTFT with Friendli TCache: Friendli TCache optimizes Time to First Token (TTFT) by reusing recurring computations, delivering 11.3x to 23x faster TTFT compared to vLLM.
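
Some back-of-the-envelope arithmetic shows why 4-bit quantization is what makes these single-GPU results possible. The sketch counts weight memory only (parameters times bits, divided by 8), using approximate total parameter counts of 70 billion for Llama 2 70B and 46.7 billion for Mixtral 8x7B. It ignores the KV cache, activations, and the small overhead of quantization scales, all of which also need GPU memory.

```python
A100_MEMORY_GB = 80  # NVIDIA A100 80GB


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in GB (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Approximate total parameter counts.
MODELS = {"Llama 2 70B": 70e9, "Mixtral 8x7B": 46.7e9}

for name, params in MODELS.items():
    for bits in (16, 4):  # FP16 baseline vs. 4-bit (e.g. AWQ) weights
        gb = weight_memory_gb(params, bits)
        fits = gb < A100_MEMORY_GB
        print(f"{name:13s} {bits:2d}-bit: {gb:6.1f} GB of weights, fits on one A100 80GB: {fits}")
```

At FP16, Llama 2 70B needs about 140 GB and Mixtral 8x7B about 93 GB of weights, so neither fits on one 80 GB GPU; at 4 bits they drop to about 35 GB and 23 GB, leaving the rest of the memory for the KV cache.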
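
One common way to reuse recurring computations for TTFT is prefix caching: when requests share a prefix, such as the same system prompt, the state computed during prefill for that prefix is stored and reused, so only the new tokens must be processed before the first output token appears. The text does not say how Friendli TCache works internally, so this is only a generic toy; `PrefixCache` is a hypothetical name, and the cached "state" is a string standing in for real key/value tensors.

```python
from typing import Dict, List, Tuple


class PrefixCache:
    """Toy prefix cache mapping a token prefix to its (fake) prefill state."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[int, ...], str] = {}

    def prefill(self, tokens: List[int]) -> int:
        """Process a prompt and return how many tokens had to be computed."""
        # Find the longest prefix of this prompt that is already cached.
        hit = 0
        for i in range(len(tokens), 0, -1):
            if tuple(tokens[:i]) in self._store:
                hit = i
                break
        # Compute and cache the state only for the uncached suffix.
        for i in range(hit + 1, len(tokens) + 1):
            self._store[tuple(tokens[:i])] = f"state after {i} tokens"  # stand-in for KV tensors
        return len(tokens) - hit


cache = PrefixCache()
system_prompt = list(range(100))             # 100 shared tokens
first = system_prompt + [1001, 1002]         # 2 user tokens
second = system_prompt + [2001, 2002, 2003]  # 3 different user tokens

print(cache.prefill(first))   # 102: nothing cached yet, every token computed
print(cache.prefill(second))  # 3: the shared 100-token prefix is reused
```

Storing every prefix as its own tuple is quadratic in prompt length; real systems use more compact structures such as hashed token blocks or radix trees. The toy only shows why a cache hit shortens prefill and therefore TTFT.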

How to Use Friendli Inference

Friendli Inference offers three ways to run generative AI models:

- Friendli Dedicated Endpoints: Build and run generative AI models on autopilot.

- Friendli Container: Serve LLM and LMM inference with Friendli Inference in your private environment.

- Friendli Serverless Endpoints: Call the fast and affordable API for open-source generative AI models.
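
For the serverless option, a request is an ordinary HTTPS call. The sketch below uses only the Python standard library and assumes an OpenAI-style chat-completions endpoint. The base URL `https://api.friendli.ai/serverless/v1`, the `FRIENDLI_TOKEN` environment variable, and the response shape are assumptions to check against Friendli's current documentation, and `your-model-id` is a placeholder rather than a real model name.

```python
import json
import os
import urllib.request

# ASSUMPTIONS, not confirmed by the text above: the base URL, the
# OpenAI-style request/response schema, and the FRIENDLI_TOKEN variable name.
BASE_URL = "https://api.friendli.ai/serverless/v1"
MODEL_ID = "your-model-id"  # hypothetical placeholder; use a real model ID


def build_chat_request(prompt: str, token: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    token = os.environ.get("FRIENDLI_TOKEN")
    if token:  # only touch the network when a token is configured
        with urllib.request.urlopen(build_chat_request("Hello!", token)) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
```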

Why choose Friendli Inference?

Friendli Inference is the ideal solution for organizations looking to optimize the performance and cost-effectiveness of their LLM inference workloads. Its innovative technologies and wide range of features make it a powerful tool for deploying and scaling generative AI models.

Who is Friendli Inference for?

Friendli Inference is suitable for:

- Businesses deploying large language models.

- Researchers working with generative AI.

- Developers building AI-powered applications.

Best way to optimize LLM inference?

The best way to optimize LLM inference is to use Friendli Inference, which offers significant cost savings, high throughput, and low latency compared to other solutions.

Best Alternative Tools to "Friendli Inference"

Anyscale, powered by Ray, is a platform for running and scaling all ML and AI workloads on any cloud or on-premises. Build, debug, and deploy AI applications with ease and efficiency.

vLLM is a high-throughput and memory-efficient inference and serving engine for LLMs, featuring PagedAttention and continuous batching for optimized performance.

Predibase is a developer platform for fine-tuning and serving open-source LLMs. Achieve unmatched accuracy and speed with end-to-end training and serving infrastructure, featuring reinforcement fine-tuning.

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. Features H100 GPUs, per-second billing, and Python execution.

CHAI AI is a leading conversational AI platform focused on research and development of generative AI models. It offers tools and infrastructure for building and deploying social AI applications, emphasizing user feedback and incentives.

Stable Code Alpha is Stability AI's first LLM-based generative AI product for coding, designed to assist programmers and to serve as a learning tool for new developers.

SuperTechFans provides tools such as MultiAI-Chat, a Chrome extension for comparing LLM chat results, and PacGen, for proxy management.

Allganize provides secure enterprise AI solutions with advanced LLM technology, featuring agentic RAG, no-code AI builders, and on-premise deployment for data sovereignty.

What-A-Prompt is a user-friendly prompt optimizer for enhancing inputs to AI models like ChatGPT and Gemini. Select enhancers, input your prompt, and generate creative, detailed results to boost LLM outputs. Access a vast library of optimized prompts.

SiliconFlow is a lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

Bottr offers top-tier AI consulting and customizable chatbots for enterprises. Launch intelligent assistants, automate workflows, and integrate with major LLMs like GPT and Claude for secure, scalable AI solutions.

Nebius AI Studio Inference Service offers hosted open-source models for faster, cheaper, and more accurate results than proprietary APIs. Scale seamlessly with no MLOps needed, ideal for RAG and production workloads.

Spice.ai is an open source data and AI inference engine for building AI apps with SQL query federation, acceleration, search, and retrieval grounded in enterprise data.

Proto AICX is an all-in-one platform for local and secure AI, providing inclusive CX automation and multilingual contact center solutions for enterprise and government.