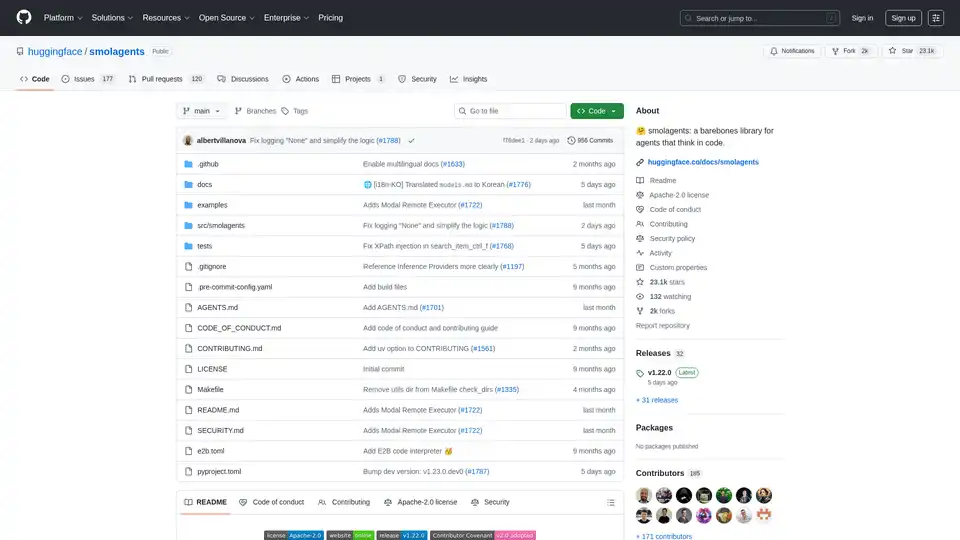

smolagents

Overview of smolagents

What is Smolagents?

Smolagents is a lightweight, open-source Python library designed to simplify the creation of AI agents that reason and act primarily by writing code. Developed by the Hugging Face team, it takes a minimalist approach, fitting its core agent logic into roughly 1,000 lines of code. Unlike heavier frameworks, smolagents strips away unnecessary abstractions while still supporting advanced features such as secure code execution and integration with a wide range of large language models (LLMs).

At its heart, smolagents enables developers to build agents that "think in code": the LLM writes Python snippets to perform tasks rather than emitting rigid JSON tool calls. Writing actions as code has been reported to take about 30% fewer steps (and therefore fewer LLM calls) in complex workflows, which makes it well suited to tasks requiring multi-step reasoning, such as web searches, data analysis, or itinerary planning. Whether you're a researcher experimenting with open models or an engineer deploying production agents, smolagents offers a flexible foundation for agentic AI systems.

How Does Smolagents Work?

Smolagents operates on a ReAct-inspired loop (Reasoning and Acting), but with a twist: the LLM generates Python code snippets as actions instead of structured outputs. Here's a breakdown of its core mechanism:

Agent Initialization: You start by defining an agent, such as the flagship CodeAgent, and equip it with tools (e.g., web search, file I/O) and a model backend.

Reasoning Phase: The LLM (any supported model) receives the task prompt, past observations, and available tools. It reasons step by step and outputs a Python code block describing the intended action.

Execution Phase: The code snippet is executed in a controlled environment: by default a restricted local Python interpreter, or, for stronger isolation, a sandbox such as E2B, Modal, Docker, or a WebAssembly sandbox built on Pyodide and Deno. This keeps LLM-generated code from harming the host system.

Observation and Iteration: Results from execution feed back into the loop, allowing the agent to refine its approach until the task is complete.
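The reason-execute-observe loop above can be sketched in plain Python. This is a minimal, hypothetical illustration, not smolagents' implementation: `fake_llm` is a stub standing in for a real model, the `add` tool is invented for the example, and `exec` runs the code with no sandboxing, so this pattern must never be used on real LLM output.

```python
from typing import Callable

def fake_llm(task: str, memory: list[str]) -> str:
    """Hypothetical stub model: returns a Python code action based on past observations."""
    if not memory:
        # First step: call a tool and print the result so it becomes an observation.
        return "result = add(2, 3)\nprint(result)"
    # Second step: finish by passing a value derived from the last observation.
    return "final_answer(int(observation.strip()) * 10)"

def run_agent(task: str, tools: dict[str, Callable], max_steps: int = 5):
    memory: list[str] = []  # observations fed back to the model
    done: dict = {}

    def final_answer(value):
        done["answer"] = value

    for _ in range(max_steps):
        code = fake_llm(task, memory)  # reasoning phase: the model emits code
        printed: list[str] = []
        namespace = {
            **tools,
            "final_answer": final_answer,
            # Capture print() output as the step's observation.
            "print": lambda *args: printed.append(" ".join(map(str, args))),
            "observation": memory[-1] if memory else "",
        }
        exec(code, namespace)  # execution phase (UNSAFE: no sandbox)
        if "answer" in done:
            return done["answer"]
        memory.append("\n".join(printed))  # observation phase
    raise RuntimeError("max steps reached without a final answer")

answer = run_agent("Add 2 and 3, then multiply by 10", tools={"add": lambda a, b: a + b})
print(answer)  # 50
```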

For example, to answer a query like "How many seconds would it take for a leopard at full speed to run through Pont des Arts?", the agent might generate code that searches for the leopard's top speed and the bridge's length and then performs the calculation, all in one snippet. Traditional agents might instead require a separate tool call for each step, leading to more LLM invocations and higher costs.
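To make the contrast concrete, here is the kind of single code action a CodeAgent might emit for the leopard question. The two lookup functions are hypothetical stubs standing in for web-search tools, and the numbers they return (58 km/h, 155 m) are placeholder values for illustration only.

```python
def lookup_leopard_top_speed_kmh() -> float:
    """Hypothetical stub for a web-search tool; placeholder value."""
    return 58.0

def lookup_bridge_length_m(name: str) -> float:
    """Hypothetical stub for a web-search tool; placeholder value."""
    return {"Pont des Arts": 155.0}[name]

# One code action: two "tool calls" plus the arithmetic, in a single step.
speed_m_per_s = lookup_leopard_top_speed_kmh() * 1000 / 3600  # km/h -> m/s
length_m = lookup_bridge_length_m("Pont des Arts")
seconds = length_m / speed_m_per_s
print(f"{seconds:.1f} s")  # 9.6 s
```

A JSON tool-calling agent would typically need one model round-trip per lookup before it could do the division.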

Smolagents also includes ToolCallingAgent for classic JSON-based actions, giving users flexibility. Multi-agent hierarchies are supported, where one agent delegates to others, enhancing scalability for complex applications.
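For comparison, a JSON-style action names a single tool and its arguments, and the runtime dispatches it. The sketch below is a hypothetical, standard-library illustration of that pattern; the `{"name": ..., "arguments": ...}` shape mirrors common function-calling formats but is an assumption, not ToolCallingAgent's internal schema.

```python
import json

TOOLS = {
    # Hypothetical tool registry: tool name -> Python callable
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}

def dispatch(action_json: str):
    """Parse one JSON tool call and run the matching tool."""
    action = json.loads(action_json)
    name, args = action["name"], action.get("arguments", {})
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**args)

# Each JSON action triggers exactly one tool call, so chaining
# add -> multiply takes two separate model turns.
step1 = dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}')
step2 = dispatch(json.dumps({"name": "multiply", "arguments": {"a": step1, "b": 10}}))
print(step2)  # 50
```

The structured format is easier to validate than free-form code, which is one reason a JSON-based agent can still be the better fit for simple, single-tool tasks.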

Core Features of Smolagents

Smolagents packs a punch despite its small footprint. Key features include:

Simplicity and Minimalism: Core logic in agents.py is under 1,000 lines, making it easy to understand, modify, and extend. There is no steep learning curve, just pure Python.

Model-Agnostic Design: Works with any LLM via integrations like Hugging Face's InferenceClient, LiteLLM (100+ providers), OpenAI, Anthropic, local Transformers, Ollama, Azure, or Amazon Bedrock. Switch models effortlessly without rewriting code.

Modality Support: Handles text, vision, video, and audio inputs. For instance, vision-enabled agents can process images alongside text prompts, as shown in dedicated tutorials.

Tool Flexibility: Integrate tools from many sources, including LangChain, MCP servers, and Hugging Face Spaces. The default toolkit includes essentials like web search and code execution.

Hub Integration: Share and load agents directly from the Hugging Face Hub. Push your custom agent as a Space repository for collaboration with agent.push_to_hub("username/my_agent").

Secure Execution: Prioritizes safety with sandbox options. E2B and Modal provide cloud-based isolation, while Docker suits local setups. A built-in restricted Python interpreter, which limits imports and operations, covers lower-risk environments.

CLI Tools: Run agents from the command line with smolagent for general tasks (e.g., trip planning with web search and data imports) or webagent for browser automation using Helium.
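The Model-Agnostic Design feature above usually comes down to agent code depending only on a small model interface. This sketch shows that idea with a `typing.Protocol`; `ChatModel`, `EchoModel`, `UpperModel`, and `answer` are hypothetical names, and real smolagents model classes (such as `InferenceClientModel` or `LiteLLMModel`) have their own, richer interface.

```python
from typing import Protocol

class ChatModel(Protocol):
    """Hypothetical minimal interface an agent could depend on."""
    def generate(self, messages: list[dict[str, str]]) -> str: ...

class EchoModel:
    """Hypothetical backend: echoes the last user message."""
    def generate(self, messages: list[dict[str, str]]) -> str:
        return messages[-1]["content"]

class UpperModel:
    """Hypothetical backend: upper-cases the last user message."""
    def generate(self, messages: list[dict[str, str]]) -> str:
        return messages[-1]["content"].upper()

def answer(model: ChatModel, prompt: str) -> str:
    # The agent only calls generate(), so backends are interchangeable.
    return model.generate([{"role": "user", "content": prompt}])

print(answer(EchoModel(), "hello"))   # hello
print(answer(UpperModel(), "hello"))  # HELLO
```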

These features make smolagents versatile for both prototyping and production, emphasizing performance without complexity.
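Whatever their origin, tools ultimately need a name, a description the LLM can read, and a callable. The hypothetical `tool` decorator below builds such a record from a function's name, docstring, and signature using `inspect`. smolagents ships its own `@tool` decorator with its own requirements, which this sketch does not reproduce.

```python
import inspect
from dataclasses import dataclass
from typing import Callable

@dataclass
class ToolSpec:
    name: str
    description: str
    signature: str
    fn: Callable

REGISTRY: dict[str, ToolSpec] = {}

def tool(fn: Callable) -> Callable:
    """Hypothetical decorator: register fn with a prompt-ready description."""
    REGISTRY[fn.__name__] = ToolSpec(
        name=fn.__name__,
        description=inspect.getdoc(fn) or "",
        signature=str(inspect.signature(fn)),
        fn=fn,
    )
    return fn

@tool
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32

# Text like this could be placed in the agent's system prompt.
for spec in REGISTRY.values():
    print(f"{spec.name}{spec.signature}: {spec.description}")
```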

How to Use Smolagents: Step-by-Step Guide

Getting started is straightforward. Install via pip: pip install "smolagents[toolkit]" to include default tools.

Basic Code Agent Example

from smolagents import CodeAgent, WebSearchTool, InferenceClientModel

model = InferenceClientModel(model_id="Qwen/Qwen2.5-Coder-32B-Instruct")  # Or any instruction-tuned LLM

agent = CodeAgent(tools=[WebSearchTool()], model=model, stream_outputs=True)

result = agent.run("Plan a trip to Tokyo, Kyoto, and Osaka between Mar 28 and Apr 7.")

print(result)

This setup leverages code generation for multi-tool actions, like batch searches or calculations.

CLI Usage

For quick runs without scripting:

smolagent "Query here" --model-type InferenceClientModel --model-id Qwen/Qwen2.5-Coder-32B-Instruct --tools web_search --imports pandas numpy

For web-specific tasks:

webagent "Navigate to site and extract details" --model-type LiteLLMModel --model-id gpt-4o

Advanced: Sandboxed Execution

To enable the E2B sandbox:

agent = CodeAgent(..., executor_type="e2b")  # Or "modal", "docker"

This ensures code runs in isolation, crucial for untrusted LLM outputs.
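Remote executors such as E2B or Docker rest on one principle: run generated code outside the agent's own process. A crude local approximation is to run the snippet in a separate Python process with a timeout, as in this hypothetical sketch. It provides process separation and a time limit only; unlike a real sandbox, it does not restrict file or network access.

```python
import subprocess
import sys

def run_isolated(code: str, timeout_s: float = 5.0) -> str:
    """Run code in a fresh interpreter and return its stdout (toy isolation only)."""
    completed = subprocess.run(
        # -I: isolated mode (ignores PYTHON* env vars and user site-packages)
        [sys.executable, "-I", "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout_s,  # raises subprocess.TimeoutExpired if exceeded
    )
    if completed.returncode != 0:
        raise RuntimeError(completed.stderr.strip())
    return completed.stdout.strip()

print(run_isolated("print(sum(range(10)))"))  # 45
```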

Sharing and Loading Agents

Export to Hub for reuse:

agent.push_to_hub("username/my_agent")

loaded_agent = CodeAgent.from_hub("username/my_agent", trust_remote_code=True)  # Only load agents whose code you trust

Ideal for team projects or public benchmarks.

Benchmarking shows open models like Qwen2.5-Coder rival closed ones (e.g., GPT-4) in agent tasks, with code agents outperforming vanilla LLMs by handling diverse challenges like math, search, and planning.

Main Use Cases and Practical Value

Smolagents shines in scenarios demanding efficient, code-driven automation:

Research and Development: Prototype agentic workflows with open LLMs. Researchers can benchmark models on custom tasks, leveraging the library's transparency.

Data Analysis and Planning: Agents handle web scraping, calculations, and itinerary building—e.g., travel planning with real-time searches and pandas for data crunching.

Web Automation: Use webagent for e-commerce, content extraction, or testing, simulating user interactions in a real browser.

Multi-Modal Tasks: Combine vision models for image analysis with code execution, like processing video frames or generating reports from visuals.

Production Deployment: Integrate into apps via Hub Spaces or CLI for scalable, low-latency agents.

The practical value lies in its efficiency: fewer LLM calls mean lower costs and faster responses. For instance, a single code snippet can batch several actions (e.g., multiple searches in one loop), contributing to the roughly 30% reduction in steps reported for code agents. It is Apache-2.0 licensed, which encourages community contributions: more than 185 contributors have added features such as multilingual docs and remote executors.
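The efficiency argument is easiest to see by counting model round-trips. In this hypothetical sketch, a JSON-style agent issues one tool call per model turn, while a single code action loops over all queries at once. The `search` stub and the queries are invented for illustration, and the ~30% figure is an empirical benchmark result that this toy does not attempt to reproduce.

```python
def search(query: str) -> str:
    """Hypothetical stub for a web-search tool."""
    return f"results for {query!r}"

queries = ["Tokyo hotels", "Kyoto temples", "Osaka food"]

# JSON-style: one tool call per model turn -> one LLM call per query.
json_llm_calls = 0
json_results = []
for q in queries:
    json_llm_calls += 1  # model emits {"name": "search", "arguments": {"query": q}}
    json_results.append(search(q))

# Code-style: one model turn emits a snippet that loops over every query.
code_action = "results = [search(q) for q in queries]"
code_llm_calls = 1
namespace = {"search": search, "queries": queries}
exec(code_action, namespace)  # toy only: real agents execute in a sandbox

print(json_llm_calls, code_llm_calls)  # 3 1
```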

Security is baked in, addressing code execution risks with sandboxes and best practices, making it suitable for enterprise use.
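As a small illustration of one such practice, the toy check below inspects generated code with Python's `ast` module and rejects imports outside an allowlist before running it. This is a hypothetical sketch and not a security boundary; smolagents' built-in interpreter works differently, and truly untrusted code still belongs in a sandbox such as E2B, Modal, or Docker.

```python
import ast

ALLOWED_MODULES = {"math", "statistics"}  # hypothetical allowlist

def check_imports(code: str) -> None:
    """Raise PermissionError if the code imports a module outside ALLOWED_MODULES."""
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            names = [node.module or ""]  # relative imports have module=None
        else:
            continue
        for name in names:
            if name.split(".")[0] not in ALLOWED_MODULES:
                raise PermissionError(f"import of {name!r} is not allowed")

def run_checked(code: str) -> dict:
    check_imports(code)
    namespace: dict = {}
    exec(code, namespace)  # still unsandboxed: a toy, not a security boundary
    return namespace

ns = run_checked("import math\nresult = math.sqrt(16)")
print(ns["result"])  # 4.0

try:
    run_checked("import os\nos.listdir('.')")
except PermissionError as err:
    print(err)  # import of 'os' is not allowed
```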

Who is Smolagents For?

Developers and AI Engineers: Those tired of over-engineered frameworks will appreciate the hackable core. Customize agents for specific tools or hierarchies.

Researchers: Test open vs. closed models in agent benchmarks; cite it in papers with the provided BibTeX.

Startups and Teams: Quick setup for prototypes, Hub sharing for collaboration.

Hobbyists: CLI tools lower the barrier for experimenting with AI agents.

If you're building agentic systems and value simplicity over bloat, smolagents is your go-to. Why choose it? It democratizes advanced agents, proving open models can match proprietary power while keeping things lightweight and secure.

For more, check the full docs at huggingface.co/docs/smolagents or dive into the GitHub repo. Contribute via the guide to shape its future!

Best Alternative Tools to "smolagents"

Shell2 is an AI assistant interactive platform by Raiden AI, offering data analysis, processing, and generation capabilities. It features session persistence, user uploads, multiplayer collaboration, and an unrestricted environment.

GitHub Next explores the future of software development by prototyping tools and technologies that will change our craft. They identify new approaches to building healthy, productive software engineering teams.

Fetch.ai is a platform that enables the Agentic Economy, allowing users to build, discover, and transact with AI agents. It features tools like Agentverse for creating and exploring agents, and ASI Wallet for accessing the ASI ecosystem.

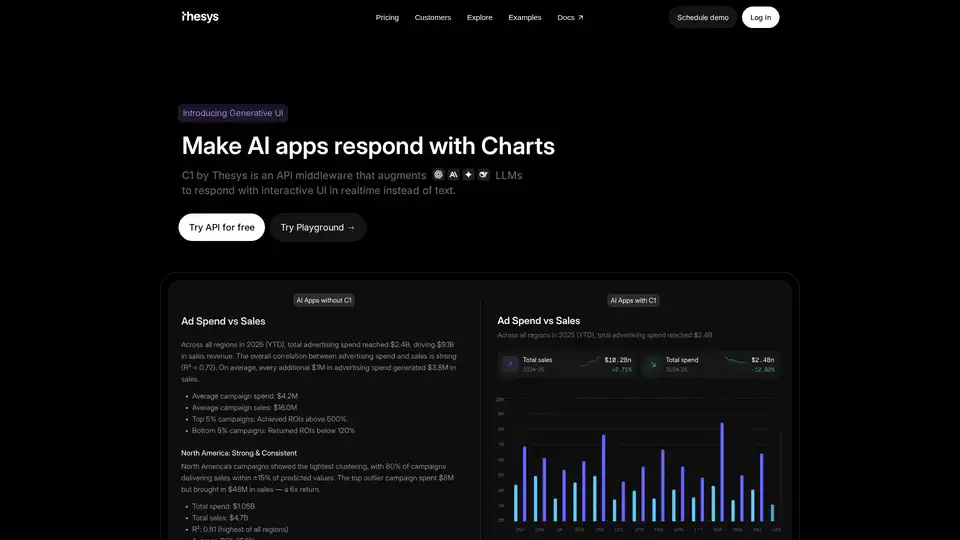

C1 by Thesys is an API middleware that augments LLMs to respond with interactive UI in real time instead of text, turning responses from your model into live interfaces using a React SDK.

Worthify.ai provides AI-powered binary analysis for vulnerability detection and malware analysis, integrating with existing security workflows. Enhance your cybersecurity with AI-driven reverse engineering.

Olostep is a web data API for AI and research agents. It allows you to extract structured web data from any website in real-time and automate your web research workflows. Use cases include data for AI, spreadsheet enrichment, lead generation, and more.

Botpress is a complete AI agent platform powered by the latest LLMs. It enables you to build, deploy, and manage AI agents for customer support, internal automation, and more, with seamless integration capabilities.

Flowise is an open-source generative AI development platform to visually build AI agents and LLM orchestration. Build custom LLM apps in minutes with a drag & drop UI.

BrainSoup lets you create custom AI agents that handle tasks and automate processes through natural language, and enhance AI with your own data while prioritizing privacy and security.

Chatsistant is a versatile AI platform for creating multi-agent RAG chatbots powered by top LLMs like GPT-5 and Claude. Ideal for customer support, sales automation, and e-commerce, with seamless integrations via Zapier and Make for efficient deployment.

Plandex is an open-source, terminal-based AI coding agent designed for large projects and real-world tasks. It features diff review, full auto mode, and up to 2M token context management for efficient software development with LLMs.

Marvin is a powerful Python framework for building AI applications with large language models (LLMs). It simplifies state management, agent coordination, and structured outputs for developers creating intelligent apps.

Qubinets is an open-source platform simplifying the deployment and management of AI and big data infrastructure. Build, connect, and deploy with ease. Focus on code, not configs.

Moveo.AI provides an AI agent platform automating, personalizing, and scaling customer conversations for financial services, improving debt collection and customer experience.