LLM Token Counter

Overview of LLM Token Counter

LLM Token Counter: A Comprehensive Tool for Managing LLM Token Limits

What is LLM Token Counter?

LLM Token Counter is a tool that helps users manage token limits for a range of large language models (LLMs), including GPT-3.5, GPT-4, Claude-3, and Llama-3. It supports many popular models and aims to make working with generative AI smoother by showing how many tokens a prompt uses.

How does LLM Token Counter work?

LLM Token Counter uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library. Tokenizers are loaded directly in your browser, so token counts are calculated client-side. The site credits an efficient Rust implementation for its fast token counting.

Why is LLM Token Counter important?

Every LLM has a fixed token limit (its context window), and exceeding it can cause the request to be rejected or the input to be truncated, which leads to unexpected outputs. LLM Token Counter helps you keep your prompts within the limit of the model you are using.

Will I leak my prompt when using LLM Token Counter?

No, your prompt remains secure. The token count calculation is performed client-side, ensuring that your sensitive information is never transmitted to the server or any external entity. Data privacy is a top priority.

Where can I use LLM Token Counter?

LLM Token Counter is accessible online through a website, making it convenient to use on any device with a browser.

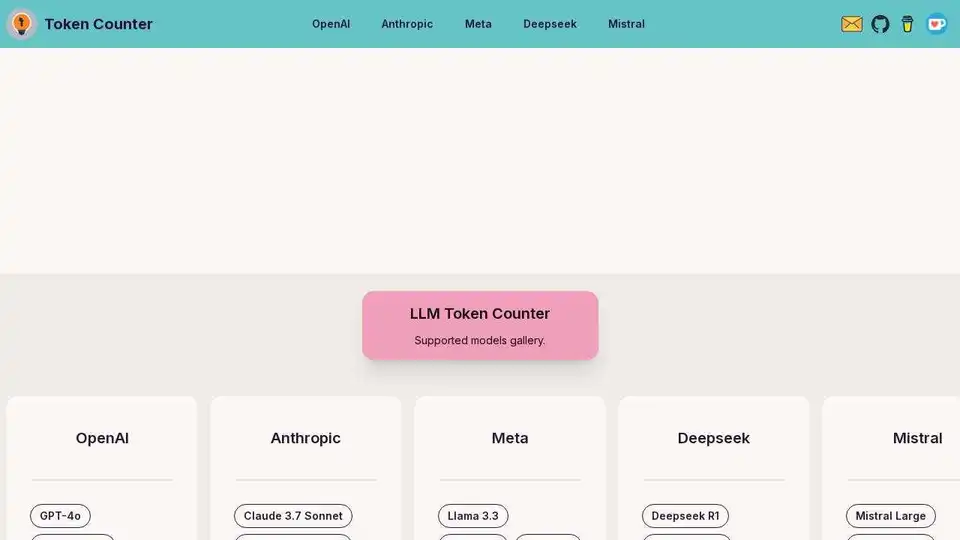

Supported Models:

- OpenAI: GPT-4, GPT-3.5 Turbo, Embedding models

- Anthropic: Claude Opus, Claude Sonnet, Claude Haiku

- Meta: Llama 3, Llama 2, Code Llama

- DeepSeek: DeepSeek R1, DeepSeek V3, DeepSeek V2

- Mistral: Mistral Large, Mistral Small, Codestral

What is the best way to manage LLM token limits?

Use LLM Token Counter to count tokens before submitting your prompts so they stay within the model's limit. Select the exact model you are targeting and keep up with new model versions, since different models can tokenize the same text differently.
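The advice to count tokens before submitting can be sketched as a pre-flight budget check. This is a minimal TypeScript sketch under stated assumptions: `checkTokenBudget`, `TokenCounter`, and `wordCount` are hypothetical names, not part of LLM Token Counter or Transformers.js; the context window and the number of tokens reserved for the reply are values you supply for your model (assumed here to share one budget, as with many chat models); and the word-splitting counter is only a crude stand-in for a real tokenizer, which will usually give different counts.

```typescript
// Hypothetical callback type: any function that returns a token count for a string.
// In practice this would wrap the tokenizer for your target model.
type TokenCounter = (text: string) => number;

interface BudgetCheck {
  promptTokens: number; // tokens used by the prompt, per the supplied counter
  available: number; // tokens left for the prompt after reserving room for the reply
  fits: boolean; // true if the prompt fits in the available budget
}

// Assumption: contextWindow is the total tokens the model accepts across prompt and reply.
function checkTokenBudget(
  prompt: string,
  contextWindow: number,
  reservedForOutput: number,
  countTokens: TokenCounter,
): BudgetCheck {
  if (!Number.isInteger(contextWindow) || contextWindow <= 0) {
    throw new RangeError("contextWindow must be a positive integer");
  }
  if (
    !Number.isInteger(reservedForOutput) ||
    reservedForOutput < 0 ||
    reservedForOutput >= contextWindow
  ) {
    throw new RangeError("reservedForOutput must be an integer in [0, contextWindow)");
  }
  const promptTokens = countTokens(prompt);
  const available = contextWindow - reservedForOutput;
  return { promptTokens, available, fits: promptTokens <= available };
}

// Crude stand-in for demonstration only: counts whitespace-separated words.
// Real tokenizers split text into subword pieces, so their counts differ.
const wordCount: TokenCounter = (text) =>
  text.split(/\s+/).filter((w) => w.length > 0).length;

const result = checkTokenBudget(
  "Summarize the attached report in three bullet points.",
  16, // hypothetical context window
  8, // hypothetical tokens reserved for the reply
  wordCount,
);
console.log(result); // { promptTokens: 8, available: 8, fits: true }
```

Passing the counter in as a callback keeps the check independent of any particular tokenizer, so the same function works whether counts come from a tokenizer loaded in the browser or from another source.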

If you need assistance or have suggestions for additional features, please reach out via email.

Best Alternative Tools to "LLM Token Counter"

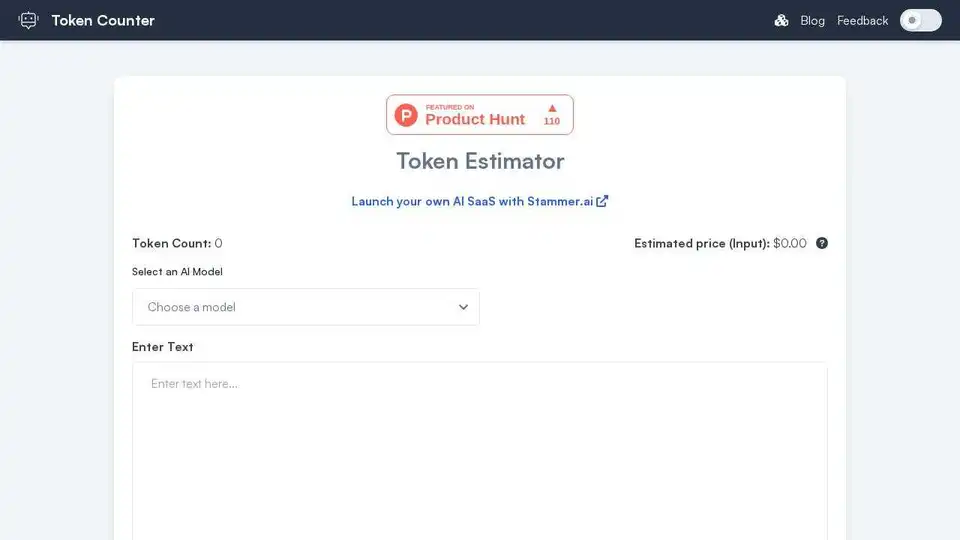

Token Counter: Count tokens, estimate costs for any AI model. Optimize prompts, manage budget, maximize efficiency in AI interactions.

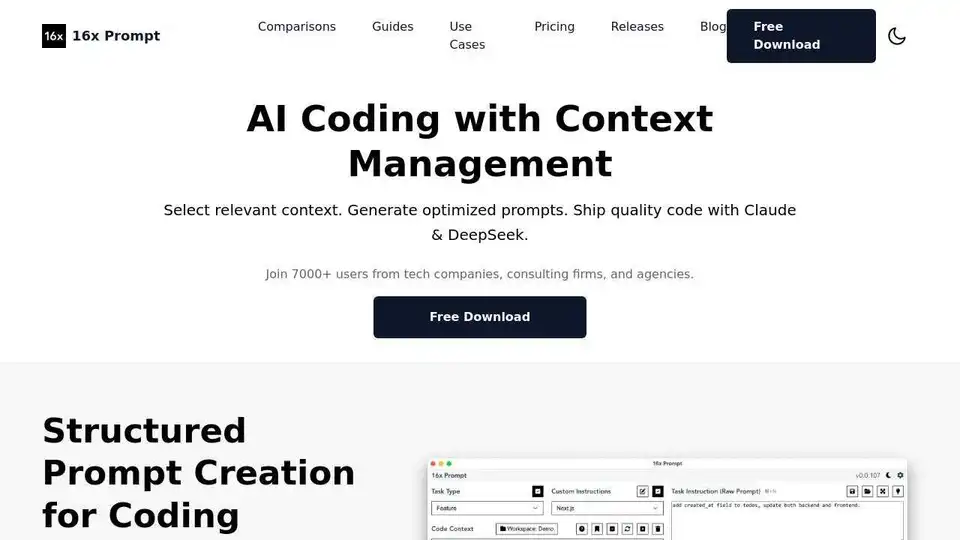

16x Prompt is an AI coding tool for managing code context, customizing prompts, and shipping features faster with LLM API integrations. Ideal for developers seeking efficient AI-assisted coding.

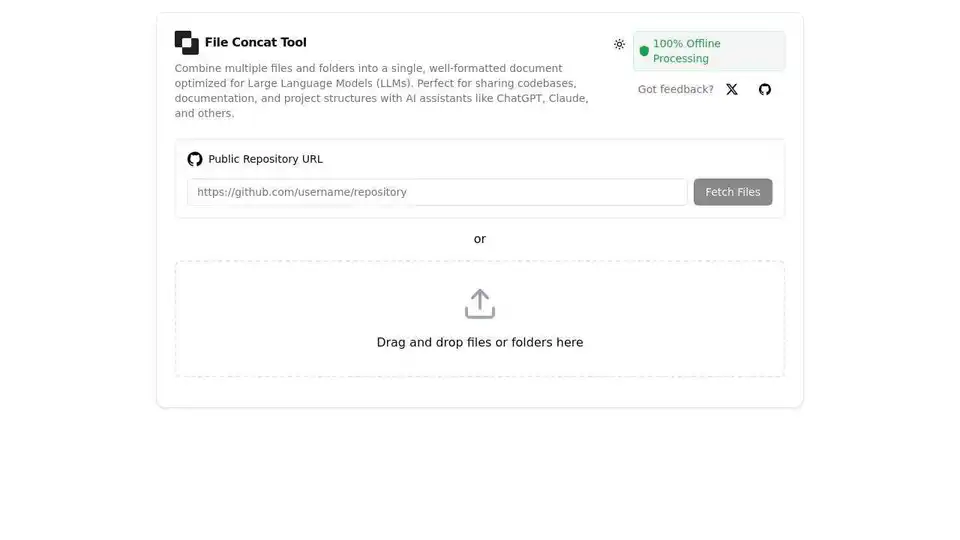

Free online file concatenation tool for AI assistants. Combine files into an optimized format for ChatGPT, Claude, Gemini, and other LLMs.

Botpress is a complete AI agent platform powered by the latest LLMs. It enables you to build, deploy, and manage AI agents for customer support, internal automation, and more, with seamless integration capabilities.

GPT Researcher is an open-source AI research assistant that automates in-depth research. It gathers information from trusted sources, aggregates results, and generates comprehensive reports quickly. Ideal for individuals and teams seeking unbiased insights.

Awan LLM offers an unrestricted and cost-effective LLM inference API platform with unlimited tokens, ideal for developers and power users. Process data, complete code, and build AI agents without token limits.

AiPrice offers an API for calculating OpenAI token pricing. Estimate prompt token count accurately for various LLM models. Free plan available, no credit card needed.

DeepSeek V3 is a powerful LLM with 671B parameters, offering API access and a research paper. It claims state-of-the-art performance, and an online demo is available.

Fiorino.AI is an open-source solution for SaaS businesses to track and optimize AI costs. Monitor LLM usage, set spending limits, and automate usage-based billing.

ModelFusion: Complete LLM toolkit for 2025 with cost calculators, prompt library, and AI observability tools for GPT-4, Claude, and more.

Langtrace is an open-source observability and evaluations platform designed to improve the performance and security of AI agents. Track vital metrics, evaluate performance, and ensure enterprise-grade security for your LLM applications.

Secure your LLM API keys with Backmesh, an open-source backend. Prevent leaks, control access, and implement rate limits to reduce LLM API costs.

Mercury by Inception offers diffusion LLMs billed as the fastest for AI applications, powering cutting-edge coding, voice, search, and agents with blazing fast inference and frontier quality.