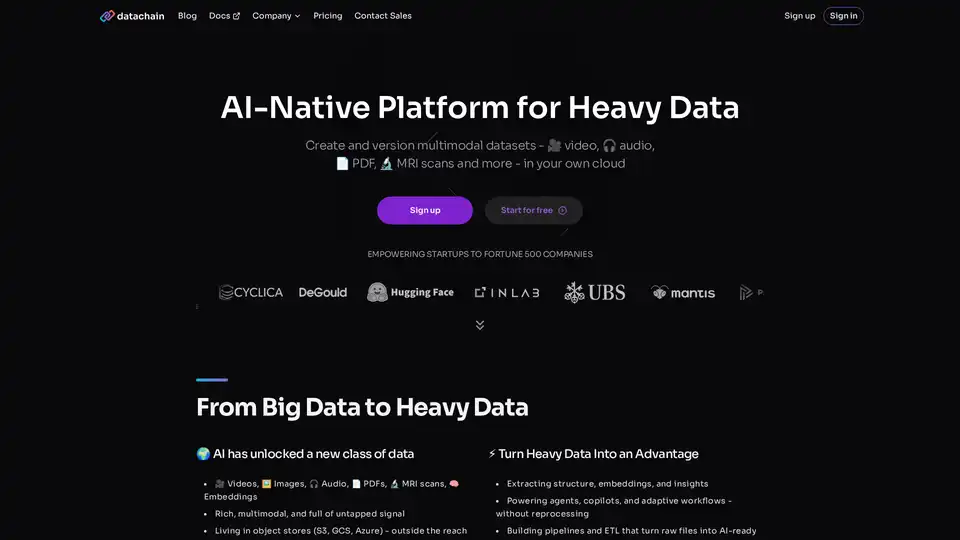

DataChain

Overview of DataChain

What is DataChain?

DataChain is an AI-native platform designed to handle the complexities of heavy data in the era of advanced machine learning and artificial intelligence. It provides a centralized registry for multimodal datasets, including videos, audio files, PDFs, images, MRI scans, and embeddings. Unlike traditional SQL-based tools that struggle with unstructured or large-scale data stored in object stores like S3, GCS, or Azure, DataChain bridges the gap between developer-friendly workflows and enterprise-scale processing. It lets teams, from startups to Fortune 500 companies, curate, enrich, and version their datasets efficiently, turning raw, multimodal inputs into actionable AI knowledge.

At its core, DataChain addresses the shift from big data to what it calls 'heavy data'—rich, unstructured formats brimming with untapped potential for AI applications. Whether you're building agents, copilots, or adaptive workflows, DataChain ensures your data pipeline doesn't require constant reprocessing, saving time and resources while unlocking deeper insights.

How Does DataChain Work?

DataChain operates on a developer-first philosophy, combining the simplicity of Python with the scalability of SQL-like operations. Here's a breakdown of its key mechanisms:

Centralized Dataset Registry: All datasets are tracked with full lineage, metadata, and versioning. You can access them through a user interface (UI), chat interfaces, integrated development environments (IDEs), or AI agents via the Model Context Protocol (MCP). This registry acts as a single source of truth, making it easier to manage dependencies and reproduce results.

Python Simplicity Meets SQL Scale: Developers write in one familiar language—Python—across both code and data operations. This eliminates the silos created by separate SQL tools, enhancing integration with IDEs and AI agents. For instance, you can query and manipulate heavy data without switching contexts, streamlining your workflow.

Local Development and Cloud Scaling: Start building and testing data pipelines in your local IDE for rapid iteration. Once ready, scale effortlessly to hundreds of GPUs in the cloud with zero code rework. This hybrid approach maximizes productivity without compromising on performance for large-scale tasks.

Zero Data Copy and Lock-In: Your original files—videos, images, audio—remain in their native storage like S3. DataChain simply references and tracks versions, avoiding unnecessary duplication or vendor lock-in. This not only reduces costs but also ensures data sovereignty and flexibility.
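
The registry and zero-copy ideas above can be illustrated with a minimal sketch. It is not DataChain's API: `FileRef`, `Registry`, `save`, and `load` are hypothetical names, the bucket paths are made up, and the registry lives in memory only. The point it shows is that a dataset version can be a list of references to objects in storage, so versioning never duplicates the underlying files.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class FileRef:
    """A pointer to an object in storage; the bytes themselves are never copied."""
    uri: str   # illustrative path such as "s3://bucket/a.mp4"
    etag: str  # content fingerprint as reported by the object store


@dataclass
class Registry:
    """Hypothetical in-memory registry: dataset name -> ordered list of versions."""
    _versions: dict = field(default_factory=dict)

    def save(self, name: str, refs: list) -> int:
        """Record a new immutable version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append(tuple(refs))  # tuple: a saved version cannot be mutated later
        return len(history)

    def load(self, name: str, version: Optional[int] = None) -> tuple:
        """Return the requested version, or the latest one when version is None."""
        history = self._versions[name]
        return history[-1] if version is None else history[version - 1]


registry = Registry()
v1 = registry.save("clips", [FileRef("s3://bucket/a.mp4", "e1")])
v2 = registry.save("clips", [FileRef("s3://bucket/a.mp4", "e1"),
                             FileRef("s3://bucket/b.mp4", "e2")])

latest = registry.load("clips")       # two references
first = registry.load("clips", v1)    # still one reference
```

Both versions point at the same `a.mp4` object, so adding a second version costs only one extra reference rather than a second copy of the file.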

The platform leverages large language models (LLMs) and machine learning models to extract structure, embeddings, and insights from unstructured sources. For example, it can apply models to videos or PDFs during ETL (Extract, Transform, Load) processes, organizing chaos into AI-ready formats.
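
As a rough illustration of this enrichment step, the sketch below applies a model to each file reference and stores its output as structured columns that can then be filtered. Everything here is an assumption for illustration: `enrich` and `toy_model` are hypothetical helpers, and `toy_model` is a stand-in that only inspects the file name, where a real pipeline would call an LLM or embedding model on the file contents.

```python
from typing import Callable, Iterable


def enrich(uris: Iterable[str], model: Callable[[str], dict]) -> list:
    """Apply `model` to each file URI and attach its output as structured columns."""
    return [{"uri": uri, **model(uri)} for uri in uris]


def toy_model(uri: str) -> dict:
    """Stand-in for a real model: derives fields from the URI only."""
    ext = uri.rsplit(".", 1)[-1].lower()
    kind = {"mp4": "video", "pdf": "document", "wav": "audio"}.get(ext, "unknown")
    # A real embedding would come from a model; this is a placeholder vector.
    return {"kind": kind, "embedding": [float(len(uri)), float(len(ext))]}


rows = enrich(["s3://bucket/scan.pdf", "s3://bucket/clip.mp4"], toy_model)

# Once the unstructured files carry structured fields, filtering is ordinary Python.
documents = [row for row in rows if row["kind"] == "document"]
```

Keeping the model behind a plain callable means the same `enrich` loop works whether the model is a local function or a remote inference call.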

Core Features of DataChain

DataChain's suite of tools covers every stage of data handling for AI projects. Key features include:

Multimodal Data Mastery: Handle diverse formats like video (🎥), audio (🎧), PDFs (📄), images (🖼️), and medical scans (🔬 MRI) in one place. Extract insights using LLMs to process unstructured content effortlessly.

Seamless ETL Pipelines: Build automated workflows to turn raw files into enriched datasets. Filter, join, and update data at scale, powering everything from experiment tracking to model versioning.

Data Lineage and Reproducibility: Track every dependency between code, data, and models. Reproduce datasets on demand and automate updates, which is crucial for reproducible ML research and compliance.

Large-Scale Processing: Manage millions or billions of files without bottlenecks. Compute updates efficiently and leverage ML for advanced filtration, making it ideal for heavy data scenarios.

Integration and Accessibility: Datasets are accessible through the UI, chat, IDEs, and agents. The open-source components in the GitHub repository allow customization, while the cloud-based Studio provides a ready-to-use environment.

These features are backed by trusted partnerships with global industry leaders, ensuring reliability for high-stakes AI deployments.

How to Use DataChain

Getting started with DataChain is straightforward and free to begin:

Sign Up: Create an account on the DataChain website to access the platform. No upfront costs—start exploring immediately.

Set Up Your Environment: Connect your object storage (e.g., S3) and import datasets. Use the intuitive UI or Python SDK to begin curating data.

Build Pipelines: Develop in your local IDE using Python. Apply ML models for enrichment, then deploy to the cloud for scaling.

Version and Track: Register datasets with metadata and lineage. Use MCP for agent interactions or query via natural language.

Monitor and Iterate: Leverage the registry to reproduce results, update datasets via ETL, and analyze insights for your AI models.
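
The "Version and Track" and "Monitor and Iterate" steps can be sketched in plain Python. This is a simplified illustration under stated assumptions, not DataChain's SDK: `fingerprint`, `run_step`, and `add_size_bucket` are hypothetical names, and the records are made-up metadata. It shows one way a pipeline step can record where a dataset came from and how a content hash lets you confirm that a rerun reproduced the same result.

```python
import hashlib
import json


def fingerprint(records: list) -> str:
    """Stable hash of a dataset's contents, used to check reproducibility."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_step(name: str, parent: str, records: list, transform):
    """Apply `transform` to every record and return the output plus a lineage entry."""
    output = [transform(record) for record in records]
    lineage = {
        "dataset": name,
        "parent": parent,                 # which dataset this one was derived from
        "step": transform.__name__,       # which code produced it
        "fingerprint": fingerprint(output),
    }
    return output, lineage


def add_size_bucket(record: dict) -> dict:
    """Example enrichment: label each file as large or small by byte size."""
    bucket = "large" if record["size"] > 1_000_000 else "small"
    return {**record, "bucket": bucket}


raw = [
    {"uri": "s3://bucket/a.mp4", "size": 5_000_000},
    {"uri": "s3://bucket/b.wav", "size": 40_000},
]

enriched, lineage = run_step("media-enriched", "media-raw", raw, add_size_bucket)

# Rerunning the same step on the same input should yield an identical lineage entry.
rerun, rerun_lineage = run_step("media-enriched", "media-raw", raw, add_size_bucket)
reproduced = lineage == rerun_lineage
```

If the input data or the transform changes, the fingerprint changes with it, which is the signal that a downstream dataset needs to be recomputed.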

Documentation, a Quick Start guide, and Discord community support make onboarding smooth. For enterprise needs, contact sales for pricing and features tailored to your scale.

Why Choose DataChain?

In a landscape where AI demands ever-larger, more complex datasets, DataChain provides a competitive edge by making heavy data accessible and manageable. Traditional tools fall short on unstructured formats, leading to silos and inefficiencies. DataChain addresses these pain points in two ways: its zero-copy approach references files in place, so you avoid paying to store duplicate copies, and its developer-centric design shortens time-to-insight.

Teams using DataChain report faster experiment tracking, seamless model versioning, and robust pipeline automation. It's particularly valuable for avoiding reprocessing in iterative AI development, where changes in data or models can otherwise cascade into hours of rework. Plus, with no lock-in, you retain control over your infrastructure.

Compared to alternatives, DataChain's focus on multimodal heavy data sets it apart—it's not just another data management tool; it's built for the next wave of AI, from generative models to real-time agents.

Who is DataChain For?

DataChain is ideal for a wide range of users in the AI ecosystem:

Developers and Data Scientists: Those building ML pipelines who need Python-native tools for multimodal data without SQL hurdles.

AI/ML Teams in Startups and Enterprises: From early-stage innovators to Fortune 500 companies dealing with video analysis, audio transcription, or medical imaging.

Researchers and Analysts: Anyone requiring reproducible datasets with full lineage for experiments in computer vision, NLP, or multimodal AI.

Product Builders: Creating copilots, agents, or adaptive systems that rely on enriched, versioned knowledge bases.

If you're grappling with unstructured data in object storage and want to harness it for AI without the overhead, DataChain is your go-to solution.

Practical Value and Use Cases

DataChain delivers tangible value by transforming heavy data into a strategic asset. Consider these real-world applications:

Media and Entertainment: Process video and audio libraries to extract embeddings for recommendation engines or content moderation.

Healthcare: Version MRI scans and PDFs for AI-driven diagnostics, ensuring compliance with data lineage tracking.

E-Commerce: Enrich product images and descriptions using LLMs to build personalized search and virtual try-on features.

Research Labs: Automate ETL for large-scale datasets in multimodal learning, speeding up model training cycles.

Users praise its scalability—handling billions of files effortlessly—and the productivity boost from IDE integration. While pricing details are available upon contact, the free tier lowers barriers for experimentation.

In summary, DataChain redefines data management for AI at scale. By curating, enriching, and versioning multimodal datasets with minimal friction, it empowers efficient teams to lead in the heavy data revolution. Ready to turn your data into an AI advantage? Sign up today and explore its GitHub for open-source contributions.

Best Alternative Tools to "DataChain"

Tafi Avatar, part of Daz 3D, provides procedurally generated, normalized 3D character and environment datasets for AI training. It offers parametric character generation at scale, realistic human anatomy, and pipeline flexibility.

Nomic Atlas is an AI-native data platform that operationalizes large unstructured datasets for AI applications, data analytics, and workflows. It offers tools for data exploration, collaboration, and integration.

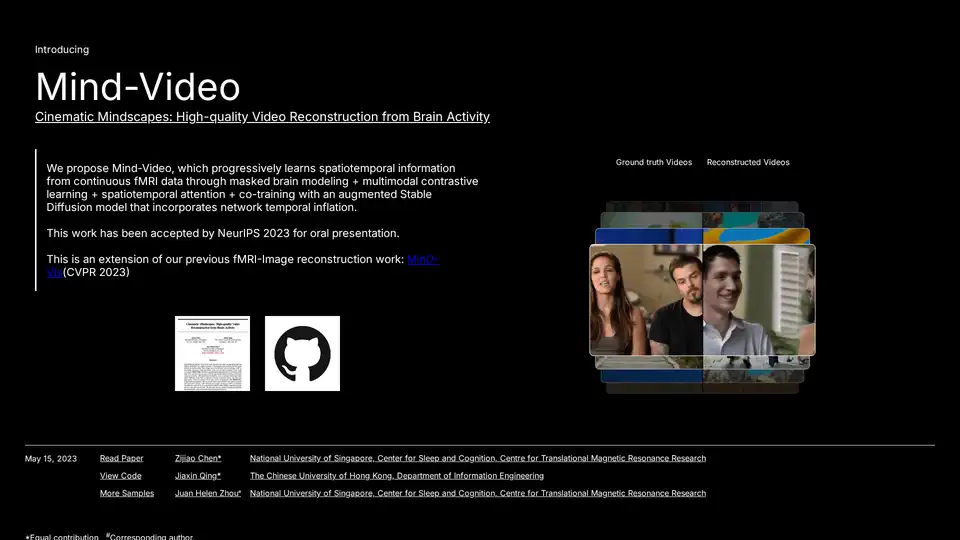

Mind-Video uses AI to reconstruct videos from brain activity captured via fMRI. This innovative tool combines masked brain modeling, multimodal contrastive learning, and spatiotemporal attention to generate high-quality video.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

SiliconFlow is a fast AI platform for developers to deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

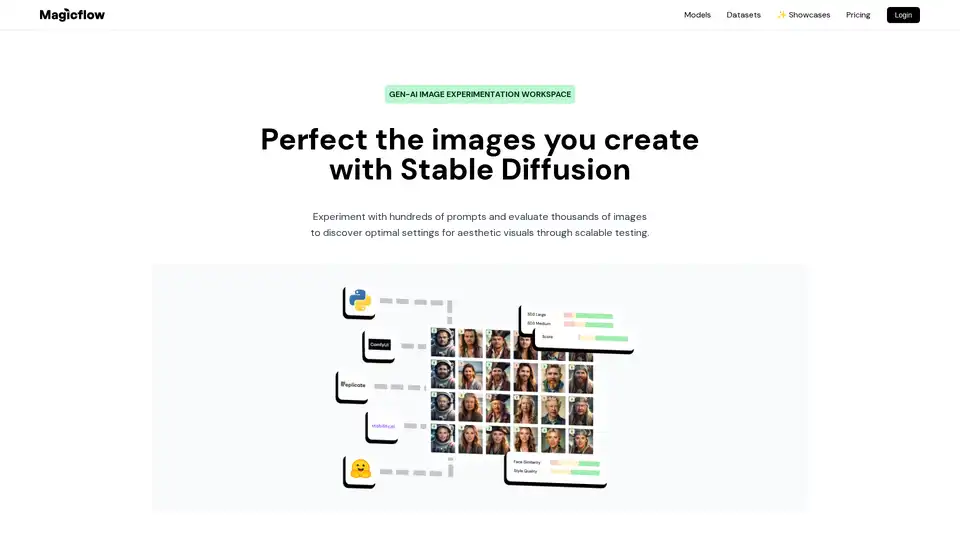

Magicflow AI is a generative AI image experimentation workspace that enables bulk image generation, evaluation, and team collaboration for perfecting Stable Diffusion outputs.

FiftyOne is an open-source visual AI and computer vision data platform used by enterprises to improve AI performance with better data. It covers data curation, annotation, and model evaluation.

Innovatiana delivers expert data labeling and builds high-quality AI datasets for ML, DL, LLM, VLM, RAG, and RLHF, ensuring ethical and impactful AI solutions.

GPT6 is presented as a superintelligent AI chatbot with humor and advanced capabilities, including multimodal support and real-time learning.

Llama Family is an open-source community dedicated to advancing AI through Llama models. Members can explore various models and contribute to the ecosystem, with the stated goal of building towards AGI.

T-Rex Label is an AI-powered data annotation tool supporting Grounding DINO, DINO-X, and T-Rex models. It's compatible with COCO and YOLO datasets, offering features like bounding boxes, image segmentation, and mask annotation for efficient computer vision dataset creation.

Ocular AI is a multimodal data lakehouse platform that allows you to ingest, curate, search, annotate, and train custom AI models on unstructured data. Built for the multi-modal AI era.

Bakery simplifies AI model fine-tuning and monetization for AI startups, ML engineers, and researchers. It offers open-source AI models for language, image, and video generation.

Covariant Brain is an AI Robotics platform powering warehouse automation with RFM-1, enabling robots to pick virtually any item on Day One and adapt to changing business needs.