diffusers.js

Overview of diffusers.js

What is diffusers.js?

Diffusers.js is a JavaScript library that brings Stable Diffusion, a widely used AI model for image generation, directly into the web browser. Its WebGPU-accelerated demo lets users generate AI images without needing a dedicated GPU or complex setup. By porting the Stable Diffusion pipeline from Python to JavaScript, diffusers.js makes AI image generation available on ordinary web pages, using WebGPU in modern browsers for GPU-accelerated inference.

At its core, diffusers.js targets developers, AI enthusiasts, and creators who want to experiment with text-to-image generation in a lightweight, client-side environment. It supports key features from the original Stable Diffusion ecosystem, including prompt-based image synthesis, negative prompts to refine outputs, and customizable parameters for fine-tuned results. Whether you're prototyping web apps or simply exploring AI-generated visuals, this tool democratizes access to state-of-the-art diffusion models.

How Does diffusers.js Work?

diffusers.js works by adapting the Stable Diffusion pipeline to the web. The JavaScript port is based on Python's diffusers library and translates the Stable Diffusion pipeline, which follows the denoising diffusion probabilistic model (DDPM) approach: starting from random noise, it iteratively removes noise until a coherent image matching the text description emerges.
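The iterative refinement described above can be illustrated with a toy sketch. Everything in it is hypothetical: `oraclePredictNoise` stands in for the neural network and simply knows the target, and the update rule is a plain linear one rather than the scheduler the demo uses. It only shows the shape of the loop, in which each step removes part of the predicted noise.

```javascript
// Toy illustration of iterative denoising on a short 1-D signal.
// Hypothetical stand-ins only: this is not Stable Diffusion's actual math.

// Pretend denoiser: reports exactly how far the sample is from the target.
// A real model predicts noise from the sample, the timestep, and the prompt.
function oraclePredictNoise(sample, target) {
  return sample.map((value, i) => value - target[i]);
}

// At each step, remove 1/stepsLeft of the predicted noise, so the error
// shrinks linearly and reaches (approximately) zero on the last step.
function denoise(noisySample, target, numSteps) {
  let sample = noisySample.slice();
  const errors = [];
  for (let step = 0; step < numSteps; step++) {
    const predicted = oraclePredictNoise(sample, target);
    const stepsLeft = numSteps - step;
    sample = sample.map((value, i) => value - predicted[i] / stepsLeft);
    // Track the largest remaining deviation from the target.
    errors.push(Math.max(...sample.map((v, i) => Math.abs(v - target[i]))));
  }
  return { sample, errors };
}

const target = [0.2, 0.8, 0.5, 0.1];
const noisy = [1.7, -0.9, 0.3, 2.4];
const result = denoise(noisy, target, 20);
console.log("error after first step:", result.errors[0]);
console.log("error after last step:", result.errors[result.errors.length - 1]);
```

More steps mean smaller corrections per step, which is the intuition behind the quality versus speed trade-off of the inference-step setting.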

Here's a simplified breakdown of the process:

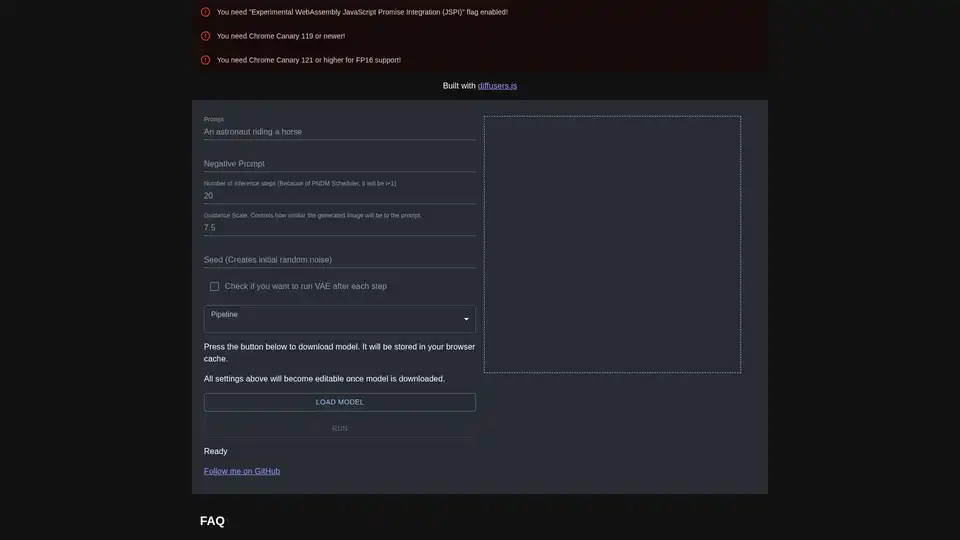

- Model Loading: Users download pre-trained Stable Diffusion models (like those from Hugging Face) into the browser's cache via a simple 'LOAD MODEL' button. This stores the model locally for repeated use, reducing load times on subsequent sessions.

- Input Configuration: Once the model is loaded, you can enter a positive prompt (e.g., 'a futuristic cityscape at sunset') and a negative prompt (e.g., 'blurry, low quality') to guide the generation. Additional controls include:

  - Number of inference steps: Typically 20-50, trading quality against speed (note: the demo uses the PNDM scheduler, so the number of steps actually run is one more than the value entered).

  - Guidance scale: A value such as 7.5 that determines how closely the output adheres to the prompt. Higher values make the result more literal.

  - Seed: Controls the initial random noise, so the same seed and settings reproduce the same result.

  - VAE (Variational Autoencoder) option: Run the VAE decoder after each step, which decodes the intermediate result into a viewable image as generation progresses instead of only at the end.

- Execution: Hit 'RUN' to initiate inference. WebGPU handles the heavy computations, compiling the model to run efficiently on compatible hardware.
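The seed and guidance scale controls above can be sketched in a few lines. This is an illustration under assumptions, not the demo's code: the generator is a mulberry32-style PRNG chosen for brevity, and `applyGuidance` uses the standard classifier-free guidance formula, in which the negative prompt conventionally supplies the "unconditional" prediction. The function names are hypothetical.

```javascript
// Small seeded PRNG (mulberry32-style). Illustrative only: the demo may use a
// different generator, so seeds here will not match seeds there.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

// Seeded Gaussian noise via the Box-Muller transform.
function gaussianNoise(count, seed) {
  const rand = mulberry32(seed);
  const out = new Float32Array(count);
  for (let i = 0; i < count; i++) {
    const u1 = 1 - rand(); // in (0, 1], avoids log(0)
    const u2 = rand();
    out[i] = Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
  }
  return out;
}

// Classifier-free guidance: push the prediction away from the unconditional
// (negative-prompt) result and toward the prompt-conditioned one.
function applyGuidance(uncond, cond, scale) {
  const out = new Float32Array(cond.length);
  for (let i = 0; i < cond.length; i++) {
    out[i] = uncond[i] + scale * (cond[i] - uncond[i]);
  }
  return out;
}

// Same seed, same starting noise; a different seed gives different noise.
const noiseA = gaussianNoise(4, 42);
const noiseB = gaussianNoise(4, 42);
console.log("reproducible:", noiseA.every((v, i) => v === noiseB[i]));
console.log("guided:", applyGuidance([0.1, 0.2], [0.3, 0.1], 7.5));
```

A scale of 1 returns the prompt-conditioned prediction unchanged. Larger values exaggerate the difference between the two predictions, which is why high guidance makes outputs more literal.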

Behind the scenes, the developer patched ONNX Runtime, Emscripten, and Binaryen (a compiler and toolchain library for WebAssembly) to support memory allocations over 4GB, which large models require. This also required updates to the WebAssembly specification and to the V8 engine in Chrome. The result is AI image generation that runs entirely in the browser, though it requires enabling the 'Experimental WebAssembly JavaScript Promise Integration (JSPI)' flag in Chrome Canary (version 119+ for basic support, 121+ for FP16 support).
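The 4GB figure follows from 32-bit WebAssembly addressing: linear memory is measured in 64 KiB pages, and a 32-bit address space allows at most 65,536 of them. The sketch below works through that arithmetic and shows why half-precision (FP16) weights ease the pressure. The parameter count is a hypothetical round number chosen for illustration, not the size of any particular checkpoint.

```javascript
// 32-bit WebAssembly memory: 64 KiB pages, at most 65,536 pages.
const WASM_PAGE_BYTES = 64 * 1024;
const WASM32_MAX_PAGES = 65536;
const WASM32_MAX_BYTES = WASM_PAGE_BYTES * WASM32_MAX_PAGES; // 2^32 bytes = 4 GiB

// Storage cost of one model parameter at each precision.
const BYTES_PER_PARAM = { fp32: 4, fp16: 2 };

function weightBytes(paramCount, precision) {
  return paramCount * BYTES_PER_PARAM[precision];
}

function toGiB(bytes) {
  return bytes / 2 ** 30;
}

// Hypothetical round number, not the real parameter count of any checkpoint.
const EXAMPLE_PARAMS = 1_000_000_000;

for (const precision of ["fp32", "fp16"]) {
  const bytes = weightBytes(EXAMPLE_PARAMS, precision);
  const share = ((100 * bytes) / WASM32_MAX_BYTES).toFixed(0);
  console.log(
    `${precision}: ${toGiB(bytes).toFixed(2)} GiB of weights, ${share}% of the wasm32 limit`
  );
}
```

Weights are only part of the footprint. Activations, intermediate buffers, and the runtime itself also need memory, so a model whose FP32 weights alone approach the limit cannot fit without support for larger allocations.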

How to Use diffusers.js?

Getting started with diffusers.js is straightforward but requires a compatible browser setup. Follow these steps for the best experience:

- Browser Preparation: Use Chrome Canary (build 119 or newer; 121+ recommended for half-precision floating-point support). Enable the experimental JSPI flag in chrome://flags.

- Access the Demo: Visit the diffusers.js WebGPU demo page. You'll see input fields for prompts, sliders for parameters, and buttons for loading and running.

- Download the Model: Click 'LOAD MODEL' to fetch the Stable Diffusion checkpoint. The first download may take a few minutes; after that, the model is served from your browser's cache on future runs.

- Configure and Generate: Enter your prompt, tweak settings, and press 'RUN'. The demo processes the input and displays the generated image. All settings become editable post-download.

- Troubleshooting: If you encounter issues like protobuf parsing errors, clear site data via DevTools (Application > Storage). For memory errors (e.g., sbox_fatal_memory_exceeded), ensure you have at least 8GB RAM and reload the page.
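Some of the prerequisites in the steps above can be checked from script before loading the model. The following is a sketch under assumptions: it assumes the demo's FP16 mode corresponds to the WebGPU `shader-f16` feature (the source does not say), it uses the Chrome-only `navigator.deviceMemory` hint, and it does not check the JSPI flag. The function names are hypothetical.

```javascript
// Pure helper: turn raw capability data into a readiness report.
// `adapterFeatures` is anything with a has() method (GPUSupportedFeatures in a
// browser, a Set in tests), or null when no WebGPU adapter is available.
function summarizeSupport({ adapterFeatures, deviceMemory }) {
  const webgpu = adapterFeatures !== null;
  return {
    webgpu,
    // Assumption: FP16 support maps to the WebGPU "shader-f16" feature.
    fp16: webgpu && adapterFeatures.has("shader-f16"),
    // deviceMemory is capped at 8 by the browser, so 8 means "8 GB or more".
    // null means the browser does not expose the hint.
    enoughMemory: deviceMemory === undefined ? null : deviceMemory >= 8,
  };
}

// Browser-facing wrapper; pass `navigator` when calling it on a real page.
async function probeBrowser(nav) {
  // requestAdapter() resolves to null when no suitable GPU is found.
  const adapter = nav.gpu ? await nav.gpu.requestAdapter() : null;
  return summarizeSupport({
    adapterFeatures: adapter ? adapter.features : null,
    deviceMemory: nav.deviceMemory,
  });
}

// In a browser console: probeBrowser(navigator).then(console.log);
```

Keeping the decision logic in a pure function makes it testable outside a browser. The wrapper only gathers the raw values.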

This client-side approach means no server dependency, making it ideal for offline experimentation once loaded. For developers, the library's source is available on GitHub (@dakenf), inviting contributions to expand WebGPU AI capabilities.
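The download-once, reuse-offline behavior described above can be approximated with the browser's Cache API. This is a minimal sketch, assuming Cache API storage; the source does not say which storage mechanism the demo uses. `loadModelBytes`, the cache name, and `MODEL_URL` are hypothetical, and the cache and fetch function are passed in so the logic can also run outside a browser.

```javascript
// Return model bytes from the cache if present, otherwise download and store them.
// `cache` needs match(url) and put(url, response), like a Cache from caches.open().
// `fetchFn` needs to behave like fetch(): resolve to a Response-like object.
async function loadModelBytes(url, cache, fetchFn) {
  const cached = await cache.match(url);
  if (cached) {
    return { source: "cache", bytes: await cached.arrayBuffer() };
  }
  const response = await fetchFn(url);
  if (!response.ok) {
    throw new Error(`Download failed: HTTP ${response.status}`);
  }
  // A response body can be read only once, so store a clone and read the original.
  await cache.put(url, response.clone());
  return { source: "network", bytes: await response.arrayBuffer() };
}

// In a browser (MODEL_URL is a placeholder for a real model file URL):
//   const cache = await caches.open("model-cache-v1");
//   const { source, bytes } = await loadModelBytes(MODEL_URL, cache, (u) => fetch(u));
```

The first call goes to the network and later calls are served locally, which matches the behavior of clearing site data forcing a fresh download.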

Why Choose diffusers.js?

Unlike cloud-based AI tools, diffusers.js runs generation locally, so there is no network round trip to a server once the model is loaded. No data leaves your device, which matters in creative workflows where IP protection is a concern. It is also free of API fees and subscriptions, which suits hobbyists and educators demonstrating diffusion models.

Performance-wise, WebGPU acceleration delivers results comparable to native implementations, especially on modern GPUs. Users report generating 512x512 images in under a minute on high-end laptops, with outputs that capture intricate details from prompts. The demo's FAQ highlights real-world fixes, showing the tool's robustness.

Compared to alternatives like browser extensions for Stable Diffusion, diffusers.js offers deeper customization without installation hassles. It's a testament to web tech's evolution, pushing boundaries in edge AI computing.

Who is diffusers.js For?

This tool appeals to a diverse audience:

- Web Developers: Integrate AI image gen into apps using JavaScript, enhancing user experiences with dynamic visuals.

- AI Researchers and Students: Experiment with diffusion models in an accessible environment, learning concepts like schedulers (PNDM) and prompt guidance.

- Digital Artists and Content Creators: Quickly prototype ideas from text prompts, iterating with seeds and guidance for artistic control.

- Tech Enthusiasts: Tinker with WebGPU and WebAssembly for cutting-edge browser demos.

It's not suited for production-scale needs (e.g., high-volume rendering) due to browser memory limits, but excels in prototyping and education.

Practical Value and Use Cases

Diffusers.js unlocks numerous applications:

- Creative Prototyping: Generate concept art for games, UI designs, or marketing visuals on the fly.

- Educational Demos: Teach AI principles in classrooms, showing how prompts influence outputs without software installs.

- Web App Integration: Build interactive tools like custom avatar generators or storyboarding aids.

- Personal Projects: Create unique wallpapers or social media graphics using browser-only resources.

The practical value lies in accessibility: anyone with a compatible browser can run Stable Diffusion, which encourages experimentation with web-based AI. Follow @dakenf on GitHub for updates on WebGPU advancements and potential expansions, like multi-model support.

In summary, diffusers.js redefines browser-based AI, making sophisticated image generation as simple as loading a webpage. Whether you're curious about diffusion tech or building the next web AI hit, this demo is your gateway.

Best Alternative Tools to "diffusers.js"

Experience HENGPLAY, the No.1 online baccarat platform, featuring AI-driven fairness, secure transactions, VIP rooms, and transparent live broadcasts for a winning experience.

Hotpot AI Art Generator is a free, no-login tool leveraging Stable Diffusion for stunning text-to-image creations. Millions use it to produce art, illustrations, and photos effortlessly, enhancing creativity in marketing and personal projects.

Chat with AI using your API keys. Pay only for what you use. GPT-4, Gemini, Claude, and other LLMs supported. The best chat LLM frontend UI for all AI models.

Patee.io offers AI-powered automatic transcription from audio tapes, video clips, meetings, and seminars into text. Start at just 20 THB with free trials and email delivery for efficient speech-to-text conversion.

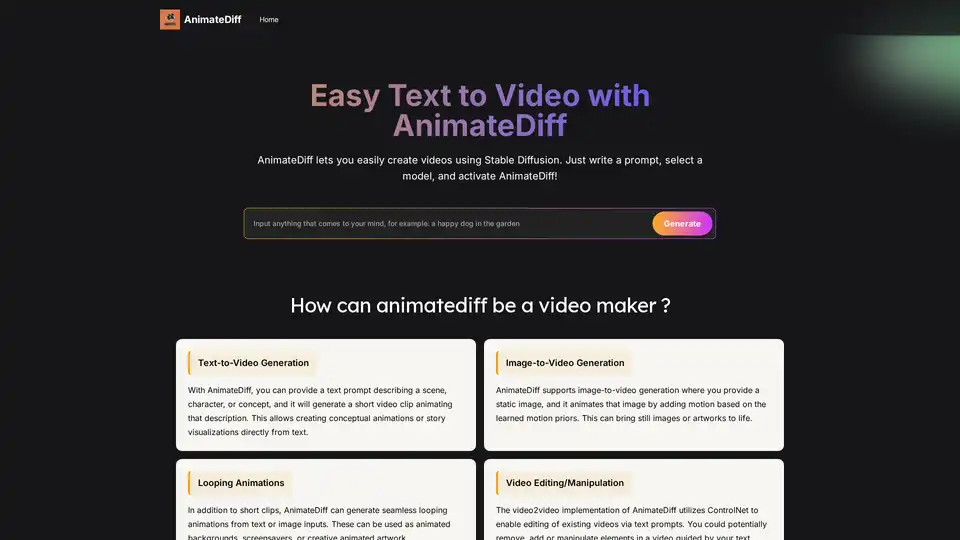

AnimateDiff is a free online video maker that brings motion to AI-generated visuals. Create animations from text prompts or animate existing images with natural movements learned from real videos. This plug-and-play framework adds video capabilities to diffusion models like Stable Diffusion without retraining. Explore the future of AI content creation with AnimateDiff's text-to-video and image-to-video generation tools.

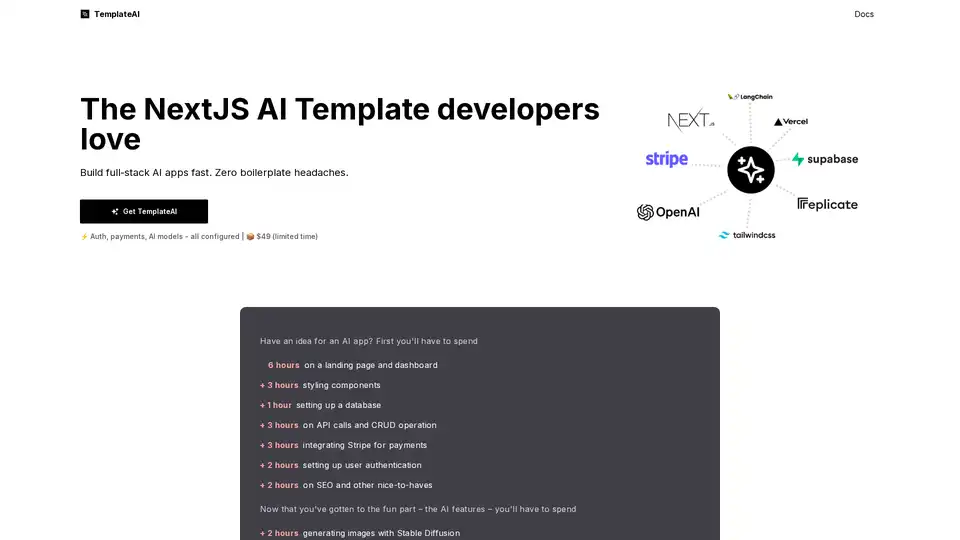

TemplateAI is the leading NextJS template for AI apps, featuring Supabase auth, Stripe payments, OpenAI/Claude integration, and ready-to-use AI components for fast full-stack development.

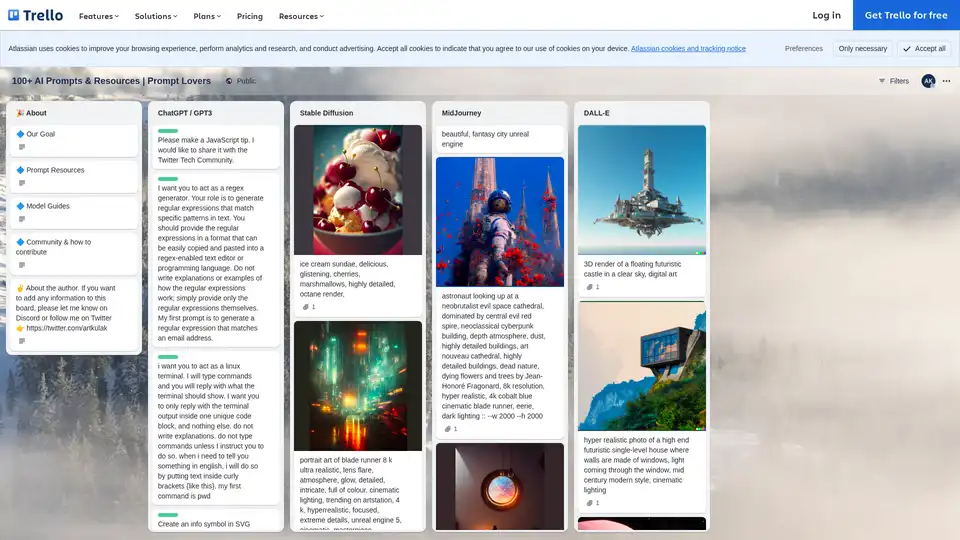

Explore the Prompt Lovers Trello board with 100+ AI prompts and resources for ChatGPT, Stable Diffusion, MidJourney, and DALL-E, ideal for writers, developers, and artists seeking creative inspiration.

Sagify is an open-source Python tool that streamlines machine learning pipelines on AWS SageMaker, offering a unified LLM Gateway for seamless integration of proprietary and open-source large language models to boost productivity.

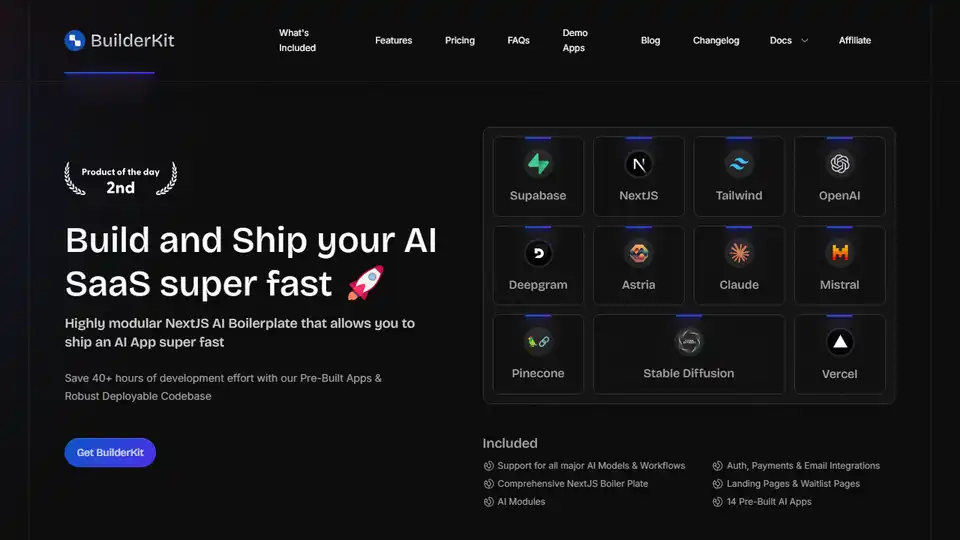

BuilderKit is a NextJS AI Boilerplate that allows you to build and ship AI SaaS applications super fast. Save 40+ hours of development with pre-built apps and robust codebase.

dreamlook.ai offers lightning-fast Stable Diffusion finetuning, enabling users to train models 2.5x faster and generate high-quality images quickly. Extract LoRA files to reduce download size.

Anime Art Studio is a 100% free AI anime generator with 24/7 access to 100+ stable diffusion anime models. Transform text into stunning anime art with ease.

Generate AI image variations of an input image using Stable Diffusion. Free and easy to use online AI image generator. Create copyright-free variations for your projects.

Simplify AI deployment with Synexa. Run powerful AI models instantly with just one line of code. Fast, stable, and developer-friendly serverless AI API platform.

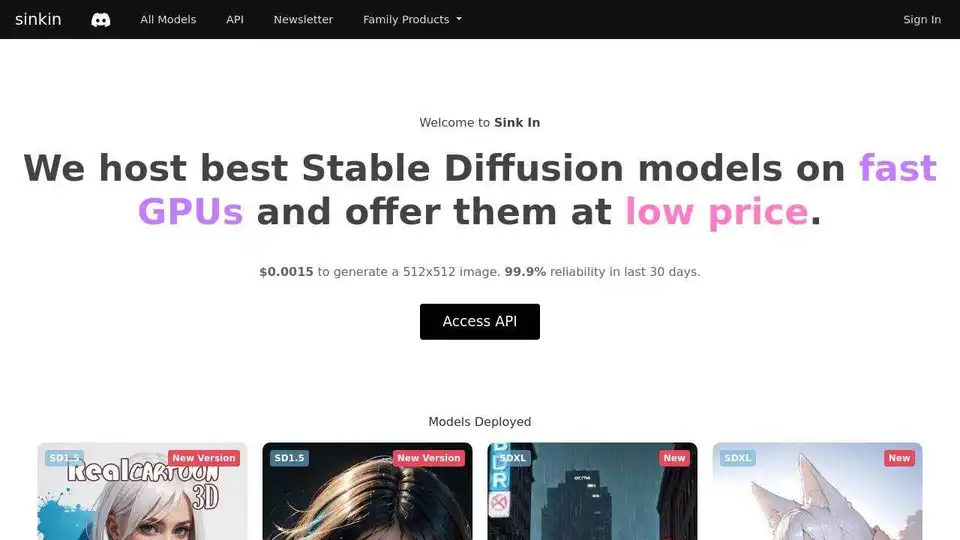

Run Stable Diffusion AI image generation models on state-of-art infra. Build AI applications with our fast, reliable and cost-effective APIs.