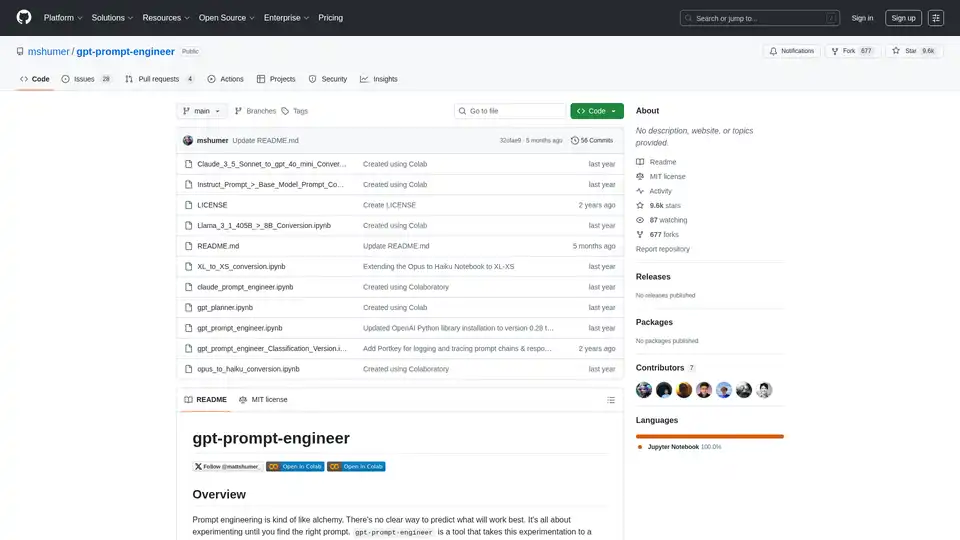

gpt-prompt-engineer

Overview of gpt-prompt-engineer

What is gpt-prompt-engineer?

gpt-prompt-engineer is an open-source tool designed to automate the process of prompt engineering for large language models (LLMs) like GPT-4, GPT-3.5-Turbo, and Claude 3. It helps users discover optimal prompts by generating, testing, and ranking multiple prompts based on user-defined test cases.

How does gpt-prompt-engineer work?

- Prompt Generation: The tool uses LLMs to generate a diverse range of prompts based on a provided use-case description and associated test cases.

- Prompt Testing: Each generated prompt is tested against the provided test cases to evaluate its performance.

- ELO Rating System: An ELO rating system is employed to rank the prompts based on their performance. Each prompt starts with an initial ELO rating, and the ratings are adjusted based on the prompt's performance against the test cases. This enables users to easily identify the most effective prompts.
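The ranking step can be illustrated with the standard Elo update. This is a minimal sketch, not the tool's actual code. It assumes prompts are compared pairwise on each test case, and the starting rating of 1200, the step size `K = 32`, and the `judge` callback are all hypothetical choices made for illustration.

```python
from itertools import combinations

K = 32                 # assumed update step size
INITIAL_RATING = 1200  # assumed starting rating for every prompt


def expected_score(r_a, r_b):
    """Probability that A beats B under the standard Elo model."""
    return 1 / (1 + 10 ** ((r_b - r_a) / 400))


def update_elo(r_a, r_b, score_a):
    """Return new ratings; score_a is 1 (A wins), 0 (B wins) or 0.5 (draw)."""
    e_a = expected_score(r_a, r_b)
    e_b = expected_score(r_b, r_a)
    return r_a + K * (score_a - e_a), r_b + K * ((1 - score_a) - e_b)


def rank_prompts(prompts, test_cases, judge):
    """Rate prompts by pairwise comparison on every test case.

    `judge(a, b, case)` is a hypothetical callback that returns the score
    for prompt `a` (1, 0 or 0.5). In practice it would compare the two
    prompts' outputs, for example by asking an LLM which one is better.
    """
    ratings = {p: INITIAL_RATING for p in prompts}
    for a, b in combinations(prompts, 2):
        for case in test_cases:
            score_a = judge(a, b, case)
            ratings[a], ratings[b] = update_elo(ratings[a], ratings[b], score_a)
    # Highest rating first, matching the sorted table described above.
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)
```

Because each update adds to one rating exactly what it subtracts from the other, the total rating across all prompts stays constant, so only the relative order carries meaning.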

Key Features of gpt-prompt-engineer

- Automated Prompt Generation: Automatically generates a multitude of potential prompts based on a given use-case and test cases.

- Prompt Testing and Ranking: Systematically tests each prompt against the test cases and ranks them using an ELO rating system to identify the most effective ones.

- Claude 3 Opus Support: A specialized version takes full advantage of Anthropic's Claude 3 Opus model, allowing for automated test case generation and multiple input variables.

- Claude 3 Opus → Haiku Conversion: This feature enables users to leverage Claude 3 Opus to define the latent space and Claude 3 Haiku for efficient output generation, reducing latency and cost.

- Classification Version: Designed for classification tasks, this version checks whether a prompt's output for each test case matches the expected output ('true' or 'false') and provides a table with scores for each prompt.

- Weights & Biases Logging: Optional logging to Weights & Biases for tracking configurations, system and user prompts, test cases, and final ELO ratings.

- Portkey Integration: Offers optional integration with Portkey for logging and tracing prompt chains and their responses.
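The scoring idea behind the classification version listed above can be sketched as follows. This is an illustration only: `score_prompt` is a hypothetical helper, and the outputs are made-up placeholders rather than real model results. It assumes a prompt's score is the percentage of test cases where its output matches the expected label.

```python
def score_prompt(outputs, expected):
    """Percentage of test cases where the output matches the expected label."""
    if len(outputs) != len(expected) or not expected:
        raise ValueError("outputs and expected must be non-empty and the same length")
    # Compare case-insensitively and ignore surrounding whitespace.
    correct = sum(
        out.strip().lower() == exp.strip().lower()
        for out, exp in zip(outputs, expected)
    )
    return 100.0 * correct / len(expected)


# Expected labels for four test cases.
expected = ["true", "false", "true", "true"]

# Placeholder outputs for two candidate prompts (not real model results).
candidate_outputs = {
    "Prompt A": ["true", "false", "false", "true"],
    "Prompt B": ["True", "false", "true", "true "],
}

scores = {name: score_prompt(outs, expected) for name, outs in candidate_outputs.items()}

# Print a small table, best prompt first.
for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<10} {score:6.1f}%")
```

Normalizing case and whitespace before comparing is a design choice made here so that "True" and "true " still count as matches. A stricter comparison would penalize prompts that do not produce the label exactly.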

How to use gpt-prompt-engineer?

- Setup: Open the desired notebook in Google Colab or a local Jupyter notebook. Choose between the standard version, the classification version, or the Claude 3 version depending on your use case.

- API Key Configuration: Add your OpenAI API key or Anthropic API key to the designated line in the notebook.

- Define Use-Case and Test Cases: For the GPT-4 version, define your use-case and test cases. The use-case is a description of what you want the AI to do, and test cases are specific prompts that you would like the AI to respond to.

- Configure Input Variables (for Claude 3 version): Define input variables in addition to the use-case description, specifying the variable name and its description.

- Generate Optimal Prompts: Call the `generate_optimal_prompt` function with the use-case description, test cases, and the desired number of prompts to generate.

- Evaluate Results: The final ELO ratings will be printed in a table, sorted in descending order. The higher the rating, the better the prompt. For the classification version, the scores for each prompt will be printed in a table.
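The inputs described in the steps above might look like the sketch below. The dictionary keys, the example values, and the argument order of the commented-out call are assumptions based on the description here, so check the notebook you are using for the exact shapes.

```python
# Use-case description: what you want the AI to do.
description = "Given a prompt, generate a landing page headline."

# Test cases: specific prompts you want the AI to respond to.
# The "prompt" key is an assumed shape for illustration.
test_cases = [
    {"prompt": "Promoting a new fitness app"},
    {"prompt": "Announcing a plant-based protein bar"},
    {"prompt": "Launching a language-learning platform"},
]

# How many candidate prompts to generate and rank.
number_of_prompts = 10

# Claude 3 version only: input variables, each with a name and a description.
# The key names below are hypothetical.
input_variables = [
    {"variable": "PRODUCT_NAME", "description": "The name of the product being promoted."},
]

# Inside the notebook, where generate_optimal_prompt is defined, the call
# would then be along these lines (argument order assumed):
# generate_optimal_prompt(description, test_cases, number_of_prompts)
```

Test cases that cover varied inputs give the ranking more signal than several near-identical ones, since every candidate prompt is judged on the same set.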

Who is gpt-prompt-engineer for?

gpt-prompt-engineer is ideal for:

- AI developers and researchers seeking to optimize prompts for LLMs.

- Businesses looking to improve the performance of AI-powered applications.

- Individuals interested in exploring prompt engineering techniques.

- Anyone looking to reduce the cost and latency of LLM-based applications.

Use Cases

- Automating the generation of landing page headlines.

- Creating personalized email responses.

- Optimizing prompts for content generation.

- Building cost-effective AI systems using Claude 3 Opus and Haiku.

Why choose gpt-prompt-engineer?

- Time Savings: Automates the prompt engineering process, saving significant time and effort.

- Improved Performance: Surfaces the highest-ranked prompts for a task, which can improve LLM output quality on the test cases you define.

- Cost Reduction: Enables the creation of cost-effective AI systems by leveraging efficient models like Claude 3 Haiku.

- Flexibility: Supports various LLMs and use cases, including classification tasks.

License

gpt-prompt-engineer is MIT licensed.

Project Link

Best Alternative Tools to "gpt-prompt-engineer"

Athina is a collaborative AI platform that helps teams build, test, and monitor LLM-based features 10x faster. With tools for prompt management, evaluations, and observability, it ensures data privacy and supports custom models.

Parea AI is the ultimate experimentation and human annotation platform for AI teams, enabling seamless LLM evaluation, prompt testing, and production deployment to build reliable AI applications.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

UsageGuard provides a unified AI platform for secure access to LLMs from OpenAI, Anthropic, and more, featuring built-in safeguards, cost optimization, real-time monitoring, and enterprise-grade security to streamline AI development.

Discover Analyst Intelligence Platform: the first AI tool for non-engineers to write SQL in Google BigQuery, automating data cleaning and analysis for efficient big data insights.

Xander is an open-source desktop platform that enables no-code AI model training. Describe tasks in natural language for automated pipelines in text classification, image analysis, and LLM fine-tuning, ensuring privacy and performance on your local machine.

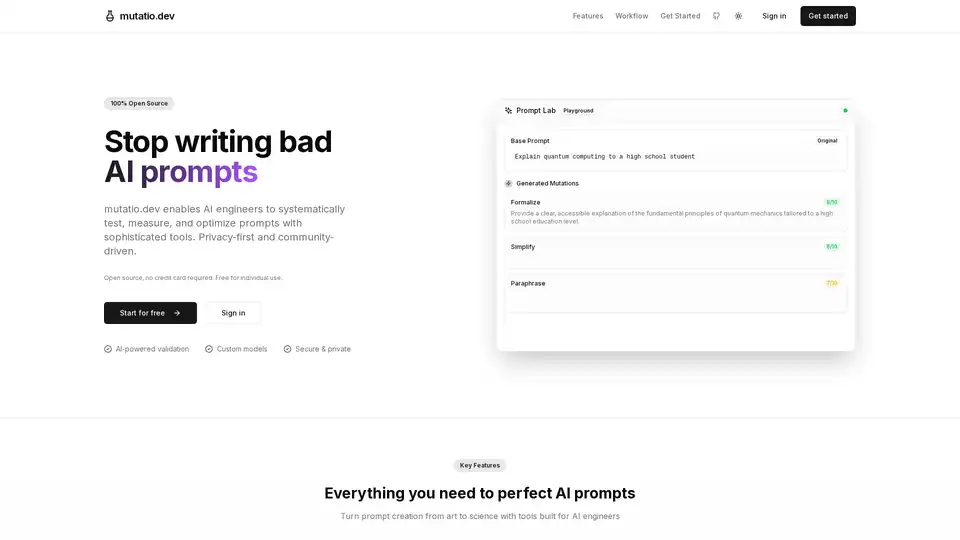

Mutatio.dev is an open-source AI tool for prompt engineering, enabling systematic mutation, validation, and optimization of prompts using custom LLMs. Privacy-focused, browser-based, with model flexibility for AI engineers.

Promptimize AI is a browser extension that enhances AI prompts, making it easy for anyone to reliably use AI to boost productivity. It offers one-click enhancements, custom variables, and integration across AI platforms.

Prompt Genie is an AI-powered tool that instantly creates optimized super prompts for LLMs like ChatGPT and Claude, eliminating prompt engineering hassles. Test, save, and share via Chrome extension for 10x better results.

What-A-Prompt is a user-friendly prompt optimizer for enhancing inputs to AI models like ChatGPT and Gemini. Select enhancers, input your prompt, and generate creative, detailed results to boost LLM outputs. Access a vast library of optimized prompts.

GPT Prompt Lab is a free AI prompt generator that helps content creators craft high-quality prompts for ChatGPT, Gemini, and more from any topic. Generate, test, and optimize prompts for blogs, emails, code, and SEO content in seconds.

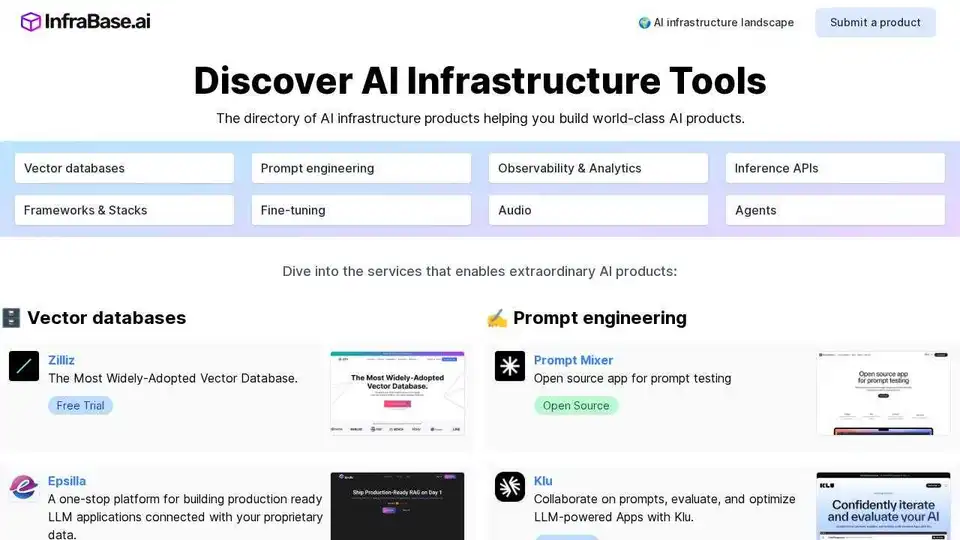

Infrabase.ai is the directory for discovering AI infrastructure tools and services. Find vector databases, prompt engineering tools, inference APIs, and more to build world-class AI products.

ModelFusion: Complete LLM toolkit for 2025 with cost calculators, prompt library, and AI observability tools for GPT-4, Claude, and more.

Compare and share side-by-side prompts with Google's Gemini Pro vs OpenAI's ChatGPT to find the best AI model for your needs.