OpenUI

Overview of OpenUI

What is OpenUI?

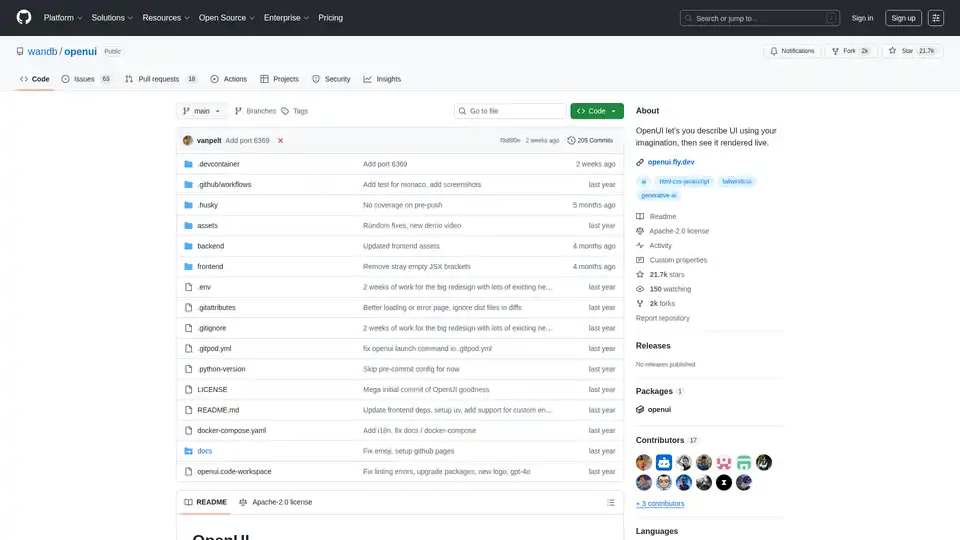

OpenUI is an open-source project for building user interfaces from natural language descriptions. Hosted on GitHub by Weights & Biases (W&B), it lets you describe a UI element in a plain-text prompt, sends that prompt to a large language model (LLM), and renders the result live in the browser. Whether you're brainstorming ideas or prototyping applications, OpenUI turns a description into working UI code, with output as HTML, React components, Svelte, or Web Components. It is most useful in generative AI workflows where rapid iteration matters.

Unlike proprietary alternatives, OpenUI is fully open-source under the Apache-2.0 license, allowing anyone to fork, modify, or contribute to its development. It's already garnered over 21.7k stars and 2k forks on GitHub, reflecting strong community interest in AI-assisted UI generation. At its core, OpenUI leverages LLMs to bridge the gap between conceptual ideas and executable code, making UI development more accessible and fun.

How Does OpenUI Work?

OpenUI operates by integrating with various LLM providers to process your textual descriptions and generate corresponding UI markup. Here's a breakdown of its underlying mechanism:

Input Processing: You start by typing a description in the web interface, such as "a modern login form with email and password fields, styled in Tailwind CSS." The tool sends this prompt to the selected LLM backend.

LLM Generation: Using models from OpenAI (e.g., GPT-4o), Groq, Gemini, Anthropic (Claude), or even local options like Ollama and LiteLLM-compatible services, the AI interprets the prompt and outputs structured code. It supports multimodal inputs if using vision-capable models like LLaVA.

Live Rendering: The generated code is immediately rendered in a live preview pane. You can iterate by requesting changes, like "add a forgot password link" or "convert to React components."

Code Export and Conversion: Beyond rendering, OpenUI can transform the output into different frameworks. For instance, it might generate vanilla HTML/CSS/JS first, then convert to React or Svelte on demand. This flexibility is powered by the backend's Python scripts and frontend TypeScript code.
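The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not OpenUI's actual backend code: `SYSTEM_PROMPT`, `build_messages`, and `extract_markup` are hypothetical names, and the LLM call itself is left out so the example runs offline.

```python
import re

# Hypothetical system prompt; the real prompt used by OpenUI is not shown in this article.
SYSTEM_PROMPT = "Return one self-contained HTML snippet styled with Tailwind CSS."


def build_messages(description, history=()):
    """Assemble a chat-style message list: system prompt, prior turns, new request."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    messages.extend(history)  # earlier user/assistant turns, used for refinements
    messages.append({"role": "user", "content": description})
    return messages


def extract_markup(reply):
    """Return the first fenced code block in the reply, or the whole reply if none exists."""
    # `{3} matches a three-backtick fence; an optional language tag may follow the opener.
    match = re.search(r"`{3}[a-zA-Z]*\n(.*?)`{3}", reply, re.DOTALL)
    return (match.group(1) if match else reply).strip()


# First request, then a follow-up that carries the earlier exchange as history.
first = build_messages("a modern login form with email and password fields")
follow_up = build_messages(
    "add a forgot password link",
    history=[first[-1], {"role": "assistant", "content": "<form>...</form>"}],
)
```

The message list returned by `build_messages` has the shape accepted by most chat-completion APIs, and the string returned by `extract_markup` is what a preview pane would render.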

The architecture includes a Python backend for LLM interactions via LiteLLM (a unified proxy for hundreds of models) and a TypeScript-based frontend for the interactive UI. Environment variables handle API keys securely, ensuring seamless integration without hardcoding sensitive data. For local setups, it uses tools like uv for dependency management and Docker for containerized deployment.

In terms of technical details, OpenUI's repo structure separates frontend (React-like with Vite) and backend (FastAPI-inspired), with assets for demos and docs. Recent updates include i18n support, custom endpoint configurations, and Monaco editor integration for code tweaking—showcasing its evolution toward more robust features.

How to Use OpenUI?

Getting started with OpenUI is straightforward, whether you're running it locally or via a demo. Follow these steps for optimal results:

Access the Demo: Head to the live demo at openui.fly.dev to test without setup. Describe a UI, select a model (if API keys are configured), and watch it render.

Local Installation via Docker (Recommended for Beginners):

- Ensure Docker is installed.

- Set your API keys: `export OPENAI_API_KEY=your_key_here` (and others like `ANTHROPIC_API_KEY` if needed).

- For Ollama integration: Install Ollama locally, pull a model (e.g., `ollama pull llava`), and run: `docker run --rm -p 7878:7878 -e OPENAI_API_KEY -e OLLAMA_HOST=http://host.docker.internal:11434 ghcr.io/wandb/openui`.

- Visit http://localhost:7878 to start generating UIs.
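If you launch the container from a script, the `docker run` invocation above can be assembled programmatically. The sketch below is illustrative only: `build_docker_command` is a hypothetical helper, and the default list of forwarded variable names is an assumption based on the keys mentioned in this section. It relies on Docker's behavior of copying a variable from the caller's environment when `-e NAME` is given without a value, so the key itself never appears in the command line.

```python
import os
import shlex

IMAGE = "ghcr.io/wandb/openui"  # image name taken from the command in this section
PORT = 7878


def build_docker_command(env, passthrough=("OPENAI_API_KEY", "ANTHROPIC_API_KEY"),
                         ollama_host=None):
    """Build the `docker run` argument list, forwarding only keys that are set in `env`."""
    cmd = ["docker", "run", "--rm", "-p", f"{PORT}:{PORT}"]
    for name in passthrough:
        if env.get(name):
            # `-e NAME` with no value makes Docker read NAME from the caller's environment.
            cmd += ["-e", name]
    if ollama_host:
        cmd += ["-e", f"OLLAMA_HOST={ollama_host}"]
    cmd.append(IMAGE)
    return cmd


if __name__ == "__main__":
    command = build_docker_command(
        os.environ, ollama_host="http://host.docker.internal:11434"
    )
    # Print the command for review; pass `command` to subprocess.run to execute it.
    print(shlex.join(command))
```

Returning a list rather than a single string avoids shell quoting problems when the result is passed to `subprocess.run`.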

From Source (For Developers):

- Clone the repo: `git clone https://github.com/wandb/openui`.

- Navigate to backend: `cd openui/backend`.

- Install dependencies: `uv sync --frozen --extra litellm` (uv is a fast Python package manager).

- Activate virtual env: `source .venv/bin/activate`.

- Set API keys and run: `python -m openui`.

- For dev mode with frontend: Run `npm run dev` in the frontend directory for hot reloading.

Advanced Configurations:

- LiteLLM Custom Proxy: Create a `litellm-config.yaml` file for overriding model endpoints, useful for self-hosted setups like LocalAI.

- Ollama for Offline Use: Set `OLLAMA_HOST` to point to your instance (e.g., http://127.0.0.1:11434). Models like LLaVA enable image-based prompts.

- Gitpod or Codespaces: For cloud-based dev, these preconfigure the environment, ideal for testing without local hardware.

Tips for best results: Use descriptive prompts with specifics (e.g., "responsive navbar with Tailwind classes"). If generation slows, opt for faster models like Groq. The tool auto-detects available models from your env vars, populating the settings modal accordingly.
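To make the auto-detection idea concrete, here is a minimal sketch of how available providers could be inferred from environment variables. It is not OpenUI's implementation: `PROVIDER_ENV_VARS` and `available_providers` are hypothetical, and while `OPENAI_API_KEY`, `ANTHROPIC_API_KEY`, and `OLLAMA_HOST` appear in this article, the `GROQ_API_KEY` and `GEMINI_API_KEY` names are assumptions.

```python
import os

# Hypothetical provider-to-variable mapping; the Groq and Gemini names are assumed.
PROVIDER_ENV_VARS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "groq": "GROQ_API_KEY",
    "gemini": "GEMINI_API_KEY",
    "ollama": "OLLAMA_HOST",
}


def available_providers(env=None):
    """Return the providers whose environment variable is set to a non-blank value."""
    env = os.environ if env is None else env
    return [
        provider
        for provider, variable in PROVIDER_ENV_VARS.items()
        if env.get(variable, "").strip()
    ]


if __name__ == "__main__":
    # Lists whichever providers are configured in the current shell.
    print(available_providers())
```

A settings dialog could then offer only the models that belong to the returned providers.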

Why Choose OpenUI?

In a crowded field of AI tools, OpenUI stands out for its open-source ethos and focus on UI-specific generation. Traditional UI building often involves tedious wireframing and coding, but OpenUI accelerates this with generative AI, cutting prototyping time from hours to minutes. It's not as polished as commercial tools like v0, but its transparency allows customization—perfect for teams integrating LLMs into workflows.

Key advantages include:

- Broad Model Support: Works with 100+ LLMs via LiteLLM, from cloud APIs to local inference.

- Framework Agnostic: Outputs adaptable code for HTML, React, Svelte, etc., reducing vendor lock-in.

- Community-Driven: Active GitHub with 205 commits, recent redesigns, and contributions from 20+ developers.

- Cost-Effective: Free to run locally; only pay for API usage if using paid LLMs.

- Educational Value: Great for learning how LLMs handle code gen, with transparent backend logic.

Users praise its fun, iterative nature—ideal for sparking creativity without setup friction. For instance, designers can visualize ideas quickly, while developers debug AI outputs in the Monaco editor.

Who is OpenUI For?

OpenUI targets a diverse audience in the AI and development space:

- UI/UX Designers: Rapidly prototype interfaces from sketches or ideas, validating concepts before full implementation.

- Frontend Developers: Generate boilerplate code for Tailwind-styled components, speeding up React or Svelte projects.

- AI Enthusiasts and Researchers: Experiment with LLM applications in UI generation, especially with open models like those from Ollama.

- Product Teams at Startups: Prototype MVPs affordably, integrating with W&B's ecosystem for ML app building.

- Educators and Students: Teach generative AI concepts through hands-on UI creation.

It's especially suited for those familiar with basic command-line tools, though the Docker option lowers the barrier. If you're building LLM-powered apps, OpenUI serves as a practical example of AI-augmented development.

Practical Value and Use Cases

The real-world utility of OpenUI shines in scenarios demanding quick iterations:

Rapid Prototyping: Describe a dashboard and get a live, interactive mockup—tweak via chat-like refinements.

Code Snippet Generation: Need a responsive form? Prompt it, copy the React code, and integrate into your app.

Accessibility Testing: Generate UIs and evaluate AI's adherence to best practices like semantic HTML.

Collaborative Design: Share prompts in team settings for consistent UI visions.

From user feedback on GitHub issues (63 open), common enhancements include better error handling and multi-page support, indicating active growth. Pricing? Entirely free as open-source, though LLM costs apply (e.g., OpenAI tokens).

In summary, OpenUI democratizes UI creation through generative AI, fostering efficiency and innovation. For the best experience, explore the repo's docs and contribute—it's a vibrant project pushing the boundaries of AI in design.

Best Alternative Tools to "OpenUI"

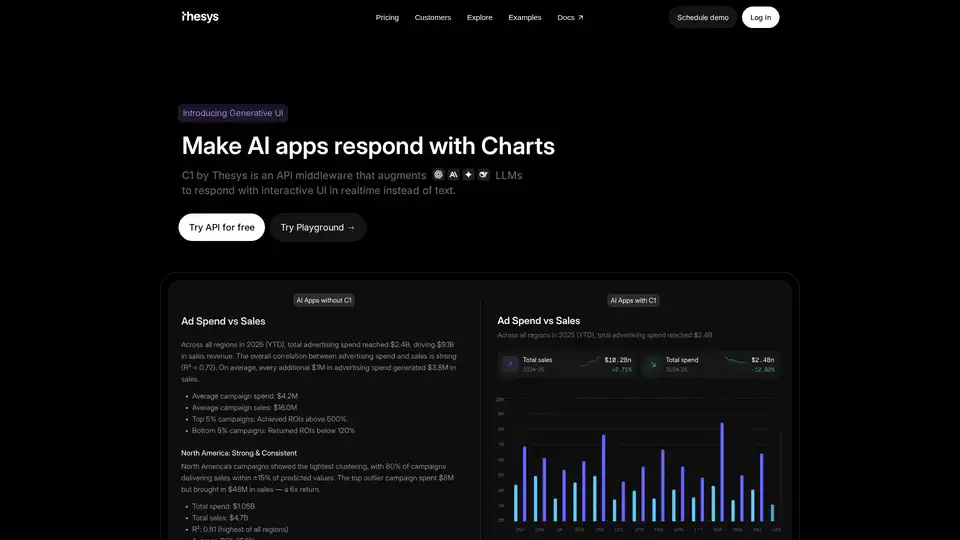

C1 by Thesys is an API middleware that augments LLMs to respond with interactive UI in realtime instead of text, turning responses from your model into live interfaces using React SDK.

GenSearch revolutionizes search using generative AI, allowing users to create custom AI-powered search engines with personalized experiences and seamless integrations.

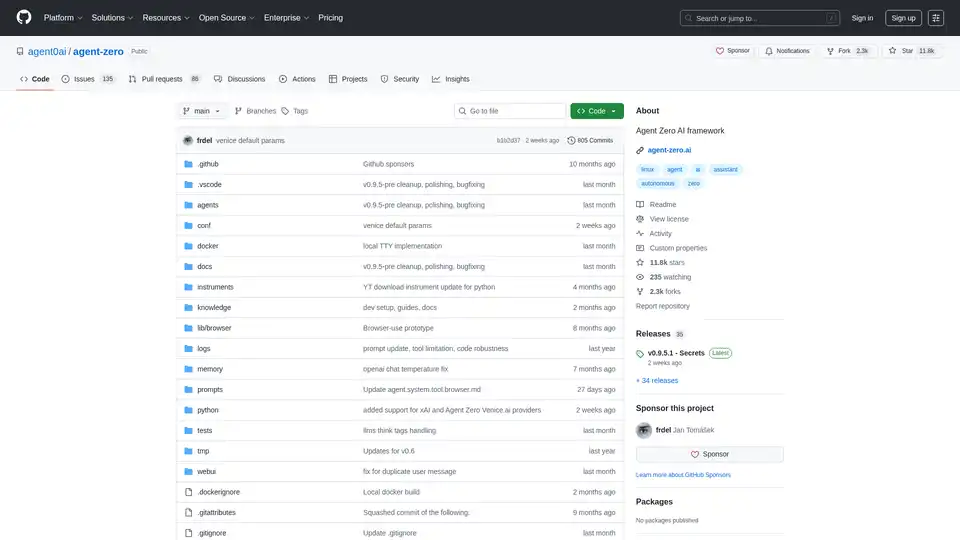

Agent Zero is an open-source AI framework for building autonomous agents that learn and grow organically. It features multi-agent cooperation, code execution, and customizable tools.

Flowise is an open-source generative AI development platform to visually build AI agents and LLM orchestration. Build custom LLM apps in minutes with a drag & drop UI.

Chat with AI using your API keys. Pay only for what you use. GPT-4, Gemini, Claude, and other LLMs supported. The best chat LLM frontend UI for all AI models.

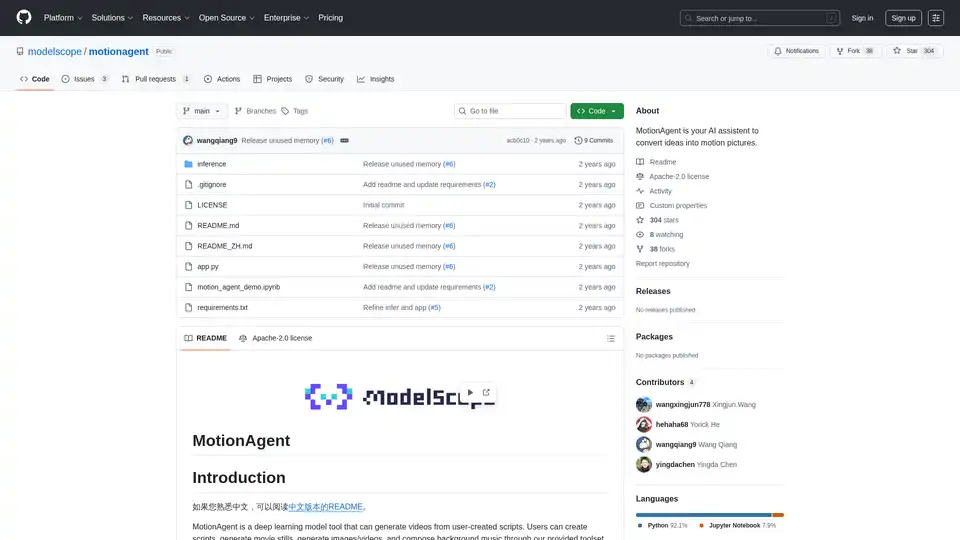

MotionAgent is an open-source AI tool that transforms ideas into motion pictures by generating scripts, movie stills, high-res videos, and custom background music using models like Qwen-7B-Chat and SDXL.

Soverin is the ultimate AI marketplace for discovering, buying, and leveraging top AI apps and agents. Automate over 10,000 tasks, from building agents to scaling customer support, and boost productivity with trending automation tools.

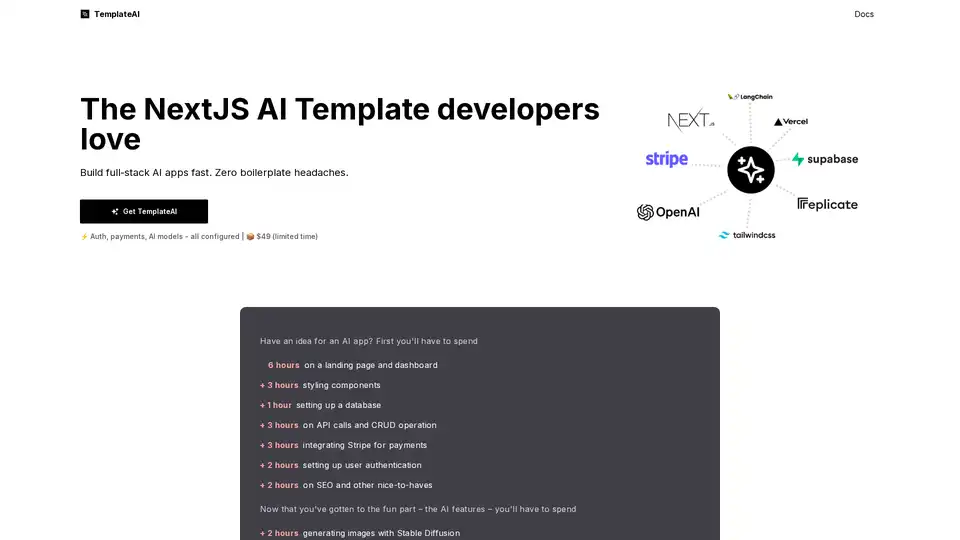

TemplateAI is the leading NextJS template for AI apps, featuring Supabase auth, Stripe payments, OpenAI/Claude integration, and ready-to-use AI components for fast full-stack development.

Rierino is a powerful low-code platform accelerating ecommerce and digital transformation with AI agents, composable commerce, and seamless integrations for scalable innovation.

PixieBrix is a workforce AI platform delivered as a browser extension and web app, connecting to your existing tools to automate workflows and deploy AI assistance securely. Boost productivity with AI productivity tools.

NextReady is a ready-to-use Next.js template with Prisma, TypeScript, and shadcn/ui, designed to help developers build web applications faster. Includes authentication, payments, and admin panel.

Quick Snack is an AI-powered tool built on Expo Snack that lets you create React Native apps by interacting with an LLM/AI Assistant. Currently in early alpha.

16x Prompt is an AI coding tool for managing code context, customizing prompts, and shipping features faster with LLM API integrations. Ideal for developers seeking efficient AI-assisted coding.

Meteron AI is an all-in-one AI toolset that handles LLM and generative AI metering, load-balancing, and storage, freeing developers to focus on building AI-powered products.