Promptfoo

Overview of Promptfoo

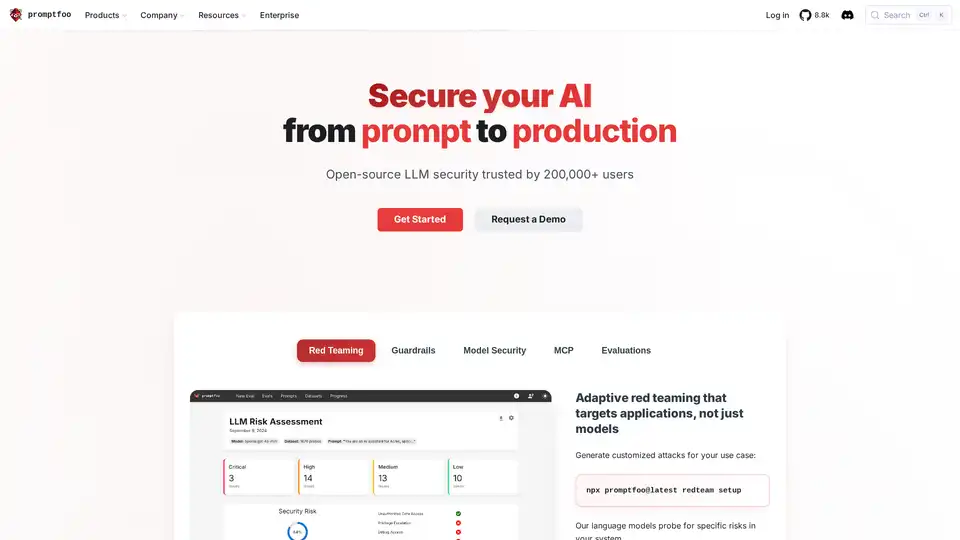

Promptfoo: Secure Your AI From Prompt to Production

Promptfoo is an open-source LLM security tool designed to help developers secure their AI applications from prompt to production. With a strong focus on AI red-teaming and evaluations, Promptfoo allows users to find and fix vulnerabilities, maximize output quality, and catch regressions.

What is Promptfoo?

Promptfoo is a security-first, developer-friendly tool that provides adaptive red teaming targeting applications, not just models. It is trusted by over 200,000 users and adopted by 44 Fortune 500 companies. It’s designed to secure your AI applications by identifying potential vulnerabilities and ensuring the reliability of your LLMs.

How does Promptfoo work?

Promptfoo operates by generating customized attacks tailored to your specific use case. Here’s how it works:

- Customized Attacks: The tool generates attacks specific to your industry, company, and application, rather than relying on generic canned attacks.

- Language Model Probing: Specialized language models probe your system for specific risks.

- Vulnerability Detection: It identifies direct and indirect prompt injections, jailbreaks, data and PII leaks, insecure tool use vulnerabilities, unauthorized contract creation, and toxic content generation.

Key Features

- Red Teaming:

- Generates customized attacks using language models.

- Targets specific risks in your system.

- Identifies vulnerabilities like prompt injections and data leaks.

- Guardrails:

- Helps tailor jailbreaks to your guardrails.

- Model Security:

- Ensures secure model usage in your AI applications.

- Evaluations:

- Evaluates the performance and security of your AI models.

Why Choose Promptfoo?

- Find Vulnerabilities You Care About: Promptfoo helps you discover vulnerabilities specific to your industry, company, and application.

- Battle-Tested at Enterprise Scale: Adopted by numerous Fortune 500 companies and embraced by a large open-source community.

- Security-First, Developer-Friendly: Offers a command-line interface with live reloads and caching. It requires no SDKs, cloud dependencies, or logins.

- Flexible Deployment: You can get started in minutes with the CLI tool or opt for managed cloud or on-premises enterprise solutions.

How to use Promptfoo?

To get started with Promptfoo, you can use the command-line interface (CLI). The CLI tool allows for quick setup and testing. For more advanced features and support, you can choose managed cloud or on-premises enterprise solutions.

Here is command to set up red teaming:

npx promptfoo@latest redteam setup

Who is Promptfoo for?

Promptfoo is designed for:

- Developers: Securing AI applications and ensuring the reliability of LLMs.

- Enterprises: Protecting against AI vulnerabilities and ensuring compliance.

- Security Teams: Implementing AI red-teaming and evaluations.

Community and Support

Promptfoo has a vibrant open-source community of over 200,000 developers. It provides extensive documentation, release notes, and a blog to help users stay informed and get the most out of the tool.

Conclusion

Promptfoo is a comprehensive tool for securing AI applications, trusted by a large community and numerous enterprises. By focusing on customized attacks and providing a security-first approach, Promptfoo helps developers find vulnerabilities, maximize output quality, and ensure the reliability of their AI systems. Whether you're a developer or part of a large enterprise, Promptfoo offers the features and flexibility you need to secure your AI applications effectively.

Best Alternative Tools to "Promptfoo"

Secure your AI systems with Mindgard's automated red teaming and security testing. Identify and resolve AI-specific risks, ensuring robust AI models and applications.

Lakera is an AI-native security platform that helps enterprises accelerate GenAI initiatives by providing real-time threat detection, prompt attack prevention, and data leakage protection.

Learn Prompting offers comprehensive prompt engineering courses, covering ChatGPT, LLMs, and AI security, trusted by millions worldwide. Start learning for free!

Robust Intelligence is an AI application security platform that automates the evaluation and protection of AI models, data, and applications. It helps enterprises secure AI and safety, decouple AI development from security, and protect against evolving threats.

Kindo is an AI-native terminal designed for technical operations, integrating security, development, and IT engineering into a single hub. It offers AI automation with a DevSecOps-specific LLM and features like incident response automation and compliance automation.

WhyLabs provides AI observability, LLM security, and model monitoring. Guardrail Generative AI applications in real-time to mitigate risks.

Secure your LLM API keys with Backmesh, an open-source backend. Prevent leaks, control access, and implement rate limits to reduce LLM API costs.

Roo Code is an open-source AI-powered coding assistant for VS Code, featuring AI agents for multi-file editing, debugging, and architecture. It supports various models, ensures privacy, and customizes to your workflow for efficient development.

Langtail is a low-code platform for testing and debugging AI apps with confidence. Test LLM prompts with real-world data, catch bugs, and ensure AI security. Try it for free!

Langtrace is an open-source observability and evaluations platform designed to improve the performance and security of AI agents. Track vital metrics, evaluate performance, and ensure enterprise-grade security for your LLM applications.

Polygraf AI is an enterprise-grade AI security platform offering AI content detection, data-privacy redaction, and secure LLM governance. It operates on zero-trust principles and is deployable on-premises.

Superagent provides runtime protection for AI agents with purpose-trained models. It guards against attacks, verifies outputs, and redacts sensitive data in real time, ensuring security and compliance.

Securiti Data Command Center™ is a unified platform for Data+AI intelligence, controls, and orchestration across hybrid multicloud, enabling secure data and AI usage through security, governance, privacy, and compliance.

Anycode AI provides autonomous AI solutions for engineering teams, automating data mapping, code security, and legacy system conversion, accelerating development and enhancing team productivity.