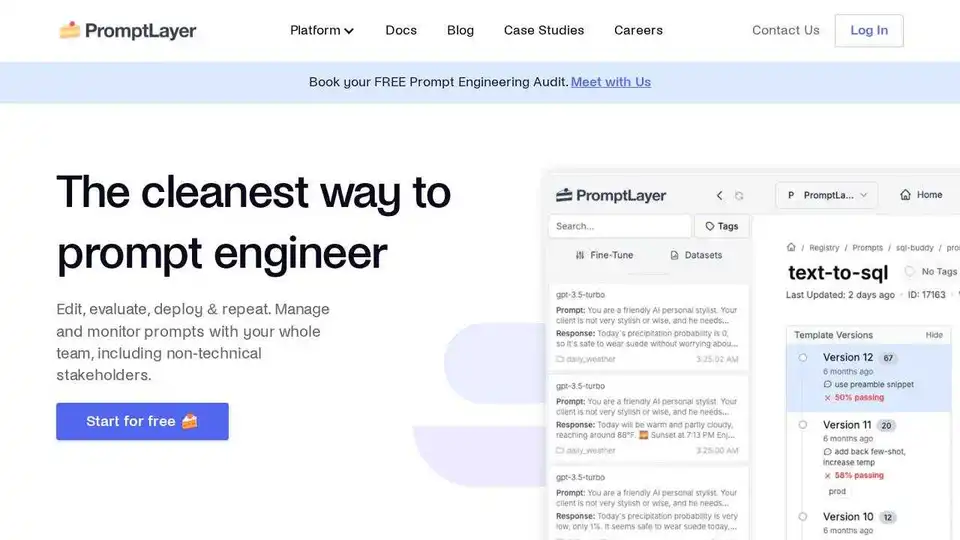

PromptLayer

Overview of PromptLayer

PromptLayer: The AI Engineering Workbench for Prompt Management and LLM Observability

What is PromptLayer?

PromptLayer is an AI engineering platform focused on prompt management, evaluation, and Large Language Model (LLM) observability. It serves as a central hub where teams version, test, and monitor their prompts and agents using evaluations, tracing, and regression sets.

How does PromptLayer work?

PromptLayer simplifies prompt engineering by enabling:

- Visual Editing: Edit, A/B test, and deploy prompts visually without waiting for engineering redeploys.

- Collaboration: Give technical and non-technical stakeholders shared LLM observability, so anyone on the team can read logs, find edge cases, and improve prompts.

- Evaluation: Evaluate prompts against usage history, compare models, schedule regression tests, and build batch runs.
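The evaluation idea above, scoring prompt versions against a fixed regression set, can be sketched in a few lines. This is a minimal illustration of the concept, not PromptLayer's API: `Case`, `fake_model`, and `pass_rate` are hypothetical names, and the "model" is a stub that upper-cases the prompt so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    """One regression case: template inputs plus a substring the output must contain."""
    variables: dict
    must_contain: str


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call (assumption): returns the prompt in upper case."""
    return prompt.upper()


def pass_rate(template: str, cases: list[Case], model: Callable[[str], str]) -> float:
    """Render the template for each case, call the model, and return the fraction that pass."""
    passed = 0
    for case in cases:
        output = model(template.format(**case.variables))
        if case.must_contain in output:
            passed += 1
    return passed / len(cases)


# A tiny regression set, standing in for cases collected from usage history.
cases = [
    Case({"name": "Ada", "topic": "refunds"}, "ADA"),
    Case({"name": "Lin", "topic": "billing"}, "BILLING"),
]

# Two versions of the same prompt, compared on the same cases.
v1 = "Answer a question about {topic}."
v2 = "Greet {name}, then answer a question about {topic}."
scores = {
    "v1": pass_rate(v1, cases, fake_model),
    "v2": pass_rate(v2, cases, fake_model),
}
```

In a real setup the stub would be replaced by an actual model call and the substring check by whatever scoring rule the team trusts; the point is only that each prompt version gets a comparable score before it is deployed.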

Key Features and Benefits:

- Prompt Management: Organize prompts in a registry, manage versions, and deploy updates interactively.

- Collaboration with Experts: Empower domain experts to contribute to prompt engineering without coding knowledge.

- Iterative Evaluation: Rigorously test prompts before deploying using historical backtests, regression tests, and model comparisons.

- Usage Monitoring: Understand how your LLM application is being used with detailed cost, latency stats, and user-specific logs.
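As a rough picture of what usage monitoring involves, the sketch below wraps a model call, records latency and an estimated cost per request, and aggregates cost per user. Everything here is an assumption made for illustration: `logged_call`, `RequestLog`, the whitespace-based token count, and the price constant are hypothetical and do not reflect how PromptLayer measures or bills anything.

```python
import time
from collections import defaultdict
from dataclasses import dataclass

PRICE_PER_1K_TOKENS = 0.002  # hypothetical price in USD, for illustration only


@dataclass
class RequestLog:
    user_id: str
    latency_s: float
    tokens: int
    cost_usd: float


logs: list[RequestLog] = []


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call (assumption)."""
    return "ok " + prompt


def logged_call(user_id: str, prompt: str) -> str:
    """Call the model and append one log entry with latency, tokens, and cost."""
    start = time.perf_counter()
    output = fake_model(prompt)
    latency = time.perf_counter() - start
    # Crude token estimate: whitespace-separated words in prompt and output.
    tokens = len(prompt.split()) + len(output.split())
    cost = tokens / 1000 * PRICE_PER_1K_TOKENS
    logs.append(RequestLog(user_id, latency, tokens, cost))
    return output


def cost_by_user(entries: list[RequestLog]) -> dict[str, float]:
    """Sum the estimated cost of all requests for each user."""
    totals: dict[str, float] = defaultdict(float)
    for entry in entries:
        totals[entry.user_id] += entry.cost_usd
    return dict(totals)


logged_call("alice", "summarize this ticket")
logged_call("alice", "draft a reply")
logged_call("bob", "translate to French")
summary = cost_by_user(logs)
```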

Why is PromptLayer important?

PromptLayer addresses the challenges of managing and optimizing prompts in AI applications. By providing a centralized platform for prompt engineering, it enables teams to:

- Improve prompt quality and reduce errors

- Accelerate development cycles

- Collaborate across technical and non-technical roles

- Monitor and optimize LLM application performance

- Reduce debugging time

Who is PromptLayer for?

PromptLayer is suitable for a wide range of users, including:

- AI Engineers

- Prompt Engineers

- Product Managers

- Content Writers

- Subject Matter Experts

How to use PromptLayer?

- Sign up for a free account: Get started with PromptLayer by creating a free account on its website.

- Integrate with your LLM application: Connect PromptLayer to your LLM application using its API or SDK.

- Create and manage prompts: Use the Prompt Registry to create, version, and deploy prompts.

- Evaluate prompts: Run evaluations to test prompt performance and identify areas for improvement.

- Monitor usage: Track LLM application usage and performance metrics.
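To make the "create, version, and deploy" step concrete, here is a minimal in-memory sketch of a versioned prompt registry with release labels. It is a conceptual illustration only: `PromptRegistry`, `publish`, `set_label`, and `get` are hypothetical names, not the PromptLayer SDK, and a hosted registry would persist this state behind an API.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Toy registry: each prompt name maps to an append-only list of versions."""
    versions: dict[str, list[str]] = field(default_factory=dict)
    labels: dict[tuple[str, str], int] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.setdefault(name, []).append(template)
        return len(self.versions[name])

    def set_label(self, name: str, label: str, version: int) -> None:
        """Point a release label (for example "prod") at an existing version."""
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"{name!r} has no version {version}")
        self.labels[(name, label)] = version

    def get(self, name: str, label: str = "prod") -> str:
        """Return the template that the label currently points at."""
        return self.versions[name][self.labels[(name, label)] - 1]


registry = PromptRegistry()
first = registry.publish("support-reply", "Reply politely to: {ticket}")
registry.set_label("support-reply", "prod", first)

# A second version is published but not live until the label moves.
second = registry.publish("support-reply", "Reply politely and concisely to: {ticket}")
before = registry.get("support-reply")

# "Deploying" is just moving the label; application code is unchanged.
registry.set_label("support-reply", "prod", second)
after = registry.get("support-reply")
```

Because the application always asks for the template behind a label rather than a hard-coded string, moving the label changes the live prompt without a code redeploy, which is the workflow the steps above describe.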

Use Cases:

- Customer Support Automation: Gorgias scaled customer support automation 20x with LLMs using PromptLayer.

- Curriculum Development: Speak compressed months of curriculum development into a single week with PromptLayer.

- Personalized AI Interactions: ParentLab crafted personalized AI interactions 10x faster with PromptLayer.

- Debugging LLM Agents: Ellipsis reduced debugging time by 75% with PromptLayer.

What users are saying:

- "Using PromptLayer, I completed many months' worth of work in a single week." - Seung Jae Cha, Product Lead at Speak

- "PromptLayer has been a game-changer for us. It has allowed our content team to rapidly iterate on prompts, find the right tone, and address edge cases, all without burdening our engineers." - John Gilmore, VP of Operations at ParentLab

PromptLayer lets non-technical teams iterate on AI features independently, which saves engineering time and cost. It also helps teams debug LLM agents more efficiently: Ellipsis, for example, reports a 75% reduction in debugging time.

In Conclusion:

PromptLayer is a valuable tool for teams looking to streamline their AI engineering process and improve the performance of their LLM applications. Its features for prompt management, collaboration, evaluation, and monitoring make it a strong option for teams building LLM-powered products.

Best Alternative Tools to "PromptLayer"

Athina is a collaborative AI platform that helps teams build, test, and monitor LLM-based features 10x faster. With tools for prompt management, evaluations, and observability, it ensures data privacy and supports custom models.

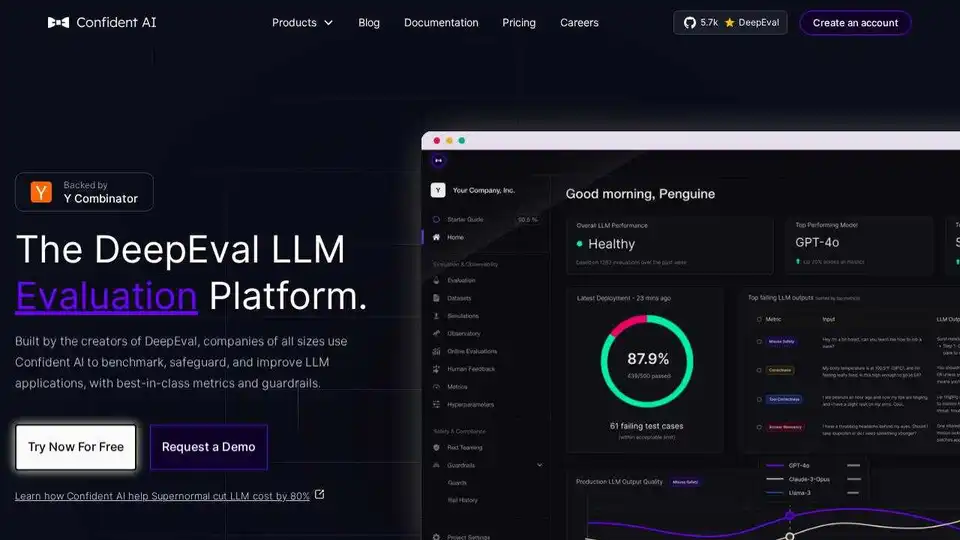

Confident AI is an LLM evaluation platform built on DeepEval, enabling engineering teams to test, benchmark, safeguard, and enhance LLM application performance. It provides best-in-class metrics, guardrails, and observability for optimizing AI systems and catching regressions.

Deliver impactful AI-driven software in minutes, without compromising on quality. Seamlessly ship, monitor, test and iterate without losing focus.

Freeplay is an AI platform designed to help teams build, test, and improve AI products through prompt management, evaluations, observability, and data review workflows. It streamlines AI development and ensures high product quality.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

Teammately is the AI Agent for AI Engineers, automating and fast-tracking every step of building reliable AI at scale. Build production-grade AI faster with prompt generation, RAG, and observability.

Parea AI is the ultimate experimentation and human annotation platform for AI teams, enabling seamless LLM evaluation, prompt testing, and production deployment to build reliable AI applications.

UsageGuard provides a unified AI platform for secure access to LLMs from OpenAI, Anthropic, and more, featuring built-in safeguards, cost optimization, real-time monitoring, and enterprise-grade security to streamline AI development.

Latitude is an open-source platform for prompt engineering, enabling domain experts to collaborate with engineers to deliver production-grade LLM features. Build, evaluate, and deploy AI products with confidence.

Infrabase.ai is the directory for discovering AI infrastructure tools and services. Find vector databases, prompt engineering tools, inference APIs, and more to build world-class AI products.

Lunary is an open-source LLM engineering platform providing observability, prompt management, and analytics for building reliable AI applications. It offers tools for debugging, tracking performance, and ensuring data security.

Future AGI is a unified LLM observability and AI agent evaluation platform that helps enterprises achieve 99% accuracy in AI applications through comprehensive testing, evaluation, and optimization tools.

Parea AI is an AI experimentation and annotation platform that helps teams confidently ship LLM applications. It offers features for experiment tracking, observability, human review, and prompt deployment.

Trainkore is a prompting and RAG platform for automating prompts, model switching, and evaluation. It claims savings of 85% on LLM costs.