Spice.ai

Overview of Spice.ai

Spice.ai: The Open Source Data and AI Inference Engine

What is Spice.ai?

Spice.ai is an open-source data and AI inference engine for building AI applications. It provides building blocks for data-driven AI solutions: SQL query federation, acceleration, search, and retrieval grounded in enterprise data.

Key Features and Benefits

- SQL Query Federation: Join data across various sources, including databases, data warehouses, data lakes, and APIs, using a single SQL query. This eliminates data silos and simplifies data access.

- Data Acceleration: Materialize datasets in memory or in embedded databases such as DuckDB or SQLite to get fast, low-latency, high-concurrency query performance.

- AI Model Deployment & Serving: Load and serve local models such as Llama 3, or use hosted AI platforms such as OpenAI, xAI, and NVIDIA NIM. Spice.ai provides purpose-built LLM tools for search and retrieval.

- Composable Building Blocks: Incrementally adopt building blocks for data access, acceleration, search, retrieval, and AI inference. Compose them to develop apps and agents grounded in data.

- Real-Time Data Updates: Keep accelerated datasets up to date in real time with Change Data Capture (CDC) using Debezium.

- Multi-Cloud and High Availability: Deploy the Spice Cloud Platform in a multi-cloud environment with high availability, security, performance, and compliance, backed by an enterprise-level SLA and support. It is SOC 2 Type II certified.

- Ecosystem Compatibility: Seamlessly integrate with popular data science libraries such as NumPy, Pandas, TensorFlow, and PyTorch.
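To make the CDC bullet concrete, here is a minimal Python sketch of how a stream of change events can keep an accelerated copy of a table in sync. It assumes Debezium's event envelope, where `op` is `c` (create), `r` (snapshot read), `u` (update), `d` (delete) or `t` (truncate) and `before`/`after` carry the row images. The `orders` table, its fields, and the `apply_change` helper are hypothetical, and this is not a description of how Spice.ai implements CDC internally.

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_change(table: Dict[Any, Row], event: Dict[str, Any], key: str = "id") -> None:
    """Apply one Debezium-style change event to `table` (a dict keyed by primary key).

    Hypothetical helper for illustration only.
    """
    op = event["op"]
    if op in ("c", "r", "u"):
        # Create, snapshot read, or update: upsert the new row image.
        row = event["after"]
        table[row[key]] = row
    elif op == "d":
        # Delete: remove the row identified by the old row image.
        table.pop(event["before"][key], None)
    elif op == "t":
        # Truncate: drop every materialized row.
        table.clear()
    else:
        raise ValueError(f"unsupported op: {op!r}")


# Hypothetical change stream for an `orders` table.
events = [
    {"op": "c", "before": None, "after": {"id": 1, "status": "new"}},
    {"op": "c", "before": None, "after": {"id": 2, "status": "new"}},
    {"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "shipped"}},
    {"op": "d", "before": {"id": 2, "status": "new"}, "after": None},
]

orders: Dict[Any, Row] = {}
for event in events:
    apply_change(orders, event)

print(orders)  # {1: {'id': 1, 'status': 'shipped'}}
```

Applying events in order is what keeps the local copy consistent with the source; an out-of-order update and delete for the same key would leave a different result.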

How Does Spice.ai Work?

Spice.ai is built around a portable, fast single-node query engine written in Rust on top of Apache Arrow and DataFusion. It lets you query data from many sources and accelerate selected datasets locally for low-latency access.
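Apache Arrow stores data column by column rather than row by row, which is part of why an Arrow-based engine suits analytical queries. The pure-Python sketch below only models that idea and does not use Arrow or DataFusion: converting rows into per-field lists lets a filtered aggregate read just the columns it needs. The sample table and the `to_columns` helper are hypothetical.

```python
from typing import Dict, List

# Row-oriented layout: each record keeps all of its fields together.
rows = [
    {"region": "us", "amount": 120.0, "customer": "a"},
    {"region": "eu", "amount": 80.0, "customer": "b"},
    {"region": "us", "amount": 40.0, "customer": "c"},
]


def to_columns(records: List[dict]) -> Dict[str, list]:
    """Hypothetical helper: pivot row dicts into one list per field (columnar layout)."""
    columns: Dict[str, list] = {name: [] for name in records[0]}
    for record in records:
        for name, value in record.items():
            columns[name].append(value)
    return columns


columns = to_columns(rows)

# Equivalent of: SELECT SUM(amount) WHERE region = 'us'.
# Only the `region` and `amount` columns are scanned; `customer` is never touched.
total_us = sum(
    amount
    for region, amount in zip(columns["region"], columns["amount"])
    if region == "us"
)
print(total_us)  # 160.0
```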

How to Use Spice.ai

- Connect to Your Data: Spice.ai offers connectors for over 30 modern and legacy sources, including Databricks, MySQL, and CSV files on FTP servers. It supports industry-standard protocols such as ODBC, JDBC, ADBC, HTTP, and Apache Arrow Flight (gRPC).

- Query Your Data: Use SQL to query data across multiple sources. With SQL query federation, a single query can join tables that live in different sources.

- Accelerate Your Data: Materialize your data in memory or in embedded databases such as DuckDB or SQLite, so queries run against a local copy and return with lower latency.

- Deploy and Serve AI Models: Load and serve local models or utilize hosted AI platforms. Spice.ai provides purpose-built LLM tools for search and retrieval.
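The connect, accelerate, and query steps can be sketched with Python's built-in `sqlite3` module, which here stands in for both a source database and an embedded accelerator (SQLite being one of the accelerator options named above). This illustrates the pattern only and does not use Spice.ai's API: the `customers` and `orders` tables, the CSV data, and the choice to copy both sources into one local engine before joining are assumptions made for the example.

```python
import csv
import io
import sqlite3

# Source 1 (hypothetical): an operational database holding customers.
source_db = sqlite3.connect(":memory:")
source_db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
source_db.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])

# Source 2 (hypothetical): a CSV export of orders, e.g. a file fetched from an FTP server.
orders_csv = "order_id,customer_id,amount\n10,1,250\n11,2,75\n12,1,100\n"

# Accelerator: a separate in-memory engine that materializes both sources locally.
accel = sqlite3.connect(":memory:")
accel.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
accel.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    source_db.execute("SELECT id, name FROM customers"),
)
accel.execute("CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
reader = csv.DictReader(io.StringIO(orders_csv))
accel.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    ((int(r["order_id"]), int(r["customer_id"]), float(r["amount"])) for r in reader),
)

# A single SQL query joins data that originated in two different sources.
result = accel.execute(
    """
    SELECT c.name, SUM(o.amount) AS total
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    GROUP BY c.name
    ORDER BY total DESC
    """
).fetchall()
print(result)  # [('Acme', 350.0), ('Globex', 75.0)]
```

Copying both sources into one local engine is what lets the join run without further round trips to either source; the trade-off is that the local copy must be refreshed to stay current.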

Who is Spice.ai For?

Spice.ai is designed for developers, data scientists, and AI engineers who want to build data-driven AI applications. It is suitable for a wide range of use cases, including:

- AI-powered applications: Build intelligent applications that leverage data from various sources.

- Real-time analytics: Analyze data in real-time to gain insights and make informed decisions.

- Machine learning: Train and deploy machine learning models with ease.

Why is Spice.ai Important?

Spice.ai simplifies building data-driven AI applications by providing a comprehensive set of tools and features. It reduces the need to build complex data pipelines and infrastructure, letting developers focus on the AI solutions themselves.

What is the Spice Cloud Platform?

The Spice Cloud Platform is a managed service that provides a multi-cloud, high-availability environment for running Spice.ai. It includes features such as enterprise-grade security, performance, and compliance, backed by an enterprise-level SLA and support.

Get Started with Spice.ai

Getting started with Spice.ai is easy. You can start for free and query with just three lines of code.

Use Cases

- Building data-driven applications

- Implementing real-time data analytics

- Powering machine learning workflows

With its composable building blocks and enterprise-grade infrastructure, Spice.ai empowers developers to create the next generation of intelligent applications.

Spice.ai Pricing

Spice.ai offers a variety of pricing plans to meet the needs of different users. You can start with a free plan and upgrade to a paid plan as your needs grow.

In Conclusion

Spice.ai is an open-source data and AI inference engine that simplifies building data-driven AI applications. Whether you are building AI-powered applications, performing real-time analytics, or training machine learning models, it provides SQL query federation, data acceleration, and AI model deployment as composable building blocks, and it is easy to get started with. If you are a developer looking for a way to accelerate your data and build AI applications on it, Spice.ai may be a good fit.

Best Alternative Tools to "Spice.ai"

Nexa SDK enables fast and private on-device AI inference for LLMs, multimodal, ASR & TTS models. Deploy to mobile, PC, automotive & IoT devices with production-ready performance across NPU, GPU & CPU.

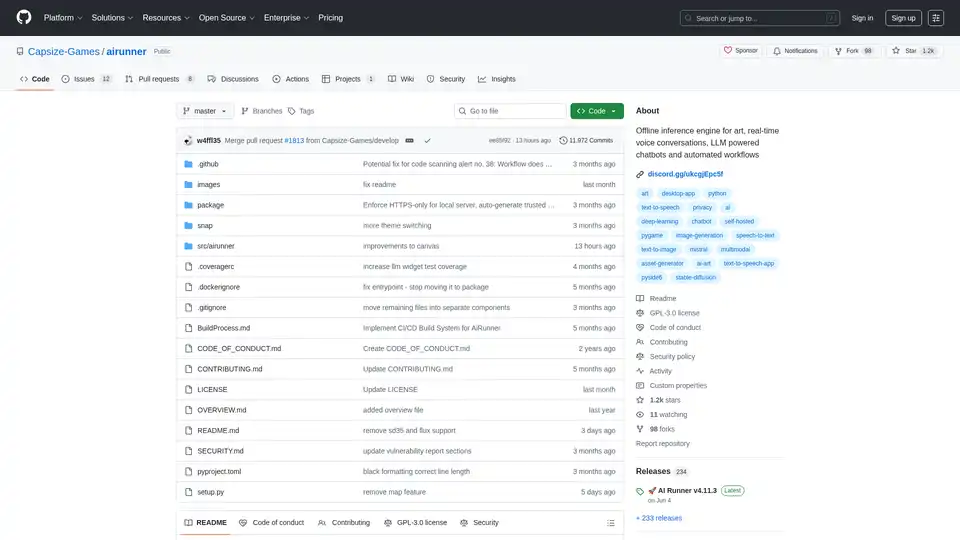

AI Runner is an offline AI inference engine for art, real-time voice conversations, LLM-powered chatbots, and automated workflows. Run image generation, voice chat, and more locally!

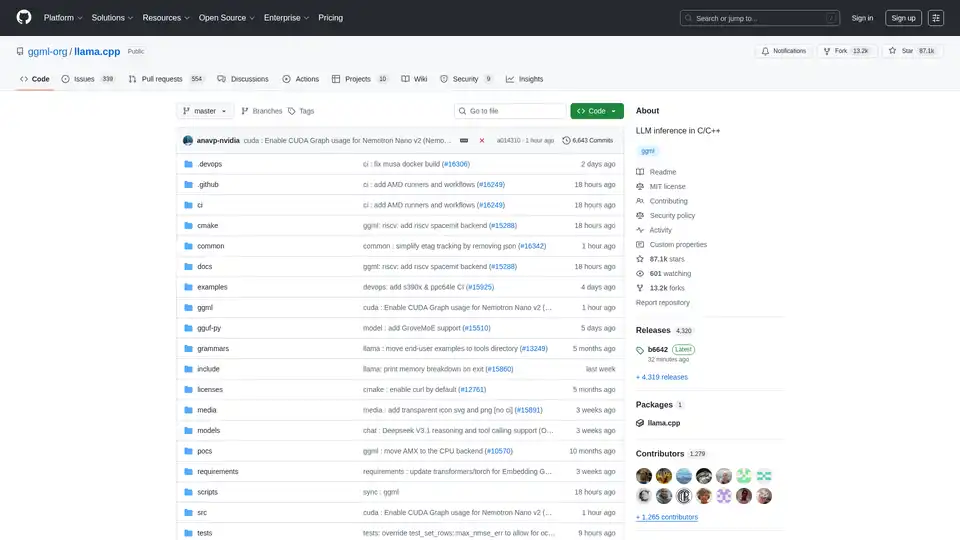

Enable efficient LLM inference with llama.cpp, a C/C++ library optimized for diverse hardware, supporting quantization, CUDA, and GGUF models. Ideal for local and cloud deployment.

Explore NVIDIA NIM APIs for optimized inference and deployment of leading AI models. Build enterprise generative AI applications with serverless APIs or self-host on your GPU infrastructure.

Discover Fast3D, the AI-powered solution for generating high-quality 3D models from text and images in seconds. Explore features, applications in gaming, and future trends.

AniPortrait is an open-source AI framework for generating photorealistic portrait animations driven by audio or video inputs. It supports self-driven, face reenactment, and audio-driven modes for high-quality video synthesis.

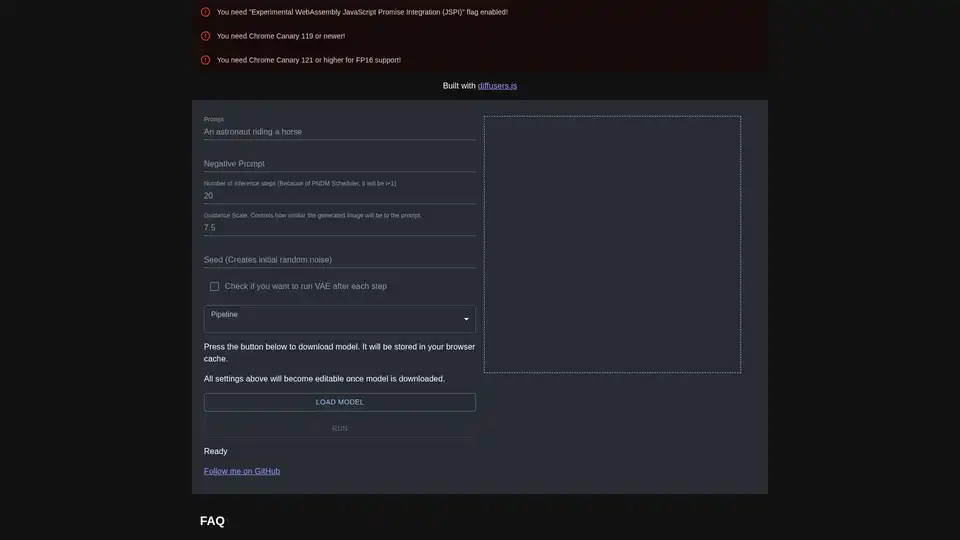

diffusers.js is a JavaScript library enabling Stable Diffusion AI image generation in the browser via WebGPU. Download models, input prompts, and create stunning visuals directly in Chrome Canary with customizable settings like guidance scale and inference steps.

Explore Qwen3 Coder, Alibaba Cloud's advanced AI code generation model. Learn about its features, performance benchmarks, and how to use this powerful, open-source tool for development.

Online AI manga translator with OCR for vertical/horizontal text. Batch processing and layout-preserving typesetting for manga and doujin.

MindSpore is an open-source AI framework developed by Huawei, supporting all-scenario deep learning training and inference. It features automatic differentiation, distributed training, and flexible deployment.

Cirrascale AI Innovation Cloud accelerates AI development, training, and inference workloads. Test and deploy on leading AI accelerators with high throughput and low latency.

Unlock SUFY's free CDN and scalable object storage for seamless data management and media AI. Get 100GB CDN/mo & 3000 minutes of video transcoding free.

LM-Kit provides enterprise-grade toolkits for local AI agent integration, combining speed, privacy, and reliability to power next-generation applications. Leverage local LLMs for faster, cost-efficient, and secure AI solutions.

DeepSeek v3 is a large language model with 671B parameters, offering API access, a research paper, and an online demo.