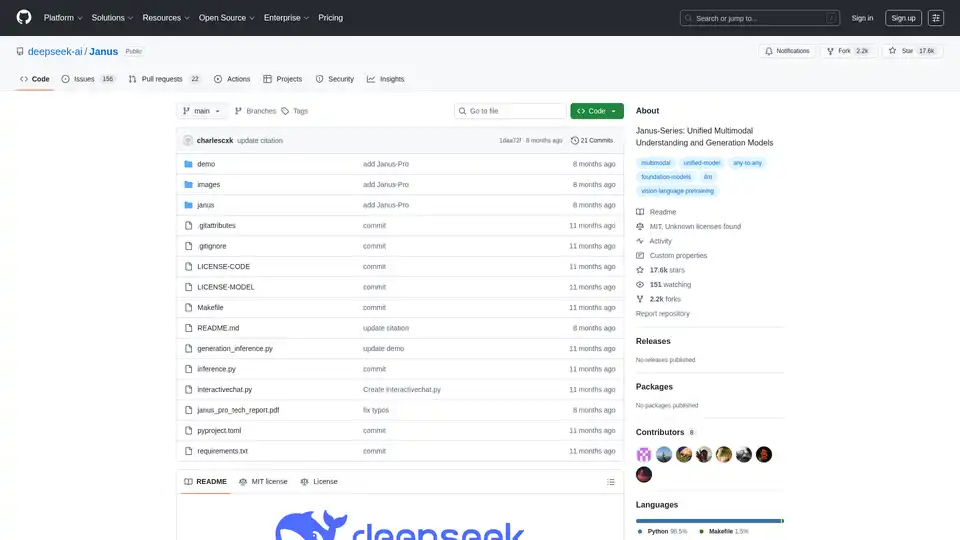

Janus-Series

Overview of Janus-Series

Janus-Series: Unified Multimodal Understanding and Generation Models

Janus-Series is a set of unified multimodal models developed by DeepSeek AI, designed for both understanding and generating content across different modalities. The series includes Janus, Janus-Pro, and JanusFlow. Janus is the original model, and Janus-Pro and JanusFlow extend its design with their own features and improvements.

What is Janus-Series?

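Janus-Series takes a novel approach to multimodal learning by unifying understanding and generation within a single framework. Earlier unified models typically relied on one visual encoder for both tasks, even though understanding needs high-level semantic features while generation needs fine-grained visual detail. The Janus design is meant to remove that conflict and to improve flexibility and performance across a range of tasks.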

How does Janus-Series work?

The core innovation of Janus is to decouple visual encoding into separate pathways, one for understanding and one for generation, while still processing both with a single, unified transformer architecture. This decoupling alleviates the conflict between the two roles a shared visual encoder would otherwise have to play, leading to improved overall performance.
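To make the decoupling concrete, here is a toy Python sketch, assuming an 8-pixel grayscale "image" and a hidden size of 4. The Janus paper describes a semantic encoder (SigLIP) for the understanding path and a VQ image tokenizer for the generation path; here both are replaced by trivial, hypothetical stand-ins (`understanding_encoder`, `generation_tokenizer`, `embed_codes`), and the shared transformer is reduced to a single causal self-attention step with no learned weights. Only the routing is meant to carry over: two separate visual pathways feeding one shared sequence model.

```python
import math
from typing import List

Vector = List[float]
HIDDEN = 4          # hypothetical hidden size of the shared transformer
CODEBOOK_SIZE = 8   # hypothetical size of the generation codebook


def understanding_encoder(pixels: List[float]) -> List[Vector]:
    """Understanding path (stand-in for a semantic encoder): continuous patch features."""
    assert len(pixels) % HIDDEN == 0, "toy encoder needs whole patches"
    return [pixels[i:i + HIDDEN] for i in range(0, len(pixels), HIDDEN)]


def generation_tokenizer(pixels: List[float]) -> List[int]:
    """Generation path (stand-in for a VQ tokenizer): one discrete code ID per pixel."""
    return [min(int(p * CODEBOOK_SIZE), CODEBOOK_SIZE - 1) for p in pixels]


def embed_codes(codes: List[int]) -> List[Vector]:
    """Generation adaptor: map each code ID to a HIDDEN-sized embedding (toy lookup table)."""
    table = [[(c + 1) / CODEBOOK_SIZE] * HIDDEN for c in range(CODEBOOK_SIZE)]
    return [table[c] for c in codes]


def shared_causal_attention(seq: List[Vector]) -> List[Vector]:
    """Single shared backbone: one causal self-attention step with identity projections."""
    out = []
    for i, query in enumerate(seq):
        visible = seq[: i + 1]  # causal mask: attend only to positions <= i
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(HIDDEN) for key in visible]
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]  # numerically stable softmax
        total = sum(weights)
        out.append([sum(w * v[d] for w, v in zip(weights, visible)) / total for d in range(HIDDEN)])
    return out


image = [0.1, 0.5, 0.9, 0.3, 0.7, 0.2, 0.4, 0.8]
und_hidden = shared_causal_attention(understanding_encoder(image))               # 2 positions
gen_hidden = shared_causal_attention(embed_codes(generation_tokenizer(image)))  # 8 positions
print(len(und_hidden), len(gen_hidden))  # 2 8
```

Neither path has to compromise here: the understanding path can keep continuous, semantic features, the generation path keeps discrete codes that a decoder could turn back into pixels, and the same backbone consumes both.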

Key Components

- Janus: The foundational model that decouples visual encoding for unified multimodal understanding and generation.

- Janus-Pro: An advanced version of Janus that incorporates an optimized training strategy, expanded training data, and scaling to larger model sizes. Janus-Pro achieves significant improvements in both multimodal understanding and text-to-image instruction-following capabilities.

- JanusFlow: Integrates autoregressive language models with rectified flow, a state-of-the-art method in generative modeling. It achieves comparable or superior performance to specialized models while outperforming existing unified approaches.
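Rectified flow trains a velocity field along straight lines between noise and data, then generates samples by integrating that field with an ODE solver. The following is a generic, minimal Python sketch of that idea, not JanusFlow's code. It assumes the convention t = 0 for noise and t = 1 for data, and it replaces the trained velocity predictor with a hypothetical analytic `oracle_velocity` for a single data point, so the training objective and the sampler can be checked without any learning.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]
Velocity = Callable[[Vector, float], Vector]


def rf_training_pair(x0: Vector, x1: Vector, t: float) -> Tuple[Vector, Vector]:
    """Point on the straight path from noise x0 to data x1, and its target velocity x1 - x0."""
    xt = [(1 - t) * a + t * b for a, b in zip(x0, x1)]
    target = [b - a for a, b in zip(x0, x1)]
    return xt, target


def rf_loss(velocity: Velocity, x0: Vector, x1: Vector, t: float) -> float:
    """Mean squared error between predicted and target velocity (the rectified-flow objective)."""
    xt, target = rf_training_pair(x0, x1, t)
    pred = velocity(xt, t)
    return sum((p - g) ** 2 for p, g in zip(pred, target)) / len(target)


def euler_sample(velocity: Velocity, x0: Vector, steps: int = 20) -> Vector:
    """Integrate dx/dt = velocity(x, t) from t = 0 (noise) to t = 1 (data) with Euler steps."""
    x, dt = list(x0), 1.0 / steps
    for k in range(steps):
        t = k * dt
        x = [xi + dt * vi for xi, vi in zip(x, velocity(x, t))]
    return x


# Hypothetical stand-in for a trained network: the exact velocity field when the
# "data distribution" is a single point. A real model would learn this from data.
DATA_POINT = [2.0, -1.0, 0.5]


def oracle_velocity(x: Vector, t: float) -> Vector:
    return [(d - xi) / (1 - t) for xi, d in zip(x, DATA_POINT)]


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in DATA_POINT]
print(rf_loss(oracle_velocity, noise, DATA_POINT, t=0.3))  # close to 0.0
print(euler_sample(oracle_velocity, noise))                # close to DATA_POINT
```

Because the learned paths are straight, even a coarse Euler integration lands close to the data; that property is the main appeal of rectified flow for fast sampling.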

Key Features and Capabilities

- Unified Multimodal Understanding and Generation: The models can understand and generate content across different modalities, such as text and images.

- Decoupled Visual Encoding: Separates visual encoding pathways to improve the model's ability to both understand and generate visual content.

- Text-to-Image Generation: Can generate images from textual descriptions, with Janus-Pro enhancing the stability and quality of text-to-image generation.

- Autoregressive Framework: Uses an autoregressive framework to unify multimodal understanding and generation.

- Integration with Rectified Flow (JanusFlow): JanusFlow integrates autoregressive language models with rectified flow for improved generative modeling.
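In an autoregressive framework, every output, whether a text token or an image code, is produced one token at a time and conditioned on everything before it. The sketch below shows that loop in Python under loudly hypothetical assumptions: a toy shared vocabulary, a `BEGIN_OF_IMAGE` marker, and a `toy_model` function standing in for the transformer's prediction heads. It is not the Janus API.

```python
from typing import Callable, List

Token = int
NextToken = Callable[[List[Token]], Token]

# Hypothetical shared vocabulary: text IDs, a begin-of-image marker, then image-code IDs.
TEXT_VOCAB = 100
BEGIN_OF_IMAGE = 100
IMAGE_OFFSET = 101
CODEBOOK_SIZE = 16


def generate(next_token: NextToken, prompt: List[Token], num_new: int) -> List[Token]:
    """Greedy autoregressive decoding: each new token is conditioned on the whole prefix."""
    seq = list(prompt)
    for _ in range(num_new):
        seq.append(next_token(seq))
    return seq[len(prompt):]


def toy_model(seq: List[Token]) -> Token:
    """Stand-in for the transformer: after BEGIN_OF_IMAGE it emits image-code tokens,
    otherwise text tokens. A real model would pick from its predicted distribution."""
    if BEGIN_OF_IMAGE in seq:
        return IMAGE_OFFSET + sum(seq) % CODEBOOK_SIZE
    return sum(seq) % TEXT_VOCAB


# Text-to-image: a text prompt followed by the begin-of-image marker.
image_tokens = generate(toy_model, [5, 17, 42, BEGIN_OF_IMAGE], num_new=6)
# Text output: without the marker, the same loop produces text tokens.
text_tokens = generate(toy_model, [5, 17, 42], num_new=3)
print(image_tokens, text_tokens)
```

The same `generate` loop serves both cases; only the prompt and the token range change, which is what lets one transformer cover understanding and generation.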

How to use Janus-Series?

- Model Download: Download the desired model from the Hugging Face links provided in the documentation. Available models include Janus-1.3B, JanusFlow-1.3B, Janus-Pro-1B, and Janus-Pro-7B.

- Quick Start: Follow the quick start guides provided for each model to begin using it.

- Inference: Use the provided scripts (e.g., `inference.py`, `generation_inference.py`, `interactivechat.py`) to perform inference tasks.
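A small Python helper for the download step, offered as a sketch. The repository IDs are assumptions based on the model names listed here under the `deepseek-ai` organization on Hugging Face; check them against the links in the official documentation. `snapshot_download` is the `huggingface_hub` function for fetching a whole repository, while `repo_id_for`, `model_page_url`, and `download` are hypothetical helper names.

```python
from typing import Dict, Optional

# Assumed Hugging Face repository IDs for the models listed above.
JANUS_REPOS: Dict[str, str] = {
    "Janus-1.3B": "deepseek-ai/Janus-1.3B",
    "JanusFlow-1.3B": "deepseek-ai/JanusFlow-1.3B",
    "Janus-Pro-1B": "deepseek-ai/Janus-Pro-1B",
    "Janus-Pro-7B": "deepseek-ai/Janus-Pro-7B",
}


def repo_id_for(model_name: str) -> str:
    """Return the Hugging Face repo ID, failing loudly on unknown names."""
    try:
        return JANUS_REPOS[model_name]
    except KeyError:
        raise ValueError(f"unknown model {model_name!r}; choose from {sorted(JANUS_REPOS)}") from None


def model_page_url(model_name: str) -> str:
    """Build the model page URL on huggingface.co."""
    return f"https://huggingface.co/{repo_id_for(model_name)}"


def download(model_name: str, local_dir: Optional[str] = None) -> str:
    """Download all files of the model repo and return the local path.
    Requires `pip install huggingface_hub` and network access."""
    from huggingface_hub import snapshot_download  # imported lazily so the rest runs without it
    return snapshot_download(repo_id=repo_id_for(model_name), local_dir=local_dir)


print(model_page_url("Janus-Pro-7B"))  # https://huggingface.co/deepseek-ai/Janus-Pro-7B
```

This only fetches the weights and configuration files; running the model still requires the repository's own code and the quick start instructions for the chosen model.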

Why choose Janus-Series?

- High Flexibility: The decoupled visual encoding enhances the framework's flexibility, allowing it to adapt to different tasks and modalities.

- Strong Performance: Janus models match or exceed the performance of task-specific models on various benchmarks.

- Unified Architecture: The use of a single, unified transformer architecture simplifies the model and improves its efficiency.

Who is Janus-Series for?

- Researchers: Ideal for researchers working on multimodal learning, computer vision, and natural language processing.

- Developers: Suitable for developers building applications that require multimodal understanding and generation capabilities.

- AI Practitioners: Useful for AI practitioners looking for a versatile and high-performing multimodal model.

Use cases

- Text-to-image generation: Create images from textual descriptions, useful for content creation and design.

- Visual understanding: Analyze and interpret visual content, enabling applications in image recognition and understanding.

- Multimodal understanding and generation: Understand and generate content across different modalities within one model, opening opportunities for advanced AI applications.

License

The code repository is licensed under the MIT License. The use of Janus models is subject to the DeepSeek Model License. Commercial usage is permitted under these terms.

Best Alternative Tools to "Janus-Series"

InstaLM: Chat with Claude, GPT, Gemini & more directly on your macOS & iOS device. Enjoy voice interaction, file attachments & custom assistants with a privacy-first design.

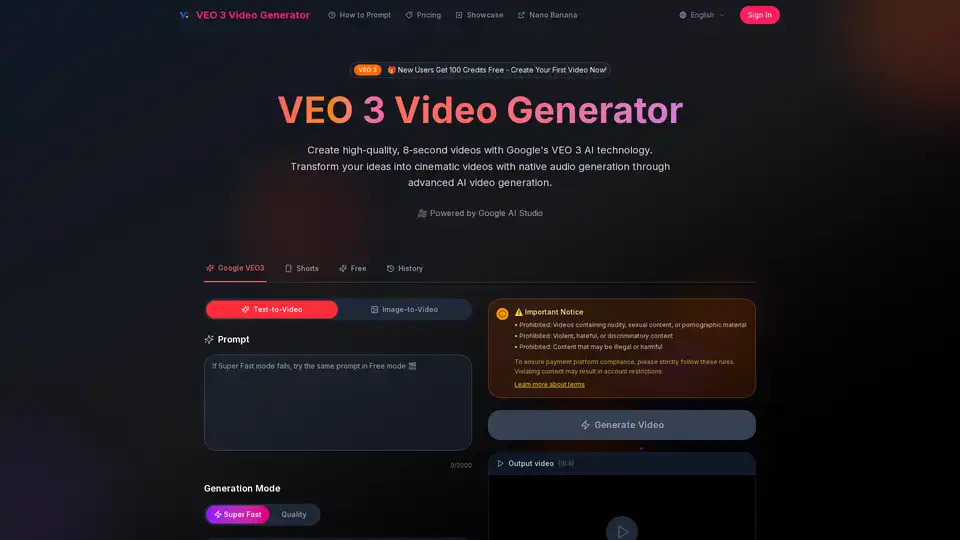

Create high-quality 8-second videos with VEO 3 Video Generator, Google's advanced AI video generator. Generate cinematic videos with native audio through Google AI Studio.

PIA is an all-in-one AI platform integrating over 100 advanced models including GPT-4.5, Claude 4, Gemini 2.5 for chat, image generation, video creation, and AI search. Fast, accurate, and accessible anytime.

BAGEL is an open-source unified multimodal AI model that combines image generation, editing, and understanding capabilities with advanced reasoning, offering photorealistic outputs and comparable performance to proprietary systems like GPT-4o.

Wan 2.5 is an open-source AI platform for native multimodal video generation with synchronized audio. Create stunning 1080p videos from text or images.

SiliconFlow is a lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

Gemini-powered AI image editor excelling in character consistency, text-based editing & multi-image fusion with world knowledge understanding.

Magicflow AI is a generative AI image experimentation workspace that enables bulk image generation, evaluation, and team collaboration for perfecting Stable Diffusion outputs.

Nano Banana is the best AI image editor. Transform any image with simple text prompts using Google's Gemini Flash model. New users get free credits for advanced editing like photo restoration and virtual makeup.

Seedream 4.0 is a cutting-edge AI image generator powered by ByteDance, offering ultra-fast 1.8-second generation, 4K resolution, batch processing, and advanced editing for creators and businesses seeking photorealistic visuals.

Discover Flux Kontext Image Generator, an advanced AI tool for transforming ideas into stunning images with natural language editing, fast results, and consistent styles. Ideal for creators seeking precise visual modifications.

Discover Nano Banana AI, powered by Gemini 2.5 Flash Image, for free online image generation and editing. Create consistent characters, edit photos effortlessly, and explore styles like anime or 3D conversions at NanoBananaArt.ai.

Turn your ideas into videos in seconds with Media.io's AI Video Generator. Just enter text or upload an image to create stunning, watermark-free videos—100% free.

Molmo AI is a powerful open-source multimodal AI model designed for rich interactions with physical and virtual environments, outperforming larger models in benchmarks.