Cloudflare Workers AI

Overview of Cloudflare Workers AI

What is Cloudflare Workers AI?

Cloudflare Workers AI is a serverless AI platform that allows developers to run machine learning inference tasks directly on Cloudflare's global network. This means you can deploy AI applications closer to your users, resulting in faster performance and lower latency. It eliminates the need for complex infrastructure setup, making it easier and more cost-effective to integrate AI into your applications.

How does Cloudflare Workers AI work?

Cloudflare Workers AI builds on the Cloudflare Workers platform to execute AI models on NVIDIA GPUs distributed across Cloudflare's global network, so you can run generative AI tasks without provisioning or managing GPU infrastructure yourself.

Key features include:

- Serverless AI on GPUs: Run AI models on a global network of NVIDIA GPUs.

- Pre-trained Models: Choose from a catalog of popular models like Llama-2, Whisper, and ResNet50.

- Global Availability: Access AI models from Workers, Pages, or any service via a REST API.

- Vector Database Integration: Generate embeddings with Workers AI and store and query them in Vectorize for enhanced AI workflows.

- AI Gateway: Improve reliability and scalability with caching, rate limiting, and analytics.

- Multi-Cloud Training: Use R2 for cost-effective, egress-free data storage for multi-cloud training architectures.
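
The REST API access mentioned in the list above can be sketched roughly as follows. This is a minimal illustration rather than an official client: the helper names `buildWorkersAiRequest` and `runModel` are hypothetical, the account ID and API token are placeholders you would supply, and the `{ prompt }` input shape is an assumption that fits text-generation models and may differ for other model types.

```typescript
// Shape of the HTTP call we are going to make (names are illustrative).
interface RestCall {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build the request for the Workers AI "run" endpoint without sending it.
// accountId, apiToken and model are supplied by the caller (placeholders here).
function buildWorkersAiRequest(
  accountId: string,
  apiToken: string,
  model: string,
  inputs: Record<string, unknown>,
): RestCall {
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/run/${model}`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(inputs),
    },
  };
}

// Send the request and return the parsed JSON response.
async function runModel(
  accountId: string,
  apiToken: string,
  model: string,
  inputs: Record<string, unknown>,
): Promise<unknown> {
  const { url, init } = buildWorkersAiRequest(accountId, apiToken, model, inputs);
  const res = await fetch(url, init);
  if (!res.ok) {
    throw new Error(`Workers AI request failed with status ${res.status}`);
  }
  return res.json();
}
```

Splitting request construction from sending keeps the URL and headers easy to inspect or log before any network call is made.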

How to use Cloudflare Workers AI?

- Select a Model: Choose a pre-trained model from the Workers AI catalog based on your needs (e.g., text generation, image classification, speech recognition).

- Integrate with Workers: Use the Workers AI API within your Cloudflare Worker to send data to the model and receive the inference results.

- Deploy Globally: Cloudflare automatically distributes your AI application across its global network, ensuring low latency for users worldwide.
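
The three steps above might look roughly like this inside a Worker. Treat it as a sketch under stated assumptions: the binding is assumed to be named `AI` in your Wrangler configuration, the `AiBinding` interface is a simplified stand-in for the real binding type, and the model identifier is one example from the catalog that you would replace with the model you selected.

```typescript
// Simplified stand-in for the Workers AI binding type (an assumption made
// so the sketch type-checks on its own).
interface AiBinding {
  run(model: string, inputs: Record<string, unknown>): Promise<unknown>;
}

interface Env {
  AI: AiBinding; // assumes a binding called "AI" is configured for the Worker
}

// Step 1: select a model (example identifier; substitute your own choice).
const MODEL = "@cf/meta/llama-2-7b-chat-int8";

const worker = {
  // Step 2: send data to the model from inside the Worker and return the result.
  async fetch(request: Request, env: Env): Promise<Response> {
    if (request.method !== "POST") {
      return new Response("Send a POST request with a JSON body", { status: 405 });
    }

    let body: { prompt?: unknown };
    try {
      body = (await request.json()) as { prompt?: unknown };
    } catch {
      return new Response("Body must be valid JSON", { status: 400 });
    }
    if (typeof body.prompt !== "string" || body.prompt.length === 0) {
      return new Response("Missing 'prompt' field", { status: 400 });
    }

    const result = await env.AI.run(MODEL, { prompt: body.prompt });
    return new Response(JSON.stringify(result), {
      headers: { "content-type": "application/json" },
    });
  },
};

// Step 3: deploying this module (for example with Wrangler) publishes it to
// Cloudflare's network; no region selection appears in the code itself.
export default worker;
```

Because the handler only depends on the `run` method of the binding, it can be exercised locally with a fake `env` object before deployment.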

Example Use Cases:

- Image Classification: Identify objects or scenes in images.

- Sentiment Analysis: Determine the sentiment (positive, negative, neutral) of text.

- Speech Recognition: Convert audio to text.

- Text Generation: Generate text in a variety of creative formats.

- Translation: Translate text from one language to another.

Why choose Cloudflare Workers AI?

- Low Latency: Run AI models closer to your users for faster response times.

- Scalability: Cloudflare's global network automatically scales to handle increased demand.

- Cost-Effectiveness: Pay-as-you-go pricing eliminates the need for upfront infrastructure investments. The platform provides 10,000 free Neurons per day before billing starts (Neurons are the unit Cloudflare uses to meter model usage).

- Ease of Use: Simplified setup and integration with other Cloudflare services streamline AI development.

- Enhanced Control and Protection: AI Gateway adds a layer of control and protection for LLM applications, letting you apply rate limits and caching to protect back-end infrastructure and avoid surprise bills.

- Cost-Effective Training: Egress-free storage with R2 makes multi-cloud architectures for training LLMs affordable.
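
The free daily allowance mentioned in the list above lends itself to a small worked calculation. The sketch below assumes billing applies only to usage beyond the 10,000 free daily neurons; the function names are hypothetical, and the price per 1,000 neurons is deliberately left as a parameter because the text does not state it.

```typescript
// Free daily allowance taken from the text above.
const FREE_NEURONS_PER_DAY = 10000;

// Neurons that count toward billing once the free allowance is used up.
function billableNeurons(
  usedToday: number,
  freeAllowance: number = FREE_NEURONS_PER_DAY,
): number {
  return Math.max(0, usedToday - freeAllowance);
}

// Estimated cost for one day. pricePerThousandNeurons is supplied by the
// caller; check the current pricing page for the real figure.
function estimateDailyCost(
  usedToday: number,
  pricePerThousandNeurons: number,
): number {
  return (billableNeurons(usedToday) / 1000) * pricePerThousandNeurons;
}
```

For example, a day with 8,000 neurons of usage stays within the free allowance, while a day with 25,000 leaves 15,000 billable neurons, which are then multiplied by whatever per-thousand price applies.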

Who is Cloudflare Workers AI for?

Cloudflare Workers AI is ideal for developers and businesses looking to:

- Integrate AI into their web applications without managing complex infrastructure.

- Deliver fast, low-latency AI experiences to users around the world.

- Scale their AI applications efficiently and cost-effectively.

Customer Success:

Bhanu Teja Pachipulusu, Founder of SiteGPT.ai, states:

"We use Cloudflare for everything – storage, cache, queues, and most importantly for training data and deploying the app on the edge, so I can ensure the product is reliable and fast. It's also been the most affordable option, with competitors costing more for a single day's worth of requests than Cloudflare costs in a month."

Best Alternative Tools to "Cloudflare Workers AI"

Baseten is a platform for deploying and scaling AI models in production. It offers performant model runtimes, cross-cloud high availability, and seamless developer workflows, powered by the Baseten Inference Stack.

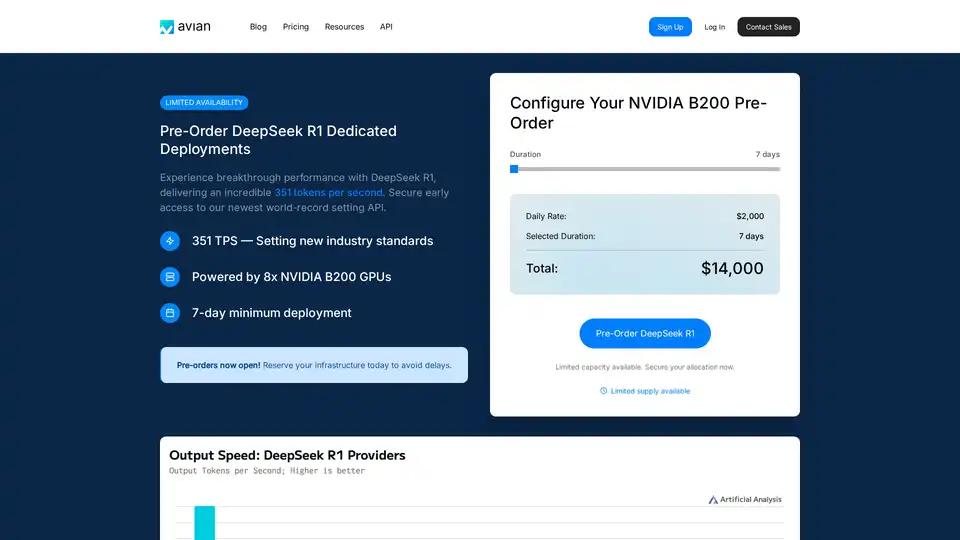

Avian API offers the fastest AI inference for open source LLMs, achieving 351 TPS on DeepSeek R1. Deploy any HuggingFace LLM at 3-10x speed with an OpenAI-compatible API. Enterprise-grade performance and privacy.

AIMLAPI provides access to 300+ AI models through a single, low-latency API. Save up to 80% compared to OpenAI with fast, cost-efficient AI solutions for machine learning.

Float16.cloud offers serverless GPUs for AI development. Deploy models instantly on H100 GPUs with pay-per-use pricing. Ideal for LLMs, fine-tuning, and training.

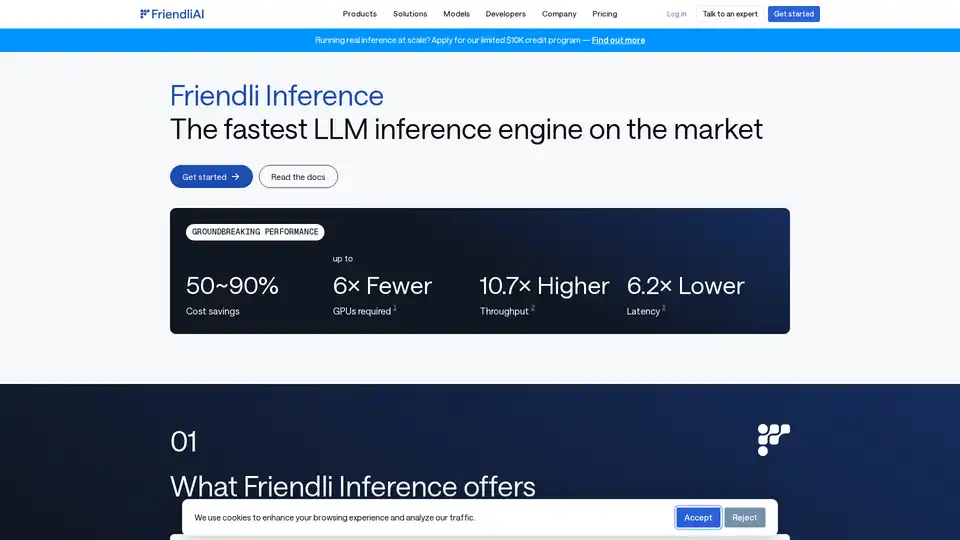

Friendli Inference is the fastest LLM inference engine, optimized for speed and cost-effectiveness, slashing GPU costs by 50-90% while delivering high throughput and low latency.

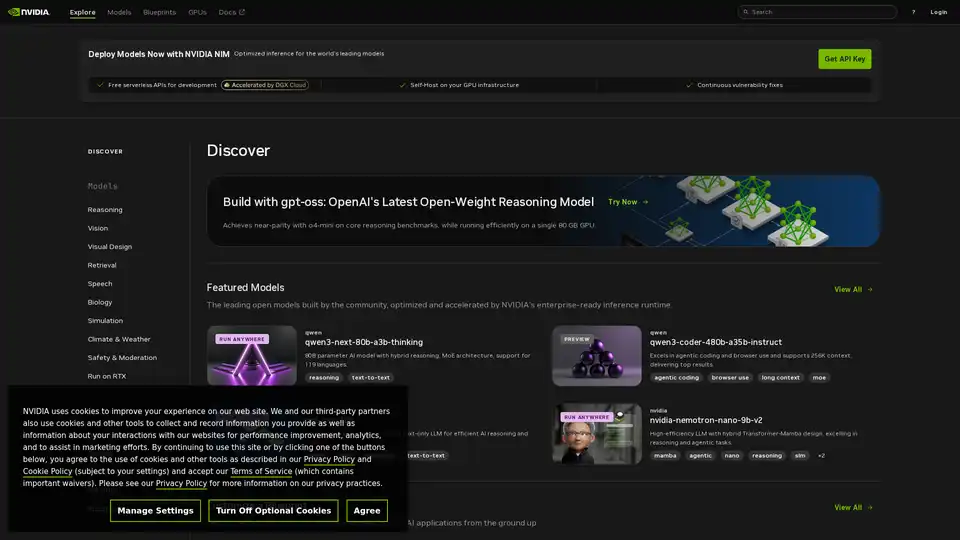

NVIDIA NIM APIs provide optimized inference and deployment of leading AI models. Build enterprise generative AI applications with serverless APIs or self-host on your GPU infrastructure.

Runpod is an AI cloud platform that simplifies AI model building and deployment. It offers on-demand GPU resources, serverless scaling, and enterprise-grade uptime for AI developers.

GPUX is a serverless GPU inference platform that enables 1-second cold starts for AI models like StableDiffusionXL, ESRGAN, and AlpacaLLM with optimized performance and P2P capabilities.

SiliconFlow is a lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

Inferless offers blazing fast serverless GPU inference for deploying ML models. It provides scalable, effortless custom machine learning model deployment with features like automatic scaling, dynamic batching, and enterprise security.

EnergeticAI is TensorFlow.js optimized for serverless functions, offering fast cold-start, small module size, and pre-trained models, making AI accessible in Node.js apps up to 67x faster.

Simplify AI deployment with Synexa. Run powerful AI models instantly with just one line of code. Fast, stable, and developer-friendly serverless AI API platform.

Modal is a serverless platform for AI and data teams. Run CPU, GPU, and data-intensive compute at scale with your own code.

Instantly run any Llama model from HuggingFace without setting up any servers. More than 11,900 models are available, starting at $10/month for unlimited access.