Modal

Overview of Modal

What is Modal?

Modal is a serverless platform designed for AI and data teams, offering high-performance infrastructure for AI inference, large-scale batch processing, and sandboxed code execution. It simplifies deploying and scaling AI applications, allowing developers to focus on code rather than infrastructure management.

Key Features:

- Serverless AI Inference: Scale AI inference seamlessly without managing servers.

- Large-Scale Batch Processing: Run high-volume workloads efficiently with serverless pricing.

- Sandboxed Code Execution: Execute code securely and flexibly.

- Sub-Second Container Starts: Iterate quickly in the cloud with a Rust-based container stack.

- Zero Config Files: Define hardware and container requirements next to Python functions.

- Autoscaling to Hundreds of GPUs: Handle unpredictable load by scaling out to hundreds of GPUs.

- Fast Cold Boots: Load gigabytes of weights in seconds with an optimized container file system.

- Flexible Environments: Bring your own image or build one in Python.

- Seamless Integrations: Export function logs to Datadog or OpenTelemetry-compatible providers.

- Data Storage: Manage data effortlessly with network volumes, key-value stores, and queues.

- Job Scheduling: Set up cron jobs, retries, and timeouts to control workloads.

- Web Endpoints: Deploy and manage web services with custom domains and secure HTTPS endpoints.

- Built-In Debugging: Troubleshoot efficiently with the modal shell command.

How to use Modal?

Using Modal involves defining hardware and container requirements next to your Python functions. The platform automatically scales resources based on the workload. It supports deploying custom models, popular frameworks, and anything that can run in a container.

- Define your functions: Specify the hardware and container requirements.

- Deploy your code: Modal handles the deployment and scaling.

- Integrate with other services: Connect to Datadog, S3, and other third-party services and cloud providers.
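
The pattern described above, declaring hardware and container requirements right beside the function they apply to, can be sketched in plain Python. The snippet below is a toy model only: the `requires` decorator, the `REGISTRY` dict, and the `gpu`, `image`, and `timeout_s` parameter names are hypothetical and are not Modal's SDK. Consult Modal's documentation for the real decorators and options.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class Requirements:
    """Resources a function asks for (hypothetical fields, for illustration)."""
    gpu: Optional[str] = None          # e.g. "A100"; None means CPU only
    image: str = "python:3.11-slim"    # container image to run in
    timeout_s: int = 300               # maximum run time in seconds


# Maps function name -> declared requirements. A platform could read this
# at deploy time instead of parsing a separate config file.
REGISTRY: Dict[str, Requirements] = {}


def requires(**kwargs) -> Callable:
    """Attach resource requirements to a function and record them."""
    reqs = Requirements(**kwargs)

    def decorator(fn: Callable) -> Callable:
        REGISTRY[fn.__name__] = reqs
        fn.requirements = reqs  # keep the declaration next to the code
        return fn

    return decorator


@requires(gpu="A100", timeout_s=600)
def embed(text: str) -> int:
    # Stand-in for real model inference: just counts words.
    return len(text.split())


@requires()  # defaults: CPU only, slim Python image
def clean(text: str) -> str:
    return text.strip().lower()
```

After this module is imported, `REGISTRY["embed"]` holds the GPU and timeout declared next to `embed`, while the function itself remains an ordinary Python callable. Keeping the declaration in the decorator is what removes the need for a separate configuration file.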

Why is Modal important?

Modal is important because it simplifies deploying and scaling AI applications. Developers no longer need to manage complex infrastructure and can focus on building and iterating on their models and code. The platform's serverless pricing model also helps reduce costs by charging only for the resources consumed.
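
To make the pay-for-what-you-use point concrete, here is a rough back-of-the-envelope comparison between per-second billing and an always-on machine. Every number in it (the hourly rate, the request volume, the time per request) is an assumption chosen for illustration, not a Modal price, and the sketch ignores cold starts and idle time before scale-down.

```python
def per_second_cost(busy_seconds: float, rate_per_second: float) -> float:
    """Cost when billed only for the seconds spent running."""
    return busy_seconds * rate_per_second


def always_on_cost(hours_provisioned: float, rate_per_hour: float) -> float:
    """Cost of a machine that is billed whether or not it is busy."""
    return hours_provisioned * rate_per_hour


# Hypothetical numbers, not a price quote.
RATE_PER_HOUR = 3.60                      # assumed GPU price in USD per hour
RATE_PER_SECOND = RATE_PER_HOUR / 3600    # same price expressed per second

requests_per_day = 2_000
seconds_per_request = 1.5
busy_seconds = requests_per_day * seconds_per_request  # 3,000 s of real work

serverless = per_second_cost(busy_seconds, RATE_PER_SECOND)
dedicated = always_on_cost(24, RATE_PER_HOUR)

print(f"pay-per-use: ${serverless:.2f}/day")
print(f"always-on:   ${dedicated:.2f}/day")
```

Under these assumptions the workload is busy for 50 minutes a day, so usage-based billing comes to about $3.00 per day against $86.40 for a GPU kept running around the clock. The gap narrows as utilization rises, which is why the saving depends on how bursty the workload is.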

Where can I use Modal?

Modal can be used in a variety of applications, including:

- Generative AI inference

- Fine-tuning and training

- Batch processing

- Web services

- Job queues

- Data analysis

Best way to get started with Modal?

The best way to get started with Modal is to visit its website and work through the documentation and examples. Modal offers a free plan with $30 of compute per month, which is enough to experiment with the platform. The community Slack channel is also a good place to get help and connect with other users.
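
How far the $30 monthly credit goes depends on the hardware you pick. The short sketch below converts the credit into compute hours for a few resource tiers. The tier names and hourly rates are placeholders invented for this example, so substitute the current figures from Modal's pricing page.

```python
MONTHLY_CREDIT_USD = 30.0  # free-plan credit stated above

# Hypothetical hourly rates in USD, for illustration only.
ASSUMED_RATES = {
    "cpu-only": 0.25,
    "mid-range GPU": 1.00,
    "high-end GPU": 4.00,
}


def compute_hours(credit_usd: float, rate_per_hour: float) -> float:
    """Hours of compute a credit buys at a given hourly rate."""
    if rate_per_hour <= 0:
        raise ValueError("rate_per_hour must be positive")
    return credit_usd / rate_per_hour


hours_by_tier = {
    tier: compute_hours(MONTHLY_CREDIT_USD, rate)
    for tier, rate in ASSUMED_RATES.items()
}

for tier, hours in hours_by_tier.items():
    print(f"{tier:>14}: {hours:.1f} h/month")
```

Because billing is usage-based, those hours only count down while code is actually running, so occasional experiments stretch the credit further than the raw figures suggest.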

Best Alternative Tools to "Modal"

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. It features H100 GPUs, per-second billing, and Python execution.

Runpod is an all-in-one AI cloud platform that simplifies building and deploying AI models. It offers on-demand GPU resources, serverless autoscaling, and enterprise-grade uptime, so developers can train, fine-tune, and deploy models on powerful compute.

Novita AI provides 200+ Model APIs, custom deployment, GPU Instances, and Serverless GPUs. Scale AI, optimize performance, and innovate with ease and efficiency.

Cerebrium is a serverless AI infrastructure platform simplifying the deployment of real-time AI applications with low latency, zero DevOps, and per-second billing. Deploy LLMs and vision models globally.

GPUX is a serverless GPU inference platform that enables 1-second cold starts for AI models like StableDiffusionXL, ESRGAN, and AlpacaLLM with optimized performance and P2P capabilities.

Inferless offers fast serverless GPU inference for deploying ML models. It provides scalable deployment of custom machine learning models, with features such as automatic scaling, dynamic batching, and enterprise security.

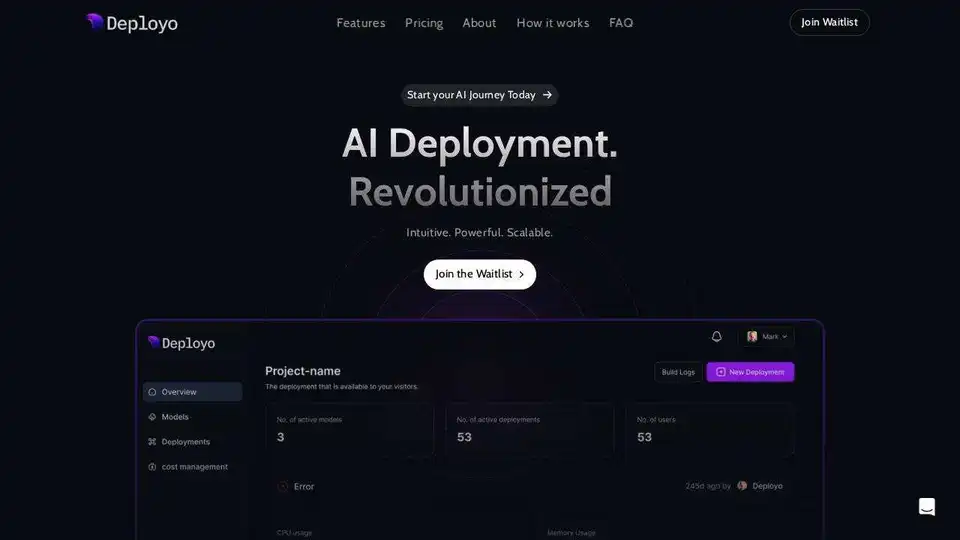

Deployo simplifies AI model deployment, turning models into production-ready applications in minutes. It provides cloud-agnostic, secure, and scalable AI infrastructure for machine learning workflows.

fal.ai aims to be an easy and cost-effective way to use generative AI. It lets you integrate generative media models through a free API and offers 600+ production-ready models.

The AI Engineer Pack by ElevenLabs is the AI starter pack every developer needs. It offers exclusive access to premium AI tools and services like ElevenLabs, Mistral, and Perplexity.

Scade.pro is a comprehensive no-code AI platform that enables users to build AI features, automate workflows, and integrate 1500+ AI models without technical skills.

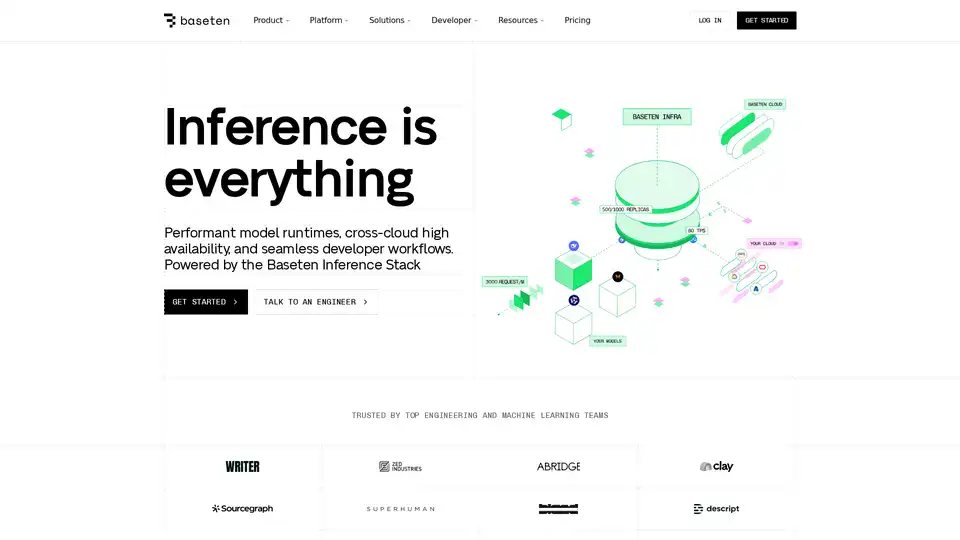

Baseten is a platform for deploying and scaling AI models in production. It offers performant model runtimes, cross-cloud high availability, and seamless developer workflows, powered by the Baseten Inference Stack.

Instantly run any Llama model from HuggingFace without setting up any servers. More than 11,900 models are available, starting at $10/month for unlimited access.

Explore NVIDIA NIM APIs for optimized inference and deployment of leading AI models. Build enterprise generative AI applications with serverless APIs or self-host on your GPU infrastructure.