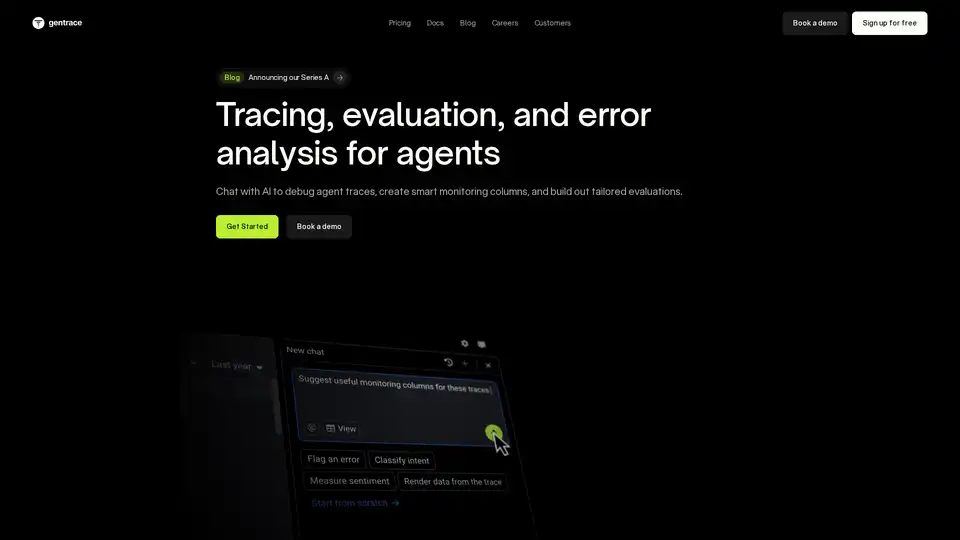

Gentrace

Overview of Gentrace

What is Gentrace?

Gentrace is a platform designed to help teams trace, evaluate, and analyze errors in their AI agents and Large Language Model (LLM) applications. It provides tools for debugging agent traces, automating evaluations, and building tailored evaluations to ensure reliable AI output.

How Does Gentrace Work?

Gentrace works by providing a tracing SDK that integrates with common agent frameworks and LLMs. This SDK allows developers to trace AI agent interactions, capture data, and send it to the Gentrace platform for analysis. The platform then provides tools for:

- Chatting with traces: An AI-powered chat interface inspired by Cursor, allowing users to ask questions about their agent traces and identify issues.

- Generating custom monitoring code: AI-driven generation of monitoring code tailored to specific use cases, which automatically runs on every trace to spot issues.

- Setting up notifications: Instant notifications for critical AI issues and regular quality summaries to track AI performance.

- Evaluating agent performance: Tools for lightweight evaluations that deliver immediate insights, and comprehensive testing workflows.
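In practice, "tracing" means recording each step of an agent run (its input, its output or error, and how long it took) so the platform can analyze it later. The sketch below is a minimal, hypothetical in-memory tracer in plain TypeScript. `Span`, `Tracer`, and `trace` are illustrative names, not the Gentrace SDK's API, and a real SDK would export spans to the platform instead of keeping them in an array.

```typescript
// Hypothetical record of one traced step in an agent run
interface Span {
  name: string;
  input: unknown;
  output?: unknown;
  error?: string;
  durationMs: number;
}

// Minimal in-memory tracer (illustrative only, not the Gentrace SDK)
class Tracer {
  readonly spans: Span[] = [];

  // Runs an async step and records its input, output or error, and latency
  async trace<T>(name: string, input: unknown, fn: () => Promise<T>): Promise<T> {
    const start = Date.now();
    try {
      const output = await fn();
      this.spans.push({ name, input, output, durationMs: Date.now() - start });
      return output;
    } catch (err) {
      this.spans.push({
        name,
        input,
        error: err instanceof Error ? err.message : String(err),
        durationMs: Date.now() - start,
      });
      throw err; // re-throw so the caller still sees the failure
    }
  }
}

// Example: trace a stubbed model call (no real LLM is contacted)
const tracer = new Tracer();
const fakeLlm = async (prompt: string): Promise<string> => `echo: ${prompt}`;
void tracer
  .trace('llm-call', { prompt: 'hi' }, () => fakeLlm('hi'))
  .then(() => console.log(tracer.spans)); // one span whose output is "echo: hi"
```

Recording failures as spans (and then re-throwing) matters for error analysis: the failed step shows up in the trace without changing how the agent itself behaves.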

Key Features of Gentrace

- Error Analysis: Identify and fix AI issues using AI-powered chat with full context of agent traces.

- Custom Monitoring: Generate custom monitoring code tailored to specific use cases to automatically spot issues in AI output.

- Easy Installation: A minimal tracing SDK for quickly tracing AI agents, compatible with common agent frameworks and LLMs.

- Evaluation Tools: Catch regressions before they go live with powerful evaluation tools and a lightweight setup.

- Flexible Dataset Management: Store test data in Gentrace or your codebase and organize it efficiently with built-in management tools.

- Enterprise-Ready Security: Enterprise-level security through SOC 2 Type II and ISO 27001 compliance, with options for cloud or self-hosted deployment.
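Custom monitoring code of the kind described above is, in essence, a function that runs against every finished trace and returns any issues it finds. The following sketch assumes a simplified, hypothetical trace shape (`TraceStep`) and an arbitrary 5000 ms latency threshold. It illustrates the idea only and is not code generated by Gentrace.

```typescript
// Hypothetical shape of one step in a finished trace (not Gentrace's schema)
interface TraceStep {
  name: string;
  output: string;
  error?: string;
  durationMs: number;
}

interface Issue {
  step: string;
  reason: string;
}

// A use-case-specific monitor: flags failed steps, empty output, and slow
// steps. The default 5000 ms threshold is an arbitrary example value.
function monitorTrace(steps: TraceStep[], maxDurationMs = 5000): Issue[] {
  const issues: Issue[] = [];
  for (const step of steps) {
    if (step.error !== undefined) {
      issues.push({ step: step.name, reason: `error: ${step.error}` });
    } else if (step.output.trim() === '') {
      issues.push({ step: step.name, reason: 'empty output' });
    }
    if (step.durationMs > maxDurationMs) {
      issues.push({ step: step.name, reason: `slow: ${step.durationMs} ms` });
    }
  }
  return issues;
}

// Example trace in which the final answer is blank
const exampleTrace: TraceStep[] = [
  { name: 'retrieve', output: '3 documents', durationMs: 120 },
  { name: 'answer', output: '   ', durationMs: 900 },
];
console.log(monitorTrace(exampleTrace)); // one issue: "empty output" at step "answer"
```

Because the monitor returns a list rather than throwing, a single trace can surface several problems at once, which suits periodic quality summaries as well as instant alerts.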

How to Use Gentrace

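- Generate API Key: Generate a unique Gentrace API key.

- Install and Authenticate: Install the Gentrace SDK using npm and authenticate it with your API key.

- Initialize in Your Project: Use TypeScript or Python to initialize the SDK and define an LLM interaction. The TypeScript example below runs a single "unit test" evaluation: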

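```typescript
import { init, experiment, evalOnce } from 'gentrace';
import OpenAI from 'openai';

// Initialize the Gentrace SDK with the API key generated earlier
init({ apiKey: process.env.GENTRACE_API_KEY });

const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

// Assumed setup: evalOnce() runs inside an experiment() tied to a Gentrace
// pipeline; GENTRACE_PIPELINE_ID is a placeholder environment variable
await experiment(process.env.GENTRACE_PIPELINE_ID!, async () => {
  // Run a "unit test" evaluation
  await evalOnce('rs-in-strawberry', async () => {
    const response = await openai.chat.completions.create({
      model: 'gpt-4o-mini',
      messages: [
        { role: 'user', content: 'How many rs in strawberry? Return only the number.' },
      ],
    });

    const output = response.choices[0].message.content?.trim();

    // Fail the test case if the model's answer is wrong
    if (output !== '3') {
      throw new Error(`Output is not 3: ${output}`);
    }
  });
});
```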
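The `evalOnce` example checks a single hard-coded case. When test data lives in your codebase, the same pass/fail check can be looped over a small dataset to catch regressions. This sketch deliberately avoids the Gentrace SDK: `TestCase`, `runEvals`, and `stubModel` are hypothetical, and the stub returns canned answers (one of them wrong) in place of a real LLM call.

```typescript
// Hypothetical test case format for data stored in the codebase
interface TestCase {
  name: string;
  prompt: string;
  expected: string;
}

const dataset: TestCase[] = [
  { name: 'rs-in-strawberry', prompt: 'How many rs in strawberry? Return only the number.', expected: '3' },
  { name: 'ss-in-mississippi', prompt: 'How many ss in mississippi? Return only the number.', expected: '4' },
];

type Model = (prompt: string) => Promise<string>;

// Stand-in for an LLM call so the sketch runs offline; the second answer is
// deliberately wrong to show how a failing case is reported
const stubModel: Model = async (prompt) => (prompt.includes('strawberry') ? '3' : '5');

// Runs every case and collects a message for each failure instead of
// stopping at the first one
async function runEvals(model: Model, cases: TestCase[]): Promise<string[]> {
  const failures: string[] = [];
  for (const c of cases) {
    const output = (await model(c.prompt)).trim();
    if (output !== c.expected) {
      failures.push(`${c.name}: expected ${c.expected}, got ${output}`);
    }
  }
  return failures;
}

void runEvals(stubModel, dataset).then((failures) => {
  console.log(failures); // one failure, for "ss-in-mississippi" (stub answers 5, expected 4)
});
```

Collecting every failure in one run, rather than throwing on the first, shows the full extent of a regression after a prompt or model change.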

Why Choose Gentrace?

Gentrace offers several advantages for teams working with AI agents and LLMs:

- Improved Debugging: Gentrace Chat helps quickly identify and fix issues in agent traces.

- Automated Monitoring: Custom monitoring code generation automates the process of spotting issues in AI output.

- Comprehensive Evaluation: Powerful evaluation tools help catch regressions before they go live.

- Enterprise-Level Security: Enterprise-ready security features ensure the safety and compliance of your AI applications.

Who is Gentrace For?

Gentrace is designed for:

- AI Engineers who need to debug and monitor AI agent performance.

- Machine Learning Engineers who build and deploy LLM applications.

- Data Scientists who evaluate and improve AI models.

- Teams building and deploying AI-powered products.

Practical Value of Gentrace

Gentrace provides practical value by:

- Reducing debugging time: By providing AI-powered chat and tracing tools, Gentrace helps developers quickly identify and fix issues in their AI agents.

- Improving AI quality: By automating monitoring and evaluation, Gentrace helps ensure that AI agents are performing as expected.

- Accelerating development: By providing a comprehensive platform for AI agent development, Gentrace helps teams build and deploy AI-powered products more quickly.

User Review

Gentrace was the right product for us because it allowed us to implement our own custom evaluations, which was crucial for our unique use cases. It's dramatically improved our ability to predict the impact of even small changes in our LLM implementations.

Madeline Gilbert, Staff Machine Learning Engineer at Quizlet

Conclusion

Gentrace is a comprehensive platform for tracing, evaluating, and analyzing errors in AI agents and LLM applications. With its powerful debugging tools, automated monitoring, and enterprise-level security features, Gentrace is a valuable tool for teams building and deploying AI-powered products. Whether you're an AI engineer, machine learning engineer, or data scientist, Gentrace can help you build more reliable and effective AI applications.

Best Alternative Tools to "Gentrace"

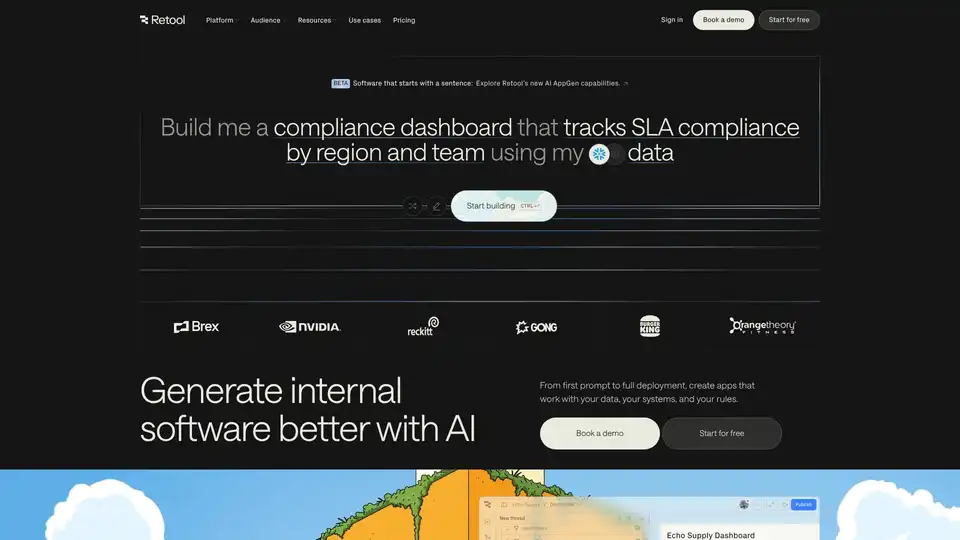

Retool is an AI-powered platform that allows you to build, deploy, and manage internal tools. Connect to any database, API, or LLM and leverage AI throughout your business to streamline processes and make data-driven decisions.

Lunary is an open-source LLM engineering platform providing observability, prompt management, and analytics for building reliable AI applications. It offers tools for debugging, tracking performance, and ensuring data security.

Vivgrid is an AI agent infrastructure platform that helps developers build, observe, evaluate, and deploy AI agents with safety guardrails and low-latency inference. It supports GPT-5, Gemini 2.5 Pro, and DeepSeek-V3.

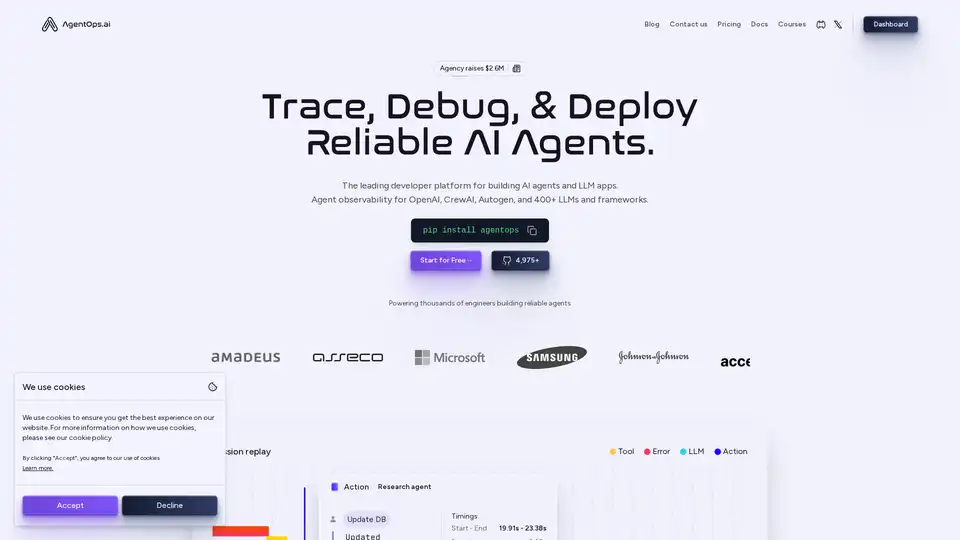

AgentOps is a developer platform for building reliable AI agents and LLM apps. It offers agent observability, time travel debugging, cost tracking, and fine-tuning capabilities.

Code Fundi is an AI-powered coding assistant designed to help developers and teams build software faster. It offers features like AI code generation, debugging, documentation, and real-time monitoring.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

Pydantic AI is a GenAI agent framework in Python, designed for building production-grade applications with Generative AI. Supports various models, offers seamless observability, and ensures type-safe development.

UsageGuard provides a unified AI platform for secure access to LLMs from OpenAI, Anthropic, and more, featuring built-in safeguards, cost optimization, real-time monitoring, and enterprise-grade security to streamline AI development.

Dynamiq is an on-premise platform for building, deploying, and monitoring GenAI applications. Streamline AI development with features like LLM fine-tuning, RAG integration, and observability to cut costs and boost business ROI.

Arize AI provides a unified LLM observability and agent evaluation platform for AI applications, from development to production. Optimize prompts, trace agents, and monitor AI performance in real time.

Keywords AI is an LLM monitoring platform designed for AI startups. It lets you monitor and improve LLM applications with just 2 lines of code, debug, test prompts, visualize logs, and optimize performance.

Elixir is an AI Ops and QA platform designed for monitoring, testing, and debugging AI voice agents. It offers automated testing, call review, and LLM tracing to ensure reliable performance.

Refact.ai is an open-source AI agent for software development that automates coding, debugging, and testing with full context awareness. It positions itself as an open-source alternative to Cursor and Copilot.

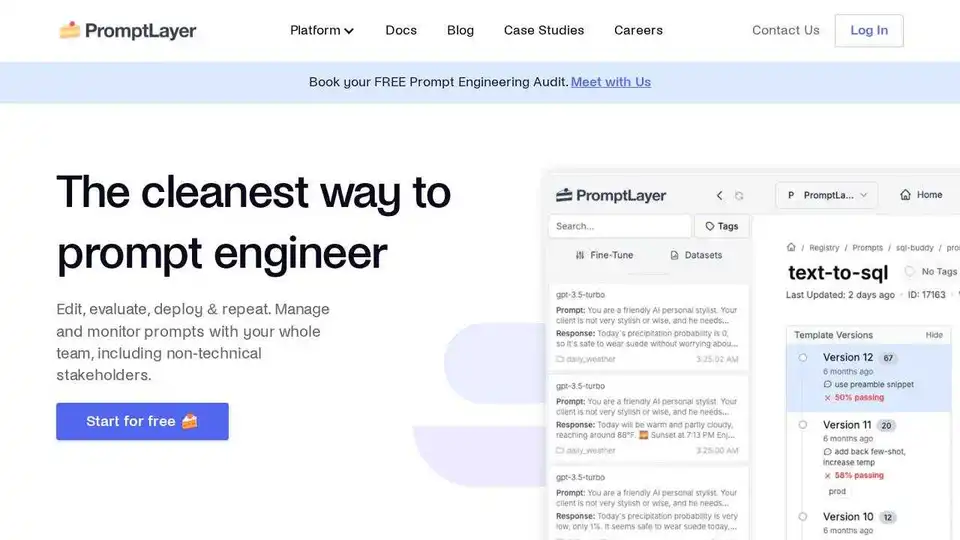

PromptLayer is an AI engineering platform for prompt management, evaluation, and LLM observability. Collaborate with experts, monitor AI agents, and improve prompt quality with powerful tools.