Qwen3 Coder

Overview of Qwen3 Coder

What is Qwen3 Coder?

Qwen3 Coder stands out as Alibaba Cloud's groundbreaking open-source large language model (LLM) specifically designed for code generation, comprehension, and agentic task execution in software development. With a massive 480 billion parameters built on a Mixture-of-Experts (MoE) architecture, this model pushes the boundaries of AI-assisted coding. Trained on an enormous 7.5 trillion tokens—70% focused on source code across 358 programming languages—Qwen3 Coder delivers performance on par with proprietary giants like GPT-4, all while being fully accessible under the Apache 2.0 license. Whether you're a solo developer tackling quick fixes or a team handling repository-level refactoring, this tool transforms passive code suggestions into proactive, intelligent assistance.

Unlike earlier models that merely autocomplete snippets, Qwen3 Coder embodies a new era of AI software agents. It doesn't just write code; it reasons through problems, plans multi-step solutions, integrates with tools, and debugs iteratively. This evolution from basic completion in Qwen1 to agentic capabilities in Qwen3 coincides with HumanEval scores rising from roughly 40% to roughly 85%, making it an essential resource for modern developers seeking efficient, high-quality code workflows.

How Does Qwen3 Coder Work?

At its core, Qwen3 Coder uses a sparse MoE architecture in which 480 billion total parameters are distributed across 160 expert modules. During inference, only about 35 billion parameters are active for each token, so per-token compute is close to that of a much smaller dense model, although all of the weights must still be held in memory. The model is a 62-layer causal Transformer with grouped-query attention and natively supports a 256K-token context window, extendable to 1M tokens with the YaRN context-extension method. This allows it to process entire codebases, long documentation, or complex project histories in one pass, which matters for large-scale software projects.
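The routing idea behind a sparse MoE layer can be illustrated with a toy sketch. This is not the model's actual implementation: the experts here are trivial scaling functions, the gate scores are random, and `TOP_K = 8` is an assumed number of experts per token chosen only for illustration. Only the expert count (160) and the 35B/480B parameter figures come from the text above.

```python
import math
import random

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1.
    """
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_logits, k):
    """Mix the outputs of the selected experts; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

NUM_EXPERTS = 160  # expert count stated in the text
TOP_K = 8          # assumed experts per token, for illustration only

rng = random.Random(0)
# Toy "experts": each one just scales its input by a different constant.
experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]
gate_logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

routes = top_k_route(gate_logits, TOP_K)
output = moe_forward(2.0, experts, gate_logits, TOP_K)
active_fraction = 35 / 480  # active vs. total parameters, from the text

print(f"experts used per token: {len(routes)} of {NUM_EXPERTS}")
print(f"active parameter share: {active_fraction:.1%}")
```

The point of the sketch is that compute scales with the experts actually selected, while memory still scales with all of them.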

The training process is revolutionary. Pretraining drew from a cleaned corpus using Qwen2.5-Coder to filter noisy data and synthesize high-quality examples, emphasizing best practices in coding. What sets it apart is the execution-driven reinforcement learning (RL): the model was fine-tuned across millions of code execution cycles in 20,000 parallel environments. Rewards were given only for code that runs correctly and passes tests, going beyond syntax to ensure functional accuracy. This RL approach, combined with multi-step reasoning for workflows like tool usage and debugging, enables agentic behaviors—think of it as an AI co-pilot that anticipates needs and refines outputs autonomously.
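The reward rule described above (credit only for code that runs and passes its tests) can be sketched in a few lines. This is a simplified illustration, not the actual training pipeline: `execution_reward` is a hypothetical helper, the reward is a plain 0/1 value, and the candidate runs in the current process with `exec`, whereas a real system would isolate untrusted code in a sandbox.

```python
def execution_reward(candidate_source, test_source):
    """Return 1.0 if the candidate runs and every test assertion passes, else 0.0."""
    namespace = {}
    try:
        exec(candidate_source, namespace)  # define the candidate's functions
        exec(test_source, namespace)       # run the assertions against them
    except Exception:
        # Syntax errors, runtime errors, and failed assertions all earn nothing.
        return 0.0
    return 1.0

TESTS = "assert add(2, 3) == 5\nassert add(-1, 1) == 0\n"

correct = "def add(a, b):\n    return a + b\n"
wrong = "def add(a, b):\n    return a - b\n"   # runs, but fails the tests
broken = "def add(a, b) return a + b\n"        # does not even parse

rewards = [execution_reward(src, TESTS) for src in (correct, wrong, broken)]
print(rewards)  # [1.0, 0.0, 0.0]
```

Note that the syntactically valid but incorrect candidate scores the same as the one that fails to parse, which is what distinguishes this signal from a syntax-only check.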

For instance, when generating code, Qwen3 Coder first analyzes requirements, plans the structure (e.g., outlining a quicksort algorithm in Python), then executes and verifies it. Native function-calling supports seamless API integrations, making it ideal for embedding in IDEs or CI/CD pipelines.
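To make the function-calling step concrete, here is a minimal sketch of the host side of a tool call, assuming the OpenAI-style tool format used by OpenAI-compatible endpoints. The `run_tests` tool, the `REGISTRY` mapping, and the `dispatch` helper are hypothetical names introduced for illustration, and the example call is hand-written rather than real model output.

```python
import json

def run_tests(path):
    """Hypothetical tool: pretend to run a test suite and report a result."""
    return {"path": path, "passed": True}

# Tool description sent along with the chat request (OpenAI-style schema).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "run_tests",
        "description": "Run the test suite under the given path.",
        "parameters": {
            "type": "object",
            "properties": {"path": {"type": "string"}},
            "required": ["path"],
        },
    },
}]

REGISTRY = {"run_tests": run_tests}

def dispatch(tool_call):
    """Execute one tool call and wrap the result as a `tool` message."""
    fn = REGISTRY[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(fn(**args)),
    }

# A hand-written tool call shaped like one an OpenAI-compatible endpoint returns.
example_call = {
    "id": "call_0",
    "type": "function",
    "function": {"name": "run_tests", "arguments": json.dumps({"path": "tests/"})},
}

reply = dispatch(example_call)
print(reply["content"])
```

In an agent loop, the `tool` message would be appended to the conversation and sent back so the model can decide on its next step.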

Core Features of Qwen3 Coder

Qwen3 Coder's features make it a powerhouse for diverse coding tasks:

- Agentic Coding Workflows: Handles multi-turn interactions, from requirement gathering to iterative debugging, simulating a human developer's process.

- State-of-the-Art Performance: Achieves ~85% pass@1 on HumanEval, outperforming open-source peers like CodeLlama (67%) and matching GPT-4, especially in real-world scenarios.

- Ultra-Long Context Handling: 256K tokens standard, up to 1M extended, for analyzing full repositories without losing context.

- Polyglot Expertise: Supports 358 languages, including Python, Rust, Haskell, SQL, and more, with 70% training emphasis on code.

- Advanced RL Training: Learned from execution feedback, ensuring generated code is not just syntactically correct but practically viable.

- Open and Integrable: Apache 2.0 licensed, available on Hugging Face, ModelScope, and Alibaba Cloud APIs for commercial use.

These elements address common pain points in development, like error-prone manual coding or fragmented toolchains, by providing a unified, intelligent platform.
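For the long-context feature in particular, a rough budget check helps decide whether a set of files can be sent in a single prompt. The sketch below treats 256K as 256 × 1024 tokens and assumes about 4 characters per token; both are approximations, and an exact count requires the model's tokenizer. `estimate_tokens` and `context_report` are hypothetical helpers.

```python
import math

CONTEXT_TOKENS = 256 * 1024  # 256K native window from the text
CHARS_PER_TOKEN = 4          # assumed average; varies by tokenizer and language

def estimate_tokens(text):
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def context_report(files, reserve_for_output=8_192):
    """Estimate prompt size for a {filename: source} mapping and check the budget."""
    used = sum(estimate_tokens(source) for source in files.values())
    return {"prompt_tokens": used, "fits": used + reserve_for_output <= CONTEXT_TOKENS}

small_repo = {"app.py": "x = 1\n" * 1_000, "util.py": "y = 2\n" * 500}
large_repo = {"dump.sql": "INSERT INTO t VALUES (1);\n" * 100_000}

print(context_report(small_repo))
print(context_report(large_repo))
```

Reserving part of the window for the model's reply avoids prompts that technically fit but leave no room for output.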

How to Use Qwen3 Coder?

Getting started with Qwen3 Coder is straightforward, offering flexibility for different setups:

- Cloud API Access: Leverage Alibaba Cloud's ModelStudio or DashScope for OpenAI-compatible APIs—no hardware hassles, pay-per-use for scalability.

- Local Deployment: Download from Hugging Face (e.g., Qwen/Qwen3-Coder-480B-A35B-Instruct) and use the Transformers library. A quick Python example:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-Coder-480B-A35B-Instruct"
tokenizer = AutoTokenizer.from_pretrained(model_name)
# device_map="auto" spreads the weights across the available devices;
# torch_dtype="auto" keeps the checkpoint's native precision.
model = AutoModelForCausalLM.from_pretrained(
    model_name, torch_dtype="auto", device_map="auto"
).eval()

input_text = "# Write a quick sort algorithm in Python"
# Move the inputs to the model's device instead of a hard-coded "cuda".
model_inputs = tokenizer([input_text], return_tensors="pt").to(model.device)

# Greedy decoding; **model_inputs also passes the attention mask.
generated_ids = model.generate(**model_inputs, max_new_tokens=512, do_sample=False)[0]

# Drop the prompt tokens and decode only the newly generated ones.
output = tokenizer.decode(generated_ids[len(model_inputs.input_ids[0]):], skip_special_tokens=True)
print(output)
```

This prints the generated code snippet.

- IDE Integration: Plug into VS Code via extensions like Claude Code (adapted for Qwen), or use the Qwen Code CLI for terminal commands.

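- Quantized Options: Community GGUF versions (4-bit/8-bit) cut memory requirements substantially. A 4-bit build of the 480B model still holds roughly 240 GB of weights, so running it on a single RTX 4090 (24 GB) depends on offloading most of the weights to system RAM, at a cost in speed.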
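For the Cloud API Access option in the list above, an OpenAI-compatible endpoint can be called with nothing but the standard library. In this sketch the base URL, the model name `qwen3-coder-plus`, and the `DASHSCOPE_API_KEY` environment variable are assumptions; confirm the actual values in your Alibaba Cloud console. The request is only sent when a key is present.

```python
import json
import os
import urllib.request

# Assumed values; verify them against your own account and region.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen3-coder-plus"

def build_request(prompt, api_key):
    """Build an OpenAI-style chat completion request without sending it."""
    body = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        BASE_URL + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

api_key = os.environ.get("DASHSCOPE_API_KEY")
request = build_request("Write a quick sort algorithm in Python.", api_key or "sk-placeholder")

if api_key:
    with urllib.request.urlopen(request, timeout=60) as response:
        reply = json.load(response)
    print(reply["choices"][0]["message"]["content"])
else:
    print("Set DASHSCOPE_API_KEY to send the request.")
```

Because the endpoint follows the OpenAI wire format, the same payload shape should also work with OpenAI-compatible client libraries pointed at the same base URL.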

Hardware needs vary: full model requires multiple A100/H100 GPUs, but quantized or API versions lower the barrier. Key capabilities include code completion, bug fixing, repo analysis, and multi-step solving—perfect for automating repetitive tasks.
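The hardware gap between the full and quantized models follows from simple arithmetic on weight storage. The sketch below counts weights only (no KV cache, activations, or runtime overhead) and assumes 80 GB per accelerator for the card count, so treat the results as lower bounds.

```python
import math

def weight_memory_gb(params_billions, bits_per_weight):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8

TOTAL_PARAMS_B = 480  # total parameters, from the text
GPU_MEMORY_GB = 80    # assumed memory per accelerator

estimates = {}
for label, bits in (("16-bit", 16), ("8-bit", 8), ("4-bit", 4)):
    gb = weight_memory_gb(TOTAL_PARAMS_B, bits)
    cards = math.ceil(gb / GPU_MEMORY_GB)
    estimates[label] = (gb, cards)
    print(f"{label}: ~{gb:.0f} GB of weights, at least {cards} x {GPU_MEMORY_GB} GB cards")
```

Although only about 35B parameters are active per token, every expert must remain loadable, which is why the estimate uses the full 480B.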

Why Choose Qwen3 Coder?

In a crowded field of AI coding tools, Qwen3 Coder shines for its blend of power, openness, and practicality. It outperforms predecessors like Qwen2.5-Coder (72% HumanEval) by incorporating agentic RL, reducing debugging time by up to 50% in complex projects per user reports. Developers praise its accuracy in polyglot environments and long-context prowess, which prevents context-loss errors common in smaller models.

For commercial viability, the Apache 2.0 license permits commercial use and modification, subject to its notice and attribution terms, unlike proprietary options with usage fees. Benchmarks confirm its edge: against CodeLlama's 100K context and 67% accuracy, Qwen3 Coder offers 256K+ and 85%, ideal for enterprise-scale development. Its execution-driven training is aimed at reliable outputs and fewer production bugs, a critical value for teams under tight deadlines.

Who is Qwen3 Coder For?

This tool targets a wide audience in software development:

- Individual Developers and Hobbyists: For quick code generation and learning across 358 languages.

- Professional Teams: Repo-level refactoring, automated testing, and integration in agile workflows.

- AI Researchers: Experimenting with MoE architectures, RL for agents, or fine-tuning on custom datasets.

- Startups and Enterprises: Cost-effective alternative to paid APIs, with cloud scalability for high-volume tasks.

If you're frustrated with incomplete suggestions or syntax-focused tools, Qwen3 Coder's agentic approach provides deeper assistance, boosting productivity without steep learning curves.

Best Ways to Maximize Qwen3 Coder in Your Workflow

To get the most out of it:

- Start with API for prototyping, then deploy locally for privacy-sensitive projects.

- Combine with tools like Git for repo analysis or Jupyter for interactive debugging.

- Fine-tune on domain-specific code (e.g., finance algorithms) using provided scripts.

- Monitor performance with benchmarks like HumanEval to track improvements.

User feedback highlights its role in accelerating feature development: one developer noted cutting a refactoring task from days to hours. While it excels in structured tasks, pairing it with human oversight ensures optimal results in creative coding.

Performance Benchmarks and Comparisons

| Model | Size (Params) | Max Context | HumanEval Pass@1 | License |
|---|---|---|---|---|
| Qwen3 Coder | 480B (35B active, MoE) | 256K (up to 1M) | ~85% | Apache 2.0 |
| CodeLlama-34B | 34B (dense) | 100K | ~67% | Meta Custom |
| StarCoder-15B | 15.5B (dense) | 8K | ~40% | Open RAIL |
| GPT-4 | Proprietary | 8K-32K | ~85% | Proprietary |

These stats underscore Qwen3's leadership in open-source AI code generation, balancing scale with efficiency.
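For readers who want to reproduce a HumanEval-style Pass@1 figure on their own tasks, the metric is usually computed with the unbiased pass@k estimator: for a problem with n sampled solutions of which c pass, pass@k = 1 - C(n-c, k) / C(n, k), averaged over problems. The sketch below implements that formula; the sample counts are made up for illustration and are not results for any model in the table.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for one problem: n samples, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

# Illustrative (n, c) pairs for three problems; not real benchmark data.
results = [(10, 3), (10, 0), (10, 10)]

pass_at_1 = sum(pass_at_k(n, c, 1) for n, c in results) / len(results)
pass_at_5 = sum(pass_at_k(n, c, 5) for n, c in results) / len(results)
print(f"pass@1 = {pass_at_1:.3f}, pass@5 = {pass_at_5:.3f}")
```

With k = 1 the estimator reduces to the fraction of correct samples per problem, which is why Pass@1 is often reported from a single greedy sample.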

Frequently Asked Questions (FAQ)

- What makes Qwen3 Coder's performance state-of-the-art? Its execution-driven RL and massive MoE architecture ensure functional code with ~85% accuracy on benchmarks.

- How does the 256K context window help developers? It enables full codebase analysis, reducing errors in large projects.

- What is 'agentic coding' and how does Qwen3 Coder achieve it? It's multi-step, tool-using development; achieved via RL in parallel environments for planning and debugging.

- Can I use Qwen3 Coder for commercial projects? Yes. The Apache 2.0 license permits commercial use, subject to its notice and attribution terms.

- How many programming languages does Qwen3 Coder support? 358, covering mainstream and niche ones like Haskell and SQL.

- What hardware is needed to run the 480B model? Multiple high-end GPUs for the full model; quantized versions need far less GPU memory, though a single consumer card still relies on offloading most weights to system RAM.

- How does Qwen3 Coder compare to predecessors? Dramatic improvements in agentic features and accuracy over Qwen2.5.

- Is there an API without self-hosting? Yes, via Alibaba Cloud's services.

- What does 'execution-driven RL' mean? Training rewards based on real code runs and tests, not just patterns.

- Where to find documentation? Hugging Face, ModelScope, or Alibaba Cloud repos.

Qwen3 Coder isn't just another LLM—it's a catalyst for smarter, faster software engineering, empowering developers worldwide with cutting-edge, open-source innovation.

Best Alternative Tools to "Qwen3 Coder"

Allganize offers enterprise AI solutions with secure, on-premise AI, automating workflows and transforming proprietary data into a competitive advantage. Features include Agentic RAG, Generative BI, and a No-Code App Builder.

Devento is an AI-powered platform that allows you to build and deploy full-stack applications using AI agents and secure micro-VM sandboxes. It simplifies the development process, from chatting with AI to deploying functional apps.

MyShell AI is an AI consumer layer empowering everyone to build, share, and own AI Agents. Explore AI-powered entertainment and utility with shared ownership.

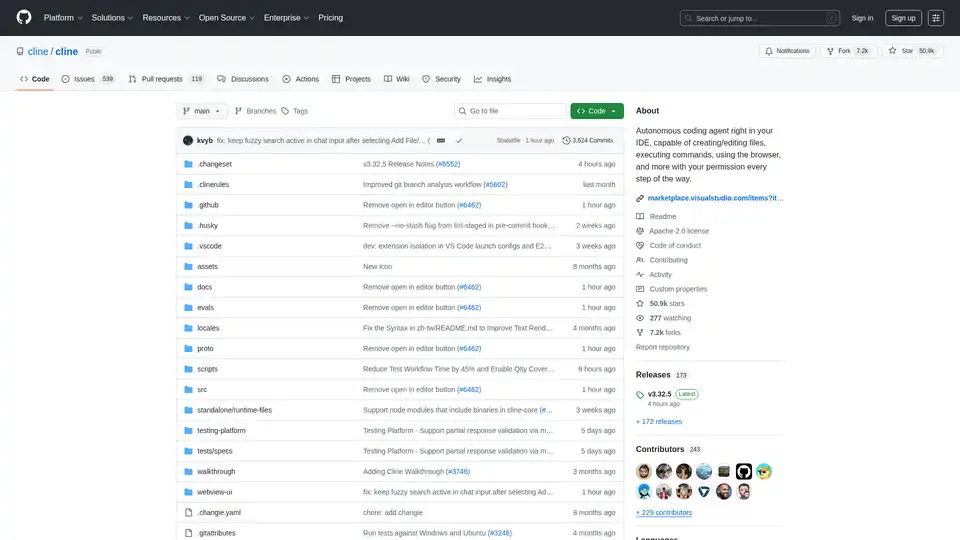

Cline is an autonomous AI coding agent for VS Code that creates/edits files, executes commands, uses the browser, and more with your permission.

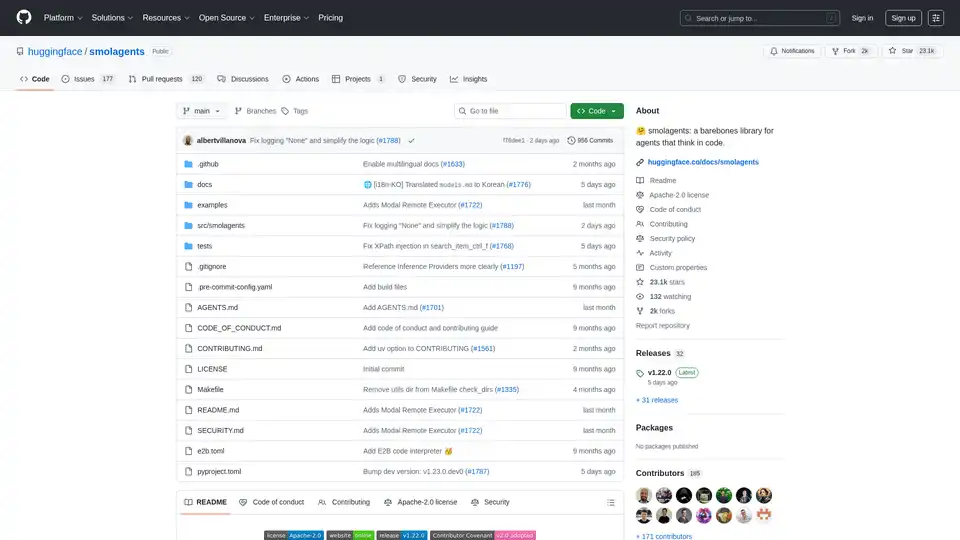

Smolagents is a minimalistic Python library for creating AI agents that reason and act through code. It supports LLM-agnostic models, secure sandboxes, and seamless Hugging Face Hub integration for efficient, code-based agent workflows.

IBM watsonx.ai is a next-generation enterprise studio for AI builders to train, validate, tune, and deploy AI models, with integrated tools for scalable generative AI development.

The world's first agentic AI browser that automates web and desktop-based tasks, providing deep search, cross-app workflow automation, images, coding, and even music, all with military-grade security.

Substrate is the ultimate platform for compound AI, offering powerful SDKs with optimized models, vector storage, code interpreter, and agentic control. Build efficient multi-step AI workflows faster than ever—ditch LangChain for streamlined development.

Roo Code is an open-source AI-powered coding assistant for VS Code, featuring AI agents for multi-file editing, debugging, and architecture. It supports various models, ensures privacy, and customizes to your workflow for efficient development.

Cursor is the ultimate AI-powered code editor designed to boost developer productivity with features like intelligent autocomplete, agentic coding, and seamless integrations for efficient software building.

Zed is a high-performance code editor built in Rust, designed for collaboration with humans and AI. Features include AI-powered agentic editing, native Git support, and remote development.

Ardor is a full-stack agentic app builder that allows you to build and deploy production-ready AI agentic apps from spec generation to code, infrastructure, deployment, and monitoring with just a prompt.

Dify is an open-source platform to build production-ready AI applications, agentic workflows, and RAG pipelines. Empower your team with no-code AI.

Respell: Run your business with Agentic AI workflows. Automate with no-code Agents for controllability and performance.